Connected Autonomous Vehicle (MPAI-CAV) – Architecture

This is the public page of the Connected Autonomous Vehicle (CAV) project providing technical information. Also see the MPAI-CAV homepage.

The goal of the MPAI-CAV standards is to promote the development of a Connected Autonomous Vehicle (CAV) industry by specifying CAV Components and Subsystems. To achieve this goal, MPAI is publishing a series of standards, each adding more detail to enhance CAV Component interoperability.

Technical Specification: Connected Autonomous Vehicles (MPAI-CAV) – Architecture is the first of the planned series of standards. MPAI-CAV – Architecture partitions a CAV into Subsystems that are further partitioned into Components. Both Subsystems and Components are specified by their functions and interfaces; Subsystems are additionally specified by their Component topology. Subsystems are implemented as MPAI-AIF AI Workflows (AIW) and Components as AI Modules (AIM).
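The Subsystem/Component partitioning can be pictured as a small data model. The sketch below is illustrative only: the class names, the port-based interface description and the example Component names (`LidarProcessing`, `SceneFusion`) are hypothetical and are not taken from the MPAI-CAV or MPAI-AIF specifications. It assumes that a Component's interface is a set of named input and output ports, and that a Subsystem's topology is a list of port-to-port connections that must match those interfaces.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Component:
    """A Component (implemented as an AIM): its function plus its interfaces."""
    name: str
    function: str
    inputs: tuple[str, ...]   # names of input ports (hypothetical representation)
    outputs: tuple[str, ...]  # names of output ports


@dataclass
class Subsystem:
    """A Subsystem (implemented as an AIW): its Components plus their topology."""
    name: str
    components: dict[str, Component] = field(default_factory=dict)
    # Each edge links (source Component, output port) to (target Component, input port).
    topology: list[tuple[tuple[str, str], tuple[str, str]]] = field(default_factory=list)

    def add(self, component: Component) -> None:
        self.components[component.name] = component

    def connect(self, src: str, out_port: str, dst: str, in_port: str) -> None:
        # Only allow connections that match the declared interfaces.
        if out_port not in self.components[src].outputs:
            raise ValueError(f"{src} has no output port {out_port!r}")
        if in_port not in self.components[dst].inputs:
            raise ValueError(f"{dst} has no input port {in_port!r}")
        self.topology.append(((src, out_port), (dst, in_port)))


if __name__ == "__main__":
    ess = Subsystem("ESS")
    ess.add(Component("LidarProcessing", "process LiDAR data", ("lidar_data",), ("lidar_scene",)))
    ess.add(Component("SceneFusion", "create the BER", ("lidar_scene",), ("ber",)))
    ess.connect("LidarProcessing", "lidar_scene", "SceneFusion", "lidar_scene")
    print(ess.topology)
```

In this toy model, trying to connect `SceneFusion`'s `ber` output to an input that `LidarProcessing` does not declare raises a `ValueError`: specifying interfaces is what makes such topology checks possible.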

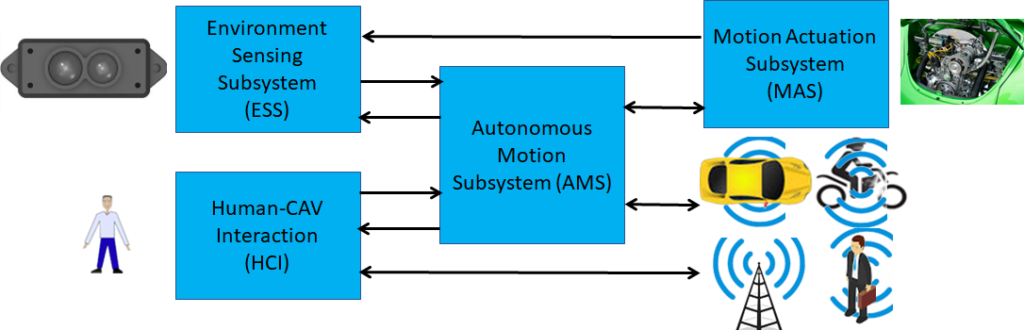

MPAI-CAV addresses four main interacting CAV Subsystems. Human-CAV Interaction (HCI) gives instructions to the CAV. Sensors in the Environment Sensing Subsystem (ESS) and the Motion Actuation Subsystem (MAS) allow the CAV to create a Basic Environment Representation (BER). The Autonomous Motion Subsystem (AMS) exchanges BERs with Remote CAVs to create the Full Environment Representation (FER). Based on the FER, the AMS issues commands to the MAS.

Fig. 1 – The four MPAI-CAV subsystems
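The data exchanged between the four Subsystems can be written down as a simple directed graph. This sketch records only the flows stated in the Subsystem descriptions on this page; the (sender, receiver, data) triple format and the `inputs_by_subsystem` helper are hypothetical, and "Remote CAV" stands for any other CAV in range.

```python
from collections import defaultdict

# (sender, receiver, data) triples taken from the Subsystem descriptions on this page.
# "Remote CAV" stands for any other CAV in communication range.
FLOWS = [
    ("HCI", "AMS", "instructions"),
    ("MAS", "ESS", "sensor data"),
    ("ESS", "AMS", "BER"),
    ("AMS", "Remote CAV", "BER"),
    ("Remote CAV", "AMS", "BER"),
    ("AMS", "MAS", "commands"),
    ("MAS", "AMS", "command execution response"),
]


def inputs_by_subsystem(flows):
    """Map each receiver to the list of (sender, data) pairs it consumes."""
    inputs = defaultdict(list)
    for sender, receiver, data in flows:
        inputs[receiver].append((sender, data))
    return dict(inputs)


if __name__ == "__main__":
    for name, received in sorted(inputs_by_subsystem(FLOWS).items()):
        print(name, "<-", received)
```

Listing the flows this way makes it easy to see that the AMS is the hub: it is the only Subsystem that receives data from all the others.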

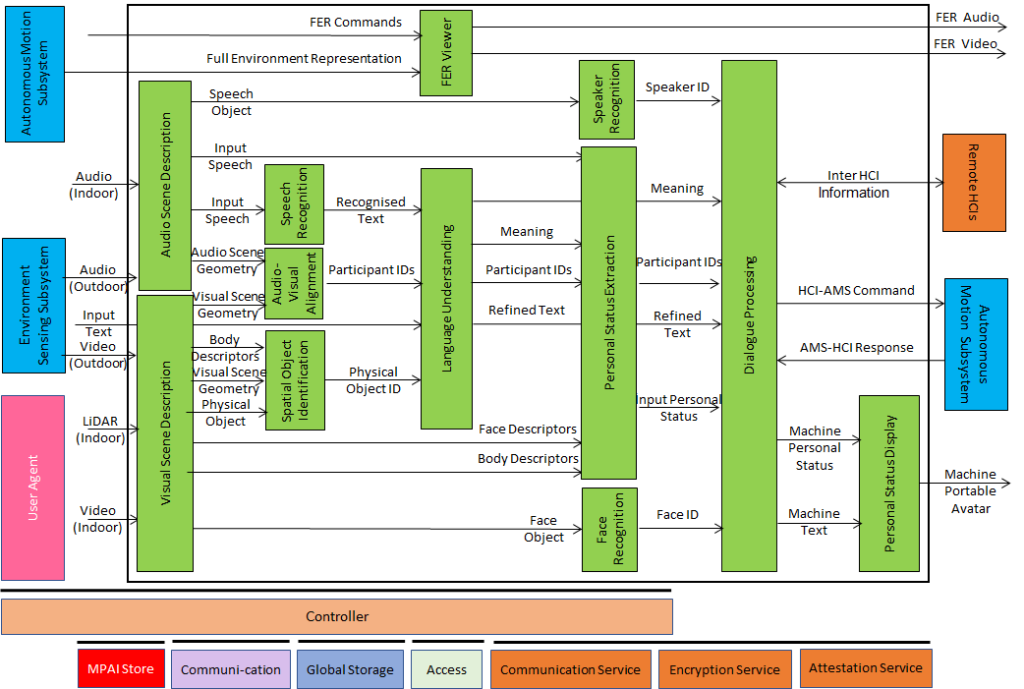

Human-CAV Interaction (HCI) performs a set of functions: 1) Recognise the human CAV rights holder by their speech and face. 2) Respond to commands and queries from humans outside the CAV. 3) Instruct the Autonomous Motion Subsystem to find the route to the destination. 4) Provide the extended environment representation (Full Environment Representation) for humans to use in the cabin. 5) Sense human activities during travel. 6) Converse with humans. 7) Show itself as a Portable Avatar whose speech and body convey the HCI’s Personal Status. 8) Activate other Subsystems as required by humans.

Fig. 2 – Human-CAV Interaction (HCI)
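As an illustration of functions 1) and 3), the sketch below gates a spoken destination request on the identity of the rights holder before forwarding it to the AMS. It is a toy under stated assumptions: speech and face recognition are assumed to have already produced identifiers, requiring both to match is an assumed policy, and the `AmsInstruction` message and the "go to" phrase are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AmsInstruction:
    """Hypothetical HCI -> AMS message asking for a route to a destination."""
    destination: str


def is_rights_holder(speaker_id: Optional[str], face_id: Optional[str], holder: str) -> bool:
    # Assumed policy: speech AND face must both identify the rights holder.
    return speaker_id == holder and face_id == holder


def handle_request(text: str, speaker_id: Optional[str], face_id: Optional[str],
                   holder: str) -> Optional[AmsInstruction]:
    """Forward a 'go to <place>' request to the AMS if it comes from the rights holder."""
    if not is_rights_holder(speaker_id, face_id, holder):
        return None  # not the rights holder: no route instruction is issued
    prefix = "go to "
    if text.lower().startswith(prefix):
        return AmsInstruction(destination=text[len(prefix):].strip())
    return None  # other requests would go to other HCI functions (not modelled here)


if __name__ == "__main__":
    print(handle_request("Go to Central Station", "alice", "alice", holder="alice"))
```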

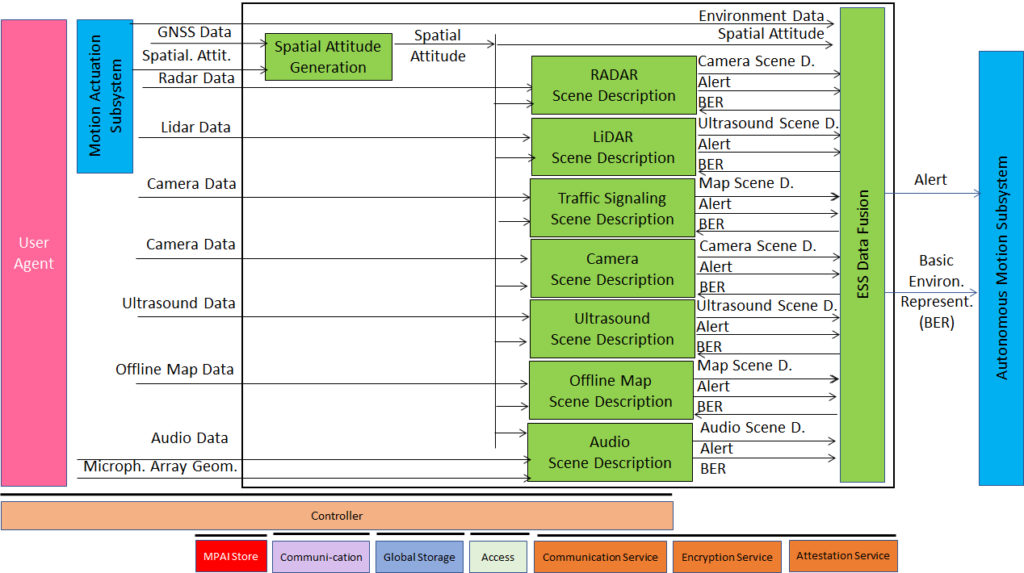

The Environment Sensing Subsystem (ESS) acquires information via electromagnetic sensors (GPS, LiDAR, RADAR, Visual), acoustic sensors (Ultrasound and Audio), and other sensors (e.g., temperature, air pressure, and the Ego CAV’s spatial attitude). The acquired information is used to create the Basic Environment Representation (BER), which is handed over to the Autonomous Motion Subsystem (AMS).

Fig. 3 – Environment Sensing Subsystem (ESS)
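A minimal sketch of how per-sensor detections could be combined into a BER, assuming detections have already been associated with object identifiers and share one coordinate frame. Confidence-weighted averaging is used here as a simple stand-in fusion technique; it is not the method specified by MPAI-CAV, and the `Detection` type and the dictionary layout of the BER are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    sensor: str        # e.g. "LiDAR", "RADAR", "Visual"
    object_id: str     # assumes detections are already associated with objects
    x: float           # metres, in a common Ego CAV frame (assumption)
    y: float
    confidence: float  # must be > 0


def build_ber(detections, environment):
    """Toy BER: confidence-weighted average position per object, plus environment data."""
    sums = defaultdict(lambda: [0.0, 0.0, 0.0])  # object_id -> [sum w*x, sum w*y, sum w]
    for d in detections:
        s = sums[d.object_id]
        s[0] += d.confidence * d.x
        s[1] += d.confidence * d.y
        s[2] += d.confidence
    objects = {oid: (sx / w, sy / w) for oid, (sx, sy, w) in sums.items()}
    return {"objects": objects, "environment": dict(environment)}


if __name__ == "__main__":
    ber = build_ber(
        [Detection("LiDAR", "car-1", 10.0, 0.0, 3.0), Detection("RADAR", "car-1", 12.0, 0.0, 1.0)],
        {"temperature_c": 21.0},
    )
    print(ber)  # car-1 is placed at (10.5, 0.0)
```

Weighting by confidence lets the more trusted sensor dominate (here the LiDAR report pulls `car-1` towards x = 10) while still using every sensor's evidence.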

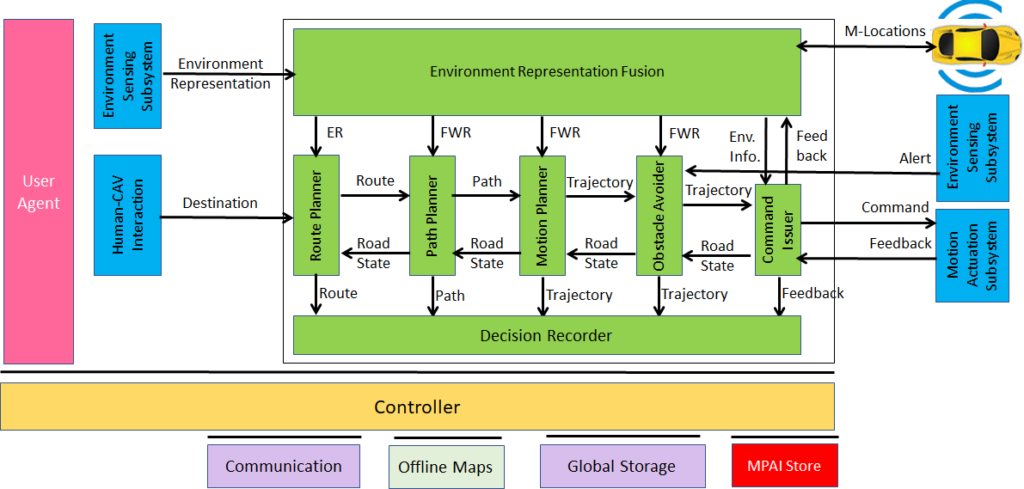

The Autonomous Motion Subsystem (AMS) is the “brain” of the CAV and performs a set of functions: 1) Converse with the HCI to receive high-level instructions about the CAV’s direction (e.g., to move itself to a Goal). 2) Receive the BER from the ESS. 3) Send its BER to CAVs in range and receive theirs. 4) Compute the Full Environment Representation (FER) using all available information. 5) Send commands to the Motion Actuation Subsystem.

Fig. 4 – Autonomous Motion Subsystem (AMS)
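Function 4) can be sketched as a merge of the Ego CAV's BER with the BERs received from Remote CAVs. This is a toy under strong assumptions: all BERs are taken to use a shared world frame and clock, stale remote BERs are dropped after a hypothetical `max_age`, and the Ego CAV's own observations always take precedence. A real FER computation would also need coordinate transforms and data association.

```python
from dataclasses import dataclass, field


@dataclass
class Ber:
    sender: str
    timestamp: float  # seconds on a clock shared by all CAVs (assumption)
    objects: dict = field(default_factory=dict)  # object id -> (x, y) in a shared world frame


def compute_fer(own: Ber, remote: list, now: float, max_age: float = 0.5) -> dict:
    """Toy FER: own objects, plus objects only Remote CAVs report; stale BERs are ignored."""
    fer = dict(own.objects)
    # Visit the newest remote BERs first, so the newest report wins when
    # several Remote CAVs see the same object.
    for ber in sorted(remote, key=lambda b: b.timestamp, reverse=True):
        if now - ber.timestamp > max_age:
            continue  # too old to trust
        for oid, pos in ber.objects.items():
            fer.setdefault(oid, pos)  # never overwrite an entry already in the FER
    return fer


if __name__ == "__main__":
    ego = Ber("ego", 10.0, {"car-1": (1.0, 1.0)})
    cav_a = Ber("cav-a", 9.8, {"car-1": (9.0, 9.0), "ped-1": (2.0, 2.0)})
    print(compute_fer(ego, [cav_a], now=10.0))
```

The value of exchanging BERs shows up in the usage example: `ped-1` enters the Ego CAV's FER even though only the Remote CAV could sense it.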

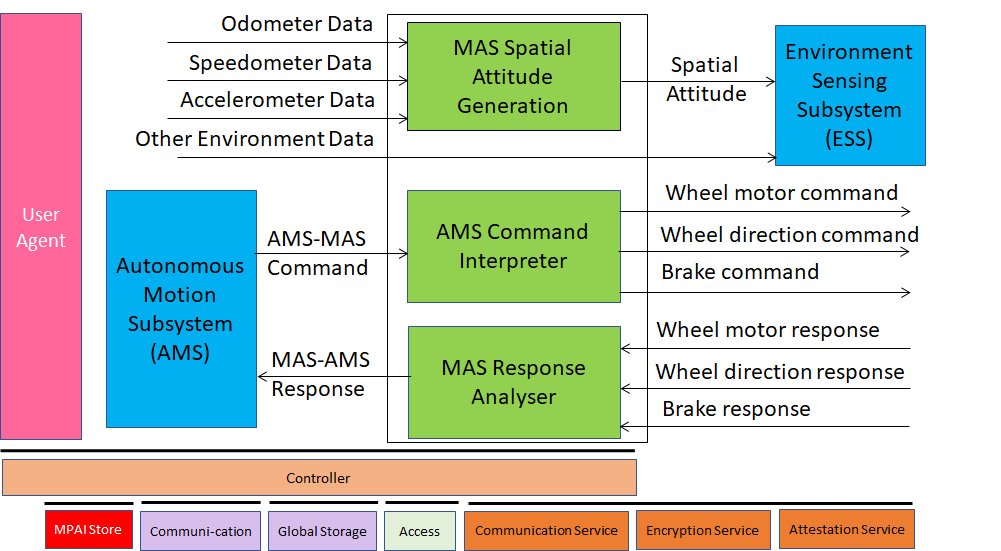

The Motion Actuation Subsystem (MAS) performs a set of functions: 1) Send all useful data from its sensors, such as spatial attitude and environmental data (e.g., temperature, humidity), to the ESS. 2) Receive commands from the AMS to move the CAV to a new Pose within an assigned period of time. 3) Send responses about command execution to the AMS.

Fig. 5 – Motion Actuation Subsystem (MAS)
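Functions 2) and 3) describe a command/response exchange with the AMS. The sketch below models a command as a target Pose plus the time allowed to reach it, and shows a feasibility check the MAS might run before replying. The `Pose` fields, the `MAX_SPEED_MPS` limit and the straight-line distance check are hypothetical simplifications, not part of the specification.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    x: float        # metres
    y: float        # metres
    heading: float  # radians (carried but not checked in this toy)


@dataclass(frozen=True)
class MotionCommand:
    target: Pose
    duration_s: float  # time within which the target Pose must be reached


@dataclass(frozen=True)
class CommandResponse:
    accepted: bool
    reason: str


MAX_SPEED_MPS = 30.0  # hypothetical vehicle limit


def check_command(current: Pose, cmd: MotionCommand) -> CommandResponse:
    """Toy feasibility check the MAS could run before executing an AMS command."""
    if cmd.duration_s <= 0:
        return CommandResponse(False, "non-positive duration")
    distance = math.hypot(cmd.target.x - current.x, cmd.target.y - current.y)
    required_speed = distance / cmd.duration_s
    if required_speed > MAX_SPEED_MPS:
        return CommandResponse(False, f"needs {required_speed:.1f} m/s")
    return CommandResponse(True, "ok")


if __name__ == "__main__":
    here = Pose(0.0, 0.0, 0.0)
    print(check_command(here, MotionCommand(Pose(30.0, 40.0, 0.0), 5.0)))
```

Returning a structured response, rather than silently failing, gives the AMS what it needs to replan when a command cannot be executed.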

More details about MPAI-CAV, Subsystems, and Components can be found in the links below:

- Connected Autonomous Vehicles in MPAI

- Why an MPAI-CAV standard?

- An Introduction to the MPAI-CAV Subsystems

- Human-CAV interaction

- Environment Sensing Subsystem

- Autonomous Motion Subsystem

- Motion Actuation Subsystem

If you wish to participate in the development of further MPAI-CAV standards, you have the following options:

- Join MPAI

- Keep an eye on this page.
