
MPAI, Moving Picture, Audio, and Data Coding by Artificial Intelligence – the international, unaffiliated, non-profit organisation developing standards for AI-based data coding – is publishing a Call for Technologies related to the architecture of a Connected Autonomous Vehicle and to the data exchanged by the components of that architecture.

MPAI intends to develop a Technical Specification for the architecture of a Connected Autonomous Vehicle (CAV), to be called Technical Specification – Connected Autonomous Vehicle – Architecture. MPAI defines a CAV as a system that:

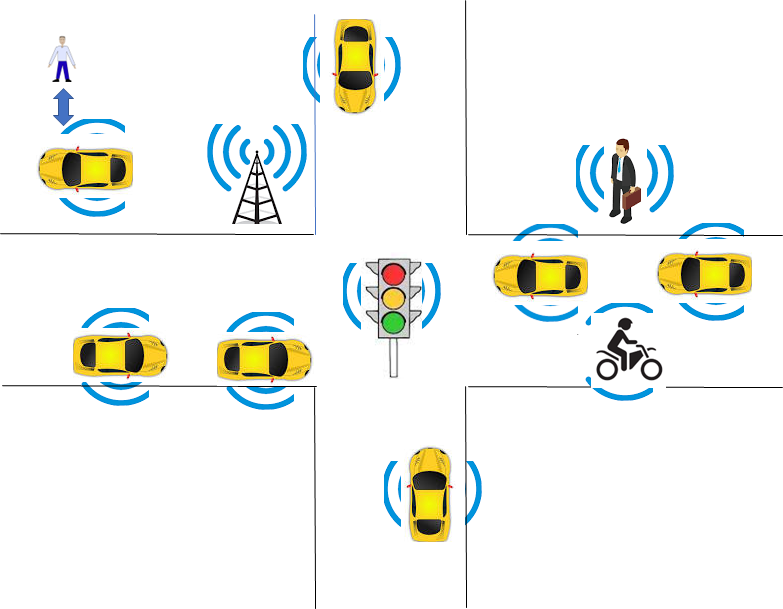

- Moves in an environment like the one depicted in Figure 1.

Figure 1 – An environment of CAV operation

- Has the capability to autonomously reach a target destination by:

- Understanding human utterances, e.g., the human’s request to be taken to a certain location.

- Planning a Route.

- Sensing the external Environment and building Representations of it.

- Exchanging such Representations and other Data with other CAVs and CAV-aware entities, such as, Roadside Units and Traffic Lights.

- Making decisions about how to execute the Route.

- Acting on the CAV motion actuation to implement the decisions.

The CAV architecture is composed of the four Subsystems depicted in Figure 2:

- Human-CAV Interaction (HCI).

- Environment Sensing Subsystem (ESS).

- Autonomous Motion Subsystem (AMS).

- Motion Actuation Subsystem (MAS).

Figure 2 – The CAV Subsystems

MPAI does not intend to include the mechanical parts of a CAV in the planned Technical Specification – Connected Autonomous Vehicle – Architecture. MPAI only intends to refer to the interfaces between the Motion Actuation Subsystem and such mechanical parts.

The functions of the Subsystems are summarised in Table 1 and specified in Chapters 4, 5, 6, and 7, respectively.

Table 1 – The Functions of the MPAI-CAV Subsystems

| Subsystem name | Function |
|---|---|
| Human-CAV Interaction (HCI) | |
| Environment Sensing Subsystem (ESS) | |
| Autonomous Motion Subsystem (AMS) | |
| Motion Actuation Subsystem (MAS) | |

The following high-level workflow illustrates a CAV operation example and the role of CAV Subsystems:

- A human with appropriate credentials requests the CAV, via Human-CAV Interaction, to take the human to a given Pose.

- Human-CAV Interaction authenticates the human, interprets the request, communicates with the HCIs of other CAVs on matters that directly impact the human passengers, and passes commands to the Autonomous Motion Subsystem. The human may subsequently supplement or correct their instructions.

- Autonomous Motion Subsystem:

  - Requests the Environment Sensing Subsystem to provide the current Pose.

  - Computes the Route and may offer options to authenticated humans.

- Environment Sensing Subsystem computes and sends the Basic Environment Representation to the Autonomous Motion Subsystem.

- Autonomous Motion Subsystem:

  - Receives the Basic Environment Representation from the Environment Sensing Subsystem.

  - Exchanges the Basic Environment Representation with other CAVs and computes the Full Environment Representation.

  - Makes decisions on how best to move the CAV to reach the destination, e.g., by avoiding a car suddenly appearing on the horizon.

  - Issues appropriate commands to the Motion Actuation Subsystem.

- While the CAV moves, the humans in the cabin may:

  - Converse with other humans on board and with the Human-CAV Interaction Subsystem.

  - Issue commands.

  - Request the Full Environment Representation to render the environment.

  - Interact with (humans in) other CAVs.
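The workflow above can be pictured as a chain of calls between the Subsystems. The following is a minimal Python sketch under stated assumptions, not part of any MPAI specification: the class and method names, the simplified `Pose` and `BasicEnvRep` types, the placeholder route planner, and the rule that the CAV's own sensing overrides a peer's report when Basic Environment Representations are fused into a Full Environment Representation are all hypothetical. HCI-to-HCI communication between CAVs is omitted.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass(frozen=True)
class Pose:
    # Hypothetical 2D position; the actual Pose data format is not defined here.
    x: float
    y: float


@dataclass
class BasicEnvRep:
    # Hypothetical Basic Environment Representation: object id -> position.
    source: str
    objects: Dict[str, Pose]


class EnvironmentSensing:
    def __init__(self, pose: Pose, sensed: Dict[str, Pose]) -> None:
        self.pose = pose
        self.sensed = sensed

    def current_pose(self) -> Pose:
        return self.pose

    def basic_env_rep(self) -> BasicEnvRep:
        return BasicEnvRep("self", dict(self.sensed))


class MotionActuation:
    def __init__(self) -> None:
        self.commands: List[str] = []  # commands received from the AMS

    def execute(self, command: str) -> None:
        self.commands.append(command)


class AutonomousMotion:
    def __init__(self, ess: EnvironmentSensing, mas: MotionActuation) -> None:
        self.ess = ess
        self.mas = mas

    def plan_route(self, destination: Pose) -> List[Pose]:
        # Placeholder planner: the Route is just the current Pose and the destination.
        return [self.ess.current_pose(), destination]

    def full_env_rep(self, peer_bers: List[BasicEnvRep]) -> Dict[str, Pose]:
        # Assumed fusion rule: merge peer BERs, then let the CAV's own sensing
        # override any object that a peer also reported.
        fused: Dict[str, Pose] = {}
        for ber in peer_bers:
            fused.update(ber.objects)
        fused.update(self.ess.basic_env_rep().objects)
        return fused

    def drive(self, destination: Pose, peer_bers: List[BasicEnvRep]) -> Dict[str, Pose]:
        route = self.plan_route(destination)
        fer = self.full_env_rep(peer_bers)
        # A real AMS would decide using the FER; here it simply follows the Route.
        for waypoint in route[1:]:
            self.mas.execute(f"move_to({waypoint.x}, {waypoint.y})")
        return fer


class HumanCAVInteraction:
    def __init__(self, ams: AutonomousMotion, authorised: Set[str]) -> None:
        self.ams = ams
        self.authorised = authorised

    def request(self, user: str, destination: Pose,
                peer_bers: List[BasicEnvRep]) -> Optional[Dict[str, Pose]]:
        if user not in self.authorised:  # authentication step
            return None
        return self.ams.drive(destination, peer_bers)


# Usage: one peer CAV reports the same car (slightly differently) and a bicycle.
mas = MotionActuation()
ess = EnvironmentSensing(Pose(0.0, 0.0), {"car_1": Pose(5.0, 1.0)})
hci = HumanCAVInteraction(AutonomousMotion(ess, mas), {"alice"})
peer = BasicEnvRep("cav_2", {"car_1": Pose(5.2, 1.1), "bike_7": Pose(9.0, 3.0)})
fer = hci.request("alice", Pose(10.0, 0.0), [peer])
print(fer)           # own reading of car_1 wins; bike_7 comes from the peer
print(mas.commands)  # ['move_to(10.0, 0.0)']
```

An unauthenticated user (e.g. `hci.request("bob", ...)`) gets `None`, and no command reaches the Motion Actuation Subsystem.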

MPAI assumes that each of the four Subsystems of a CAV is an implementation of MPAI Technical Specification: AI Framework (MPAI-AIF) V2 [2]. An AI Framework (AIF) V2 implementation executes an AI Workflow (AIW) composed of AI Modules (AIMs) in a secure environment. Annex 3 – Chapter 1 provides a concise description of the AI Framework.
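To illustrate what executing "an AI Workflow composed of AI Modules" can mean operationally, the sketch below treats each AIM as a function from named input data to named output data and runs the AIMs in dependency order using Python's standard `graphlib` (Python 3.9+). It is an illustration under stated assumptions and does not reproduce the MPAI-AIF APIs, its Controller, or its security features; the `AIWorkflow` class and the AIM names `PoseEstimation`, `ObjectDetection`, and `BERCreation` are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List

# Hypothetical AIM signature: named input data in, named output data out.
AIM = Callable[[Dict[str, object]], Dict[str, object]]


class AIWorkflow:
    def __init__(self) -> None:
        self.modules: Dict[str, AIM] = {}
        self.inputs: Dict[str, List[str]] = {}  # AIM name -> data names it consumes
        self.producer: Dict[str, str] = {}      # data name -> AIM that produces it

    def add(self, name: str, fn: AIM, consumes: List[str], produces: List[str]) -> None:
        self.modules[name] = fn
        self.inputs[name] = consumes
        for data in produces:
            self.producer[data] = name

    def run(self, workflow_inputs: Dict[str, object]) -> Dict[str, object]:
        # AIM B depends on AIM A whenever B consumes data that A produces;
        # data supplied from outside the workflow creates no dependency.
        deps = {name: {self.producer[d] for d in ins if d in self.producer}
                for name, ins in self.inputs.items()}
        store = dict(workflow_inputs)
        for name in TopologicalSorter(deps).static_order():
            args = {d: store[d] for d in self.inputs[name]}
            store.update(self.modules[name](args))
        return store


# Usage: a toy sensing workflow; AIMs may be registered in any order.
wf = AIWorkflow()
wf.add("BERCreation", lambda a: {"ber": {"pose": a["pose"], "objects": a["objects"]}},
       consumes=["pose", "objects"], produces=["ber"])
wf.add("PoseEstimation", lambda a: {"pose": a["gnss_fix"]},
       consumes=["gnss_fix"], produces=["pose"])
wf.add("ObjectDetection", lambda a: {"objects": sorted(a["camera"])},
       consumes=["camera"], produces=["objects"])
out = wf.run({"gnss_fix": (45.0, 7.6), "camera": ["truck", "car"]})
print(out["ber"])  # {'pose': (45.0, 7.6), 'objects': ['car', 'truck']}
```

Because dependencies are derived from the declared inputs and outputs, the registration order does not matter; a cyclic topology makes `static_order()` raise `graphlib.CycleError`.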

Each of Chapters 4, 5, 6, and 7 addresses one Subsystem (corresponding to an AI Workflow as described in Annex 3 – Chapter 1) and provides:

- The Function of the Subsystem.

- The input/output data of the Subsystem.

- The topology of the Components (AI Modules) of the Subsystem.

- For each AI Module of the Subsystem:

  - The Function.

  - The input/output data.
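The per-Subsystem content listed above maps naturally onto a small data structure. The sketch below uses hypothetical `SubsystemSpec` and `AIMSpec` types, and its AIM names and function texts are illustrative, not taken from the Chapters. It also checks the declared topology: every AIM input must come from a Subsystem input or another AIM's output, and every Subsystem output must be produced by some AIM.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AIMSpec:
    name: str
    function: str
    inputs: List[str]
    outputs: List[str]


@dataclass
class SubsystemSpec:
    name: str
    function: str
    inputs: List[str]
    outputs: List[str]
    aims: List[AIMSpec] = field(default_factory=list)

    def unconnected(self) -> List[str]:
        """List data names that the declared topology leaves without a source."""
        produced = {d for aim in self.aims for d in aim.outputs}
        available = set(self.inputs) | produced
        problems: List[str] = []
        for aim in self.aims:
            for d in aim.inputs:
                if d not in available:
                    problems.append(f"{aim.name}: input '{d}' has no source")
        for d in self.outputs:
            if d not in produced:
                problems.append(f"{self.name}: output '{d}' is never produced")
        return problems


# Usage: an illustrative AMS spec that forgets the AIM producing the
# Full Environment Representation.
ams = SubsystemSpec(
    name="Autonomous Motion Subsystem",
    function="Plans the Route and decides how to move the CAV",
    inputs=["Basic Environment Representation", "HCI Command"],
    outputs=["Motion Command"],
    aims=[
        AIMSpec("Route Planner", "Computes the Route", ["HCI Command"], ["Route"]),
        AIMSpec("Decision Maker", "Decides the next motion",
                ["Route", "Full Environment Representation"], ["Motion Command"]),
    ],
)
print(ams.unconnected())
# ["Decision Maker: input 'Full Environment Representation' has no source"]
```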

A fifth Chapter describes the elements of the so-called Communication Device, which enables a CAV to communicate with other CAVs.

Note that this document:

- Makes no assumption about the Location where the processing required by a Subsystem or an AI Module is carried out.

- Assumes that information processing, collection, and storage are performed according to the laws of the Location.

This Technical Report has been developed by the Connected Autonomous Vehicles group of the Requirements Standing Committee. MPAI may publish further versions of this Technical Report and intends to publish a Technical Specification in which the I/O Data Formats of all AIMs and AIWs will be specified.