1 Introduction

Mixed-reality Collaborative Spaces (MPAI-MCS) is an MPAI standard project for scenarios in which geographically separated Humans, called Participants, collaborate in real time in virtual-reality spaces called Ambients. Participants act through speaking Avatars to achieve goals that are defined in general terms by the Use Case and carried out in detail by the Avatars as directed by the Participants.

2 Components of an MCS

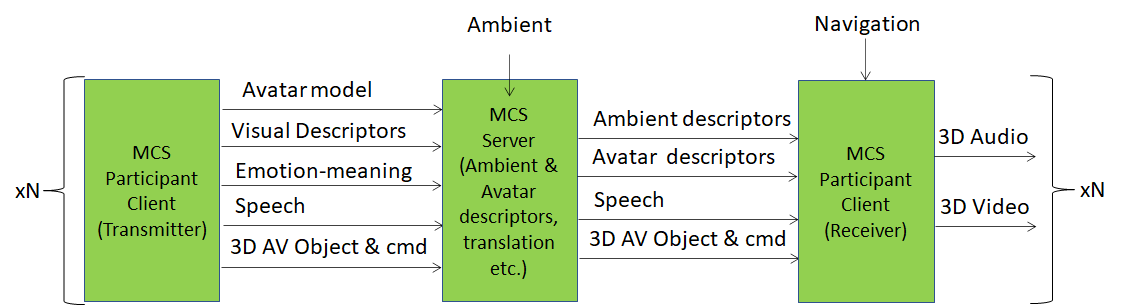

An MCS can be implemented with N Clients, where N is the number of Participants. In general, a Server is also required. For the following analysis, it is convenient to split a Client into a transmitting (TX) part and a receiving (RX) part.

The functions identified for the Client TX, Server and Client RX are:

- Client TX generates the following types of information: Text, Speech, Audio (mono- or multi-channel), Video (2D or volumetric) and 3D audio-visual objects (generated in real time or retrieved).

- Client TX (or Server) generates:

  - An animated Avatar that realistically represents the Participant so that it can perform the functions proper to its role in the collaboration.

  - The Participant’s speech, transmitted as is or synthesised from input text.

- Server:

  - Authenticates the Avatars in the Ambient.

  - Translates input languages into the languages preferred by each Participant.

- Client RX composes and renders the Ambient and its components to the extent permitted by the format of the data received (e.g., individual objects or a packaged scene).
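The Server's translation function listed above can be illustrated with a minimal sketch. It assumes, for simplicity, that utterances are carried as text rather than as speech, and that a translation function is supplied from outside. The names `Participant`, `Utterance`, `fan_out` and `fake_translate` are hypothetical and are not part of MPAI-MCS.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Participant:
    name: str
    language: str  # preferred language code, e.g. "en" (hypothetical field)


@dataclass
class Utterance:
    speaker: str
    language: str
    text: str  # text stands in for speech in this sketch


def fan_out(
    utterance: Utterance,
    participants: List[Participant],
    translate: Callable[[str, str, str], str],
) -> Dict[str, str]:
    """Return, per receiving Participant, the original or translated text."""
    delivered: Dict[str, str] = {}
    for p in participants:
        if p.name == utterance.speaker:
            continue  # the speaker does not receive their own utterance
        if p.language == utterance.language:
            delivered[p.name] = utterance.text  # forward the original
        else:
            delivered[p.name] = translate(
                utterance.text, utterance.language, p.language
            )
    return delivered


def fake_translate(text: str, source: str, target: str) -> str:
    # Placeholder only: tags the text instead of translating it.
    return f"[{source}->{target}] {text}"
```

With three Participants, two of whom prefer the speaker's language, only the third receives a translated version; the other receiver gets the original unchanged.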

There are several possible ways to allocate the MCS functionalities among Client TX, Server and Client RX in order to implement an MCS. Two extreme cases are:

- Client-based

  - Client TX sends the Participant’s media data:

    - Speech.

    - Animated Avatar.

    - 3D audio-visual objects.

  - Server:

    - Authenticates Participants.

    - Translates Participants’ speech.

    - Sends Ambient and original/translated Speech to all Clients RX.

    - Forwards Avatars and 3D AV objects received from Participants to all Clients RX.

  - Client RX renders the MCS directly as received from the Server or after composing a scene from the individual objects received from the Server.

- Server-based

  - Client TX sends the Participant’s text, speech, video and 3D audio-visual objects.

  - Server:

    - Authenticates Participants.

    - Creates synthetic Speech from text (if any is present).

    - Translates Participants’ speech.

    - Creates Animated Avatars.

    - Sends Ambient, original/translated Speech, and Avatars to all Clients RX.

  - Client RX renders the MCS directly as received from the Server or after composing a scene from the individual objects received from the Server.

Between these two extreme configurations, there are many ways to allocate functions between the Clients and the Server.

Figure 1 depicts a possible intermediate arrangement where extraction of personal information (descriptors of the face, head, arms and hands of the user) is done by the client, while other functions (such as provision of the static Ambient, processing of audio information and creation of Avatar descriptors) are executed by the server. In general, how much of the computing power is pushed to the edge is decided by the context in which the MCS is created. The functions of Client RX are independent of how functions are allocated between Client TX and Server.
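The allocation choices described above can be written down as simple lookup tables. The following is a minimal sketch, not a normative data model: the function labels (for example `avatar_animation`) and the names `Node`, `CLIENT_BASED`, `SERVER_BASED`, `INTERMEDIATE` and `functions_on` are hypothetical, chosen only to mirror the functions named in the text.

```python
from enum import Enum
from typing import Dict, List


class Node(Enum):
    CLIENT_TX = "Client TX"
    SERVER = "Server"


# Client-based extreme: the Client TX produces the Animated Avatar itself.
CLIENT_BASED: Dict[str, Node] = {
    "avatar_animation": Node.CLIENT_TX,
    "authentication": Node.SERVER,
    "translation": Node.SERVER,
}

# Server-based extreme: the Client TX only sends raw media.
SERVER_BASED: Dict[str, Node] = {
    "authentication": Node.SERVER,
    "speech_synthesis": Node.SERVER,
    "translation": Node.SERVER,
    "avatar_animation": Node.SERVER,
}

# Intermediate arrangement as described for Figure 1.
INTERMEDIATE: Dict[str, Node] = {
    "descriptor_extraction": Node.CLIENT_TX,
    "ambient_provision": Node.SERVER,
    "audio_processing": Node.SERVER,
    "avatar_descriptor_creation": Node.SERVER,
}


def functions_on(allocation: Dict[str, Node], node: Node) -> List[str]:
    """List, in alphabetical order, the functions an allocation places on a node."""
    return sorted(name for name, where in allocation.items() if where is node)
```

Calling `functions_on(INTERMEDIATE, Node.CLIENT_TX)` shows what is pushed to the edge in that arrangement. Client RX is deliberately absent from the tables because its functions do not depend on the allocation.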

Figure 1 – The 3 components of an MPAI-MCS Use Case

3 MCS application examples

Examples of applications targeted by the MPAI-MCS standard are:

- Local Avatar Videoconference where realistic virtual twins (Avatars) of physical twins (Participants) sit around a table and hold a conference. In this case, as many functions as practically possible are assigned to clients because there may be strict security requirements regarding participant identity and the avoidance of clear-text information (e.g., the voice and emotions of participants) being sent to the server.

- Virtual eLearning where avatars may still be realistic virtual twins of humans but there may be fewer concerns about identity and the information transmitted to the server. In this case most Client TX functions can be delegated to the Server.

- Teleconsulting where a user having trouble with a machine calls an expert. The expert’s avatar works on a model of the machine, teaching the user’s avatar how to use the machine.

4 MCS features

With reference to Figure 1, MPAI-MCS has the following features:

- Ambients are 3D virtual spaces representing actual, realistic or fictitious physical spaces, populated by 3D objects representing actual, realistic or fictitious Visual Objects with specified affordances and Audio Objects propagating according to the specific Visual Object affordances (e.g., walls, objects).

- Avatars move around in Ambients, express emotions and perform gestures corresponding to actual, realistic or fictitious Humans.

- Humans generate media information, which is captured by TX Clients that generate and transmit coded representations of Text, Human Features (e.g., emotion extracted from speech and face), Audio and Video.

- The Participant is sensed by different types of sensors:

  - Microphones

  - Cameras, possibly volumetric

  - Sensors of human commands (mouse, haptic, etc.)

- Commands are agnostic of the specific type of sensor.

- Ambients may be supported by Servers whose goal is to create digital representations of Ambients, and possibly of their components, and distribute them to RX Clients in:

  - Final form, in which case all participating Humans share exactly the same user experience.

  - Component form, in which case participating Humans may have different user experiences depending on how they assemble the components.

- Ambients are populated by 3D Audio-Visual Objects generated by a possibly human-animated file or by a device operating in real time.

- Humans may use Commands to act on 3D Audio-Visual Objects, performing such actions as:

  - Manually or automatically define a portion of the 3D AV object.

  - Count objects per assigned volume size.

  - Detect structures in (a portion of) the 3D AV object.

  - Assign physical properties to (different parts of) the 3D AV object.

  - Annotate portions of the 3D AV object.

  - Deform/sculpt the 3D AV object.

  - Combine 3D AV objects.

  - Call an anomaly detector on a portion with a criterion.

  - Create links between different parts of the 3D AV object.

  - Follow a link to another portion of the 3D AV object.

  - 3D print (portions of) the visual part of the 3D AV object.
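Two of the features above, sensor-agnostic Commands and counting objects per assigned volume size, can be sketched together. This is an illustration under stated assumptions, not the MPAI-MCS Command format: the event dictionaries, the gesture table and the names `Command`, `from_mouse`, `from_haptic`, `count_objects` and `execute` are all hypothetical, and objects are reduced to single 3D points.

```python
import math
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float, float]
Cell = Tuple[int, int, int]


@dataclass
class Command:
    action: str
    params: Dict[str, float] = field(default_factory=dict)


def from_mouse(event: dict) -> Command:
    # Hypothetical mouse event: a menu choice plus a slider value.
    return Command(event["menu_item"], {"cell_size": float(event["slider"])})


# Hypothetical mapping from haptic gestures to actions.
GESTURE_TO_ACTION = {"pinch": "count_objects"}


def from_haptic(event: dict) -> Command:
    # Hypothetical haptic event: a gesture plus a finger spread in metres.
    action = GESTURE_TO_ACTION[event["gesture"]]
    return Command(action, {"cell_size": float(event["spread_m"])})


def count_objects(positions: Iterable[Point], cell_size: float) -> Counter:
    """Count object positions per cubic cell of edge length cell_size."""
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    return Counter(
        tuple(math.floor(coord / cell_size) for coord in p) for p in positions
    )


def execute(command: Command, positions: Iterable[Point]) -> Counter:
    # Only one action is implemented in this sketch.
    if command.action == "count_objects":
        return count_objects(positions, command.params["cell_size"])
    raise NotImplementedError(command.action)
```

Both adapters produce the same `Command` value, so `execute` never needs to know which sensor the Participant used. Cells are identified by integer indices, so a point at x = -0.1 with a cell size of 1 falls in the cell with index -1 along that axis.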
