| 1 Functions | 2 Reference Model | 3 Input/Output Data |

| 4 SubAIMs | 5 JSON Metadata | 6 Profiles |

| 7 Reference Software | 8 Conformance Testing | 9 Performance Assessment |

1 Functions

Multimodal Emotion Fusion (MMC-MEF):

| Direction | Data |
|---|---|
| Receives | Emotion (Text) |
| | Emotion (Speech) |
| | Emotion (Face) |
| Produces | Input Entity’s Emotion |

2 Reference Model

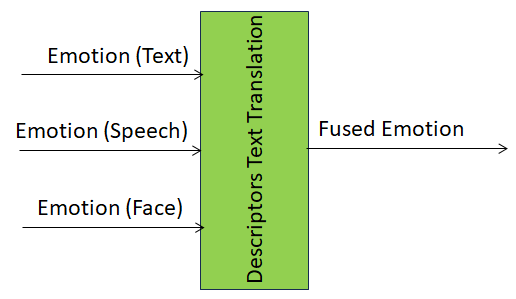

Figure 1 depicts the Reference Model of the Multimodal Emotion Fusion (MMC-MEF) AIM.

Figure 1 – The Multimodal Emotion Fusion (MMC-MEF) AIM Reference Model

3 Input/Output Data

Table 1 specifies the Input and Output Data of the Multimodal Emotion Fusion (MMC-MEF) AIM.

Table 1 – I/O Data of the Multimodal Emotion Fusion (MMC-MEF) AIM

| Input data | From | Description |
|---|---|---|
| Emotion (Text) | PS-Text Interpretation | Emotion in Text |
| Emotion (Speech) | PS-Speech Interpretation | Emotion in Speech |
| Emotion (Face) | PS-Face Interpretation | Emotion in Face |
| **Output data** | **To** | **Description** |
| Input Emotion | Downstream AIM | Emotion of the Input Entity, estimated by fusing Emotion (Text), Emotion (Speech), and Emotion (Face) |
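This page specifies the interface of the AIM but not how the three input Emotions are combined. As a purely hypothetical illustration of that interface, the Python sketch below fuses them by majority vote over (emotion_SetName, emotion_Name) pairs, breaking ties in the order Text, Speech, Face. Majority voting is a swapped-in technique, not a method defined by MPAI. The `Emotion` dataclass and `fuse_emotions` function are assumptions made for this example; the field names borrow the emotion_Name and emotion_SetName attributes from the conformance criteria, and a real Emotion record may carry more information.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Emotion:
    # Hypothetical record: only the two attributes named in the
    # conformance criteria are modelled here.
    emotion_Name: Optional[str]
    emotion_SetName: Optional[str]


def fuse_emotions(
    text: Optional[Emotion],
    speech: Optional[Emotion],
    face: Optional[Emotion],
) -> Emotion:
    """Fuse per-modality Emotions by majority vote (illustrative only)."""
    # Keep only inputs that exist and name an emotion; the tuple order
    # (Text, Speech, Face) also fixes the tie-breaking priority.
    candidates = [
        e for e in (text, speech, face)
        if e is not None and e.emotion_Name is not None
    ]
    if not candidates:
        # No usable input: emit both attributes with null values.
        return Emotion(emotion_Name=None, emotion_SetName=None)
    # Count identical (set, name) pairs; most_common() orders equal counts
    # by first appearance, so ties go to the earliest modality.
    votes = Counter((e.emotion_SetName, e.emotion_Name) for e in candidates)
    (set_name, name), _ = votes.most_common(1)[0]
    return Emotion(emotion_Name=name, emotion_SetName=set_name)
```

Missing or unnamed inputs are skipped, so the sketch still produces an output when one modality is unavailable.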

4 SubAIMs

No SubAIMs.

5 JSON Metadata

https://schemas.mpai.community/MMC/V2.2/AIMs/MultimodalEmotionFusion.json

6 Profiles

No Profiles.

7 Reference Software

8 Conformance Testing

| Input Data | Data Type | Input Conformance Testing Data |
|---|---|---|
| Emotion (Text) | JSON | All Emotion (Text) JSON files to be drawn from Emotion JSON Files |
| Emotion (Speech) | JSON | All Emotion (Speech) JSON files to be drawn from Emotion JSON Files |
| Emotion (Face) | JSON | All Emotion (Face) JSON files to be drawn from Emotion JSON Files |
| **Output Data** | **Data Type** | **Output Conformance Testing Criteria** |
| Input Emotion | JSON | All Emotion JSON files shall validate against the Emotion Schema |

The two attributes emotion_Name and emotion_SetName must both be present in the output Emotion JSON file, although the value of either attribute may be null.
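The attribute rule above can be checked mechanically. The following Python sketch, using only the standard `json` module, reports problems with an output Emotion JSON file. It assumes that emotion_Name and emotion_SetName are top-level keys of a JSON object, which may not match the actual Emotion schema, and it reads the rule as allowing each attribute to be null independently. The function name `check_emotion_attributes` is hypothetical, and the check complements, rather than replaces, validation against the Emotion Schema.

```python
import json
from typing import Any, List

# Attributes that must be present in every output Emotion JSON file.
REQUIRED_ATTRIBUTES = ("emotion_Name", "emotion_SetName")


def check_emotion_attributes(json_text: str) -> List[str]:
    """Return a list of problems; an empty list means the check passed.

    Assumes both attributes are top-level keys of a JSON object.
    """
    try:
        data: Any = json.loads(json_text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value is not a JSON object"]
    # Presence is mandatory; a null value (None after parsing) is accepted.
    return [
        f"missing attribute: {name}"
        for name in REQUIRED_ATTRIBUTES
        if name not in data
    ]
```

For example, `{"emotion_Name": "happy", "emotion_SetName": null}` passes, while `{"emotion_Name": "happy"}` is reported as missing emotion_SetName.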