
1 Introduction

During the last decade, Neural Networks have been deployed in an increasing variety of domains, and the need to trace them has grown accordingly. One reason is that producing Neural Networks is costly, in terms of both resources (GPUs, CPUs, memory) and time, so NN owners need to protect their investment. Another reason concerns users of NN-based services, who want a certified service quality.

NN Traceability offers solutions to satisfy both needs, ensuring that a deployed Neural Network is traceable and untampered.

Inherited from the multimedia realm, watermarking groups a family of methodological and application tools that make it possible to imperceptibly and persistently insert some metadata (payload) into an original NN model. Subsequently, detecting/decoding this metadata from the model itself or from any of its inferences provides the means to trace the source and to verify authenticity.

An additional traceability technology is fingerprinting, which relates to a family of methodological and application tools that extract some salient information from the original NN model and subsequently identify that model based on the extracted information.
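As an illustration of passive tracing, the sketch below derives a binary fingerprint from a model's flattened weights by taking the signs of seeded random projections (in the spirit of SimHash-style locality-sensitive hashing), and identifies a suspect model as the registered model at the smallest Hamming distance. The function names, the projection scheme, the 64-bit length, and the `max_distance` threshold are assumptions for illustration, not methods specified by MPAI.

```python
import random


def fingerprint(weights, n_bits=64, seed=0):
    """Hypothetical fingerprint: signs of n_bits seeded random projections of the weights."""
    rng = random.Random(seed)  # same seed -> same projections for every model of this size
    bits = []
    for _ in range(n_bits):
        projection = sum(w * rng.gauss(0.0, 1.0) for w in weights)
        bits.append(1 if projection >= 0 else 0)
    return bits


def hamming(a, b):
    """Number of positions at which two equal-length bit lists differ."""
    return sum(x != y for x, y in zip(a, b))


def identify(suspect_weights, registry, max_distance=8, seed=0):
    """Return the name of the closest registered model, or None if none is close enough."""
    fp = fingerprint(suspect_weights, seed=seed)
    best_name, best_dist = None, len(fp) + 1
    for name, ref_fp in registry.items():
        d = hamming(fp, ref_fp)
        if d < best_dist:
            best_name, best_dist = name, d
    return best_name if best_dist <= max_distance else None
```

Because small weight perturbations barely move the projections, a slightly modified copy keeps most fingerprint bits, whereas an unrelated model differs in about half of them.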

The present document provides a collection of use cases and a set of functional requirements that should be used by respondents to the NNW-TEC Call for Technologies N2416.

2 Purpose of the standard

MPAI has developed Technical Specification: Neural Network Traceability (MPAI-NNT) V1.0. This standard specifies methods to evaluate the following aspects of Active (Watermarking) and Passive (Fingerprinting) Neural Network Traceability Methods:

- The ability of a Neural Network Traceability Detector/Decoder to detect/decode/match Traceability Data when the traced Neural Network has been modified.

- The computational cost of injecting, extracting, detecting, decoding, or matching Traceability Data.

- Specifically for active tracing methods, the impact of inserted Traceability Data on the performance of a neural network and on its inference.
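The first two evaluation aspects above (robustness under a Modification, and computational cost) can be sketched as a small harness. It assumes a Modification that adds zero-mean Gaussian noise to the weights, with the noise standard deviation as its Parameter, and exercises a toy watermark that writes each payload bit into the sign of one key-selected weight. All names and choices are illustrative assumptions; the Modifications and measurement procedures specified in MPAI-NNT may differ. The third aspect (impact on task performance) would require running the Task and is not covered here.

```python
import random
import time


def ber(embedded, decoded):
    """Bit Error Rate: fraction of payload bits decoded incorrectly."""
    return sum(a != b for a, b in zip(embedded, decoded)) / len(embedded)


def gaussian_noise(weights, sigma, seed=0):
    """Hypothetical Modification: add N(0, sigma^2) noise; sigma is its Parameter."""
    rng = random.Random(seed)
    return [w + rng.gauss(0.0, sigma) for w in weights]


def evaluate(weights, payload, embed, decode, modify, parameters):
    """Embed once, then return (embed_seconds, [(parameter, BER, decode_seconds), ...])."""
    t0 = time.perf_counter()
    marked = embed(weights, payload)
    embed_seconds = time.perf_counter() - t0
    rows = []
    for p in parameters:
        attacked = modify(marked, p)
        t0 = time.perf_counter()
        decoded = decode(attacked, len(payload))
        rows.append((p, ber(payload, decoded), time.perf_counter() - t0))
    return embed_seconds, rows


# Toy watermark used only to exercise the harness (an assumption, not an MPAI method):
# each payload bit is written into the sign of one key-selected weight.
def toy_positions(n_weights, n_bits, key=42):
    return random.Random(key).sample(range(n_weights), n_bits)


def toy_embed(weights, payload, margin=0.1):
    marked = list(weights)
    for bit, p in zip(payload, toy_positions(len(weights), len(payload))):
        magnitude = abs(marked[p]) + margin  # margin keeps the sign away from zero
        marked[p] = magnitude if bit else -magnitude
    return marked


def toy_decode(weights, n_bits):
    return [1 if weights[p] >= 0 else 0 for p in toy_positions(len(weights), n_bits)]
```

Sweeping the Parameter over increasing values gives a robustness curve (BER versus Modification strength) alongside the measured embedding and decoding times.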

Use cases and functional requirements included in the present document are intended to be used in the development of the new standard Technical Specification: Neural Network Watermarking (MPAI-NNW) – Technologies (NNW-TEC) V1.0.

The new standard shall make it possible:

- To ensure that data provided by an Actor and received by another Actor are not compromised, i.e., that the received data or its semantics can be used for the intended purpose.

- To identify the data provider and data receiver Actors.

An Actor is a process producing, providing, processing, or consuming information.

3 Definitions

| Term | Definition |
|------|------------|
| Actor | A process that produces, provides, processes or consumes information. |
| Attacks | Any transformation, malicious or not, applied after the mark injection. Attacks can be of various types. Removal attacks make the information conveyed by the watermark undetectable/unreadable. Geometric attacks destroy the watermark synchronization rather than remove it. Cryptographic attacks detect and remove the mark without knowledge of the key, thanks to knowledge of the embedded mark. Protocol attacks embed another watermark and/or create an ambiguous situation upon mark detection/decoding. |
| Black-Box | Access is granted only to the inference of the network, not to the network itself. |
| Data payload | The amount of information injected through the watermarking process. |
| Distance | A measure of the difference between two datasets, e.g., the Hamming distance or a correlation coefficient. |
| Imperceptibility | The property that the inference quality of the model on its original task is not significantly degraded by the watermark injection. |
| Modification | A method used to simulate an attack for the purpose of NN testing. |
| Ownership metadata | The data carried by the watermark representing the owner and the usage conditions. |
| Parameter | A set of values characterizing the strength of a Modification. |
| Robustness | The ability of the watermark to withstand a prescribed class of attacks. |
| Symbol Error Rate (SER) | The fraction of retrieved symbols that differ from the embedded symbols; also called Multi-Symbol Error Rate. When only two symbols (bits) are used, it is referred to as Bit Error Rate (BER). |
| Source | An information provider in a workflow. It can be a sensor capturing information from the real world and converting it into data, or a machine producing data (e.g., data produced by any type of NN, from classifiers to generative models). |
| Sink | An information consumer in a workflow; it can be a rendering device or a machine performing the final processing of the data in the workflow. A Sink may convert data into information directly perceptible by humans. |
| Task | A specific use of the watermarked Neural Network, such as classification, multimedia coding, etc. |
| Tester | An entity executing a testing process to be specified by the standard. |
| Traceability Technology (TT) | A technology that can be used by an Actor to insert Traceability Data. |
| Traceability Data | The data inserted by an Active Traceability Method, or the result of applying a Detection algorithm to an NN for a Passive Traceability Method. |
| Watermark decoder | An algorithm able to decode an inserted watermark, when applied to a watermarked network. |
| Watermark detector | An algorithm able to detect an inserted watermark, when applied to a watermarked network. |
| Watermark overwriting | A particular case of protocol attack, in which the attacker embeds another watermark to create an ambiguous situation where both parties can claim ownership. |
| White-Box | Access is granted to the network itself, making it possible to embed the watermark inside the weights. |
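The error-rate and distance definitions above can be made concrete with a few helper functions. This is a minimal sketch; the function names are assumptions, and the Pearson coefficient is used as one example of a correlation measure.

```python
import math


def symbol_error_rate(embedded, retrieved):
    """SER: fraction of retrieved symbols differing from the embedded ones (BER for bits)."""
    if len(embedded) != len(retrieved) or not embedded:
        raise ValueError("sequences must be non-empty and of equal length")
    return sum(e != r for e, r in zip(embedded, retrieved)) / len(embedded)


def hamming_distance(a, b):
    """Number of positions at which two equal-length sequences differ."""
    if len(a) != len(b):
        raise ValueError("sequences must have equal length")
    return sum(x != y for x, y in zip(a, b))


def correlation(x, y):
    """Pearson correlation coefficient between two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)
```

For bit sequences, the Hamming distance divided by the sequence length equals the BER.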

4 Actors affected by NN tracking technology

Four types of Actors are identified as playing a traceability-related role in the use cases.

- NN owner – the developer of the NN, who needs to ensure that ownership of the NN can be claimed.

- NN watermarking provider – the developer of the watermarking technology able to carry a payload in a neural network or in an inference.

- NN customer – the user who needs the NN owner’s NN to make a product or offer a service.

- NN end-user – the user who buys an NN-based product or subscribes to an NN-based service.

Examples of Actors are:

- Edge-devices and software

- Application devices and software

- Network devices and software

- Network services

5 Use case classification

The use cases are structured into two categories: the first relates to the NN per se (i.e., to the data representation of the model, as discussed in Section 5.1), while the second relates to the inference (i.e., to the result produced by the network when fed with some input data, as discussed in Section 5.2).

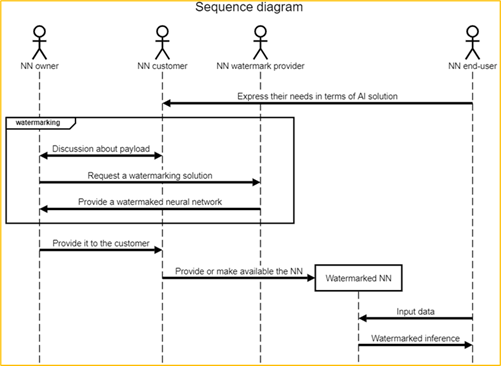

The presentation of the use cases includes sequence diagrams describing the positions and actions of the four main Actors in the workflow.

5.1 Use cases related to applying tracking technology to the Neural Network model

In this set of use cases, a watermark is embedded into a Neural Network model. Subsequent watermark detection provides either the identity of the Actors and models, or information about the loss of integrity of the model.

Two types of use case belong to this category:

- payload, i.e., data carried by the watermark is used to identify the Actors or the model; this case is presented in Section 5.1.1.

- loss of integrity, i.e., data carried by the watermark is used to identify modifications in the model; this case is presented in Section 5.1.2.

In this set of use cases, Neural Network Watermarking methods require the Neural Network model to be available during both the watermark embedding and the watermark detection/decoding stages.
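In this white-box setting, both embedding and decoding operate directly on the weights. The sketch below follows the spirit of the projection-based regularizer of Uchida et al. (2017): a secret key seeds a random projection matrix X, and bit i of the payload is carried by the sign of the i-th projection of the weight vector. Unlike the original proposal, which adds the term during training, the weights are optimized directly here, with a penalty `lam` that keeps them close to their original values (a crude stand-in for Imperceptibility). Function names, the key derivation, and the hyperparameters are assumptions.

```python
import math
import random


def _sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def projection_matrix(n_bits, n_weights, key):
    """Derive the secret n_bits x n_weights Gaussian projection matrix from a key."""
    rng = random.Random(key)
    return [[rng.gauss(0.0, 1.0) for _ in range(n_weights)] for _ in range(n_bits)]


def embed(weights, bits, key, lr=0.01, steps=100, lam=0.1):
    """Nudge the weights so that sign(X . w) encodes bits, staying close to the original."""
    X = projection_matrix(len(bits), len(weights), key)
    w = list(weights)
    for _ in range(steps):
        # Gradient of (lam / 2) * ||w - w_original||^2: keeps the change small.
        grad = [lam * (wi - w0) for wi, w0 in zip(w, weights)]
        for row, b in zip(X, bits):
            s = _sigmoid(sum(x * wi for x, wi in zip(row, w)))
            # Gradient of the binary cross-entropy between sigmoid(row . w) and bit b.
            for j, x in enumerate(row):
                grad[j] += (s - b) * x
        w = [wi - lr * g for wi, g in zip(w, grad)]
    return w


def decode(weights, n_bits, key):
    """White-box decoding: needs the weights themselves and the secret key."""
    X = projection_matrix(n_bits, len(weights), key)
    return [1 if sum(x * wi for x, wi in zip(row, weights)) >= 0 else 0 for row in X]
```

Decoding with a different key projects onto unrelated directions and yields essentially random bits, which is why the key acts as the secret in this construction.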

5.1.1 Payload

Data carried by the watermark can be used to serve various application domains, for instance to identify:

- the ownership of an NN.

- an NN, in the way a DOI identifies a digital object.

5.1.1.1 Identify the ownership of an NN

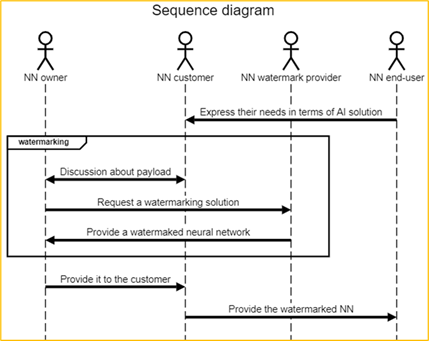

Figure 1: Identify the ownership of an NN use case: NN owner and NN customer identifiers are inserted

Description of Figure 1 workflow:

- NN customer collects product/service needs from NN end-users.

- NN customer requests an NN model from NN owner in order to create the product/service requested by the NN end-user.

- NN customer and NN owner share the need to protect NN intellectual property; NN customer does not want others to use the model to make similar products or offer similar services; NN owner wants to acquire other NN customers. Ideally, the NN end-user ID should also be added to the watermark (cf. the workflow in Figure 2).

- NN end-user acquires the product and/or access to the service with the embedded NN watermark.
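The identifiers inserted in the Figure 1 workflow could be serialized into a fixed-length bit payload before embedding. The sketch below assumes 16-bit NN owner and NN customer identifiers followed by a CRC-32 checksum, so that a decoder can reject a corrupted payload instead of reporting wrong identities; the layout, the widths, and the function names are hypothetical and not part of MPAI-NNW.

```python
import zlib

ID_BITS = 16   # hypothetical width of each identifier
CRC_BITS = 32  # CRC-32 checksum appended to the identifiers


def to_bits(value, width):
    """Big-endian list of `width` bits."""
    if not 0 <= value < (1 << width):
        raise ValueError("value does not fit in the given width")
    return [(value >> (width - 1 - i)) & 1 for i in range(width)]


def from_bits(bits):
    value = 0
    for b in bits:
        value = (value << 1) | b
    return value


def build_payload(owner_id, customer_id):
    """Payload = owner ID | customer ID | CRC-32 of the identifier bits."""
    body = to_bits(owner_id, ID_BITS) + to_bits(customer_id, ID_BITS)
    return body + to_bits(zlib.crc32(bytes(body)), CRC_BITS)


def parse_payload(bits):
    """Return (owner_id, customer_id), or None if the checksum does not match."""
    body, check = bits[:2 * ID_BITS], from_bits(bits[2 * ID_BITS:])
    if zlib.crc32(bytes(body)) != check:
        return None
    return from_bits(body[:ID_BITS]), from_bits(body[ID_BITS:])
```

Since CRC-32 detects every single-bit error, a payload with one flipped bit is rejected rather than misattributed.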

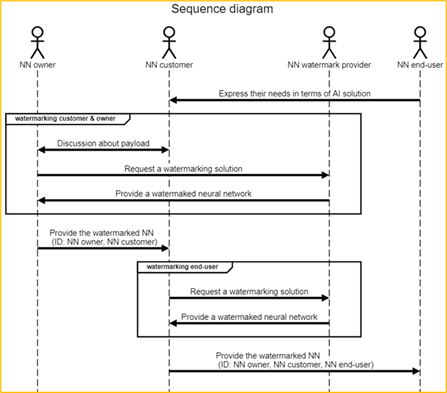

Figure 2: Identify the ownership of an NN use case: in addition to NN owner and NN customer identifiers, the NN end-user identifier is also inserted

Description of Figure 2 workflow:

- NN customer collects product/service needs from NN end-users.

- NN customer requests an NN model from NN owner in order to create the product/service requested by the NN end-user.

- NN customer and NN owner share the need to protect NN intellectual property; NN customer does not want others to use the model to make similar products or offer similar services; NN owner wants to acquire other NN customers.

- NN customer needs to make sure that NN end-users do not share the AI solution and therefore inserts an identifier for each NN end-user.

- NN end-user acquires the product and/or access to the service with the embedded NN watermark.
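For the Figure 2 workflow, one simple way for the NN customer to trace a leaked copy is to give each NN end-user a distinct random codeword, embed it as the payload, and match a decoded (possibly corrupted) payload to the closest issued codeword. The sketch assumes 64-bit codewords and a tolerance of 16 bit errors, both hypothetical, as are the function names. Random codewords do not resist collusion, where several end-users combine their copies; collusion-resistant fingerprinting codes such as Tardos codes address that case.

```python
import random


def issue_codewords(end_user_ids, n_bits=64, key=2024):
    """Hypothetical: derive one pseudo-random codeword per NN end-user from a secret key."""
    rng = random.Random(key)
    return {uid: [rng.randint(0, 1) for _ in range(n_bits)] for uid in end_user_ids}


def trace_leak(decoded_bits, codewords, max_errors=16):
    """Return the end-user whose codeword is closest to the decoded payload, or None."""
    best_uid, best_d = None, None
    for uid, cw in codewords.items():
        d = sum(a != b for a, b in zip(decoded_bits, cw))
        if best_d is None or d < best_d:
            best_uid, best_d = uid, d
    return best_uid if best_d is not None and best_d <= max_errors else None
```

The tolerance absorbs bit errors caused by Modifications of the leaked copy, while an unrelated payload, about 32 bits away from every codeword, is not attributed to anyone.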

5.1.1.2 Identify an NN

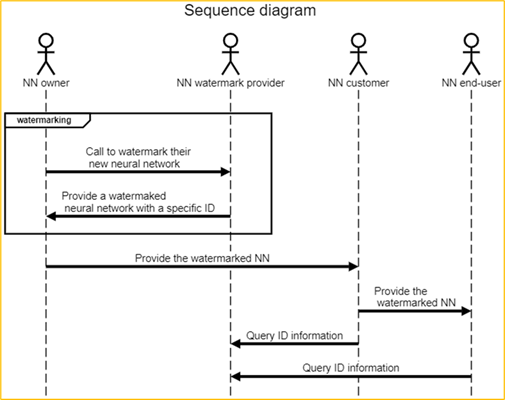

Figure 3: Identify an NN use case: NN receives an ID (e.g. DOI)

Description of Figure 3 workflow:

- NN owner wants its NN to receive a specific identifier.

- NN watermark provider provides a solution that assigns a specific identifier to any new Neural Network and manages the ID usage through its lifecycle (e.g., validation to third parties, or deletion of the ID record when it is no longer used).
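The ID lifecycle managed by the NN watermark provider in Figure 3 (issue, validate, delete) can be sketched as a small registry. Everything here is hypothetical: the `nnw/` prefix only mimics the prefix/suffix structure of a DOI, and each record simply maps an identifier to its owner. The issued identifier would then be embedded as the watermark payload.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class NNIdRegistry:
    """Hypothetical DOI-like registry of NN identifiers kept by the watermark provider."""
    prefix: str = "nnw"
    _records: dict = field(default_factory=dict, repr=False)

    def issue(self, owner: str) -> str:
        """Create a new random identifier for an NN and record its owner."""
        nn_id = f"{self.prefix}/{secrets.token_hex(8)}"
        self._records[nn_id] = owner
        return nn_id

    def validate(self, nn_id: str, claimed_owner: str) -> bool:
        """Answer a third party asking whether nn_id is registered to claimed_owner."""
        return self._records.get(nn_id) == claimed_owner

    def delete(self, nn_id: str) -> None:
        """Remove the record when the identifier is no longer used."""
        self._records.pop(nn_id, None)
```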

5.1.2 Loss of integrity

The purpose of this use case is to detect and/or localize any modification of the NN model by embedding a fragile watermark inside the Neural Network.

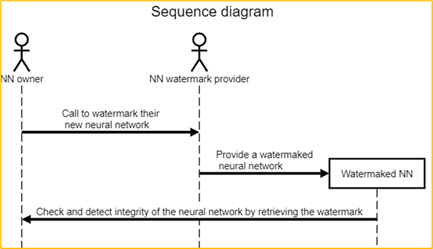

Figure 4: Check the NN integrity use case

Description of Figure 4 workflow:

- NN owner wants a watermark that permits them to check the integrity of the NN.

- NN watermark provider inserts an integrity validation watermark in the NN.

- NN owner can distribute the Watermarked NN to their customers.

- NN owner can check the integrity and detect modifications of their NN.
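A fragile watermark for the Figure 4 workflow could, for instance, follow the classical least-significant-bit (LSB) authentication approach known from image watermarking. In the sketch below, the weights are assumed to be already quantized to int8 and held as Python ints; they are split into blocks, a keyed HMAC-SHA256 of each block's index and upper seven bits is computed, and the first bits of that tag overwrite the block's LSBs. A later change makes the recomputed tag disagree with the stored LSBs (except with probability about 2^-16 per modified 16-weight block), which both detects and localizes the modification. Block size, key handling, and function names are assumptions.

```python
import hashlib
import hmac

BLOCK = 16  # hypothetical number of weights protected by one authentication tag


def _tag_bits(key, index, block):
    """Authenticate the block index and the 7 most significant bits of each int8 weight."""
    msg = index.to_bytes(4, "big") + bytes((w & ~1) & 0xFF for w in block)
    digest = hmac.new(key, msg, hashlib.sha256).digest()
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(len(block))]


def embed_fragile(weights, key):
    """Overwrite each weight's LSB with one bit of its block's tag."""
    marked = list(weights)
    for start in range(0, len(marked), BLOCK):
        block = marked[start:start + BLOCK]
        for offset, bit in enumerate(_tag_bits(key, start // BLOCK, block)):
            marked[start + offset] = (block[offset] & ~1) | bit
    return marked


def tampered_blocks(weights, key):
    """Return the indices of blocks whose stored LSBs no longer match their tag."""
    bad = []
    for start in range(0, len(weights), BLOCK):
        block = weights[start:start + BLOCK]
        if [w & 1 for w in block] != _tag_bits(key, start // BLOCK, block):
            bad.append(start // BLOCK)
    return bad
```

Overwriting only the LSBs changes each quantized weight by at most one step, which keeps the impact on inference small, while any edit, even a single flipped bit, breaks the watermark of the affected block.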

5.2 Inference

In this set of use cases, a watermark is embedded into the inference of a Neural Network. Subsequent watermark detection provides either the identity of the Actors and models, or information about the loss of integrity of the model.

The four use cases described in Section 5.1 are not restricted to watermarking the NN model and can also be applied to watermarking the NN inference. For instance, Figure 5 reflects the Identify the ownership of an NN use case for NN inference.

In this set of use cases, Neural Network Watermarking methods require the Neural Network model to be available only during the embedding stage, while the decoding/detection stage is based on the inference produced by the model when fed with application data.

Figure 5: Watermarked NN inference use case

Description of Figure 5 workflow:

- NN customer collects product/service needs from NN end-users.

- NN customer requests an NN model from NN owner in order to create the product/service requested by the NN end-user.

- NN customer and NN owner share the need to protect NN intellectual property; NN customer does not want others to use the model to make similar products or offer similar services; NN owner wants to acquire other NN customers.

- NN end-user can feed the Watermarked NN with input data and receive a watermarked inference. The ID-related information it contains can be, for instance, the same as in Section 5.1.

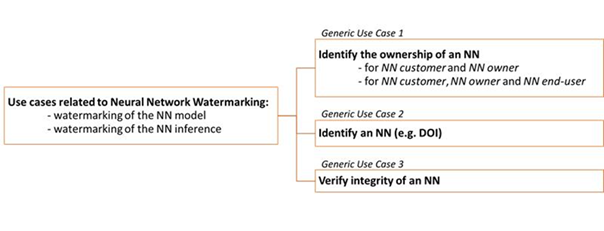
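In the inference-based setting of Section 5.2, the detector observes only the model's outputs. One well-known way to realize this is trigger-set (backdoor-based) watermarking, as proposed by Adi et al. (2018): the NN owner trains the model to return chosen labels on a secret set of trigger inputs, and later queries a suspect model on that set. The sketch below covers only verification and assumes the model is any callable mapping an input to a class label. The binomial false-claim bound treats a non-watermarked model's answers as independent uniform guesses, which is a simplifying assumption; the names and the `alpha` threshold are hypothetical.

```python
from math import comb


def count_matches(model, trigger_set):
    """Query the model on each secret trigger input and count the expected labels it returns."""
    hits = sum(1 for x, label in trigger_set if model(x) == label)
    return hits, len(trigger_set)


def false_claim_probability(hits, n, n_classes):
    """P(at least `hits` of n labels match by chance) for a model guessing uniformly."""
    p = 1.0 / n_classes
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(hits, n + 1))


def verify_ownership(model, trigger_set, n_classes, alpha=1e-6):
    """Claim ownership only if chance agreement is less likely than alpha."""
    hits, n = count_matches(model, trigger_set)
    return false_claim_probability(hits, n, n_classes) < alpha
```

Only black-box access is needed: the model weights are never inspected, which matches the requirement that the model be available only during embedding.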

5.3 Summary of the use-cases

The use cases identified so far and presented in this document can be structured according to Figure 6.

Figure 6: Retrospective view on the use cases

6 Service and application scenarios

Relevant services and applications should benefit from one or several conventional NN tasks such as:

- Video/image/audio/speech/text classification

- Video/image/audio/speech/text segmentation

- Video/image/audio/speech/text generation

- Video/image/audio/speech decoding

In the following, the generic use cases identified in Section 5 are aggregated into three complex service and application scenarios. Additional NN tasks can be considered:

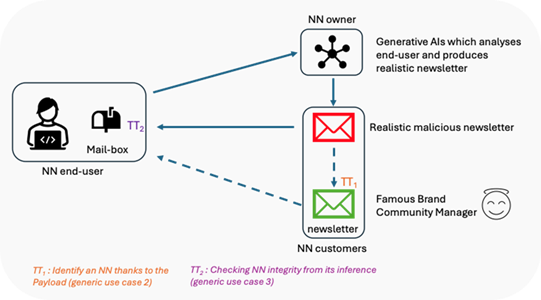

6.1 Traceable newsletter service

An end-user subscribes to a newsletter that is produced, according to the end-user profile, by a Generative AI service hosted by an NN customer. This profile is either produced by a generative AI from preferences implicitly expressed by the end-user or explicitly provided by the end-user, as illustrated in Figure 7.

An attacker can maliciously modify the newsletter so that it conveys different information than intended by the Generative AI. Such a modification can result from two types of attacks. In the first type, the Generative AI itself is attacked by modifying the weights of the network producing the newsletter; such weight modifications can be produced by various means, such as repeated queries to the network. In the second type, the newsletter is modified during its transmission to the subscriber.

To cope with the first type of attack, the newsletter service provider (NN customer) applies a Traceability Technology (TT) to each newsletter and checks the model authenticity before the newsletter is sent. Such a TT check refers to Generic Use Case 3 in Section 5.1.2, applied to the inference as described in Section 5.2, where the integrity of the NN is checked from its inference. To cope with the second type of attack, each subscriber receives a key for decoding the TT. The TT decoding process runs in the mailbox and verifies the traceability information; if verification fails, the newsletter is labeled as a scam. This check also refers to Generic Use Case 3.

From the NN customer's perspective, TT makes it possible to identify the NN through its payload (Generic Use Case 2 in Section 5.1.1.2).

Figure 7: AI-generated newsletter example


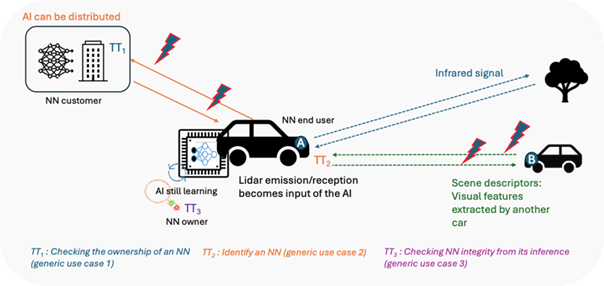
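For the mailbox-side check against the second type of attack, one possible TT for text is a keyed statistical watermark. The sketch below is a simplified version of the "green list" scheme of Kirchenbauer et al. (2023): the key and the previous token pseudo-randomly mark each next token as green with probability `GAMMA`, a watermarked generator favors green tokens, and the detector labels a newsletter as a scam when the z-score of the green-token count falls below a threshold. Whitespace tokenization, `GAMMA = 0.5`, the threshold of 4, and all names are assumptions; `toy_generate` only imitates the generator side.

```python
import hashlib
import hmac
import math

GAMMA = 0.5  # hypothetical probability that a token is "green" at a given position


def is_green(key, prev_token, token):
    """Keyed pseudo-random green/red decision seeded by the previous token."""
    msg = f"{prev_token}\x00{token}".encode()
    return hmac.new(key, msg, hashlib.sha256).digest()[0] < int(256 * GAMMA)


def z_score(key, tokens):
    """How far the green-token count lies above what unwatermarked text would give."""
    pairs = list(zip(tokens, tokens[1:]))
    t = len(pairs)
    if t == 0:
        return 0.0
    green = sum(is_green(key, a, b) for a, b in pairs)
    return (green - GAMMA * t) / math.sqrt(t * GAMMA * (1 - GAMMA))


def label_newsletter(key, text, threshold=4.0):
    """Mailbox-side check: 'authentic' if the watermark is detected, else 'scam'."""
    return "authentic" if z_score(key, text.split()) >= threshold else "scam"


def toy_generate(key, vocab, length):
    """Stand-in for a watermarked generator: always emits a green token
    (a real language model would only bias its sampling toward green tokens)."""
    tokens = ["<s>"]
    for _ in range(length):
        tokens.append(next(v for v in vocab if is_green(key, tokens[-1], v)))
    return " ".join(tokens)
```

A newsletter rewritten in transit loses the statistical excess of green tokens, so its z-score drops toward zero and the mailbox flags it.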

6.2 Autonomous vehicle services

Autonomous vehicles may involve significant volumes of data exchange, as illustrated in Figure 9. These vehicles are controlled by AI or machine learning models that exploit a range of technologies such as LiDAR and visual data acquisition. These solutions perform various tasks, such as scene reconstruction, object detection, or path planning.

In this context, multimodal content can be sent or received in many ways: (1) car A (acting as an NN end-user) sends acquired signals to a server (acting as an NN customer or owner) for processing; (2) an embedded AI transmits instructions such as braking, turning, or accelerating to the car (NN owner and end-user); (3) another vehicle B in the environment transmits environmental information to vehicle A. Each data exchange carries a risk of attacks with critical consequences. Such attacks can be detected by the traceability technologies identified in Generic Use Cases 1, 2, and 3.

6.3 AI generated or processed information services

As an example of real images modified by a deepfake process, consider a user who would like to appear as the archetypal secret agent (say, James Bond) by interacting with a generative AI service remotely accessible in the network. The user captures a video sequence with a connected camera (in an edge device), and the encoded stream is transmitted to an edge generative AI module. This module synthesizes novel audiovisual content, which is then rendered on a large display for the user to enjoy.

Such services are not immune to security threats. For example, an attacker can intercept the encoded stream before it reaches the trusted AI server. A malicious edge-deployed generative AI model then manipulates the visual content by altering the “agent” appearance, transforming it into a hostile monster. The manipulated stream is redirected to the output interface, bypassing trust and integrity verification mechanisms.

The attacker may also target the generative AI service itself. By employing adversarial training techniques, the attacker induces model drift or injects conflicting learning signals, resulting in identity substitution or behavioral anomalies in the inference.

These attacks can be detected by the traceability technologies identified in Generic Use Cases 2 and 3.

Figure 8: AI generated or processed information services

To respondents:

MPAI requests respondents to comment on the illustrative use cases described above.

New illustrative use cases are welcome.

7 Functional requirements

The functional requirements are:

- An Actor applying a tracking technology should be able to detect whether content (a model or an inference) includes the tracking technology.

- An Actor applying a tracking technology should be able to detect whether the Source data has been processed during the transmission from a Source to a Sink.

- An Actor transmitting data including a tracking technology should be able to decide whether the data preserves:

  - the syntax of the Source data or data resulting from any processing.

  - the semantics of the Source data or data resulting from any processing.

- An Actor receiving data including a tracking technology should be able to decide whether the data preserves:

  - the syntax of the Source data or data resulting from any processing.

  - the semantics of the Source data or data resulting from any processing.

- An Actor applying a watermarking technology to Source data should be able to retrieve the payload from the NN or the NN inference, to:

  - allow the identification of the sender,

  - allow the identification of the receiver,

  - verify whether the data was sent by the intended sender,

  - verify whether the data was meant for the intended receiver,

  - verify whether the data was received by the intended receiver.

- An Actor applying a fingerprinting technology to Source data should be able to identify the NN or the NN inference.
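The watermarking-related requirements above can be read as an interface that a candidate technology exposes to Actors. The sketch below is one hypothetical way to express it in Python: a `TraceabilityDecoder` protocol with `detect` and `decode`, and a `check_delivery` function that maps a decoded sender/receiver payload onto the sender and receiver verifications listed above. All names are assumptions, and `ToyPrefixDecoder` is a plain-text stand-in used only to exercise the interface; it is not a watermark.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


@dataclass(frozen=True)
class Payload:
    sender_id: str
    receiver_id: str


class TraceabilityDecoder(Protocol):
    """Hypothetical interface for a watermark detector/decoder."""
    def detect(self, content: bytes) -> bool: ...
    def decode(self, content: bytes) -> Optional[Payload]: ...


@dataclass(frozen=True)
class DeliveryReport:
    marked: bool
    sent_by_intended_sender: bool
    meant_for_intended_receiver: bool
    received_by_intended_receiver: bool


def check_delivery(decoder: TraceabilityDecoder, content: bytes, intended_sender: str,
                   intended_receiver: str, actual_receiver: str) -> DeliveryReport:
    """Map the decoded payload onto the sender/receiver checks of the requirements."""
    if not decoder.detect(content):
        return DeliveryReport(False, False, False, False)
    payload = decoder.decode(content)
    if payload is None:  # mark present but payload unreadable
        return DeliveryReport(True, False, False, False)
    return DeliveryReport(
        marked=True,
        sent_by_intended_sender=payload.sender_id == intended_sender,
        meant_for_intended_receiver=payload.receiver_id == intended_receiver,
        received_by_intended_receiver=payload.receiver_id == actual_receiver,
    )


class ToyPrefixDecoder:
    """Toy stand-in: content shaped like b'WM|sender|receiver|data'. Not a real watermark."""
    def detect(self, content: bytes) -> bool:
        return content.startswith(b"WM|")

    def decode(self, content: bytes) -> Optional[Payload]:
        parts = content.split(b"|", 3)
        if len(parts) < 4:
            return None
        return Payload(parts[1].decode(), parts[2].decode())
```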

To respondents:

MPAI requests respondents to propose a traceability technology that fulfills the functional requirements.

MPAI requests respondents to demonstrate their methods on one or several of the use cases.

8 References

- MPAI Statutes

- MPAI Patent Policy

- MPAI Technical Specifications

- Call for Technologies: Neural Network Watermarking (MPAI-NNW) – Technologies (NNW-TEC) V1.0; MPAI N2416

- Framework Licence: Neural Network Watermarking (MPAI-NNW) – Technologies (NNW-TEC) V1.0; MPAI N2418

- Template for Responses: Neural Network Watermarking (MPAI-NNW) – Technologies (NNW-TEC) V1.0; MPAI N2419

- Technical Specification: Neural Network Watermarking (MPAI-NNW) – Neural Network Traceability (NNW-NNT) V1.0