(Tentative)

| Function | Reference Model | Input/Output Data |

| SubAIMs | JSON Metadata | Profiles |

| Reference Software | Conformance Testing | Performance Assessment |

1. Function

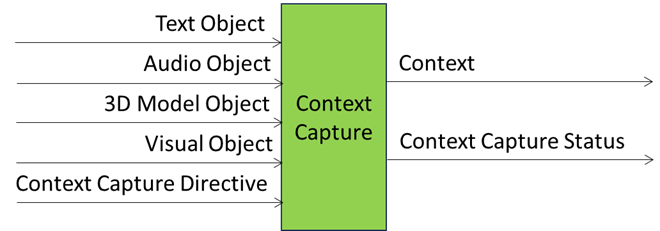

Context Capture AIM (PGM‑CXC)

- Receives

  - Text Objects representing user utterances or written input.

  - Audio Objects representing speech and environmental sounds.

  - Visual Objects representing user gestures, facial expressions, and visual scene elements.

  - 3D Model Objects representing spatial geometry and environmental structures.

  - Context Capture Directives from A‑User Control guiding modality prioritisation and acquisition strategy.

- Extracts, refines, and interprets multimodal signals to:

  - Disambiguate inputs by aligning text, audio, visual, and 3D model objects into a coherent multimodal frame.

  - Integrate standard media‑dependent descriptors produced by different AIMs into a single context instance, preserving their semantic alignment and provenance.

  - Infer relationships among signals, such as linking gestures to utterances, or mapping audio cues to spatial anchors.

  - Resolve context by applying A‑User Control directives to prioritise modalities, filter noise, and highlight salient features.

- Constructs a representation of the User environment and Entity State, including:

  - Scene grounding in spatio‑temporal coordinates.

  - User localisation (position, orientation, posture).

  - Semantic tagging of objects, actions, and environmental features.

  - Framing of cognitive, emotional, attentional, intentional, motivational, and temporal states.

- Sends

  - An Entity State to Prompt Creation for multimodal prompt generation.

  - Audio and Visual Scene Descriptors to Spatial Reasoning for environment understanding and alignment.

  - A Context Capture Status to A‑User Control in response to Context Capture Directives.

- Enables

  - Spatial Reasoning and Prompt Creation to operate with full awareness of the audio-visual environment, supporting perception‑aligned interactions and context‑aware orchestration of AIMs.

  - A‑User Control to stay informed about the implementation of Context Capture Directives.

For the purposes of this PGM-CXC specification, 3D Model Scenes and Objects and Visual Scenes and Objects are treated jointly as Visual Scenes and Objects.
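The directive-driven resolution described above (prioritising modalities and filtering noise) can be illustrated with a minimal Python sketch. It is not part of the specification: the `Signal` and `Directive` classes, their fields, and the `min_confidence` threshold are hypothetical names chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Signal:
    # Hypothetical container for one captured input.
    modality: str      # e.g. "text", "audio", "visual", "3d_model"
    payload: str       # placeholder for the actual media object
    confidence: float  # assumed quality score in [0, 1]


@dataclass
class Directive:
    # Hypothetical view of a Context Capture Directive.
    priority: list[str]          # modalities, most important first
    min_confidence: float = 0.5  # assumed noise threshold


def resolve_context(signals: list[Signal], directive: Directive) -> list[Signal]:
    """Drop low-confidence or unlisted signals, then order by directive priority."""
    rank = {modality: i for i, modality in enumerate(directive.priority)}
    kept = [
        s for s in signals
        if s.modality in rank and s.confidence >= directive.min_confidence
    ]
    # Higher-priority modalities first; within a modality, higher confidence first.
    return sorted(kept, key=lambda s: (rank[s.modality], -s.confidence))
```

In this sketch a Directive that lists audio before text and visual would surface a confident speech segment ahead of a written utterance, while a faint background sound below the threshold is discarded.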

2. Reference Model

Figure 1 gives the Context Capture (PGM-CXC) Reference Model.

Figure 1 – The Reference Model of the Context Capture (PGM-CXC) AIM

3. Input/Output Data

Table 1 – Input/Output Data of the Context Capture (PGM-CXC) AIM

| Input | Description |
|---|---|
| Text Object | User input expressed in structured text form, including written or transcribed utterances. |
| Audio Object | Captured audio signals from the scene, covering speech, environmental sounds, and paralinguistic cues. |
| 3D Model Object | Geometric and spatial data describing the environment, including structures, surfaces, and volumetric features. |
| Visual Object | Visual signals from the scene, encompassing gestures, facial expressions, and environmental imagery. |
| Context Capture Directive | Control instructions specifying modality prioritisation, acquisition parameters, or framing rules to guide the perceptual processing of an M‑Location. |

| Output | Description |
|---|---|
| Context | A time‑stamped snapshot integrating multimodal inputs into an initial situational model of the environment and User posture. |
| Context Capture Status | Scene‑level metadata describing User presence, environmental conditions, and confidence measures for contextual framing. |

4. SubAIMs (informative)

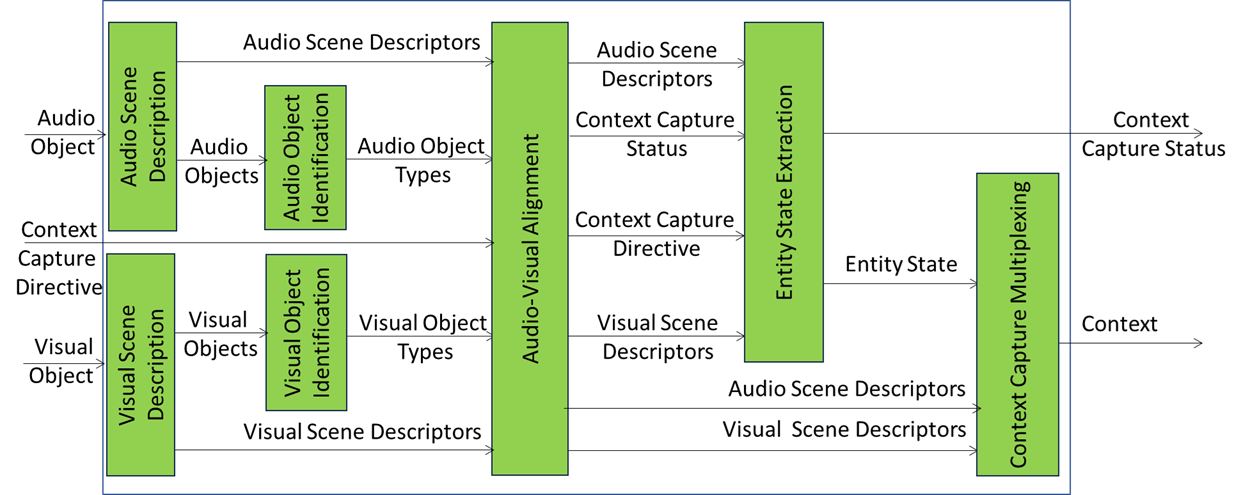

Figure 2 gives the informative Reference Model of the Context Capture (PGM‑CXC) Composite AIM.

Figure 2 – Reference Model of Context Capture (PGM‑CXC) Composite AIM

Figure 2 assumes that PGM-CXC includes the following SubAIMs:

1. Audio Scene Description (ASD)

- Parses raw Audio Objects.

- Produces Audio Scene Descriptors (structured representation of ambient sounds, speech, and spatial audio sources).

- Enables downstream modules (AOI, AVA) to work with semantically enriched audio data.

2. Visual Scene Description (VSD)

- Parses raw Visual Objects and 3D Model Objects.

- Produces Visual Scene Descriptors (structured representation of geometry and objects).

- Enables VOI and AVA to add details to spatial visual features.

3. Audio Object Identification (AOI)

- Analyses Audio Scene Descriptors.

- Classifies discrete Audio Object Types (speech segments, sound events, environmental cues).

- Enables AVA and Entity State Extraction (ESE) to align audio semantics with visual and contextual data.

4. Visual Object Identification (VOI)

- Analyses Visual Scene Descriptors.

- Classifies discrete Visual Object Types (gestures, facial expressions, environmental objects).

- Enables AVA and ESE to integrate visual semantics into context framing.

5. Audio‑Visual Alignment (AVA)

- Combines Audio Scene Descriptors, Audio Object Types, Visual Object Types, Visual Scene Descriptors, and Context Capture Directive.

- Synchronises audio and visual streams into Aligned Audio Scene Descriptors and Aligned Visual Scene Descriptors.

- Enables directive‑aware actions and reports the Context Capture State (metadata on synchronisation, directive compliance, and anchoring).

6. Entity State Extraction (ESE)

- Integrates Aligned Audio Scene Descriptors, Aligned Visual Scene Descriptors, Context Capture Directive, Context Capture State, and Personal Status (via internal PSE).

- Produces the User’s initial Entity State, a highly structured Data Type that may be reduced to Personal Status.

- Enables downstream AIMs to adapt reasoning and expressive stance based on the User’s posture.

7. Context Capture Multiplexing (CCX)

- Consolidates the Entity State extracted from the User’s captured visual representation and the Context Capture State with the aligned descriptors.

- Produces the final structured output of the CXC AIM.

- Enables external AIMs (Prompt Creation, Audio Spatial Reasoning, and Visual Spatial Reasoning) to consume directive‑aware context frames and A‑User Control to receive a report on the execution of the Directive.
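The synchronisation performed by Audio‑Visual Alignment (item 5 above) is not specified algorithmically in this section. As one possible illustration, the Python sketch below pairs each audio event with the nearest visual event in time, within a tolerance. The `Event` class, the `align` function, and the `tolerance` parameter are hypothetical and are not taken from the specification.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Event:
    # Hypothetical stand-in for an identified audio or visual object.
    label: str
    t: float  # capture time in seconds


def align(
    audio: list[Event],
    visual: list[Event],
    tolerance: float = 0.25,
) -> list[tuple[Event, Optional[Event]]]:
    """Pair each audio event with the closest visual event, if close enough."""
    pairs = []
    for a in audio:
        # Nearest visual event by absolute time difference (None if no visual events).
        nearest = min(visual, key=lambda v: abs(v.t - a.t), default=None)
        if nearest is not None and abs(nearest.t - a.t) <= tolerance:
            pairs.append((a, nearest))
        else:
            pairs.append((a, None))  # audio event with no visual anchor
    return pairs
```

Nearest-timestamp matching is only the simplest option; an implementation could equally align on spatial position or on the object types produced by AOI and VOI.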

Table 2 gives the AIMs composing the Context Capture (PGM-CXC) Composite AIM.

Table 2 – AIMs composing the Context Capture (PGM‑CXC) Composite AIM

| AIM | AIMs | Names | JSON |
|---|---|---|---|
| PGM‑CXC | | Context Capture | Link |
| | PGM‑ASD | Audio Scene Description | Link |
| | PGM‑VSD | Visual Scene Description | Link |
| | PGM‑AOI | Audio Object Identification | Link |
| | PGM‑VOI | Visual Object Identification | Link |
| | PGM‑AVA | Audio-Visual Alignment | Link |
| | PGM-ESE | Entity State Extraction | Link |
| | PGM-CCX | Context Capture Multiplexing | Link |

Table 3 defines the input and output data of the SubAIMs of the PGM-CXC AIM.

Table 3 – Input and output data of the PGM‑CXC AIM SubAIMs

| AIMs | Input | Output | To |
|---|---|---|---|
| Audio Scene Description | Audio Object | Audio Scene Descriptors | AOI, AVA |
| Visual Scene Description | Visual Object, 3D Model Object | Visual Scene Descriptors | VOI, AVA |
| Audio Object Identification | Audio Scene Descriptors | Audio Object Types | AVA |
| Visual Object Identification | Visual Scene Descriptors | Visual Object Types | AVA |
| Audio‑Visual Alignment | Audio Scene Descriptors, Audio Object Types, Visual Scene Descriptors, Visual Object Types, Context Capture Directive | Aligned Audio Scene Descriptors, Aligned Visual Scene Descriptors, Context Capture Directive, Context Capture Status | ESE |
| Entity State Extraction | Aligned Audio Scene Descriptors, Aligned Visual Scene Descriptors, Context Capture Directive, Context Capture Status | Entity State | CCX |
| Context Capture Multiplexing | Aligned Audio Scene Descriptors, Aligned Visual Scene Descriptors, Context Capture Status, Entity State | Context | PRC, ASR, VSR |
| | | Context Capture Status | AUC |
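The Input and Output columns of Table 3 imply an execution order for the SubAIMs. The Python sketch below transcribes those two columns and derives one valid order with the standard-library `graphlib` module. The short keys, the `TABLE_3` name, and the `execution_order` helper are illustrative assumptions; the "To" column is not used, and when a data item is re-emitted by a later SubAIM the sketch assumes the first producer in table order is the source.

```python
from graphlib import TopologicalSorter

# SubAIM -> (inputs, outputs), transcribed from the Input and Output columns.
TABLE_3 = {
    "ASD": ({"Audio Object"}, {"Audio Scene Descriptors"}),
    "VSD": ({"Visual Object", "3D Model Object"}, {"Visual Scene Descriptors"}),
    "AOI": ({"Audio Scene Descriptors"}, {"Audio Object Types"}),
    "VOI": ({"Visual Scene Descriptors"}, {"Visual Object Types"}),
    "AVA": (
        {"Audio Scene Descriptors", "Audio Object Types",
         "Visual Scene Descriptors", "Visual Object Types",
         "Context Capture Directive"},
        {"Aligned Audio Scene Descriptors", "Aligned Visual Scene Descriptors",
         "Context Capture Directive", "Context Capture Status"},
    ),
    "ESE": (
        {"Aligned Audio Scene Descriptors", "Aligned Visual Scene Descriptors",
         "Context Capture Directive", "Context Capture Status"},
        {"Entity State"},
    ),
    "CCX": (
        {"Aligned Audio Scene Descriptors", "Aligned Visual Scene Descriptors",
         "Context Capture Status", "Entity State"},
        {"Context", "Context Capture Status"},
    ),
}


def execution_order(table):
    """Return one valid SubAIM order in which every input is produced before use."""
    producer = {}
    for name, (_, outputs) in table.items():
        for item in outputs:
            producer.setdefault(item, name)  # first producer in table order wins
    # A SubAIM depends on the producers of its inputs; external inputs have none,
    # and a SubAIM that forwards one of its own inputs does not depend on itself.
    deps = {
        name: {producer[i] for i in inputs if i in producer and producer[i] != name}
        for name, (inputs, _) in table.items()
    }
    return list(TopologicalSorter(deps).static_order())
```

Any order returned places ASD and VSD before AOI and VOI, those before AVA, and AVA before ESE and CCX, which matches the numbering of the SubAIMs in the list above.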

Table 4 – External and Internal Data Types identified in Context Capture AIM

| Data Type | Definition |
|---|---|
| TextObject | Structured representation of user input expressed in written or transcribed text form. |
| AudioObject | Captured audio signals from the scene, covering speech, environmental sounds, and paralinguistic cues. |
| VisualObject | Visual signals from the scene, encompassing gestures, facial expressions, and environmental imagery. |
| 3DModelObject | Geometric and spatial data describing the environment, including structures, surfaces, and volumetric features. |
| AudioSceneDescriptors | Structured description of environmental audio features and sources. |
| VisualSceneDescriptors | Structured description of visual elements, geometry, and scene layout. |
| 3DModelSceneDescriptors | Structured description of 3D Model elements, geometry, and scene layout. |
| IdentifiedAudioObjects | Identified and classified discrete audio entities. |
| IdentifiedVisualObjects | Identified and classified discrete visual entities. |
| Identified3DModelObjects | Identified and classified discrete 3D Model entities. |
| AlignedAVDescriptors | Unified multimodal representation synchronising audio and visual streams. |
| EntityState | Representation of user’s overall cognitive, emotional, attentional, and temporal state. |
| Context | Time‑stamped snapshot integrating multimodal inputs into situational model of environment and User posture. |
| ContextCaptureDirective | Instruction provided by A-User Control. |
| ContextCaptureStatus | Metadata describing user presence, environmental conditions, and confidence measures. |
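To make the relationship between the output Data Types of Table 4 more concrete, the following Python sketch models `EntityState`, `ContextCaptureStatus`, and `Context` as dataclasses. The class names follow the table, but every field name and default value is an assumption inferred from the one-line definitions; the normative structure is given by the JSON Metadata, not by this sketch.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class EntityState:
    # One free-form label per state dimension named in Table 4 (assumed encoding).
    cognitive: str = "unknown"
    emotional: str = "unknown"
    attentional: str = "unknown"
    temporal: str = "unknown"


@dataclass
class ContextCaptureStatus:
    # Scene-level metadata reported to A-User Control (assumed fields).
    user_present: bool
    environmental_conditions: dict[str, str] = field(default_factory=dict)
    confidence: float = 0.0


@dataclass
class Context:
    # Time-stamped snapshot combining the Entity State with scene descriptors.
    entity_state: EntityState
    audio_scene_descriptors: dict
    visual_scene_descriptors: dict
    # Assumed convention: timezone-aware UTC capture time.
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
```

A snapshot could then be built as `Context(EntityState(emotional="calm"), {}, {})`, with the timestamp filled in at construction time.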

Table 5 maps CXC Inputs/Outputs to Unified Messages.

Table 5 – PGM-CXC Inputs/Outputs mapped to PGM-AUC Unified Messages

| CXC Data Name | Role | Origin / Destination | Unified Schema Mapping |
|---|---|---|---|
| Context Capture Directive | Input | A‑User Control | Directive → TargetAIM=CXC (AIMInstance); acquisition/framing parameters in Parameters and/or Constraints. |
| Text Object | Input | Captured stream | Aggregated into Context (PGM‑CXT) under InputChannels. |
| Audio Object | Input | Captured stream | Same as above; referenced in Context. |
| Visual Object | Input | Captured stream | Same as above; referenced in Context. |
| 3D Model Object | Input | Captured stream | Same as above; referenced in Context. |
| Context | Output | PRC, ASR, VSR | Status → Result (Context snapshot with AVSceneDescriptors + UserState); correlated via Envelope.CorrelationId. |
| Context Capture Status | Output | A‑User Control | Status → State, Progress, Summary, Result (scene-level metadata, confidence, presence). |
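The mapping in Table 5 can be sketched as a pair of Python helpers that build a Directive message and the Status message answering it. The key names `TargetAIM`, `Parameters`, `Constraints`, `State`, `Progress`, `Summary`, `Result`, and `Envelope.CorrelationId` come from the table; the nesting of those keys, the helper names, and the example values are assumptions, since the Unified Message schema itself is not reproduced in this section.

```python
import json
import uuid


def make_directive(parameters, constraints=None, correlation_id=None):
    """Build a Directive addressed to CXC (message layout is assumed)."""
    return {
        "Envelope": {"CorrelationId": correlation_id or str(uuid.uuid4())},
        "Directive": {
            "TargetAIM": "CXC",
            "Parameters": parameters,          # acquisition/framing parameters
            "Constraints": constraints or {},  # optional limits on acquisition
        },
    }


def make_status(directive, state, progress, summary, result):
    """Build the Status answering a Directive, reusing its CorrelationId."""
    return {
        "Envelope": {"CorrelationId": directive["Envelope"]["CorrelationId"]},
        "Status": {
            "State": state,
            "Progress": progress,
            "Summary": summary,
            "Result": result,  # e.g. scene-level metadata, confidence, presence
        },
    }


# Hypothetical round trip: A-User Control sends a Directive, CXC answers.
directive = make_directive({"ModalityPriority": ["audio", "visual"]})
status = make_status(directive, "Completed", 1.0, "Context captured",
                     {"UserPresent": True, "Confidence": 0.9})
print(json.dumps(status, indent=2))
```

Reusing the CorrelationId of the Directive in the Status is what lets A‑User Control match a report to the Directive that triggered it.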

5. JSON Metadata

https://schemas.mpai.community/PGM1/V1.0/AIMs/ContextCapture.json

6. Profiles

No Profiles.