“Digital human” has recently become a trendy expression and different meanings can be attached to it. MPAI says that it is “a digital object able to receive text/audio/video/commands (“Information”) and generate Information that is congruent with the received Information”.

MPAI has been developing several standards for “digital humans” and plans on extending them and developing more.

Let’s have an overview.

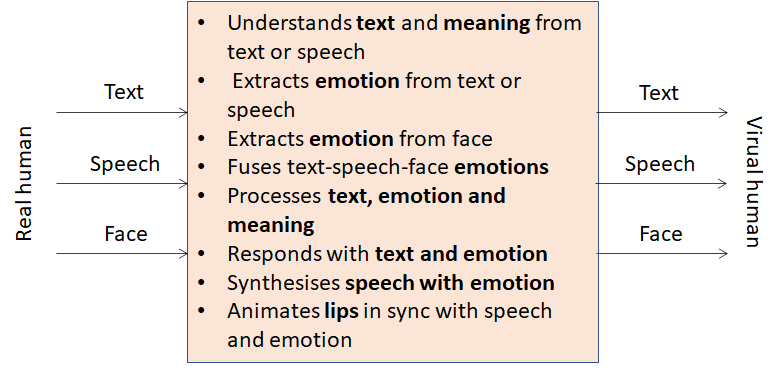

In Conversation with Emotion a digital human perceives text or speech from a human, together with video of that human. It then generates text or speech that is congruent with the content and emotion of the perceived media, and displays itself as an avatar whose lips move in sync with its speech and in accordance with the emotion embedded in the synthetic speech.

In Multimodal Question Answering a digital human perceives text or speech from a human asking a question about an object the human is holding, together with video of the human holding the object. In response it generates text or speech that answers the human's question and is congruent with the perceived media, including the emotional state of the human.

Adding an avatar whose lips move in sync with the generated speech could give a more satisfactory rendering of the speech generated by the digital human.

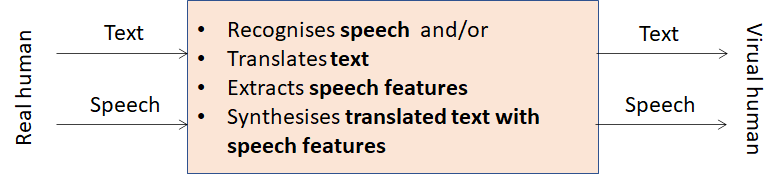

In Automatic Speech Translation a digital human is told to translate speech or text generated by a human into a specified language and, if the input is speech, whether or not to preserve the speech features of the input. The digital human then generates translated text if the input is text, or translated speech, with or without the input speech features, if the input is speech.

Adding an avatar whose lips move in sync with the generated speech and according to its embedded emotion could give a more satisfactory rendering of the digital human's speech.
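The input/output rule of Automatic Speech Translation can be sketched in a few lines of Python. This is only an illustration of the rule described above, not MPAI's interface: the names `TranslationRequest`, `TranslationResult` and `translate` are hypothetical, and the actual translation engines are passed in as caller-supplied functions.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class TranslationRequest:
    # Hypothetical container; field names are assumptions, not MPAI data types.
    target_language: str
    text: Optional[str] = None        # set when the input is text
    speech: Optional[bytes] = None    # set when the input is speech
    preserve_features: bool = False   # only meaningful for speech input


@dataclass
class TranslationResult:
    modality: str                     # "text" or "speech"
    features_preserved: bool
    payload: object


def translate(
    request: TranslationRequest,
    translate_text: Callable[[str, str], str],
    translate_speech: Callable[[bytes, str, bool], bytes],
) -> TranslationResult:
    """Text in gives text out; speech in gives speech out."""
    has_text = request.text is not None
    has_speech = request.speech is not None
    if has_text == has_speech:
        raise ValueError("provide exactly one of text or speech")
    if has_text:
        # Speech features do not apply to text input.
        out = translate_text(request.text, request.target_language)
        return TranslationResult("text", False, out)
    out = translate_speech(
        request.speech, request.target_language, request.preserve_features
    )
    return TranslationResult("speech", request.preserve_features, out)
```

The two engine functions are deliberately left abstract, so the sketch only captures which output modality follows from which input and when the "preserve features" instruction applies.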

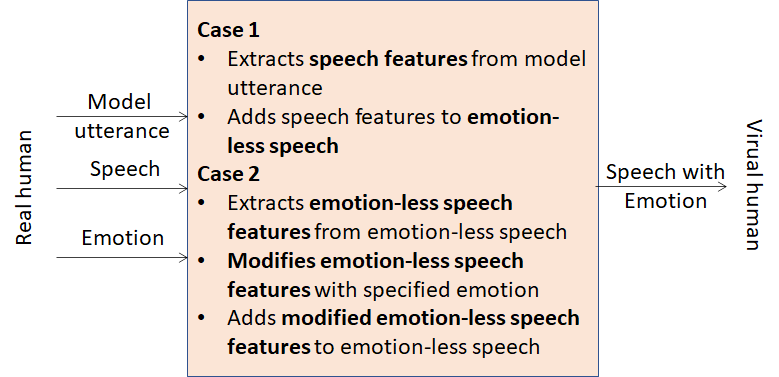

In Emotion Enhanced Speech a digital human is told to add an emotion to an emotion-less speech segment and is given one of the following:

- A model utterance: the digital human extracts and adds the speech features of the model utterance to the emotion-less speech segment.

- An emotion taken from the MPAI standard list of emotions: the digital human combines the speech features proper to the selected emotion with the speech features of the emotion-less speech, and adds the resulting features to the emotion-less speech.

In both cases an avatar can be animated by the emotion-enhanced speech.
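The two ways of specifying the emotion can be sketched as follows. Everything here is an assumption made for illustration: speech features are modelled as a plain dictionary, `EMOTION_FEATURES` is a placeholder table and not the MPAI standard list of emotions, and the "combination" of features is taken to be a simple average because the text above does not say how features are combined.

```python
from typing import Callable, Dict, Optional

Features = Dict[str, float]

# Placeholder values; NOT the MPAI standard list of emotions.
EMOTION_FEATURES: Dict[str, Features] = {
    "happy": {"pitch": 1.2, "rate": 1.1},
    "sad": {"pitch": 0.8, "rate": 0.9},
}


def combine(a: Features, b: Features) -> Features:
    """Assumed rule: average key by key; a key present in only one
    input keeps its single value."""
    keys = set(a) | set(b)
    return {k: (a.get(k, b.get(k)) + b.get(k, a.get(k))) / 2 for k in keys}


def features_to_add(
    neutral: Features,
    extract: Callable[[bytes], Features],
    model_utterance: Optional[bytes] = None,
    emotion: Optional[str] = None,
) -> Features:
    """Return the features to be added to the emotion-less speech."""
    if (model_utterance is None) == (emotion is None):
        raise ValueError("give exactly one of model_utterance or emotion")
    if model_utterance is not None:
        # Mode 1: take the features from the model utterance.
        return extract(model_utterance)
    # Mode 2: combine the selected emotion's features with the neutral ones.
    return combine(EMOTION_FEATURES[emotion], neutral)
```

Applying the returned features to the audio itself is left out, since that step depends on the speech synthesis technology in use.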

MPAI has more digital human use cases under development:

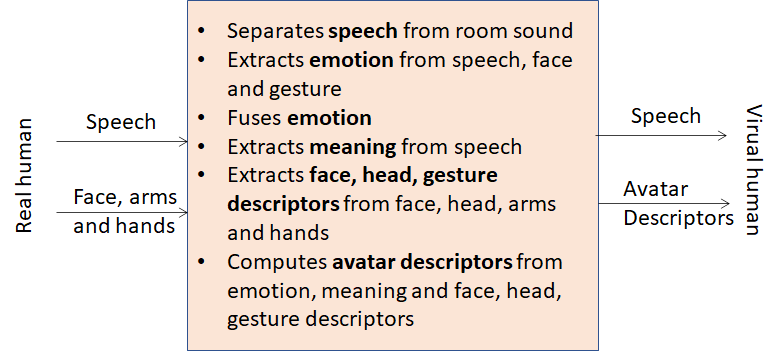

- Human-CAV Interaction: A digital human (a face) speaks to a group of humans while gazing at the human it is responding to.

- Mixed-reality Collaborative Spaces: A digital human (a torso) utters the speech of a participant in a virtual videoconference while its torso moves in sync with the participant’s torso.

- Conversation about a scene: A digital human (a face) converses with a human about the objects in a scene the human is part of, gazing at the human or at an object.