MPAI propounds the development of Collaborative Immersive Laboratories (CIL)

Collaborative Immersive Laboratory (XRV-CIL) is a project designed to enable researchers in network-connected physical venues, equipped with devices that create an immersive virtual environment, to manipulate and visualise laboratory data together with researchers at other locations who share the same immersive experience at the same time.

XR Venue (MPAI-XRV) is an MPAI project addressing a multiplicity of use cases enabled by Extended Reality (XR) and enhanced by Artificial Intelligence (AI) technologies. MPAI-XRV specifies design methods for AI Workflows and AI Modules that automate complex processes in a variety of application domains. Venue is used as a synonym for Real and Virtual Environment. CIL is one of the XRV projects.

One use case for CIL would be to work with medical data such as scans to discover patterns within cellular data to facilitate therapy identification as part of the following workflow:

- Start from a file (e.g., a LIF file for data from a confocal microscope) that contains slices of a 3D Object (+ time) produced by machines from different manufacturers, and enable real-time navigation of the 3D Object starting from the slices.

- Use an AI-trained filter to remove the noise, i.e., information found in the slices that is not part of the scanned object.

- Preserve the slices by applying specific processes, e.g., dehydration.

- Enhance some specific features of the object by using appropriate contrasting agents, e.g., monoclonal antibodies.

- Use the slices in sufficient number to train a Machine:

  - To count the cells in a human tissue from different organs, different living bodies, and anatomical features presenting different health conditions.

  - To identify the typology and functions of the cells caused by the influence of genomics and environment, i.e., phenotyping.

- Request the trained Machine to produce “inferences” used to count and identify the cells having specified features.

- Generate statistics of the inferences produced by the Machine.

- A human navigates the cleaned (noise-filtered) slices as an object and verifies whether the inferences of the Machine can be trusted.

- Plot a trajectory of possible outcomes along multiple decision paths, based on how the living body changes over time, to guide proactive decisions on habits and therapeutic interventions.

- After a certain time, redo steps 1 to 9.
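The filtering, counting, and statistics steps of the workflow above can be sketched as a small pipeline. This is only an illustration under loud assumptions: the threshold filter stands in for the AI-trained noise filter, the connected-region counter stands in for the trained Machine, and all names (`filter_noise`, `count_cells`, `summarise`, `run_workflow`) are hypothetical and not part of any MPAI specification.

```python
from statistics import mean

Slice = list[list[float]]  # one 2D slice of intensities, assumed to lie in [0, 1]


def filter_noise(slice_: Slice, floor: float = 0.2) -> Slice:
    """Stand-in for the AI-trained filter: zero out intensities below `floor`."""
    return [[v if v >= floor else 0.0 for v in row] for row in slice_]


def count_cells(slice_: Slice) -> int:
    """Stand-in for the trained Machine: count 4-connected non-zero regions."""
    rows, cols = len(slice_), len(slice_[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if slice_[r][c] == 0.0 or seen[r][c]:
                continue
            count += 1  # new region found; flood-fill it
            seen[r][c] = True
            stack = [(r, c)]
            while stack:
                y, x = stack.pop()
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and not seen[ny][nx] and slice_[ny][nx] != 0.0:
                        seen[ny][nx] = True
                        stack.append((ny, nx))
    return count


def summarise(counts: list[int]) -> dict[str, float]:
    """Statistics of the inferences: number of slices, total and mean count."""
    return {"slices": len(counts), "total": sum(counts), "mean": mean(counts)}


def run_workflow(slices: list[Slice]):
    cleaned = [filter_noise(s) for s in slices]   # noise-filtered slices
    counts = [count_cells(s) for s in cleaned]    # one inference per slice
    return cleaned, counts, summarise(counts)


slices = [
    [[0.9, 0.1, 0.0],
     [0.8, 0.0, 0.7],
     [0.0, 0.0, 0.6]],
    [[0.0, 0.1, 0.0],
     [0.0, 0.5, 0.0],
     [0.1, 0.0, 0.0]],
]
cleaned, counts, stats = run_workflow(slices)
print(counts)  # [2, 1]
print(stats)   # {'slices': 2, 'total': 3, 'mean': 1.5}
```

The cleaned slices are returned alongside the counts so that a human can still navigate them and check the inferences, as the workflow requires.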

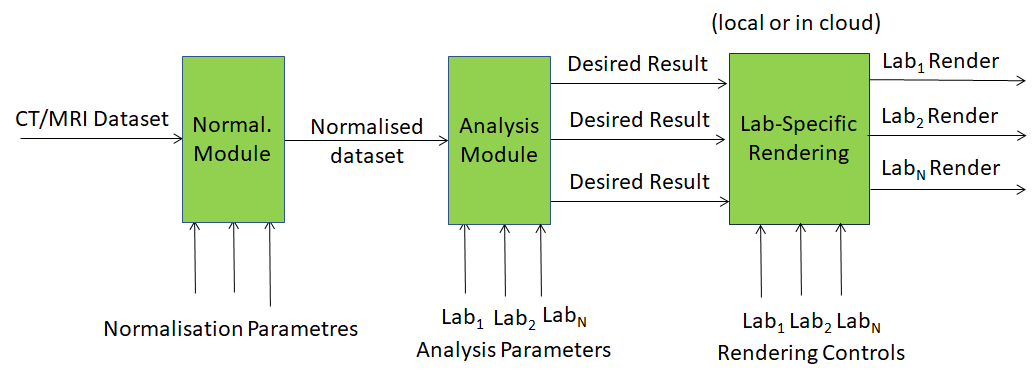

For instance, Figure 1 shows a CT or MRI dataset being normalised and analysed, and the result being rendered with, e.g., a renderer that is common to the participating labs. Each Lab may add annotations to the dataset or apply rendering controls that enhance appropriate parts of the rendered dataset.

Figure 1 – An example of data analysed and rendered in an XRV-CIL

Figure 1 represents a specific case of the full XRV-CIL project while Figure 2 represents the more general case.

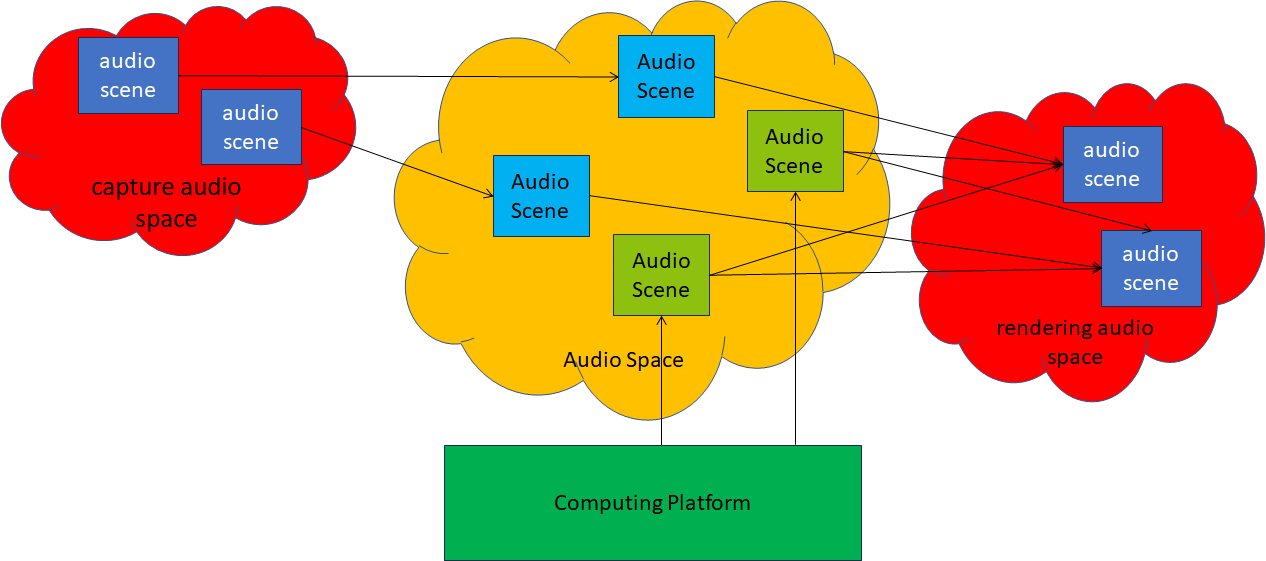

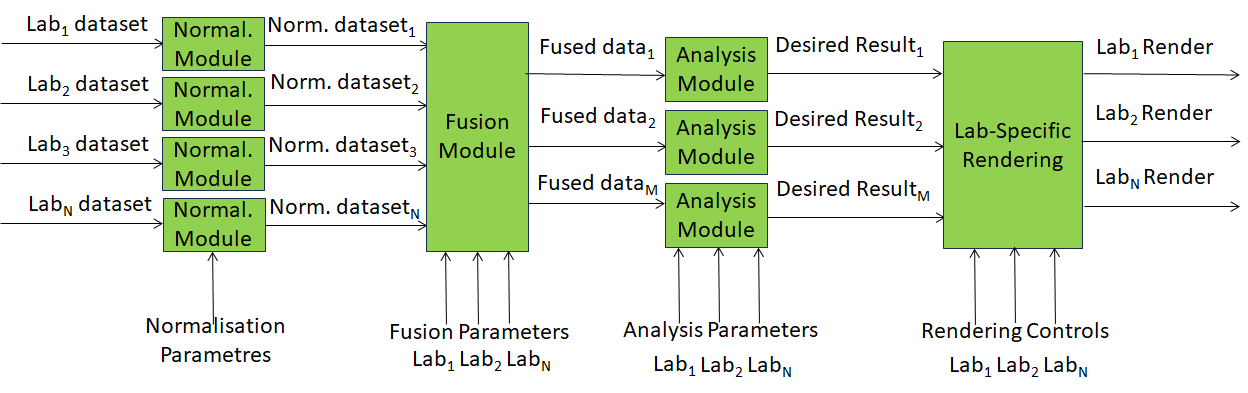

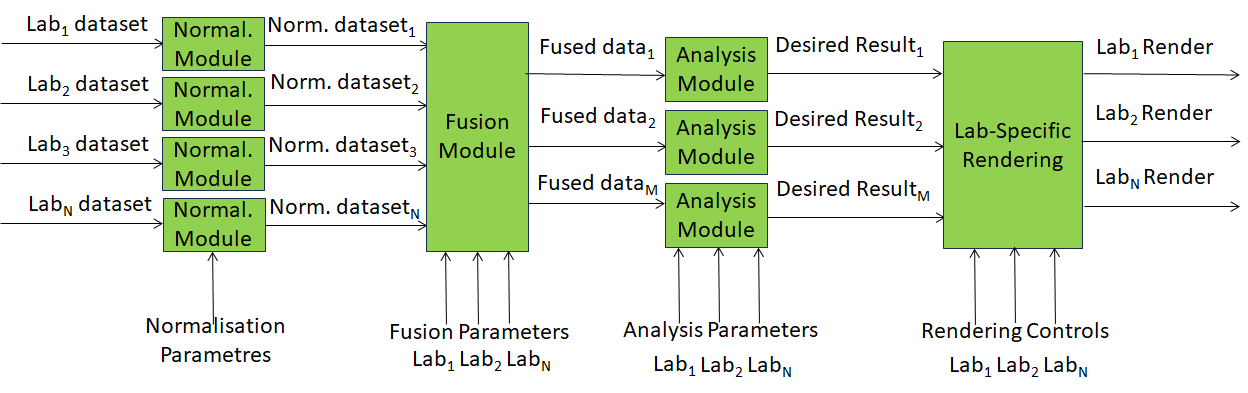

Figure 2 – The multi-technology, multi-location XRV-CIL case

Let us assume that there are N geographically distributed labs providing datasets related to a particular application domain, acquired with different technologies at different times (each lab may provide more than one dataset). Technology-specific AI Modules normalise the datasets. A Fusion AI Module controlled by Fusion Parameters from each lab provides M Fused Data (the number of Fused Data is independent of the number of input datasets).

Fused Data are processed by Analysis AI Modules driven by Analysis Parameters possibly coming from one or more labs. They produce Desired Results which are then Rendered specifically for each Lab either locally or in the cloud.
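The data flow just described (normalise, fuse, analyse, render) can be sketched as follows. This is a minimal illustration, not the MPAI-XRV design: the `Dataset` type, the technology labels `tech_a` and `tech_b`, the weighted-average fusion, and the threshold analysis are all assumptions chosen to keep the example short. It also assumes that all datasets have the same number of samples and that each set of fusion weights sums to a positive value.

```python
from dataclasses import dataclass


@dataclass
class Dataset:
    lab: str
    technology: str
    values: list[float]


def min_max(values: list[float]) -> list[float]:
    """Hypothetical normaliser: rescale to [0, 1] using the observed range."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [(v - lo) / span if span else 0.0 for v in values]


def full_scale_255(values: list[float]) -> list[float]:
    """Hypothetical normaliser: rescale by an assumed full-scale value of 255."""
    return [v / 255 for v in values]


# One technology-specific normaliser per (hypothetical) acquisition technology.
NORMALISERS = {"tech_a": min_max, "tech_b": full_scale_255}


def normalise(ds: Dataset) -> Dataset:
    return Dataset(ds.lab, ds.technology, NORMALISERS[ds.technology](ds.values))


def fuse(datasets: list[Dataset], fusion_params: list[dict[str, float]]) -> list[list[float]]:
    """Return M fused data, one per weight set; M need not equal len(datasets)."""
    length = len(datasets[0].values)
    fused = []
    for weights in fusion_params:
        total = sum(weights.get(d.lab, 0.0) for d in datasets)
        fused.append([
            sum(weights.get(d.lab, 0.0) * d.values[i] for d in datasets) / total
            for i in range(length)
        ])
    return fused


def analyse(fused: list[list[float]], threshold: float) -> list[int]:
    """Stand-in analysis: count samples above `threshold` in each fused datum."""
    return [sum(v > threshold for v in f) for f in fused]


def render(results: list[int], lab: str) -> str:
    """Stand-in renderer: a per-lab text view of the results."""
    return f"{lab}: " + ", ".join(str(r) for r in results)


datasets = [
    Dataset("lab1", "tech_a", [0.0, 5.0, 10.0]),
    Dataset("lab2", "tech_b", [255.0, 127.5, 0.0]),
]
fusion_params = [{"lab1": 1.0, "lab2": 1.0}, {"lab1": 1.0}]  # M = 2 fused data
fused = fuse([normalise(d) for d in datasets], fusion_params)
results = analyse(fused, threshold=0.4)
print(fused)                    # [[0.5, 0.5, 0.5], [0.0, 0.5, 1.0]]
print(render(results, "lab1"))  # lab1: 3, 2
```

Expressing the Fusion Parameters as a list of per-lab weight sets makes the point of the text explicit: the number of fused outputs is set by the parameters, not by the number of input datasets.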

The model of Figure 2 is applicable to various domains for scientific, industrial, and educational applications such as:

- Medical

- Anthropological

- Multi- and hyper-spectral Imaging

- Spectroscopy

- Chemistry

- Geology and Material Science

- Non-destructive testing

- Oceanography

- Astronomy

XRV-CIL promises to dramatically improve the way data is collaboratively acquired, processed, and shared among laboratories.

MPAI, the international, unaffiliated, non-profit organisation developing standards for AI-based data coding, might contribute to the areas of dataset normalisation, specification of the input/output and metadata of processing elements, and interaction protocols with rendered processing results. MPAI could also contribute to the identification of specific AI technologies to process datasets, e.g., the cell counting mentioned above.