Highlights

- MPAI presents its latest published standards

- Second consecutive Creative AI Trophy awarded to MPAI Founding Member Audio Innova

- MPAI approves two new standards

- A look at the upcoming AI Health standard

- Meetings in the coming February-March meeting cycle

MPAI presents its latest published standards

In the last few months, MPAI has approved and published seven standards, some new and some extensions of existing standards. MPAI is now planning a series of online presentations to give an opportunity to learn about the new MPAI standards.

The online presentations will be made in the week of 11-15 March according to the following schedule (all times UTC):

| Standard | March day and time (UTC) | Registration |
| --- | --- | --- |
| AI Framework (MPAI-AIF) | 11 T16:00 | Link |
| Context-based Audio Enhancement (MPAI-CAE) | 12 T17:00 | Link |
| Connected Autonomous Vehicle (MPAI-CAV) – Architecture | 13 T15:00 | Link |
| Human and Machine Communication (MPAI-HMC) | 13 T16:00 | Link |
| Multimodal Conversation (MPAI-MMC) | 12 T14:00 | Link |
| MPAI Metaverse Model (MPAI-MMM) – Architecture | 15 T15:00 | Link |
| Object and Scene Description (MPAI-OSD) | 12 T16:00 | Link |
| Portable Avatar Format (MPAI-PAF) | 14 T14:00 | Link |

The presentations – scheduled to last 40 min – will introduce scope, features, and technologies in each standard, as well as the new web-based access to MPAI standards. Discussions will follow.

Second consecutive Creative AI Trophy awarded to MPAI Founding Member Audio Innova

On February 8, 2024, MPAI founding member Audio Innova secured its second consecutive victory by being awarded the ‘Creative AI Trophy’, the “Palme d’Or” of Artificial Intelligence at the World Artificial Intelligence Cannes Festival (WAICF). This prestigious event stands as the foremost global gathering focused on AI. The award-winning project, titled ‘Now and then (and tomorrow): preserving, re-activating and sharing interactive multimedia artistic installations by means of AI,’ is anchored on MPAI-CAE Audio Recording Preservation (ARP) technology. It also introduces an innovative multilayer model for metadata organization, harnessing AI to revive interactive artistic installations. This art form is known for its inherently brief lifespan, making the preservation and reactivation efforts particularly significant.

The winning team, coordinated by Sergio Canazza, includes Anna Zuccante, Giada Zuccolo, Alessandro Fiordelmondo, and Cristina Paulon.

The project was selected from among 45 finalists, themselves shortlisted from a larger field of candidates, to “get into the spotlight the next AI Superstar”.

The jury was composed of ten of the most prominent experts in the field of artificial intelligence, including Adam Cheyer, founder of Siri, Sentient, Viv Labs, and Change.org; Jean-Gabriel Ganascia from Sorbonne University; Hiroaki Kitano, CEO of Sony AI; Antonio Krüger, director of the German Research Center for Artificial Intelligence; Francesca Rossi from Watson IBM Research Lab, New York, and AI Ethics Global Leader; and Frederic Werner, head of the Consumer Engagement division at ITU.

Figure 1 – Two Audio Innova personnel holding the Award

The new version of the MPAI-CAE specification, which includes Audio Recording Preservation, is available online.

MPAI approves two new standards

MPAI-41 has just approved two new standards. One is the new version 2.1 of Context-based Audio Enhancement (MPAI-CAE). The standard joins the growing list of standards that have undergone a revision to extend their scope:

- AI Framework (MPAI-AIF) now at V2.0

- Compression and Understanding of Industrial Data now at V1.1

- Governance of the MPAI Ecosystem now at V1.1

- Multimodal Conversation now at V2.1

- MPAI Metaverse Model – Architecture now at V1.1

- Portable Avatar Format now at V1.1

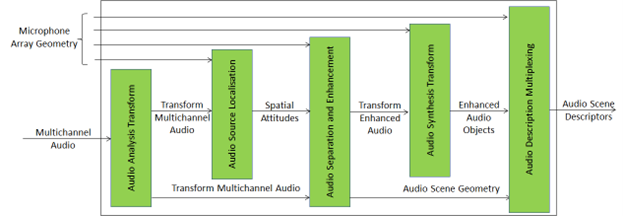

MPAI-CAE V2.1 is now accessible online in the new web-based modality. The standard is a collection of use cases implemented as AI Workflows (four in the case of MPAI-CAE). Each use case is specified by drawing from a pool of AI Modules that are potentially shared with other MPAI standards. The Audio Scene Description Composite AIM specified by MPAI-CAE V2.1 is depicted in Figure 2. Go to the MPAI-CAE web site and enjoy the experience.

Figure 2 – The Audio Scene Description Composite AIM
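The idea of AI Workflows assembled from a shared pool of AI Modules can be illustrated with a minimal Python sketch. Everything in it is hypothetical and chosen only for illustration: the module names (`noise_reduction`, `gain`), the dictionary-based data format, and the `run_workflow` helper are assumptions, not part of MPAI-CAE or of any MPAI API.

```python
from typing import Callable, Dict, List

# Hypothetical shared pool of AI Modules (AIMs): name -> processing function.
# Each AIM takes a data dictionary and returns a new one.
AIM = Callable[[dict], dict]
AIM_POOL: Dict[str, AIM] = {}


def register_aim(name: str) -> Callable[[AIM], AIM]:
    """Add a function to the shared pool under the given name."""
    def decorator(fn: AIM) -> AIM:
        AIM_POOL[name] = fn
        return fn
    return decorator


@register_aim("noise_reduction")
def noise_reduction(data: dict) -> dict:
    # Placeholder logic: zero out samples below a small threshold.
    samples = [0.0 if abs(s) < 0.1 else s for s in data["samples"]]
    return {**data, "samples": samples}


@register_aim("gain")
def gain(data: dict) -> dict:
    # Placeholder logic: double the amplitude of every sample.
    return {**data, "samples": [2.0 * s for s in data["samples"]]}


def run_workflow(aim_names: List[str], data: dict) -> dict:
    """Run a workflow, i.e. an ordered list of AIM names, over the data."""
    for name in aim_names:
        data = AIM_POOL[name](data)
    return data


# Two workflows drawing on the same pool; "gain" is shared by both.
enhance_workflow = ["noise_reduction", "gain"]
gain_only_workflow = ["gain"]

result = run_workflow(enhance_workflow, {"samples": [0.05, 0.5, -0.25]})
print(result["samples"])
```

Because a workflow in this sketch is only a list of names, a module registered once can be reused by any number of workflows, which mirrors the sharing of AI Modules across use cases described above.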

The second standard – Human and Machine Communication (MPAI-HMC) – is “new”. Its scope was described in detail in the last issue of this newsletter. It supports new forms of communication between Entities – humans present in a real space or represented in a virtual space as speaking avatars and acting in a context – using text, speech, face, gesture, and the audio-visual scene in which they are embedded. MPAI-HMC integrates a wide range of technologies available from existing MPAI standards.

With these achievements, MPAI can now boast a total of eleven standards approved – seven of which have been extended at least once – and six projects under way. Please have a look at the linked list of MPAI standards and projects.

This is a good reason for you to join MPAI now to contribute to the management of MPAI Assets and the development of existing projects or to propose new projects.

A look at the upcoming AI Health standard

MPAI has identified Health Data as an area where AI can successfully be applied to achieve better results and has been working on the Artificial Intelligence for Health (MPAI-AIH) project. In the second half of 2023 it published a Call for Technologies and received responses that it is currently using to draft a Technical Specification.

Health is a vast space, and MPAI-AIH addresses one segment of it. Specifically, MPAI-AIH addresses the interoperability-related components of an AI Health Platform that comprises:

- Multiple Front Ends (handsets), one for each participating individual. Front Ends process individual participants’ health data using AI Modules provided by the Back End, upload the data to the Back End, and assign the Back End rights to process it. Rights granting is managed by smart contracts.

- A Back End, which stores Health Data, processes Data using appropriate AI Modules and AI Workflows, and allows Third Parties to process health data based on the rights granted by the originators. The Back End also collects individual participants’ Neural Network Models that have been continuously trained while processing Data, updates the Models using Federated Learning technologies, and redistributes the updated Models to the participants.
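The model update step performed by the Back End can be sketched as follows. The newsletter only states that "Federated Learning technologies" are used; this example assumes plain federated averaging (a weighted mean of the participants' model weights), which is one common choice and not necessarily what MPAI-AIH will specify. The function name, the weight layout, and the sample counts are all hypothetical.

```python
from typing import Dict, List

# Assumed model representation: parameter name -> flat list of weights.
Weights = Dict[str, List[float]]


def federated_average(models: List[Weights], sample_counts: List[int]) -> Weights:
    """Combine Front End models into one, weighting each by its sample count.

    Assumes every model has the same parameter names and sizes.
    """
    total = sum(sample_counts)
    averaged: Weights = {}
    for name in models[0]:
        size = len(models[0][name])
        averaged[name] = [
            sum(m[name][i] * n for m, n in zip(models, sample_counts)) / total
            for i in range(size)
        ]
    return averaged


# Two hypothetical Front End models, trained locally on 1 and 3 samples.
front_end_models = [
    {"layer": [1.0, 2.0]},
    {"layer": [3.0, 6.0]},
]
updated = federated_average(front_end_models, sample_counts=[1, 3])
print(updated)  # {'layer': [2.5, 5.0]}
```

In this sketch only model weights travel to the Back End, and the combined model is what would be redistributed to the participants; rights management through smart contracts is outside its scope.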

What is being standardised?

- The Back End architecture and the functional requirements of its services.

- The API.

- The Smart Contract formats.

- Systems Data Types.

Figure 3 depicts the architecture of the AIH Platform.

Figure 3 – AIH Platform Reference Model

MPAI-AIH is expected to reach standard status in a few months.

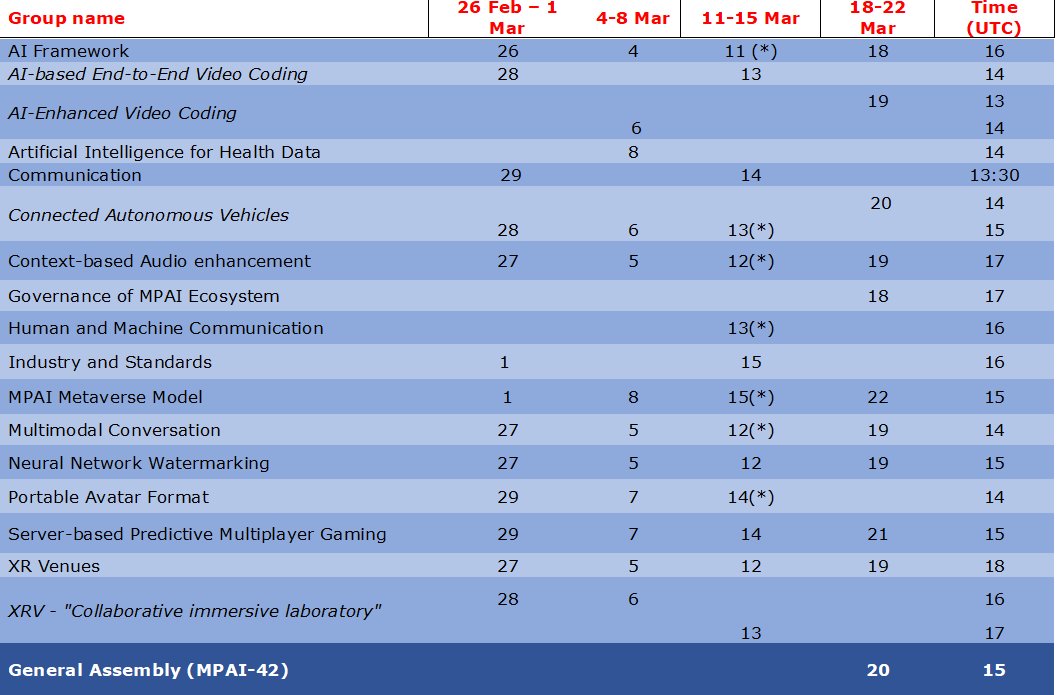

Meetings in the coming February-March meeting cycle

Meetings indicated in normal font are open to MPAI members only. Legal entities and representatives of academic departments supporting the MPAI mission and able to contribute to the development of standards for the efficient use of data can become MPAI members.

Meetings in italic are open to non-members. If you wish to attend, send an email to secretariat@mpai.community.

This newsletter serves the purpose of keeping the expanding and diverse MPAI community connected.

We are keen to hear from you, so don’t hesitate to give us your feedback.