Highlights

- Online presentations of one new and four revised Technical Specifications

- MPAI approves 4 revised standards in final form

- MPAI approves the first formal metaverse standard

- A contribution to industry: Television Media Analysis reference software

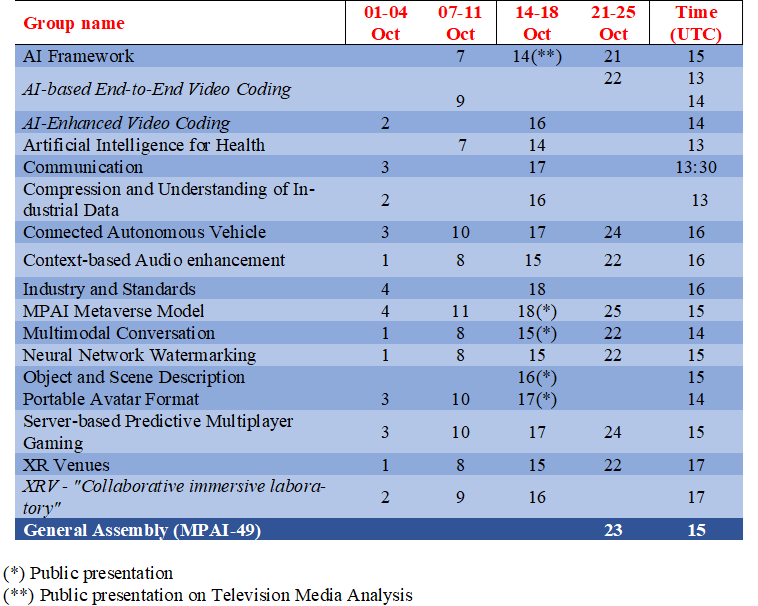

- Meetings in the coming October meeting cycle

Online presentations of Standards and Reference Software

Stay up to date with the developments in MPAI by attending the online presentations of the latest standards, versions, and reference software!

| Type | Name | Acronym | Vers. | Time/UTC |
|---|---|---|---|---|
| Reference Software | Television Media Analysis | OSD-TMA | V1.0 | 14 Oct @15 |
| Standard | Multimodal Conversation | MPAI-MMC | V2.2 | 15 Oct @14 |
| Standard | Object and Scene Description | MPAI-OSD | V1.1 | 16 Oct @15 |
| Standard | Portable Avatar Format | MPAI-PAF | V1.2 | 17 Oct @14 |
| Standard | MPAI Metaverse Model | MPAI-MMM | ARC V1.2, TEC V1.0 | 18 Oct @15 |

MPAI approves 4 revised standards in final form

The MPAI standardisation process envisages five main steps:

- Preparation of Functional and Commercial Requirements

- Call for Technologies

- Standard Development

- Publication for Community Comments

- Final Publication.

The 48th MPAI General Assembly (MPAI-48) has approved four Standards in final form: three of those listed in the table above (MPAI-MMC, MPAI-OSD, and MPAI-PAF) and the Data Types, Formats, and Attributes (MPAI-TFA) Standard. Here is a brief description of the main features of these Standards.

The combined Technical Specification: MPAI Metaverse Model (MPAI-MMM) – Architecture (MMM-ARC) V1.2 and Technical Specification: MPAI Metaverse Model (MPAI-MMM) – Technologies (MMM-TEC) V1.0 specify five types of Processes operating in an M-Instance, thirty Actions that Processes can perform, and 65 Data Types and their Qualifiers that Processes can Act on to achieve client and M-Instance interoperability. The next article provides more details on the MPAI-MMM Standard, the first ever published standard designed for metaverse interoperability.

Technical Specification: Multimodal Conversation (MPAI-MMC) V2.2 specifies 23 Data Types that enable more human-like and content-rich forms of human-machine conversation, applies them to eight use cases in different domains, and specifies 23 AI Modules. MPAI-MMC reuses Data Types and AI Modules from other MPAI standards. MPAI-MMC V2.2 specifies the technologies supporting several use cases: improved human-machine conversation and text/speech translation, the full specification of the connected autonomous vehicle subsystem for human-vehicle interaction, and a virtual meeting secretary that automatically provides a textual summary with emotional annotations.

Technical Specification: Object and Scene Description (MPAI-OSD) V1.1 specifies 27 Data Types enabling the digital representation of spatial information of Audio and Visual Objects and Scenes, applies them to the Television Media Analysis (OSD-TMA) use case, and specifies 15 AI Modules. MPAI-OSD reuses Data Types and AI Modules from other MPAI standards. MPAI-OSD V1.1 provides fully revised specifications of Visual and Audio-Visual Object and Scene Geometry and Descriptors, and also specifies some Data Types of general use, such as annotations to Objects and Scenes.

Technical Specification: Portable Avatar Format (MPAI-PAF) V1.2 specifies five Data Types enabling a receiving party to render a digital human as intended by the sending party, applies them to the Avatar-Based Videoconference Use Case, and specifies 13 AI Modules. MPAI-PAF reuses Data Types and AI Modules from other MPAI standards. The Portable Avatar data type has been enriched with new features required by applications such as Speech Model for Text-to-Speech translation.

Technical Specification: Data Types, Formats, and Attributes (MPAI-TFA) V1.0 specifies Qualifiers, a Data Type containing Sub-Types, Formats, and Attributes associated with "media" Data Types (currently Text, Speech, Audio, and Visual), that facilitate or enable the operation of an AI Module receiving a Data Type instance. During the "Community Comments" phase, MPAI developed Qualifiers for two new Data Types: 3D Model (required by Portable Avatar Format) and Audio-Visual (required by Television Media Analysis and other applications). MPAI-48 has also published Version 1.1 of the MPAI-TFA standard for Community Comments.

MPAI approves the first formal metaverse standard

MPAI was probably the first standards organisation to open a formal "metaverse standard" project and is definitely the first to deliver a standard that enables independent metaverse implementations.

The story started at the beginning of 2022, and the first two deliverables, two Technical Reports on Functionalities and on Functional Profiles, came 12 and 15 months later. The 48th MPAI General Assembly has now put a first seal on this work by approving two interconnected standards, on Architecture and on Technologies.

This is a brief account of the contents of the MPAI Metaverse Model standard.

MPAI-MMM, in the following also called MMM, indicates the combined MMM-ARC and MMM-TEC Technical Specifications for M-Instance interoperability. It defines a metaverse instance (M-Instance) as a platform offering a subset or all of the following functions:

- Senses Data from U-Locations, i.e., locations in the Universe (the real world).

- Transforms the sensed Data into processed Data.

- Produces one or more M-Environments (i.e., subsets of an M-Instance) populated by Objects that can be one of the following:

  - Digitised: sensed from the Universe, possibly animated by activities in the Universe.

  - Virtual: imported or internally generated, possibly autonomous or driven by activities in the Universe.

  - Mixed.

- Acts on Objects from the M-Instance, or potentially from other M-Instances, on its own initiative or driven by the actions of humans or machines in the Universe.

- Affects U- and/or M-Environments using Objects in ways that are:

  - Consistent with the goals set for the M-Instance.

  - Within the Capabilities of the M-Instance (M-Capabilities).

  - According to the Rules of the M-Instance.

  - Respecting applicable laws and regulations.

The functionalities of an M-Instance are provided by a set of Processes performing Actions on Items (i.e., instances of MMM-specified Data Types that have been Identified in the M-Instance) with various degrees of autonomy and interaction. An implementation may merge some MMM-specified Processes into one or split an MMM-specified Process into more than one process provided that the resulting system behaves as specified by MMM.

Some Processes exercise their activities strictly inside the M-Instance, while others have various degrees of interaction with Data sensed from or actuated in the Universe. Processes may be characterised as:

- Services providing specific functionalities, such as content authoring.

- Devices connecting the Universe to the M-Instance and the M-Instance to the Universe.

- Apps running on Devices.

- Users representing and acting on behalf of human entities residing in the Universe. A User (but other types of Process as well) may be rendered as a Persona, i.e., a static or dynamic avatar.

Processes perform their activities by communicating with other Processes or by performing Actions on Items. Examples of Items are Asset, 3D Model, Audio Object, Audio-Visual Scene, etc. MMM specifies the Functional Requirements of some 30 Actions, i.e., Functionalities that are performed by Processes, and some 60 Items.

A Process holds a list of Process Actions, each of which expresses an Action that the Process has performed or may perform on certain Items at certain M- or U-Locations (areas of the virtual and real world, respectively) during certain Times.

For convenience, prefixes are added to Action names:

- MM indicates Actions performed inside the M-Instance, e.g., MM-Animate is the Action that uses a stream to animate a 3D Model with a Spatial Attitude (defined as Position, Orientation, and their velocities and accelerations).

- MU indicates Actions in the M-Instance influencing the Universe, e.g., MU-Actuate is the Action of a Device rendering one of its Items to a U-Location as Media with a Spatial Attitude.

- UM indicates Actions in the Universe influencing the M-Instance, e.g., UM-Embed is the Action of placing an Item, produced by Identifying a scene UM-Captured at a U-Location, at an M-Location with a Spatial Attitude.

Some Actions, such as UM-Embed, are Composite Actions, i.e., combinations of Basic Actions.
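The prefix convention and the notion of a Composite Action can be sketched in a few lines. This is a minimal illustration, not MMM code: the helper names are hypothetical, and the decomposition of UM-Embed into UM-Capture, Identify, and MM-Embed is inferred from the UM-Embed definition above rather than copied from the specification.

```python
# Prefix meanings follow the newsletter text; helper names are hypothetical.
PREFIX_SCOPE = {
    "MM": "inside the M-Instance",
    "MU": "M-Instance influencing the Universe",
    "UM": "Universe influencing the M-Instance",
}

# Assumed decomposition of a Composite Action into Basic Actions,
# inferred from the UM-Embed definition (capture, identify, embed).
COMPOSITE_ACTIONS = {
    "UM-Embed": ["UM-Capture", "Identify", "MM-Embed"],
}


def action_scope(action: str) -> str:
    """Return the scope encoded by an Action's prefix, if it has one."""
    prefix, sep, _ = action.partition("-")
    if sep and prefix in PREFIX_SCOPE:
        return PREFIX_SCOPE[prefix]
    return "no prefix"


def expand(action: str) -> list[str]:
    """Recursively expand a Composite Action into its Basic Actions."""
    if action not in COMPOSITE_ACTIONS:
        return [action]  # already a Basic Action
    basic: list[str] = []
    for part in COMPOSITE_ACTIONS[action]:
        basic.extend(expand(part))
    return basic
```

For example, `expand("UM-Embed")` yields `["UM-Capture", "Identify", "MM-Embed"]`, and `action_scope("MU-Actuate")` reports an Action of the M-Instance influencing the Universe.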

Rights are a basic notion underpinning the operation of MMM and are defined as a list of combinations of Process Action and Level. A Process Action is an Item that includes an Action, the Items on which it can be performed, the M-Locations or U-Locations where it can be performed, and the Times during which it can be performed. Levels indicate whether a Right is Internal, i.e., assigned by the M-Instance at Registration time; Acquired, i.e., obtained on the initiative of the Process; or Granted to the Process by another Process. The way an M-Instance verifies the compliance of a Process with its Rights is not specified. An M-Instance may decide to verify the Activity Data (the log of all performed Process Actions) based on claims by another Process, to make random verifications, or not to make any verification at all.
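Rights as combinations of Process Action and Level map naturally onto plain records. The sketch below assumes, purely for illustration, that Times are a numeric `[start, end)` interval and that Items and Locations are opaque strings; the class and function names are hypothetical and do not reproduce the MMM JSON syntax. Because MMM leaves verification unspecified, `is_permitted` shows only one check an M-Instance might choose to run.

```python
from dataclasses import dataclass
from enum import Enum


class Level(Enum):
    INTERNAL = "Internal"  # assigned by the M-Instance at Registration
    ACQUIRED = "Acquired"  # obtained on the Process's own initiative
    GRANTED = "Granted"    # granted by another Process


@dataclass(frozen=True)
class ProcessAction:
    action: str
    items: frozenset[str]      # Items the Action may be performed on
    locations: frozenset[str]  # M-Locations or U-Locations
    start: float               # assumption: Times as a [start, end) interval
    end: float


@dataclass(frozen=True)
class Right:
    process_action: ProcessAction
    level: Level


def is_permitted(rights: list[Right], action: str, item: str,
                 location: str, time: float) -> bool:
    """True if some Right covers this Action on this Item, Location, and Time."""
    for right in rights:
        pa = right.process_action
        if (pa.action == action and item in pa.items
                and location in pa.locations and pa.start <= time < pa.end):
            return True
    return False
```

The Level is carried along but does not change the check here, mirroring the text, where Levels record how a Right was obtained rather than what it permits.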

A Process can request another Process to perform an Action on its behalf by using the Inter-Process Protocol. If the requested Process is in another M-Instance, the requesting Process uses the Inter-M-Instance Protocol to ask a Resolution Service of its own M-Instance to establish communication with a Resolution Service in the other M-Instance. MMM-Script, whose syntax is specified in Backus-Naur Form, enables efficient communication between Processes.

Figure 1 gives a summary view of these basic MMM notions.

Figure 1 – Inter-Process/M-Instance Communication
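The routing decision described above can be sketched as follows: a request stays within the Inter-Process Protocol when both Processes share an M-Instance, and otherwise passes through the Resolution Services of both M-Instances. This is a hypothetical model for illustration only; `MInstance`, `route`, and the hop labels are assumptions, and no MMM-Script or protocol message format is shown.

```python
from dataclasses import dataclass, field


@dataclass
class MInstance:
    name: str
    processes: set[str] = field(default_factory=set)


def route(source_instance: MInstance, target_instance: MInstance,
          source: str, target: str) -> list[str]:
    """Return the hops a request from `source` to `target` travels through."""
    if source not in source_instance.processes:
        raise ValueError(f"{source} is not in {source_instance.name}")
    if target not in target_instance.processes:
        raise ValueError(f"{target} is not in {target_instance.name}")
    if source_instance is target_instance:
        # Same M-Instance: direct request via the Inter-Process Protocol.
        return [source, target]
    # Different M-Instances: Inter-M-Instance Protocol between the two
    # Resolution Services.
    return [source,
            f"{source_instance.name}/Resolution Service",
            f"{target_instance.name}/Resolution Service",
            target]
```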

To be admitted to an M-Instance, a human may be requested to provide a subset of their Personal Profile and to Transact a Value (i.e., an Amount in a Currency). The M-Instance then grants certain Rights to identified Processes of the Registered human, including the import of Personae (i.e., their avatars) for their Users.

The fast development of certain technology areas is one of the issues that has prevented the development of standards for metaverse interoperability. MMM deals with this issue by providing the JSON syntax and semantics for all Items. When needed, the JSON syntax references Qualifiers, MPAI-defined Data Types that provide additional information to the Data Type in the form of:

- Sub-Type (e.g., the colour space of a Visual Data Type).

- Format (e.g., the compression or the file/streaming format of Speech).

- Attributes (e.g., the Binaural Cues of an Audio Object).

Thanks to Qualifiers, an M-Instance or a Client receiving a Visual Object can determine whether it has the technology required to process that Visual Object or should instead rely on a Conversion Service to obtain a version of the Object suitable to the M-Instance or Client.

An M-Instance can be a costly undertaking if all technologies required by the MMM Technical Specification need to be implemented even for M-Instances of limited scope. MMM Profiles are introduced to facilitate the take-off of the metaverse. A Profile only includes a subset of Actions and Items that are expected to be needed by a sizeable number of applications. MMM defines four Profiles:
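The decision a receiver makes from a Qualifier can be sketched as below. The JSON field names (`ItemType`, `Qualifier`, `SubType`, `ColourSpace`, `Format`, `Attributes`) are hypothetical and only loosely echo the Sub-Type, Format, and Attributes structure described above; they are not the JSON syntax specified by MMM or MPAI-TFA.

```python
import json

# Hypothetical Item instance; field names are illustrative only.
ITEM_JSON = """
{
  "ItemType": "VisualObject",
  "Qualifier": {
    "SubType": {"ColourSpace": "YCbCr"},
    "Format": "H.264",
    "Attributes": {}
  }
}
"""


def plan_processing(item: dict, formats: set, colour_spaces: set) -> str:
    """Decide from the Qualifier alone whether conversion is needed."""
    qualifier = item["Qualifier"]
    fmt = qualifier["Format"]
    colour_space = qualifier["SubType"]["ColourSpace"]
    if fmt in formats and colour_space in colour_spaces:
        return "process locally"
    # Otherwise a Conversion Service would be asked for a usable version.
    return f"ask Conversion Service for {fmt}/{colour_space}"


item = json.loads(ITEM_JSON)
print(plan_processing(item, {"H.264", "HEVC"}, {"YCbCr"}))  # process locally
print(plan_processing(item, {"AV1"}, {"YCbCr"}))  # ask Conversion Service for H.264/YCbCr
```

The point of the design is that the receiver can decide before touching the payload, since everything it needs is in the Qualifier.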

- Baseline Profile enables a human equipped with a Device supporting the Baseline Profile to engage in basic applications such as lectures, meetings, and hang-outs.

- Finance Profile enables a human equipped with a Device supporting the Finance Profile to perform trading activities.

- Management Profile includes the functionalities of the Baseline and Finance Profiles and enables a controlled ecosystem with more advanced functionalities.

- High Profile enables all the functionalities of the Management Profile with a few additional functionalities of its own.
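The nesting of the four Profiles can be modelled as set inclusion. In this sketch the Action names are placeholders: which Actions and Items each Profile actually contains is defined in MMM-TEC, not here. `covering_profiles` is a hypothetical helper listing the Profiles that include everything an application needs.

```python
# Placeholder Action names; the real Profile contents are in MMM-TEC.
BASELINE = frozenset({"baseline-action-1", "baseline-action-2"})
FINANCE = frozenset({"finance-action-1"})
# Management includes Baseline and Finance plus its own functionalities.
MANAGEMENT = BASELINE | FINANCE | frozenset({"management-action-1"})
# High includes Management plus a few functionalities of its own.
HIGH = MANAGEMENT | frozenset({"high-action-1"})

PROFILES = {"Baseline": BASELINE, "Finance": FINANCE,
            "Management": MANAGEMENT, "High": HIGH}


def covering_profiles(required: set) -> list[str]:
    """Profiles whose Actions include everything an application requires."""
    return [name for name, actions in PROFILES.items() if required <= actions]
```

An application needing both baseline and trading functionalities, for instance, is covered only by the Management and High Profiles.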

MPAI developed some use cases in the two MPAI-MMM Technical Reports published in 2023. They were used to develop the MMM-ARC and MMM-TEC Technical Specifications. MMM includes several Verification Use Cases that use MMM-Script to verify that the currently specified Actions and Items fully support those identified Use Cases.

A contribution to industry: Television Media Analysis reference software

The Television Media Analysis (OSD-TMA) Reference Software is an implementation of the AI Framework (MPAI-AIF) standard. It is composed of an AI Workflow that takes a television program as input and produces a set of Audio-Visual Scene Descriptors (OSD-AVS) that include:

- Relevant Audio, Visual, or Audio-Visual Objects

- IDs of speakers and IDs of faces with their spatial positions

- Text from utterances

The AV Scene Descriptors are "snapshot" descriptors of a "static" scene at a particular time. Scene Descriptors are stacked in Audio-Visual Event Descriptors (OSD-AVE), thus providing a description of the program of interest.

OSD-TMA assumes that there is only one active speaker at a time.
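How snapshot Scene Descriptors stack into an Event Descriptor, and how the single-speaker assumption simplifies the result, can be sketched with two small classes. The field names and the time-ordering check are assumptions for illustration; they are not the OSD-AVS and OSD-AVE JSON formats.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AVSceneDescriptor:
    """Snapshot of a static scene at one time (hypothetical fields)."""
    time: float
    objects: list[str] = field(default_factory=list)
    speaker_id: Optional[str] = None  # single active speaker, per OSD-TMA
    face_ids: dict[str, tuple[float, float]] = field(default_factory=dict)
    text: str = ""


@dataclass
class AVEventDescriptor:
    """Time-ordered stack of Scene Descriptors describing a program."""
    scenes: list[AVSceneDescriptor] = field(default_factory=list)

    def push(self, scene: AVSceneDescriptor) -> None:
        if self.scenes and scene.time < self.scenes[-1].time:
            raise ValueError("Scene Descriptors must be added in time order")
        self.scenes.append(scene)

    def transcript(self) -> list[tuple[str, str]]:
        """(speaker, text) pairs; unambiguous because one speaker is active."""
        return [(s.speaker_id, s.text) for s in self.scenes
                if s.speaker_id and s.text]
```

Because each snapshot carries at most one `speaker_id`, every utterance can be attributed without any overlap resolution.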

Figure 2 depicts the Reference Model of TV Media Analysis.

Figure 2 – Reference Model of OSD-TMA

The complete analysis of the workflow is available.

The OSD-TMA Reference Software:

- Receives any input Audio-Visual file that can be demultiplexed by FFmpeg.

- Uses FFmpeg to extract:

  - A WAV file (uncompressed audio).

  - A video file.

- Produces a description of the input Audio-Visual file represented by Audio-Visual Event Descriptors.
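The demultiplexing step can be approximated with two standard FFmpeg invocations. This is a sketch, not the reference software's actual commands: the output file names, the 16-bit PCM codec, and the Matroska (`.mkv`) container for the video-only file are assumptions. The flags used (`-i`, `-y`, `-vn`, `-an`, `-acodec`, `-c:v copy`) are standard FFmpeg options.

```python
import shutil
import subprocess
from pathlib import Path


def demux_commands(src: str, out_dir: str = ".") -> list[list[str]]:
    """Build FFmpeg commands extracting an uncompressed WAV and a video-only file."""
    stem = Path(src).stem
    out = Path(out_dir)
    wav = str(out / f"{stem}.wav")          # hypothetical naming scheme
    video = str(out / f"{stem}_video.mkv")  # Matroska accepts most codecs
    return [
        # -vn drops video; pcm_s16le writes uncompressed 16-bit PCM audio.
        ["ffmpeg", "-y", "-i", src, "-vn", "-acodec", "pcm_s16le", wav],
        # -an drops audio; -c:v copy keeps the video stream without re-encoding.
        ["ffmpeg", "-y", "-i", src, "-an", "-c:v", "copy", video],
    ]


def demux(src: str, out_dir: str = ".") -> None:
    """Run both commands; requires the ffmpeg binary on PATH."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg not found on PATH")
    for cmd in demux_commands(src, out_dir):
        subprocess.run(cmd, check=True)
```

Building the command lists separately from running them keeps the step easy to inspect and test without FFmpeg installed.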

The current OSD-TMA implementation does not support Auxiliary Text.

The OSD-TMA uses the following software components:

- RabbitMQ is a service that enables and manages asynchronous, decoupled communication between the controller and OSD-TMA AIMs using message queues.

- Controller is an MPAI-AIF function but is adapted for use in OSD-TMA. This service starts, stops and removes all OSD-TMA AIMs automatically using Docker in Docker (DinD): the controller lets only one dockerized OSD-TMA AIM run at any time, which saves computing resources. Docker is used to automate the deployment of all OSD-TMA AIMs so that they can run in different environments.

- Portainer is a service that helps manage Docker containers, images, volumes, networks and stacks using a Graphical User Interface (GUI). Here it is mainly used to manage two containerized services: RabbitMQ and Controller.

- Docker Compose builds the Docker images of all OSD-TMA AIMs and of the Controller by reading a YAML file called compose.yml. Docker Compose helps run RabbitMQ and Controller as Docker containers.

- Python code manages the MPAI-AIF operation but is adapted for use in OSD-TMA.
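The Controller's policy of letting only one AIM run at any time can be illustrated without Docker or RabbitMQ. In this hypothetical sketch each AIM is a plain Python callable standing in for a container, and a `deque` stands in for the message queue; it assumes a linear workflow, whereas the real OSD-TMA workflow need not be strictly sequential, and none of this is the Controller's actual code.

```python
from collections import deque
from typing import Callable

# Hypothetical stand-in for an AIM: takes the workflow payload, returns it enriched.
AIM = Callable[[dict], dict]


def run_workflow(steps: list[tuple[str, AIM]], payload: dict) -> tuple[dict, list[str]]:
    """Run (name, AIM) pairs strictly one at a time, in workflow order."""
    pending = deque(steps)  # stand-in for the message queue
    log: list[str] = []
    while pending:
        name, aim = pending.popleft()
        log.append(f"start {name}")   # the Controller would start the container here
        payload = aim(payload)        # blocking call: no other AIM runs meanwhile
        log.append(f"remove {name}")  # and would remove it before the next one starts
    return payload, log
```

Serialising the AIMs trades latency for a lower peak demand on memory and GPU, which is the resource saving the text describes.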

The OSD-TMA code includes compose.yml and the code for the Controller.

compose.yml starts RabbitMQ, not Portainer; Portainer installation is described separately.

The OSD-TMA code is released with a demo. A recorded demo and the saved JSON of the resulting OSD-AVE are also available.

The OSD-TMA Reference Software has been developed by the MPAI AI Framework Development Committee (AIF-DC), in particular by Francesco Gallo (EURIX) and Mattia Bergagio (EURIX).

The implementation includes the AI Modules listed in the following table. Some of these AIMs require a GPU for high performance; however, the software can also operate without a GPU.

| AIM | Name | Library | Dataset |
|---|---|---|---|
| MMC-ASR | Speech recognition | whisper-timestamped | |
| MMC-SIR | Speaker identification | SpeechBrain | VoxCeleb1 |
| MMC-AUS | Audio segmentation | pyannote.audio | |
| OSD-AVE | Event descriptors | | |
| OSD-AVS | Scene descriptors | | |
| OSD-TVS | TV splitting | ffmpeg | |
| OSD-VCD | Visual change | PySceneDetect | |
| PAF-FIR | Face recognition | DeepFace | Labeled Faces in the Wild |

Note that the Software is released with the BSD-3-Clause licence to provide a working Implementation of OSD-TMA, not a ready-to-use product. MPAI disclaims its suitability for any other purposes and does not guarantee that it is secure.

Note that acceptance of licences from the respective repositories may be needed for some AIMs. More details at this link.

Meetings in the coming October meeting cycle

This newsletter serves the purpose of keeping the expanding and diverse MPAI community connected.

We are keen to hear from you, so don’t hesitate to give us your feedback.