1 Scope of One-to-Many Speech Translation

2 Reference Architecture of One-to-Many Speech Translation

3 I/O Data of One-to-Many Speech Translation

4 Functions of AI Modules of One-to-Many Speech Translation

5 I/O Data of AI Modules of One-to-Many Speech Translation

6 Specification of One-to-Many Speech Translation AIW, AIMs, and JSON Metadata

1 Scope of One-to-Many Speech Translation

The goal of the One-to-Many Speech Translation (MMC-MST) Use Case is to enable one person speaking his or her language to broadcast to two or more audience members, each listening and responding in a different language, presented as speech or text. If the desired output is speech, users can specify whether their speech features (voice colour, emotional charge, etc.) should be preserved in the translated speech.

The flow of control (from Recognised Text to Translated Text to Output Speech) is identical to that of the Unidirectional case. However, rather than one such flow, multiple paired flows are provided – the first pair from language A to language B and B to A; the second from A to C and C to A; and so on.
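The paired flows described above can be enumerated with a short sketch. The function name `paired_flows` and the single-letter language labels are illustrative assumptions, not part of the specification.

```python
from typing import List, Tuple


def paired_flows(source: str, requested: List[str]) -> List[Tuple[str, str]]:
    """Return (from_language, to_language) flows, two per requested language.

    Hypothetical helper: it only illustrates the pairing A->B / B->A,
    A->C / C->A described in the text.
    """
    flows: List[Tuple[str, str]] = []
    for target in requested:
        if target == source:
            continue  # the speaker's own language needs no translation flow
        flows.append((source, target))  # e.g. A -> B
        flows.append((target, source))  # e.g. B -> A
    return flows


print(paired_flows("A", ["B", "C"]))
# [('A', 'B'), ('B', 'A'), ('A', 'C'), ('C', 'A')]
```

Each tuple stands for one Recognised Text to Translated Text to Output Speech flow, so two requested languages yield four flows.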

Depending on the value of Input Selector (text or speech):

- Input Text in Language A is translated into Translated Text in all Requested Languages and pronounced as Speech in each of them.

- The Speech features (voice colour, emotional charge, etc.) in Language A are preserved in all Requested Languages.

2 Reference Architecture of One-to-Many Speech Translation

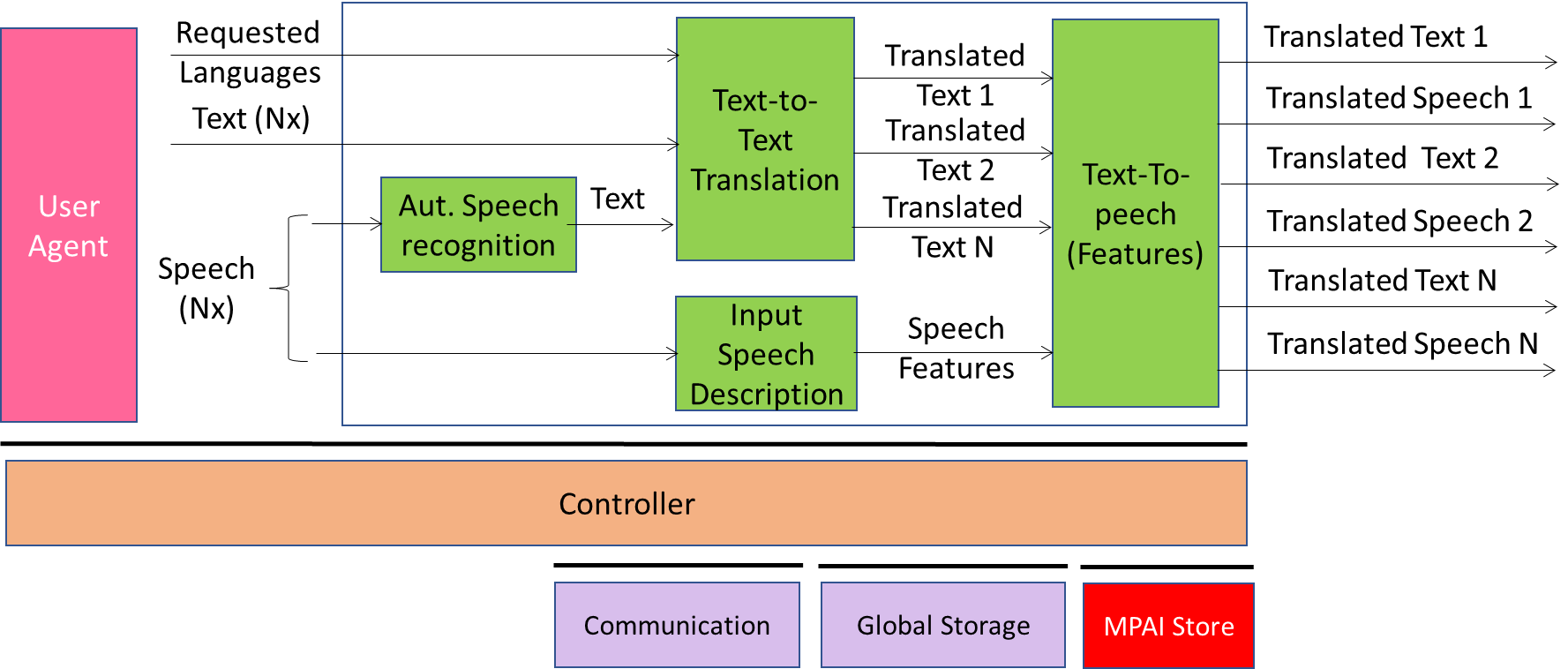

Figure 1 depicts the AIMs and the data exchanged between AIMs.

Figure 1 – Reference Model of One-to-Many Speech Translation (MST)

3 I/O Data of One-to-Many Speech Translation

The input and output data of the One-to-Many Speech Translation Use Case are given by Table 1:

Table 1 – I/O Data of One-to-Many Speech Translation

| Input | Descriptions |
|---|---|
| Input Selector | Determines whether: (1) the input will be Text or Speech; (2) the Input Speech features are preserved in the Output Speech. |
| Language Preferences | User-specified input language and translated languages. |
| Input Speech | Speech produced by a human desiring translation and interpretation in a specified set of languages. |
| Input Text | Alternative textual source information. |

| Output | Descriptions |
|---|---|
| Translated Speech | Speech translated into the Requested Languages. |
| Translated Text | Text translated into the Requested Languages. |
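As an illustration, the input data of Table 1 could be modelled as plain data types. This is a minimal sketch: all class and field names (`InputKind`, `InputSelector`, `LanguagePreferences`, `TranslationRequest`) and the validation rules are assumptions made for this example and are not defined by the specification.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class InputKind(Enum):
    """Hypothetical encoding of the text/speech choice in Input Selector."""
    TEXT = "text"
    SPEECH = "speech"


@dataclass
class InputSelector:
    kind: InputKind
    preserve_speech_features: bool = False  # second role of Input Selector


@dataclass
class LanguagePreferences:
    input_language: str
    requested_languages: List[str]


@dataclass
class TranslationRequest:
    selector: InputSelector
    languages: LanguagePreferences
    input_speech: Optional[bytes] = None  # raw audio; format is not specified here
    input_text: Optional[str] = None

    def validate(self) -> None:
        """Check that the payload matches the Input Selector (assumed rules)."""
        if self.selector.kind is InputKind.SPEECH and self.input_speech is None:
            raise ValueError("Input Selector is speech but Input Speech is missing")
        if self.selector.kind is InputKind.TEXT and self.input_text is None:
            raise ValueError("Input Selector is text but Input Text is missing")
        if not self.languages.requested_languages:
            raise ValueError("at least one Requested Language is needed")


request = TranslationRequest(
    selector=InputSelector(InputKind.TEXT),
    languages=LanguagePreferences("en", ["it", "ko"]),
    input_text="Hello",
)
request.validate()  # passes silently for a consistent request
```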

4 Functions of AI Modules of One-to-Many Speech Translation

Table 2 gives the functions of One-to-Many Speech Translation AIMs.

Table 2 – Functions of One-to-Many Speech Translation AI Modules

| AIM | Functions |
|---|---|
| Automatic Speech Recognition | Recognises Speech |
| Text-to-Text Translation | Translates Recognised Text |
| Input Speech Description | Extracts Speech Features |
| Text-to-Speech (Features) | Synthesises Translated Text adding Speech Features |

5 I/O Data of AI Modules of One-to-Many Speech Translation

Table 3 gives the I/O Data of the AI Modules.

Table 3 – AI Modules of One-to-Many Speech Translation

| AIM | Receives | Produces |
|---|---|---|
| Automatic Speech Recognition | Input Speech Segment | Recognised Text |
| Text-to-Text Translation | Text input | Translated Texts in the Requested Languages. |
| Input Speech Description | Input Speech | Speaker-specific Speech Descriptors. |
| Text-to-Speech (Features) | 1. Translated Texts 2. Speech Descriptors (based on Input Selector) | Speech Segments in the Desired Languages. |
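The way the four AIMs could be chained is sketched below. Every function here is a hypothetical stub standing in for an AIM (the stubs treat audio as UTF-8 bytes and mark translations with a language tag), so the code only illustrates the data flow between the modules, not any real recognition, translation, or synthesis.

```python
from typing import Dict, List, Optional, Tuple


def automatic_speech_recognition(speech: bytes) -> str:
    # Stub: pretend the audio bytes already contain the transcript.
    return speech.decode("utf-8")


def text_to_text_translation(text: str, languages: List[str]) -> Dict[str, str]:
    # Stub: tag the text with each requested language instead of translating.
    return {lang: f"[{lang}] {text}" for lang in languages}


def input_speech_description(speech: bytes) -> Dict[str, str]:
    # Stub: return fixed speaker-specific descriptors.
    return {"voice": "speaker-1"}


def text_to_speech(text: str, descriptors: Optional[Dict[str, str]]) -> bytes:
    # Stub: prefix the text with the voice used for synthesis.
    voice = descriptors["voice"] if descriptors else "default"
    return f"<{voice}> {text}".encode("utf-8")


def one_to_many(
    input_kind: str,                 # "speech" or "text" (assumed encoding)
    preserve_features: bool,
    requested_languages: List[str],
    input_speech: Optional[bytes] = None,
    input_text: Optional[str] = None,
) -> Tuple[Dict[str, str], Dict[str, bytes]]:
    """Return (Translated Text, Translated Speech), keyed by language."""
    descriptors = None
    if input_kind == "speech":
        if input_speech is None:
            raise ValueError("speech selected but no Input Speech given")
        text = automatic_speech_recognition(input_speech)
        if preserve_features:
            descriptors = input_speech_description(input_speech)
    else:
        if input_text is None:
            raise ValueError("text selected but no Input Text given")
        text = input_text

    translated = text_to_text_translation(text, requested_languages)
    speech = {lang: text_to_speech(t, descriptors) for lang, t in translated.items()}
    return translated, speech


texts, audio = one_to_many("speech", True, ["it", "ko"], input_speech=b"hello")
print(texts["it"])   # [it] hello
print(audio["ko"])   # b'<speaker-1> [ko] hello'
```

In this sketch the Speech Descriptors are passed to the synthesis step only when the input is speech and feature preservation is requested, which is one reading of "based on Input Selector" in Table 3.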

6 Specification of One-to-Many Speech Translation AIW, AIMs, and JSON Metadata

Table 4 – AIW, AIMs, and JSON Metadata

| AIW | AIMs | Name | JSON |
|---|---|---|---|
| MMC-MST | | One-to-Many Speech Translation | X |
| – | MMC-ASR | Automatic Speech Recognition | X |
| – | MMC-TTT | Text-to-Text Translation | X |
| – | MMC-ISD | Input Speech Description | X |
| – | MMC-TTS | Text-to-Speech | X |