
| 1 Functions | 2 Reference Model | 3 I/O Data |

| 4 Functions of AI Modules | 5 I/O Data of AI Modules | 6 AIW, AIMs, and JSON Metadata |

| 7 Reference Software | 8 Conformance Testing | 9 Performance Assessment |

1 Functions

In the Conversation with Emotion (MMC-CWE) Use Case, a machine responds to a human’s textual and/or vocal utterance in a manner consistent with the human’s utterance and emotional state, as detected from the human’s text, speech, or face. The machine responds using text, synthetic speech, and a face whose lip movements are synchronised with the synthetic speech and the synthetic machine emotion.

2 Reference Model

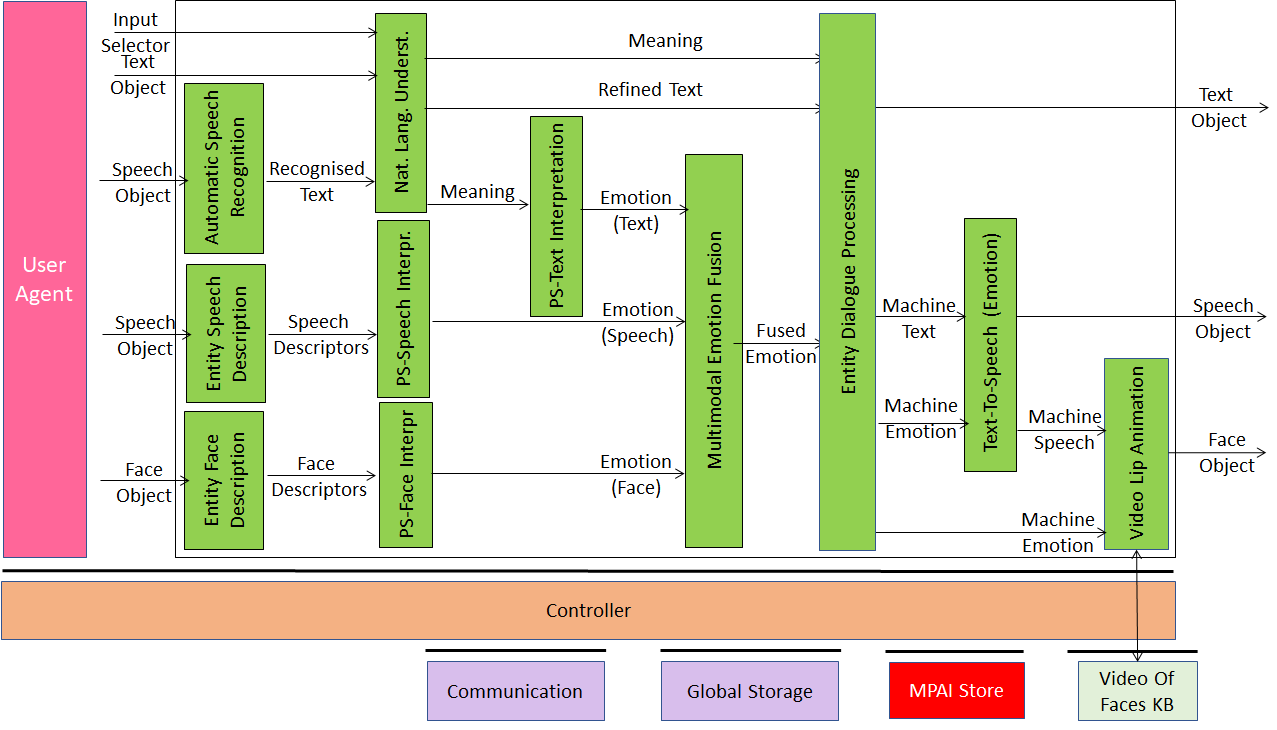

Figure 1 gives the Reference Model of Conversation With Emotion including the input/output data, the AIMs, the AIM topology, and the data exchanged between and among the AIMs.

Figure 1 – Reference Model of Conversation With Emotion

The operation of Conversation with Emotion develops as follows:

- Automatic Speech Recognition produces Recognised Text.

- Input Speech Description and PS-Speech Interpretation produce Emotion (Speech).

- Input Face Description and PS-Face Interpretation produce Emotion (Face).

- Natural Language Understanding refines Recognised Text and produces Meaning.

- Input Text Description and PS-Text Interpretation produce Emotion (Text).

- Multimodal Emotion Fusion AIM fuses all Emotions into the Fused Emotion.

- The Entity Dialogue Processing AIM produces a reply based on the Fused Emotion and Meaning.

- The Text-To-Speech (Emotion) AIM produces Output Speech from Text with Emotion.

- The Lips Animation AIM animates the lips of a Face drawn from the Video of Faces KB consistently with the Output Speech and the Output Emotion.
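The data flow described in the list above can be sketched as a chain of function calls. This is only an illustrative sketch, not an MPAI reference implementation: the `run_cwe` function, the `CweOutput` class, the dictionary keys, and the stub AIMs are all hypothetical names, only the speech-input path is covered, and PS-Text Interpretation is assumed to consume the Meaning produced by Natural Language Understanding, as Table 2 indicates.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class CweOutput:
    """Hypothetical container for the three workflow outputs."""
    machine_text: str
    output_speech: Any
    output_face: Any


def run_cwe(speech: Any, face: Any, aims: Dict[str, Callable[..., Any]]) -> CweOutput:
    """Call the AIMs in the order of the list above (speech-input path only)."""
    recognised_text = aims["asr"](speech)
    emotion_speech = aims["ps_speech"](aims["speech_description"](speech))
    emotion_face = aims["ps_face"](aims["face_description"](face))
    refined_text, meaning = aims["nlu"](recognised_text)
    emotion_text = aims["ps_text"](meaning)
    fused_emotion = aims["fusion"](emotion_text, emotion_speech, emotion_face)
    machine_text, machine_emotion = aims["dialogue"](refined_text, meaning, fused_emotion)
    output_speech = aims["tts"](machine_text, machine_emotion)
    output_face = aims["lips"](output_speech, machine_emotion)
    return CweOutput(machine_text, output_speech, output_face)


# Trivial stand-ins (assumptions) so the wiring can be exercised without real models.
STUB_AIMS = {
    "asr": lambda speech: "i lost my keys",
    "speech_description": lambda speech: {"source": "speech"},
    "face_description": lambda face: {"source": "face"},
    "ps_speech": lambda descriptors: "sad",
    "ps_face": lambda descriptors: "sad",
    "nlu": lambda text: (text.capitalize(), {"intent": "report_loss"}),
    "ps_text": lambda meaning: "sad",
    "fusion": lambda text_e, speech_e, face_e: speech_e,
    "dialogue": lambda text, meaning, emotion: ("Sorry to hear that.", "compassionate"),
    "tts": lambda text, emotion: f"<speech:{emotion}:{text}>",
    "lips": lambda speech, emotion: f"<face:{emotion}:{speech}>",
}

result = run_cwe(b"speech-bytes", b"face-bytes", STUB_AIMS)
print(result.machine_text)
```

Passing the AIMs as a dictionary of callables keeps the wiring separate from any particular model, which mirrors the idea that each AIM can be replaced independently.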

3 I/O Data

The input and output data of the Conversation with Emotion Use Case are:

Table 1 – I/O Data of Conversation with Emotion

| Input | Description |
|---|---|
| Input Selector | Data determining the use of Speech vs Text. |
| Text Object | Text typed by the human as an additional information stream or as a replacement of the speech, depending on the value of Input Selector. |
| Speech Object | Speech of the human having a conversation with the machine. |
| Face Object | Visual information of the Face of the human having a conversation with the machine. |

| Output | Description |
|---|---|
| Text Object | Text of the Speech produced by the Machine. |
| Speech Object | Synthetic Speech produced by the Machine. |
| Face Object | Video of a Face whose lip movements are synchronised with the Output Speech and the synthetic machine emotion. |
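As a rough illustration of how the Input Selector could govern which text reaches the dialogue stage, the sketch below models the workflow inputs as a small data class. The names `InputSelector`, `CweInput`, and `text_for_dialogue`, as well as the two selector values, are assumptions made for this example; the actual Selector format is defined elsewhere in the specification.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class InputSelector(Enum):
    """Hypothetical two-valued selector; the real encoding may differ."""
    SPEECH = 0
    TEXT = 1


@dataclass
class CweInput:
    selector: InputSelector
    text: Optional[str] = None      # Text Object
    speech: Optional[bytes] = None  # Speech Object
    face: Optional[bytes] = None    # Face Object


def text_for_dialogue(inp: CweInput, refined_text: Optional[str]) -> str:
    """Pick the typed text or the refined recognised text, based on the selector."""
    if inp.selector is InputSelector.TEXT:
        if inp.text is None:
            raise ValueError("selector is TEXT but no Text Object was supplied")
        return inp.text
    if refined_text is None:
        raise ValueError("selector is SPEECH but no Refined Text is available")
    return refined_text


typed = CweInput(selector=InputSelector.TEXT, text="Where is the station?")
spoken = CweInput(selector=InputSelector.SPEECH, speech=b"speech-bytes")
print(text_for_dialogue(typed, refined_text=None))
print(text_for_dialogue(spoken, refined_text="Where is the station?"))
```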

4 Functions of AI Modules

Table 2 provides the functions of the Conversation with Emotion AIMs.

Table 2 – Functions of AI Modules of Conversation with Emotion

| AIM | Function |
|---|---|
| Automatic Speech Recognition | 1. Receives Speech Object. 2. Produces Recognised Text. |
| Entity Speech Description | 1. Receives Speech Object. 2. Produces Speech Descriptors. |
| Entity Face Description | 1. Receives Face Object. 2. Extracts Face Descriptors. |
| Natural Language Understanding | 1. Receives Input Selector, Text Object, Recognised Text. 2. Produces Meaning (i.e., Text Descriptors), Refined Text. |
| PS-Text Interpretation | 1. Receives Text Descriptors. 2. Provides the Emotion of the Text. |
| PS-Speech Interpretation | 1. Receives Speech Descriptors. 2. Provides the Emotion of the Speech. |
| PS-Face Interpretation | 1. Receives Face Descriptors. 2. Provides the Emotion of the Face. |
| Multimodal Emotion Fusion | 1. Receives Emotion (Text), Emotion (Speech), Emotion (Face). 2. Provides the human’s Input Emotion by fusing Emotion (Text), Emotion (Speech), and Emotion (Face). |
| Entity Dialogue Processing | 1. Receives Refined Text, Meaning, Input Emotion. 2. Analyses Meaning and Input Text or Refined Text, depending on the value of Input Selector. 3. Produces Machine Emotion and Machine Text. |
| Text-to-Speech | 1. Receives Machine Text and Machine Emotion. 2. Produces Output Speech. |
| Video Lip Animation | 1. Receives Machine Speech and Machine Emotion. 2. Animates the lips of a video obtained by querying the Video Faces KB, using the Output Emotion. 3. Produces Face Object with synchronised Speech Object (Machine Object). |
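The table states what Multimodal Emotion Fusion receives and produces, but not how the fusion is computed. Purely as an illustration, the sketch below fuses the three estimates by a confidence-weighted vote. The representation of an Emotion as a `(label, confidence)` pair, the function name `fuse_emotions`, and the weighting scheme are all assumptions of this example, not part of the specification.

```python
from collections import defaultdict
from typing import Dict, Optional, Tuple

# Hypothetical representation: (emotion label, confidence in [0, 1]).
EmotionEstimate = Tuple[str, float]


def fuse_emotions(
    text: Optional[EmotionEstimate],
    speech: Optional[EmotionEstimate],
    face: Optional[EmotionEstimate],
    weights: Optional[Dict[str, float]] = None,
) -> str:
    """Return the label with the highest weighted confidence across modalities."""
    weights = weights or {"text": 1.0, "speech": 1.0, "face": 1.0}
    scores: Dict[str, float] = defaultdict(float)
    for modality, estimate in (("text", text), ("speech", speech), ("face", face)):
        if estimate is None:  # a modality may be missing, e.g. no face in view
            continue
        label, confidence = estimate
        scores[label] += weights[modality] * confidence
    if not scores:
        raise ValueError("at least one emotion estimate is required")
    return max(scores, key=lambda label: scores[label])


print(fuse_emotions(("happy", 0.6), ("sad", 0.5), ("happy", 0.3)))
```

A real implementation could equally use a learned fusion model; the vote is chosen here only because it is easy to read.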

5 I/O Data of AI Modules

Table 3 specifies the data received and produced by the AI Modules of Conversation with Emotion, whose Functions are specified in Table 2.

Table 3 – AI Modules of Conversation with Emotion

| AIM | Receives | Produces |
|---|---|---|
| Automatic Speech Recognition | Speech Object | Recognised Text |
| Entity Speech Description | Speech Object | Speech Descriptors |
| Entity Face Description | Face Object | Face Descriptors |
| Natural Language Understanding | Recognised Text | Refined Text, Text Descriptors |
| PS-Text Interpretation | Text Descriptors | Emotion (Text) |
| PS-Speech Interpretation | Speech Descriptors | Emotion (Speech) |
| PS-Face Interpretation | Face Descriptors | Emotion (Face) |
| Multimodal Emotion Fusion | Emotion (Text), Emotion (Speech), Emotion (Face) | Fused Emotion |
| Entity Dialogue Processing | 1. Text Descriptors 2. Based on Input Selector: 2.1. Refined Text 2.2. Input Text 3. Input Emotion | 1. Machine Text 2. Machine Emotion |
| Text-to-Speech | 1. Machine Text 2. Machine Emotion | Output Speech |
| Video Lip Animation | 1. Machine Emotion 2. Machine Speech | Output Visual |
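The Receives/Produces columns define a dependency graph, so one way to read the table is to derive an execution order from it. The sketch below encodes the rows as sets and schedules each AIM once all of its inputs are available. It assumes that "Input Emotion" is the same data as "Fused Emotion" and that "Machine Speech" is the same data as "Output Speech", and it covers the speech-input path only; the `AIMS` mapping and the `schedule` function are hypothetical names.

```python
from typing import Dict, List, Set, Tuple

# AIM name -> (data received, data produced), transcribed from the table above.
AIMS: Dict[str, Tuple[Set[str], Set[str]]] = {
    "Automatic Speech Recognition": ({"Speech Object"}, {"Recognised Text"}),
    "Entity Speech Description": ({"Speech Object"}, {"Speech Descriptors"}),
    "Entity Face Description": ({"Face Object"}, {"Face Descriptors"}),
    "Natural Language Understanding": ({"Recognised Text"}, {"Refined Text", "Text Descriptors"}),
    "PS-Text Interpretation": ({"Text Descriptors"}, {"Emotion (Text)"}),
    "PS-Speech Interpretation": ({"Speech Descriptors"}, {"Emotion (Speech)"}),
    "PS-Face Interpretation": ({"Face Descriptors"}, {"Emotion (Face)"}),
    "Multimodal Emotion Fusion": (
        {"Emotion (Text)", "Emotion (Speech)", "Emotion (Face)"},
        {"Fused Emotion"},
    ),
    # Assumption: "Input Emotion" in the table is the Fused Emotion.
    "Entity Dialogue Processing": (
        {"Text Descriptors", "Refined Text", "Fused Emotion"},
        {"Machine Text", "Machine Emotion"},
    ),
    "Text-to-Speech": ({"Machine Text", "Machine Emotion"}, {"Output Speech"}),
    # Assumption: "Machine Speech" in the table is the Output Speech.
    "Video Lip Animation": ({"Machine Emotion", "Output Speech"}, {"Output Visual"}),
}


def schedule(aims: Dict[str, Tuple[Set[str], Set[str]]], workflow_inputs: Set[str]) -> List[str]:
    """Return an order in which every AIM runs only after its inputs exist."""
    available = set(workflow_inputs)
    pending = dict(aims)
    order: List[str] = []
    while pending:
        ready = [name for name, (receives, _) in pending.items() if receives <= available]
        if not ready:
            raise ValueError(f"unsatisfied inputs for: {sorted(pending)}")
        for name in ready:
            order.append(name)
            available |= pending.pop(name)[1]
    return order


for step, name in enumerate(schedule(AIMS, {"Speech Object", "Face Object"}), start=1):
    print(step, name)
```

If a workflow input is missing, for example the Face Object, the function raises an error naming the AIMs that can no longer run.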

6 AIW, AIMs, and JSON Metadata

Table 4 – AIMs and JSON Metadata

| AIW | AIMs | Name | JSON |
|---|---|---|---|
| MMC-CWE | | Conversation With Emotion | X |
| | MMC-ASR | Automatic Speech Recognition | X |
| | MMC-EDP | Entity Dialogue Processing | X |
| | MMC-ESD | Entity Speech Description | X |
| | MMC-MEF | Multimodal Emotion Fusion | X |
| | MMC-NLU | Natural Language Understanding | X |
| | MMC-PFI | PS-Face Interpretation | X |
| | MMC-PTI | PS-Text Interpretation | X |
| | MMC-SPE | Speech Personal Status Extraction | X |
| | MMC-TTS | Text-to-Speech | X |
| | MMC-VLA | Video Lip Animation | X |
| | PAF-EFD | Entity Face Description | X |
| | PAF-FPE | Face Personal Status Extraction | X |
| | PAF-PSI | PS-Speech Interpretation | X |

7 Reference Software

8 Conformance Testing

Important note. MMC-CWE Conformance Testing Specification V1.0 does not provide methods and datasets to Test the Conformance of:

- Input Speech Description and PS-Speech Interpretation, but only of the Speech Personal Status Extraction (MMC-SPE) Composite AIM for Emotion.

- Input Face Description and PS-Face Interpretation, but only of the Face Personal Status Extraction Composite AIM for Emotion.

Table 5 gives the input/output data of the MMC-CWE AI Workflow.

Table 5 – I/O data of MMC-CWE

| Input Data | Data Type | Input Conformance Testing Data |
|---|---|---|
| Input Selector | Binary data | All Input Selectors shall conform with Selector. |
| Text Object | Unicode | All input Text files to be drawn from Text files. |
| Speech Object | .wav | All input Speech files to be drawn from Speech files. |
| Face Object | AVC | All input Video files to be drawn from Video files. |

| Output Data | Data Type | Conformance Test |
|---|---|---|
| Machine Text | Unicode | All Text files produced shall conform with Text files. |
| Machine Speech | .wav | All Speech files produced shall conform with Speech files. |
| Machine Video | AVC | All Video files produced shall conform with Video files. |
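Before running a conformance test, it can help to check that the files at least match the data types in the table. The sketch below shows such a pre-check for the Unicode and .wav rows using only the Python standard library. It is not the conformance procedure itself: the function names are hypothetical, UTF-8 is assumed as the Unicode encoding, and AVC validation is omitted because it needs a video parser outside the standard library.

```python
import io
import wave


def is_unicode_text(data: bytes) -> bool:
    """True if the bytes decode as UTF-8 (assumed encoding for the Unicode rows)."""
    try:
        data.decode("utf-8")
        return True
    except UnicodeDecodeError:
        return False


def is_wav(data: bytes) -> bool:
    """True if the bytes parse as a RIFF/WAVE file readable by the wave module."""
    try:
        with wave.open(io.BytesIO(data), "rb") as reader:
            return reader.getnframes() >= 0
    except (wave.Error, EOFError):
        return False


def make_silence_wav(frames: int = 160, rate: int = 16000) -> bytes:
    """Build a short mono 16-bit silent clip in memory, for demonstration only."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as writer:
        writer.setnchannels(1)
        writer.setsampwidth(2)
        writer.setframerate(rate)
        writer.writeframes(b"\x00\x00" * frames)
    return buffer.getvalue()


print(is_unicode_text("Sorry to hear that.".encode("utf-8")))
print(is_wav(make_silence_wav()))
print(is_wav(b"not a wav"))
```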

9 Performance Assessment