1 Functions

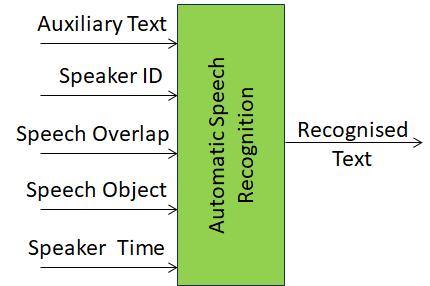

Automatic Speech Recognition (MMC-ASR):

| | |
| --- | --- |
| Receives | Auxiliary Text |
| | Speech Object |
| | Speaker ID |
| | Speech Overlap |
| | Speaker Time |
| Produces | Recognised Text |

2 Reference Architecture

Figure 1 depicts the Reference Architecture of the Automatic Speech Recognition AIM.

Figure 1 – The Automatic Speech Recognition AIM

3 I/O Data

Table 1 specifies the Input and Output Data of the Automatic Speech Recognition AIM.

Table 1 – I/O Data of the Automatic Speech Recognition AIM

| Input | Description |
| --- | --- |
| Auxiliary Text | Text Object with content related to the Speech Object. |
| Speech Object | Speech Object emitted by an Entity. |
| Speaker ID | Identity of the Speaker. |
| Speech Overlap | Times and IDs of overlapping speech segments. |
| Speaker Time | Time during which Speech is recognised. |

| Output | Description |
| --- | --- |
| Recognised Text | Text recognised from the Speech Object; the output of the Automatic Speech Recognition AIM. |
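As an illustration only, the I/O Data of Table 1 could be modelled in Python roughly as follows. This is a minimal sketch, not part of the specification: the class and field names (`ASRInput`, `ASROutput`, `OverlapSegment`, `run_asr`), the choice of which inputs are optional, the representation of Speaker Time as a start/end pair in seconds, and the use of raw bytes for the Speech Object are all assumptions made for the example. The normative data formats are those referenced by the JSON Metadata.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class OverlapSegment:
    """One overlapping speech segment (hypothetical representation)."""
    start_s: float                # assumed: segment start, in seconds
    end_s: float                  # assumed: segment end, in seconds
    speaker_ids: Tuple[str, ...]  # IDs of the speakers that overlap


@dataclass
class ASRInput:
    """Inputs of the Automatic Speech Recognition AIM, as listed in Table 1."""
    speech_object: bytes                       # assumed: raw speech payload
    auxiliary_text: Optional[str] = None       # text related to the speech
    speaker_id: Optional[str] = None           # identity of the speaker
    speech_overlap: List[OverlapSegment] = field(default_factory=list)
    speaker_time: Optional[Tuple[float, float]] = None  # assumed: (start, end)


@dataclass
class ASROutput:
    """Output of the Automatic Speech Recognition AIM."""
    recognised_text: str


def validate(inp: ASRInput) -> None:
    """Basic sanity checks on the hypothetical input record."""
    if not inp.speech_object:
        raise ValueError("Speech Object must not be empty")
    if inp.speaker_time is not None:
        start, end = inp.speaker_time
        if start < 0 or end < start:
            raise ValueError("Speaker Time must satisfy 0 <= start <= end")
    for seg in inp.speech_overlap:
        if seg.end_s < seg.start_s:
            raise ValueError("Speech Overlap segment ends before it starts")


def run_asr(inp: ASRInput, recogniser: Callable[[ASRInput], str]) -> ASROutput:
    """Validate the input, then delegate to a caller-supplied recogniser."""
    validate(inp)
    return ASROutput(recognised_text=recogniser(inp))
```

The recogniser is passed in as a callable so that the sketch stays independent of any particular speech recognition engine; a real implementation would plug its own model in at that point.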

4 SubAIMs

No SubAIMs.

5 JSON Metadata

https://schemas.mpai.community/MMC/V2.2/AIMs/AutomaticSpeechRecognition.json
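A short sketch of how a client might retrieve and parse this metadata using only the Python standard library is given below. It assumes, without confirmation from this page, that the URL serves a UTF-8 encoded JSON object; the function names `parse_metadata` and `fetch_metadata` are hypothetical, and no assumption is made about the field names inside the document.

```python
import json
from urllib.request import urlopen

METADATA_URL = (
    "https://schemas.mpai.community/MMC/V2.2/AIMs/AutomaticSpeechRecognition.json"
)


def parse_metadata(raw: str) -> dict:
    """Parse metadata text; assumes the top level is a JSON object."""
    doc = json.loads(raw)
    if not isinstance(doc, dict):
        raise ValueError("expected a JSON object at the top level")
    return doc


def fetch_metadata(url: str = METADATA_URL, timeout: float = 10.0) -> dict:
    """Download the metadata and parse it (requires network access)."""
    with urlopen(url, timeout=timeout) as resp:
        # Assumption: the response body is UTF-8 encoded JSON.
        return parse_metadata(resp.read().decode("utf-8"))
```

Parsing is kept separate from downloading so that the parsing step can be exercised offline, for example against a locally saved copy of the file.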

6 Profiles

No Profiles.