One definition of a standard is “the documented agreement by a group of people that certain things should be done in a certain way”. In its short but intense life, MPAI has documented several such agreements about “certain things”. One of these things is emotion: MPAI has specified 59 words, each with attached semantics identifying a type of emotion, and a mechanism to extend the set of words representing emotions. It has also defined the format of the financial and organisational data fed to a machine that predicts the probability of default and the organisational adequacy of the company the data refer to.

However, the path from a documented agreement to real products, services and applications is not easy and not short. In the domain of software standards, a reference implementation is often required to assure those who were not part of the agreement that the agreement is sound. In all cases, you need an agreed procedure that allows a party to test an implementation and verify that it does indeed conform with the standard. In other words, if the standard can be compared to the law, conformance testing can be compared to the code of procedure.

MPAI application standards, such as Conversation with Emotion and Company Performance Prediction, are not monoliths. They are implemented as sets of modules (AI Modules – AIMs) connected in workflows (AI Workflows – AIWs) executed in an environment (AI Framework – AIF). MPAI specifies the functions and data formats of the AIMs and the function, data format and connections of their AIW. MPAI also specifies the APIs that an AIM and other AIF components call to execute an AIW. The goal is to enable a user to buy an AIF from one party, an AIW from another, and the AIMs also from different parties.
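The split into AIMs, AIWs and data formats can be illustrated with a small sketch. It is only an illustrative model, not the MPAI-AIF API: the class names, port names, vendor names and format labels below are all hypothetical. It shows the one property the paragraph relies on, namely that two modules from different vendors can be connected as long as the data format on the output port matches the format expected on the input port.

```python
from dataclasses import dataclass, field


@dataclass
class AIM:
    """An AI Module: a named function with typed input and output ports."""
    name: str
    vendor: str
    inputs: dict   # port name -> data format label (hypothetical labels)
    outputs: dict  # port name -> data format label (hypothetical labels)


@dataclass
class AIW:
    """An AI Workflow: a set of AIMs plus the connections between their ports."""
    name: str
    aims: dict = field(default_factory=dict)
    connections: list = field(default_factory=list)

    def add(self, aim):
        self.aims[aim.name] = aim

    def connect(self, src, out_port, dst, in_port):
        """Connect src.out_port to dst.in_port only if the data formats agree."""
        out_fmt = self.aims[src].outputs[out_port]
        in_fmt = self.aims[dst].inputs[in_port]
        if out_fmt != in_fmt:
            raise ValueError(
                f"{src}.{out_port} emits {out_fmt}, "
                f"but {dst}.{in_port} expects {in_fmt}"
            )
        self.connections.append((src, out_port, dst, in_port))


# Two hypothetical AIMs bought from two different vendors.
recogniser = AIM("SpeechRecogniser", "VendorA", {"speech": "Audio"}, {"text": "Text"})
emotion = AIM("EmotionExtractor", "VendorB", {"text": "Text"}, {"emotion": "Emotion"})

workflow = AIW("EmotionDemo")
workflow.add(recogniser)
workflow.add(emotion)
workflow.connect("SpeechRecogniser", "text", "EmotionExtractor", "text")
print(workflow.connections)
```

In this sketch the format check at connection time stands in for what the standard achieves by specifying the data formats up front: a mismatched connection is rejected before the workflow ever runs.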

Another facet is that MPAI standards deal with a particular technology – Artificial Intelligence – that sets them apart from standards for other technologies. In general, the value of a neural network resides in the “completeness” and “reliability” of the data used to train it for the task that it claims to be able to carry out. Users buy a network together with the assurance that the data used are OK. But are they? Can users be safe if they rely on a neural network?

MPAI uses the word performance (of an implemented standard) to cover all these issues and defines it as a set of four attributes: Reliability (e.g., quality), Robustness (e.g., the ability to operate outside the original domain), Replicability (e.g., tests done by one party can be replicated by another) and Fairness (e.g., the dataset or the network is open to being tested for bias).

Since its early days, MPAI has recognised that the complex set of actors composed of MPAI, its specifications, implementers of specifications, conformance testers, and performance assessors could hardly work together as a smoothly running ecosystem without governance. Without it, MPAI would be unable to honour its promise of an open, competitive market of components, which is implicit in the notion of interoperable AIMs fitting into an AIW and running in an AIF.

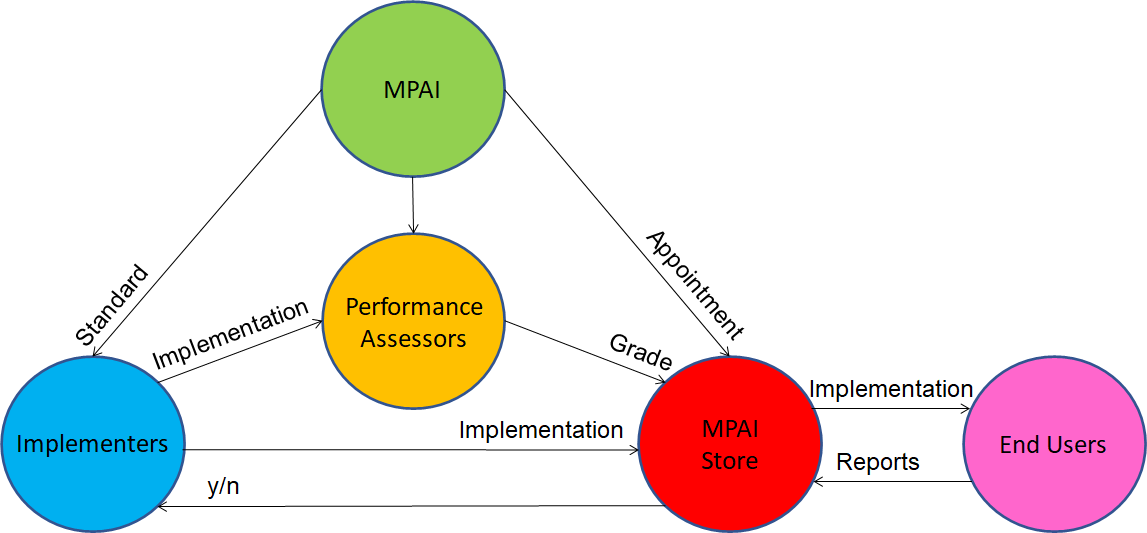

MPAI has specified its solution in the Governance of the MPAI Ecosystem (MPAI-GME) standard that specifies the roles of the MPAI Ecosystem players:

- MPAI:
  - Develops and publishes standards, i.e., the set of Technical Specification, Reference Software Specification, Conformance Testing Specification, and Performance Assessment Specification.
  - Establishes the MPAI Store (see below).
  - Appoints Performance Assessors (see below).

- The MPAI Store:
  - Assigns identifiers to implementers of MPAI standards (this is required to identify an implementation).
  - Receives implementations of MPAI standards submitted by implementers.
  - Verifies that implementations are secure.
  - Tests the conformance of implementations to the appropriate MPAI standard or to one of its use cases.
  - Receives reports from Performance Assessors about the grade of performance of implementations.
  - Labels implementations with their interoperability level and makes them available for download.
  - Publishes reviews of implementations communicated by Users.

- Implementers of MPAI Technical Specifications:
  - Obtain an implementer ID from the MPAI Store.
  - Submit implementations to the MPAI Store for security verification and conformance testing prior to distribution.
  - May submit implementations to Performance Assessors.

- Performance Assessors:
  - Are appointed by MPAI for a particular domain of expertise.
  - Assess implementations for performance, typically for a fee.
  - Make the MPAI Store and the implementer aware of their findings about an implementation.

- Users:
  - Download implementations.
  - May communicate reviews of downloaded implementations to the MPAI Store.
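The interaction between implementers, the MPAI Store, Performance Assessors and Users can be sketched as a small pipeline. This is a minimal sketch under stated assumptions, not something defined by MPAI-GME: every class, method and field name is hypothetical, the security and conformance checks are placeholder callables, and the rule that only implementations passing both checks are listed is an assumption made for the example.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Optional


@dataclass
class Implementation:
    implementer_id: int
    name: str
    standard: str
    secure: bool = False
    conformant: bool = False
    performance_report: Optional[str] = None
    reviews: list = field(default_factory=list)


class Store:
    """Hypothetical model of the Store's role; not an MPAI-defined API."""

    def __init__(self, security_check, conformance_test):
        self._ids = count(1)
        self.implementers = {}  # implementer ID -> implementer name
        self.catalogue = {}     # implementation name -> Implementation
        self._security_check = security_check
        self._conformance_test = conformance_test

    def register_implementer(self, name):
        """Assign an identifier to an implementer."""
        implementer_id = next(self._ids)
        self.implementers[implementer_id] = name
        return implementer_id

    def submit(self, impl):
        """Verify security, then test conformance; list the implementation if both pass."""
        if impl.implementer_id not in self.implementers:
            raise PermissionError("unknown implementer ID")
        impl.secure = self._security_check(impl)
        impl.conformant = impl.secure and self._conformance_test(impl)
        if impl.conformant:  # assumption: only conformant implementations are listed
            self.catalogue[impl.name] = impl
        return impl.conformant

    def receive_performance_report(self, name, report):
        """Record a report sent by a Performance Assessor."""
        self.catalogue[name].performance_report = report

    def add_review(self, name, text):
        """Record a review communicated by a User."""
        self.catalogue[name].reviews.append(text)


# Placeholder checks: a real Store would run actual security and conformance tests.
store = Store(
    security_check=lambda impl: True,
    conformance_test=lambda impl: impl.standard == "Company Performance Prediction",
)
implementer_id = store.register_implementer("ExampleCo")
impl = Implementation(implementer_id, "default-predictor", "Company Performance Prediction")
listed = store.submit(impl)
store.receive_performance_report("default-predictor", "report from a hypothetical assessor")
store.add_review("default-predictor", "Worked as described.")
print(listed, store.catalogue["default-predictor"].reviews)
```

The ordering in `submit` mirrors the list above: an implementer needs an ID before submitting, and security verification and conformance testing happen before anything is made available for download.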

Figure 1 describes the operation of the MPAI Ecosystem.

Figure 1 – The MPAI Ecosystem

Another piece of the Governance of the MPAI Ecosystem was put in place on the 9th of August, when a group of individuals active in MPAI established the MPAI Store in Scotland as a Company Limited by Guarantee.