The MPAI metaverse standardisation proposal

1. Introduction

The metaverse is expected to create new jobs, opportunities and experiences, and to transform virtually all sectors of human interaction. To harness its potential, however, several hurdles must be overcome:

- There is no common agreement on what a “metaverse” is or should be.

- The potential users of the metaverse are too disparate.

- Many successful independent implementations of “metaverse” already exist.

- Some important enabling technologies may be years away.

Standards are needed if metaverses are to be used seamlessly. Before engaging in metaverse standardisation proper, however, we should find a method that allows progress without:

- Reaching an agreement on what a metaverse is.

- Disenfranchising potential users.

- Alienating existing initiatives.

- Dealing with technologies (for now).

2. The MPAI proposal

MPAI – Moving Picture, Audio, and Data Coding by Artificial Intelligence – believes that developing a (set of) metaverse standards is a very challenging goal. Metaverse standardisation therefore requires that we:

- Start small and grow.

- Be creative and devise a new working method.

- Test the method.

- Gather confidence in the method.

- Achieve a wide consensus on the method.

Only then can we develop a (set of) metaverse standards.

MPAI has developed an initial roadmap:

- Build a metaverse terminology.

- Agree on basic assumptions.

- Collect metaverse functionalities.

- Develop functionality profiles.

- Develop a metaverse architecture.

- Develop functional requirements of the metaverse architecture data types.

- Develop a Table of Contents of Common Metaverse Specifications.

- Map technologies to the ToC of the Common Metaverse Specifications.

Step #1 – Develop a common terminology

The importance of this step hardly needs arguing: many organisations are already developing metaverse terminologies. Unfortunately, there is no attempt to converge on an industry-wide terminology.

The terminology should:

- Have an agreed scope.

- Be technology- and business-agnostic.

- Not adopt one industry’s term for a concept that is shared by more than one industry.

The terminology is:

- Intimately connected with the standard that will use it.

- Functional to the subsequent milestones of the roadmap.

MPAI has already defined some 150 classified metaverse terms and encourages the convergence of existing terminology initiatives. The MPAI terminology can be found here.

Step #2 – Agree on basic assumptions

Agreed assumptions are needed in a multi-stakeholder project because the design of a roadmap depends on the goal and on the methods used to reach it.

MPAI has laid down 16 assumptions, which it proposes for discussion. The first three are presented below. All the assumptions can be found here.

- Assumption #1: As there is no agreement on what a metaverse is, let us accept all legitimate requests for “metaverse” functionalities.

  - Note: accepting a functionality does not imply that a Metaverse Instance shall support it.

- Assumption #2: Common Metaverse Specifications (CMS) will be developed.

  - Note: they will provide the technologies supporting the identified Functionalities.

- Assumption #3: CMS Technologies will be grouped into Technology Profiles in response to industry needs.

  - Note: a profile shall maximise the number of technologies supported by specific groups of industries.

The notion of profile is well known and widely used in digital media standardisation. It is defined here:

A set of one or more base standards, and, if applicable, their chosen classes, subsets, options and parameters, necessary for accomplishing a particular function.
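To make the classic definition concrete, here is a minimal Python sketch (assuming Python 3.9 or later) that models a profile as a set of base standards, each with the options and parameters it selects, and checks whether an implementation conforms. The names `Profile`, `conforms`, `StandardA` and `StandardB` are hypothetical illustrations; they are not part of any MPAI or other specification.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Profile:
    """Hypothetical model of a profile: base standards plus chosen options.

    Illustration of the definition above only; not an MPAI data format.
    """
    name: str
    # Each base standard maps to the options/parameters the profile selects.
    selections: dict[str, frozenset[str]] = field(default_factory=dict)


def conforms(implementation: dict[str, set[str]], profile: Profile) -> bool:
    """True if the implementation supports every base standard in the
    profile together with all the options selected from it."""
    return all(
        standard in implementation and options <= implementation[standard]
        for standard, options in profile.selections.items()
    )


# Hypothetical standards and options, for illustration only.
basic = Profile("Basic", {"StandardA": frozenset({"option1"}),
                          "StandardB": frozenset()})
implementation = {"StandardA": {"option1", "option2"}, "StandardB": set()}
print(conforms(implementation, basic))  # True
```

An implementation may support more than a profile requires (here `option2`); it conforms as long as nothing the profile selects is missing.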

Step #3 – Collect metaverse functionalities

The number of industries potentially interested in deploying a metaverse is very large; MPAI has explored 18 of them (see here). A metaverse implementation is also likely to rely on external service providers, so the interfaces to them should be defined (see here).

MPAI has collected more than 150 functionalities, organised in a three-level hierarchy of Areas, Subareas and Titles (see here).

This is not a completed task but only its beginning: collecting metaverse functionalities should be a continuous activity.
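As a sketch of how a continuously growing collection organised in Areas, Subareas and Titles could be kept, the hypothetical class below stores each functionality under its Area and Subarea and ignores duplicate submissions. The class name, its methods and the placeholder entries are assumptions for illustration only and do not reproduce MPAI's actual catalogue or its Area names.

```python
from collections import defaultdict


class FunctionalityCatalogue:
    """Hypothetical Area -> Subarea -> Title catalogue that can keep growing."""

    def __init__(self) -> None:
        # Missing Areas and Subareas are created on first use.
        self._tree = defaultdict(lambda: defaultdict(set))

    def add(self, area: str, subarea: str, title: str) -> bool:
        """Record a functionality; return False if it was already collected."""
        titles = self._tree[area][subarea]
        if title in titles:
            return False
        titles.add(title)
        return True

    def count(self) -> int:
        """Total number of functionalities across all Areas and Subareas."""
        return sum(len(titles) for subareas in self._tree.values()
                   for titles in subareas.values())

    def titles(self, area: str) -> list[str]:
        """All Titles in one Area, sorted; empty if the Area is unknown."""
        subareas = self._tree.get(area, {})
        return sorted(t for titles in subareas.values() for t in titles)


# Placeholder entries; MPAI's real Areas and Titles are not reproduced here.
catalogue = FunctionalityCatalogue()
catalogue.add("Area A", "Subarea A.1", "Title 1")
catalogue.add("Area A", "Subarea A.2", "Title 2")
catalogue.add("Area A", "Subarea A.1", "Title 1")  # duplicate, ignored
print(catalogue.count())  # 2
```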

Step #4 – Which profiles?

The traditional notion of profile cannot currently be applied to the metaverse because some key technologies are not yet available, and it is not clear which technologies, existing or otherwise, will eventually be selected.

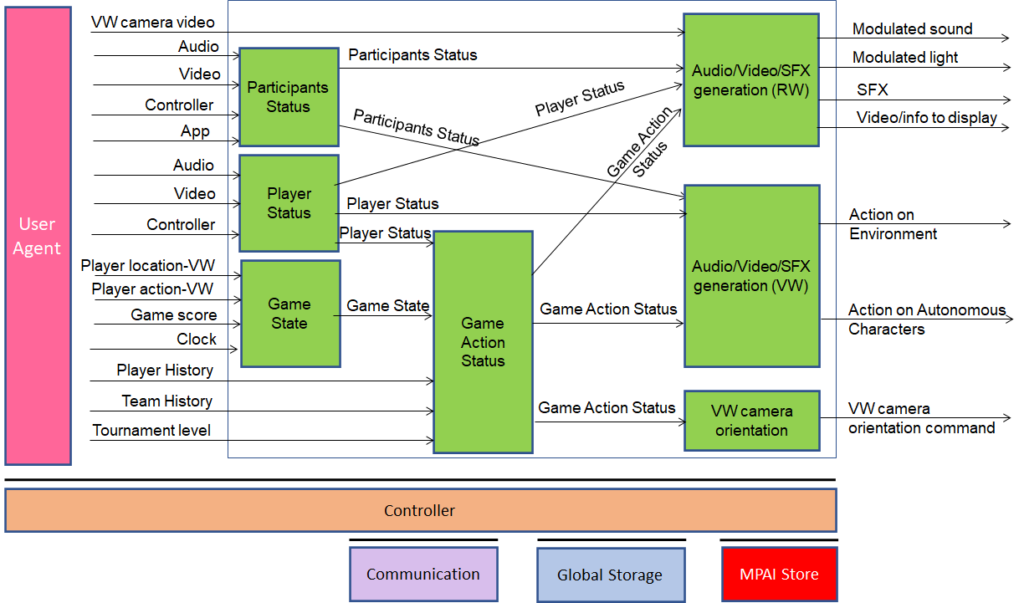

MPAI proposes a new type of profile, the functionality profile, characterised by the functionalities it offers rather than by the technologies implementing them. Because it deals only with functionalities, the definition of a profile is not “contaminated” by technology considerations. MPAI is in the process of developing:

Technical Report – MPAI Metaverse Model (MPAI-MMM) – Functionality Profiles.

The Technical Report is expected to be published for Community Comments on the 26th of March 2023 and adopted in its final form on the 19th of April 2023. It will have the following table of contents:

- A scalable Metaverse Operational Model.

- Actions (what you do in the metaverse):

  - Purpose – what the Action is for.

  - Payload – data sent to the Metaverse.

  - Response – data received from the Metaverse.

- Items (what you perform Actions on):

  - Purpose – what the Item is for.

  - Data – functional requirements.

  - Metadata – functional requirements.

- Example functionality profiles.
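Purely to illustrate how these headings relate to one another, the hypothetical Python data model below mirrors them and adds an invented helper, `undefined_items`, that flags Items referenced by an Action's Payload or Response but not yet described. Treating a Payload or Response as a list of Item names is an assumption made for this sketch; the class names, fields and entries do not reflect the content of the Technical Report, which will describe Actions and Items in functional terms only.

```python
from dataclasses import dataclass


@dataclass
class Item:
    name: str
    purpose: str         # what the Item is for
    data: list[str]      # functional requirements on the Item's data
    metadata: list[str]  # functional requirements on the Item's metadata


@dataclass
class Action:
    name: str
    purpose: str         # what the Action is for
    payload: list[str]   # assumption: names of Items sent to the Metaverse
    response: list[str]  # assumption: names of Items returned by the Metaverse


def undefined_items(actions: list[Action], items: list[Item]) -> set[str]:
    """Names of Items used in a Payload or Response but never described."""
    known = {item.name for item in items}
    used = {name for a in actions for name in (*a.payload, *a.response)}
    return used - known


# Hypothetical entries, written as functional requirements only.
items = [Item("ItemX", "Illustrative Item",
              ["shall be identifiable"], ["shall record its owner"])]
actions = [Action("ActionY", "Illustrative Action",
                  payload=["ItemX"], response=["ItemZ"])]
print(undefined_items(actions, items))  # {'ItemZ'}
```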

The Technical Report will neither contain nor make reference to technologies.

3. The next steps of the MPAI proposal

The Technical Report will demonstrate that it is possible to develop metaverse functionality profiles (and levels) that do not make reference to technologies, only to functionalities.
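A functionality profile with levels can be pictured as a sequence of cumulative sets of functionalities, none of which mentions a technology. The sketch below assumes, hypothetically, that each level includes every functionality of the level below, and reports the highest level a Metaverse Instance satisfies given the functionalities it supports. `FunctionalityProfile`, `highest_level` and the names F1 to F3 are illustrative inventions, not MPAI definitions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FunctionalityProfile:
    """Hypothetical functionality profile: levels are cumulative sets of
    functionality names; no technology is mentioned anywhere."""
    name: str
    levels: tuple[frozenset[str], ...]  # levels[0] is the lowest level

    def __post_init__(self) -> None:
        # Assumed rule: each level includes every functionality of the one below.
        for lower, higher in zip(self.levels, self.levels[1:]):
            if not lower <= higher:
                raise ValueError(f"{self.name}: levels must be cumulative")

    def highest_level(self, supported: set[str]) -> Optional[int]:
        """Highest level (1-based) whose functionalities are all supported,
        or None if even the lowest level is not satisfied."""
        best = None
        for index, required in enumerate(self.levels, start=1):
            if required <= supported:
                best = index
            else:
                break
        return best


profile = FunctionalityProfile(
    "ExampleProfile",
    (frozenset({"F1"}), frozenset({"F1", "F2"}), frozenset({"F1", "F2", "F3"})),
)
print(profile.highest_level({"F1", "F2"}))  # 2
print(profile.highest_level({"F2"}))        # None
```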

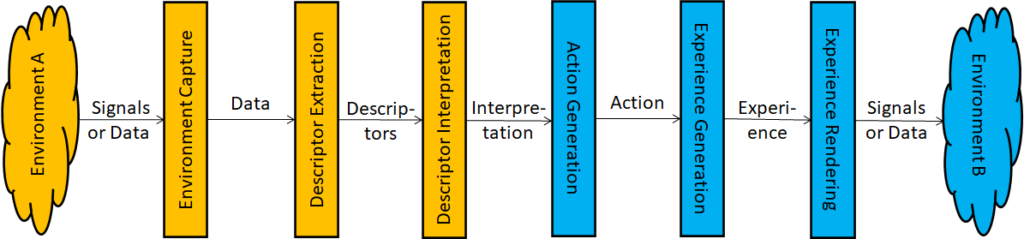

Step #5 – Develop a metaverse architecture.

The goal is to specify a Metaverse Architecture, including the main functional blocks and the data types exchanged between blocks.
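To illustrate what specifying functional blocks together with the data types they exchange makes possible, the hypothetical sketch below records each block's input and output data types and checks every connection for consistency. The block and data type names are placeholders; the classes are an assumption for illustration and do not anticipate the architecture MPAI will specify.

```python
from dataclasses import dataclass, field


@dataclass
class Block:
    name: str
    inputs: set[str] = field(default_factory=set)   # data types consumed
    outputs: set[str] = field(default_factory=set)  # data types produced


@dataclass
class Architecture:
    blocks: dict[str, Block] = field(default_factory=dict)
    # Each connection is (source block, data type, destination block).
    connections: list[tuple[str, str, str]] = field(default_factory=list)

    def add_block(self, block: Block) -> None:
        self.blocks[block.name] = block

    def connect(self, src: str, data_type: str, dst: str) -> None:
        self.connections.append((src, data_type, dst))

    def inconsistencies(self) -> list[str]:
        """Describe every connection whose data type the source block does
        not produce or the destination block does not accept."""
        problems = []
        for src, data_type, dst in self.connections:
            source, target = self.blocks.get(src), self.blocks.get(dst)
            if source is None or data_type not in source.outputs:
                problems.append(f"{src} does not output {data_type}")
            if target is None or data_type not in target.inputs:
                problems.append(f"{dst} does not accept {data_type}")
        return problems


# Placeholder blocks and data types, for illustration only.
arch = Architecture()
arch.add_block(Block("BlockA", outputs={"TypeX"}))
arch.add_block(Block("BlockB", inputs={"TypeX"}))
arch.connect("BlockA", "TypeX", "BlockB")
arch.connect("BlockA", "TypeY", "BlockB")
print(arch.inconsistencies())
# ['BlockA does not output TypeY', 'BlockB does not accept TypeY']
```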

Step #6 – Develop functional requirements of the metaverse architecture data types.

The goal is to develop the functional requirements of the data types exchanged between functional blocks of the metaverse architecture.

Step #7 – Develop the Table of Contents of the Common Metaverse Specifications.

The goal is to produce an initial Table of Contents (ToC) of the Common Metaverse Specifications, giving a clear understanding of which Technologies are needed, for which purpose, and in which parts of the metaverse architecture, to achieve interoperability.

Step #8 – Map technologies to the ToC of the Common Metaverse Specifications.

MPAI intends to map its relevant technologies to the ToC and see how they fit in the Common Metaverse Specifications architecture. Other Standards Developing Organisations (SDOs) are invited to join the effort.
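The mapping exercise can be pictured as attaching candidate technologies, possibly proposed by different SDOs, to ToC entries, then looking for entries with no candidate (gaps) or with several (where a choice will be needed). The sketch below is a hypothetical illustration of that bookkeeping; the function names, ToC entries and technology names are all invented.

```python
def map_technologies(toc: list[str],
                     proposals: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Attach each proposed (technology, ToC entry) pair to its entry.
    Every ToC entry appears in the result, even if nothing was proposed."""
    mapping: dict[str, list[str]] = {entry: [] for entry in toc}
    for technology, entry in proposals:
        if entry not in mapping:
            raise ValueError(f"{entry!r} is not in the ToC")
        mapping[entry].append(technology)
    return mapping


def gaps(mapping: dict[str, list[str]]) -> list[str]:
    """ToC entries for which no technology has been proposed yet."""
    return [entry for entry, techs in mapping.items() if not techs]


def contested(mapping: dict[str, list[str]]) -> list[str]:
    """ToC entries with more than one candidate, where a choice is needed."""
    return [entry for entry, techs in mapping.items() if len(techs) > 1]


# Invented ToC entries and technologies, for illustration only.
toc = ["Section 1", "Section 2", "Section 3"]
proposals = [("TechnologyA", "Section 1"), ("TechnologyB", "Section 1"),
             ("TechnologyC", "Section 3")]
mapping = map_technologies(toc, proposals)
print(gaps(mapping))       # ['Section 2']
print(contested(mapping))  # ['Section 1']
```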

4. Conclusions

Of course, reaching step #8 will not provide the metaverse specifications themselves, but a tested method to produce them. MPAI envisages reaching step #8 in December 2023. This is a price worth paying before engaging in such a perilous project.