After about sixty years of ups and downs, the set of technologies that goes by the name of Artificial Intelligence (AI) has forcefully entered the design, production and strategy of many companies. It would not be easy, and perhaps not a good use of time, to argue against those who claim that AI is neither artificial nor intelligent; still, the term AI is useful and evocative enough to have found wide use, both in discussion and in practice.

To characterise AI, it is useful to compare it with the technology that preceded it, Data Processing (DP). When handling a data source, a DP expert would study the characteristics of the data, for example the values it takes or, better, the transformations capable of extracting its most representative quantities. A good example is Digital Signal Processing (DSP), well represented by the agglomerates of sophisticated algorithms known as audio and video compression standards.

In all these cases we find wonderful examples of how human ingenuity has, over the years, dug into enormous masses of data and discovered the peculiarities of audio and video signals one by one to give them a more efficient representation, i.e., one that requires fewer bits to represent the same, or nearly the same, data.
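As a toy illustration of such a hand-designed transformation, the sketch below implements delta coding: each sample is replaced by its difference from the previous one, which for slowly varying signals yields small numbers that need fewer bits. It is far simpler than the tools used in real audio and video standards, and the function names and sample values are hypothetical.

```python
# Toy DP example (hypothetical helpers): delta coding of integer samples.

def delta_encode(samples: list[int]) -> list[int]:
    """Keep the first sample, then store each sample minus the previous one."""
    if not samples:
        return []
    return [samples[0]] + [b - a for a, b in zip(samples, samples[1:])]


def delta_decode(deltas: list[int]) -> list[int]:
    """Invert delta_encode by accumulating the differences."""
    out: list[int] = []
    total = 0
    for d in deltas:
        total += d
        out.append(total)
    return out


def bits_needed(values: list[int]) -> int:
    """Bits per value for a fixed-length sign-magnitude code covering all values."""
    largest = max(abs(v) for v in values)
    return largest.bit_length() + 1  # +1 for the sign bit


signal = [100, 102, 105, 107, 106, 108, 110, 111]  # hypothetical slowly varying signal
deltas = delta_encode(signal)
assert delta_decode(deltas) == signal  # the transform is lossless
# Raw samples vs. differences (the first delta is still a raw sample).
print(bits_needed(signal), bits_needed(deltas[1:]))  # 8 3
```

The saving comes entirely from a human insight about the signal (neighbouring samples are similar), which is exactly the kind of relationship AI proposes to let machines discover instead.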

AI presents itself as a radical alternative to what DP practitioners have done so far. Instead of employing humans to dig into the data to find hidden relationships, machines are trained to search for and find them. In other words, instead of training humans to find relationships, we train humans to train machines to find those relationships.

The machines intended for this purpose consist of a network of variously connected nodes. Drawing on the obvious parallel with the brain, the nodes are called neurons and the network a neural network. In the training phase, the machine is presented with many (possibly millions of) examples and, after each example or batch of examples, an internal procedure (typically backpropagation) corrects the weights of the connections backwards, from the output towards the input, so that the next result is, hopefully, better tuned.
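The backward correction of connection weights can be illustrated with the smallest possible case: a single artificial neuron trained by gradient descent on a cross-entropy loss. This is a sketch for illustration only; the function names and the logical-OR dataset are assumptions, and real networks apply the same idea layer by layer through backpropagation.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w: list[float], b: float, x) -> float:
    """Forward pass: weighted sum of the inputs squashed into (0, 1)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_neuron(data, epochs: int = 2000, lr: float = 1.0):
    """Train one sigmoid neuron; return weights, bias and the loss per epoch."""
    n_inputs = len(data[0][0])
    w = [0.0] * n_inputs
    b = 0.0
    history = []
    for _ in range(epochs):
        grad_w = [0.0] * n_inputs
        grad_b = 0.0
        loss = 0.0
        for x, y in data:
            p = predict(w, b, x)
            loss -= math.log(p) if y == 1 else math.log(1 - p)
            # Backward pass: for sigmoid + cross-entropy, the error signal
            # reaching the weighted sum is simply (p - y).
            err = p - y
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        m = len(data)
        history.append(loss / m)
        # Correct each connection weight against its share of the error.
        w = [wi - lr * g / m for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b, history


# Hypothetical training set: logical OR (output 1 if either input is 1).
OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w, b, history = train_neuron(OR_DATA)
predictions = [round(predict(w, b, x)) for x, _ in OR_DATA]
print(predictions)  # [0, 1, 1, 1]
```

Each pass over the examples lowers the recorded loss; this "learn from the error, adjust the connections" loop is what becomes expensive when the network has millions of weights and the dataset millions of examples.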

Intuitively, one might say that the more complex the universe of data that the machine must “learn”, the more complex the network must be. This is not necessarily true: the machine is built to discover the internal relationships of the data, and what appears complex to us at first sight may rest on a rule, or a set of relatively simple rules, that the machine can “understand”.

Training a neural network can be expensive. The first cost element is the large amount of data needed to train the network. Training can be supervised (a human tells the machine how well it fared) or unsupervised (the machine works this out by itself). The second cost element is the large amount of computation needed, at each iteration, to update the weights, i.e., the importance of the connections between neurons. The third cost element is access to the IT infrastructure needed to carry out the training. Finally, if the trained neural network is used to offer a service, there is the cost of accessing potentially substantial computing resources every time the machine produces an inference, that is, processes data to provide an answer.

On 19 July 2020, the idea of establishing a non-profit organisation with the mission of developing standards for data encoding using mainly AI techniques was launched. One hundred days later the organisation was formed in Geneva under the name of MPAI – Moving Picture, Audio and Data Coding by Artificial Intelligence.

Why do we need an organisation for data coding standards using AI? The answer is simple: MPEG standards, based on DP, have enormously accelerated, and indeed promoted, the evolution and dissemination of audio-visual products, services and applications. It is therefore reasonable to expect that MPAI standards, based on AI, will accelerate the evolution and diffusion of products, services and applications for the data economy. After all, audio-visual sources, too, ultimately produce data, and for MPEG they always have.

One of the first objectives MPAI set itself was simply to lower the development and operating costs of AI applications. How can a standard achieve this?

The answer starts some distance away, namely from the human brain. We know that the human brain is made up of connected neurons. However, the connections of its approximately 100 billion neurons are not homogeneously distributed, because the brain is made up of many neuronal “aggregations” whose functions research is gradually coming to understand. So, rather than every neuron connecting freely with any part of the brain, we have neurons with many interconnections to other neurons within an aggregation, while the aggregation itself passes the results of its processing to other aggregations. For example, the visual cortex, the part of the brain that processes visual information, located in the occipital lobe and part of the visual pathway, has a layered structure of 6 interconnected layers, the 4th of which is further subdivided into 4 sublayers.

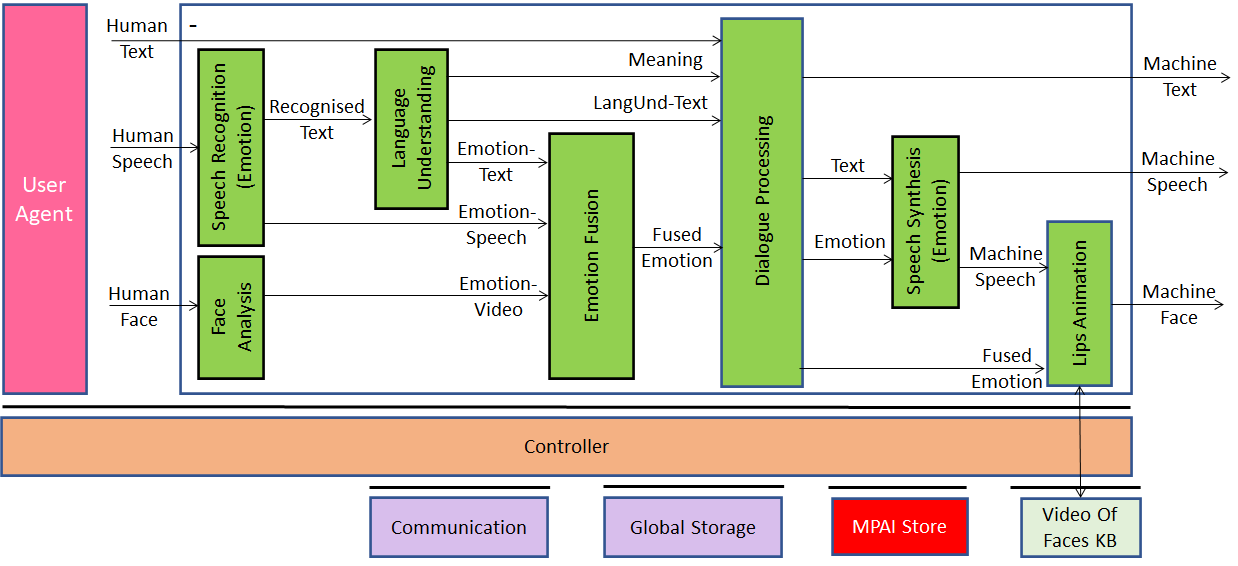

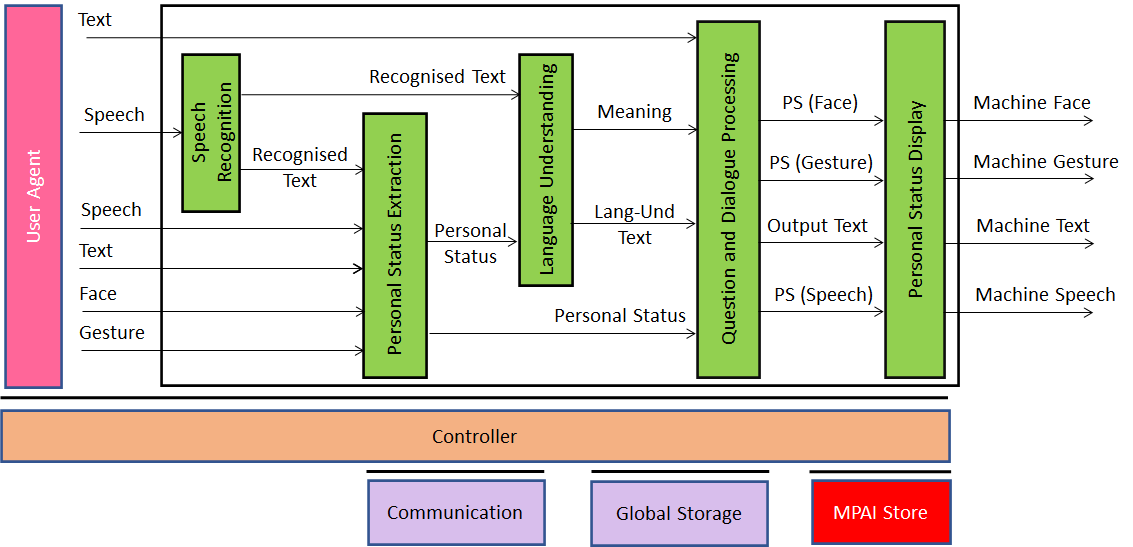

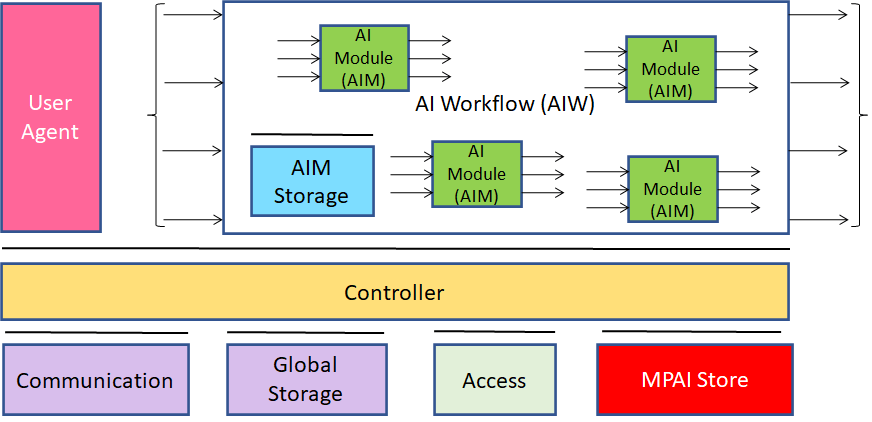

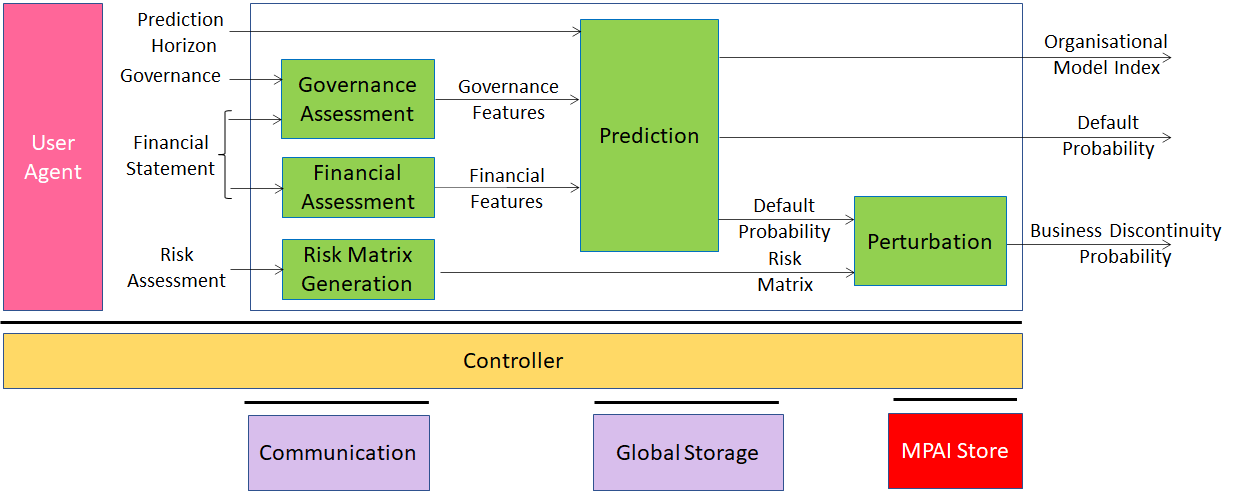

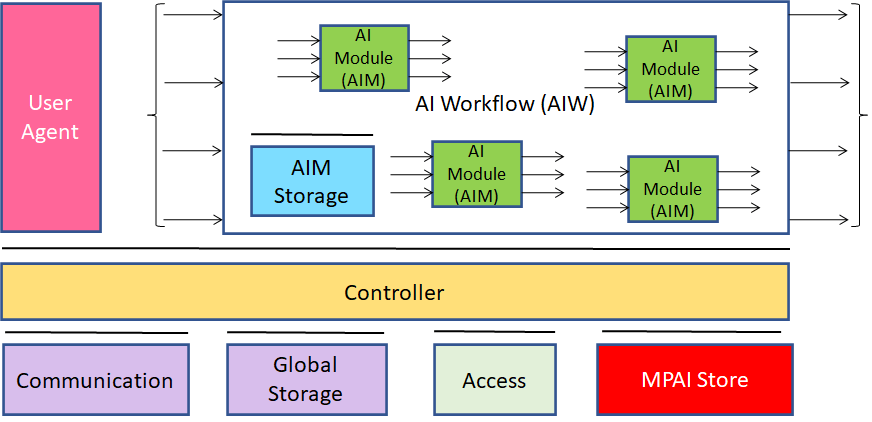

Whatever the actual motivation, one of the first standards approved by the MPAI General Assembly (November 2021, 14 months after MPAI was established) was AI Framework (MPAI-AIF). This standard specifies the architecture and constituent components of an environment able to implement AI systems consisting of AI Modules (AIMs) organised in AI Workflows (AIWs), as shown in Figure 1.

Figure 1 – Reference model of MPAI-AIF

The main requirements that have guided the development of the MPAI-AIF standard specifying this environment are:

- Independence from the operating system.

- Modularity of components.

- Interfaces that encapsulate components abstracted from the development environment.

- Wide range of implementation technologies: software (Microcontrollers to High-Performance Computing systems), hardware, and hardware-software.

- AIW execution in local and distributed Zero-Trust environments.

- AIF interaction with other AIFs operating in the vicinity (e.g., swarms of drones).

- Direct support for Machine Learning functions.

- Interface with MPAI Store to access validated components.

Controller performs the following functions:

- Offers basic functionality, such as scheduling and communication between AIMs and other AIF components.

- Manages resources according to the instructions given by the user.

- Is linked to all AIMs/AIWs in a given AIF.

- Activates/suspends/resumes/deactivates AIWs based on user or other inputs.

- Exposes three APIs:

    - AIM APIs allow AIMs/AIWs to communicate with it (register, communicate and access the rest of the AIF environment).

    - User APIs allow the user or other controllers to perform high-level tasks (e.g., turn the controller on/off, provide input to the AIW via the controller).

    - Controller-to-controller APIs allow a controller to interact with another controller.

- Accesses the MPAI Store APIs to communicate with the Store.

- Supports one or more AIWs running locally or on multiple platforms.

- Communicates with other controllers running on separate agents, requiring one or more controllers in proximity to open remote ports.
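The Controller's role in registering AIMs and managing AIW lifecycles can be pictured with a minimal sketch. The class, method and state names below are hypothetical illustrations, not the APIs defined by MPAI-AIF; the sketch only models AIM registration and the activate/suspend/resume/deactivate transitions listed above.

```python
from enum import Enum


class AIWState(Enum):
    INACTIVE = "inactive"
    ACTIVE = "active"
    SUSPENDED = "suspended"


# Allowed lifecycle transitions: (current state, command) -> new state.
_TRANSITIONS = {
    (AIWState.INACTIVE, "activate"): AIWState.ACTIVE,
    (AIWState.ACTIVE, "suspend"): AIWState.SUSPENDED,
    (AIWState.SUSPENDED, "resume"): AIWState.ACTIVE,
    (AIWState.ACTIVE, "deactivate"): AIWState.INACTIVE,
    (AIWState.SUSPENDED, "deactivate"): AIWState.INACTIVE,
}


class Controller:
    """Toy controller tracking registered AIMs and AIW states (hypothetical API)."""

    def __init__(self) -> None:
        self.aims: set[str] = set()
        self.aiws: dict[str, AIWState] = {}

    # AIM-facing side: modules register and deregister dynamically.
    def register_aim(self, aim_id: str) -> None:
        self.aims.add(aim_id)

    def deregister_aim(self, aim_id: str) -> None:
        self.aims.discard(aim_id)

    # User-facing side: high-level commands on workflows.
    def add_aiw(self, aiw_id: str) -> None:
        self.aiws[aiw_id] = AIWState.INACTIVE

    def command(self, aiw_id: str, cmd: str) -> AIWState:
        """Apply a lifecycle command, rejecting transitions that make no sense."""
        current = self.aiws[aiw_id]
        try:
            new_state = _TRANSITIONS[(current, cmd)]
        except KeyError:
            raise ValueError(f"cannot {cmd} AIW {aiw_id!r} in state {current.value}") from None
        self.aiws[aiw_id] = new_state
        return new_state


ctl = Controller()
ctl.register_aim("speech-recognition")  # hypothetical AIM identifier
ctl.add_aiw("conversation")             # hypothetical AIW identifier
ctl.command("conversation", "activate")
ctl.command("conversation", "suspend")
print(ctl.command("conversation", "resume"))  # AIWState.ACTIVE
```

Encoding the lifecycle as an explicit transition table makes invalid requests (for example, resuming a workflow that is already running) easy to detect and report.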

Communication connects an output port of one AIM with an input port of another AIM using events or channels. It has the following characteristics:

- Activated jointly with the controller.

- Persistence is not required.

- Channels are unicast and can be physical or logical.

- Messages have high or normal priority and are communicated via channels or events.
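A Communication channel with the characteristics above can be sketched as an in-memory unicast queue that delivers high-priority messages before normal ones and keeps arrival order within each priority. The class name, port names and priority constants are assumptions for illustration; the queue is deliberately not persisted, consistent with persistence not being required.

```python
import heapq
import itertools
from typing import Any

HIGH, NORMAL = 0, 1  # hypothetical priority levels; lower number is delivered first


class Channel:
    """Unicast channel from one AIM output port to one AIM input port."""

    def __init__(self, src: str, dst: str) -> None:
        self.src, self.dst = src, dst  # exactly one sender and one receiver
        self._queue: list[tuple[int, int, Any]] = []
        self._seq = itertools.count()  # tie-breaker: FIFO order within a priority

    def send(self, message: Any, priority: int = NORMAL) -> None:
        heapq.heappush(self._queue, (priority, next(self._seq), message))

    def receive(self) -> Any:
        """Return the next message: high priority first, then oldest first."""
        _, _, message = heapq.heappop(self._queue)
        return message

    def __len__(self) -> int:
        return len(self._queue)


ch = Channel("VideoAnalysis.out", "Fusion.in")  # hypothetical port names
ch.send("frame-1")
ch.send("frame-2")
ch.send("alarm", priority=HIGH)
print([ch.receive() for _ in range(len(ch))])  # ['alarm', 'frame-1', 'frame-2']
```

The sequence counter is needed because `heapq` alone does not guarantee that messages of equal priority come out in the order they were sent.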

AI Module (AIM) receives data, performs a well-defined function and produces data. It has the following features:

- Communicates with other components via ports or events.

- Can incorporate other AIMs within it.

- Can register and deregister dynamically.

- Can run locally or on different platforms, e.g., in the cloud or on swarms of drones, and communicate with a remote controller.
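The AIM concept, including an AIM that incorporates other AIMs, can be sketched as follows. The classes are hypothetical, and the composite simply chains its inner AIMs in sequence, which is only one of the ways an AIM could be built from others.

```python
from typing import Any, Callable


class AIM:
    """A module with one well-defined function from input data to output data."""

    def __init__(self, name: str, fn: Callable[[Any], Any]) -> None:
        self.name = name
        self.fn = fn

    def process(self, data: Any) -> Any:
        return self.fn(data)


class CompositeAIM(AIM):
    """An AIM that incorporates other AIMs, here simply run one after another."""

    def __init__(self, name: str, parts: list[AIM]) -> None:
        self.parts = parts
        super().__init__(name, self._run_parts)

    def _run_parts(self, data: Any) -> Any:
        for part in self.parts:
            data = part.process(data)  # each inner AIM feeds the next
        return data


tokenize = AIM("Tokenize", str.split)  # hypothetical toy AIMs
count = AIM("Count", len)
word_counter = CompositeAIM("WordCounter", [tokenize, count])
print(word_counter.process("join MPAI share the fun"))  # 5
```

Because `CompositeAIM` exposes the same `process` interface as any other AIM, the rest of the system does not need to know whether a module is atomic or built from other modules.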

AI Workflow (AIW) is a structured aggregation of AIMs receiving and processing data according to a function determined by a use case and producing the required data.
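An AIW can be sketched as a graph of AIMs executed in dependency order, with each AIM receiving the outputs of the AIMs (or external inputs) that feed it. This sketch assumes a directed acyclic graph and uses Python's standard `graphlib` module (Python 3.9+); the function name, AIM names and toy functions are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Any, Callable


def run_aiw(aims: dict[str, Callable[..., Any]],
            inputs_of: dict[str, list[str]],
            source_data: dict[str, Any]) -> dict[str, Any]:
    """Run AIMs in dependency order; each AIM receives its predecessors' outputs.

    aims:        AIM name -> function implementing it
    inputs_of:   AIM name -> names of the AIMs or external inputs feeding it
    source_data: values of the AIW's external inputs
    """
    results = dict(source_data)
    graph = {name: inputs_of.get(name, []) for name in aims}
    for name in TopologicalSorter(graph).static_order():
        if name in aims:  # external inputs also appear in the order but are not AIMs
            args = [results[src] for src in graph[name]]
            results[name] = aims[name](*args)
    return results


aims = {
    "Tokenize": str.split,
    "WordCount": len,
    "Shout": str.upper,
    "Report": lambda n, loud: f"{n} words: {loud}",
}
inputs_of = {
    "Tokenize": ["text"],
    "WordCount": ["Tokenize"],
    "Shout": ["text"],
    "Report": ["WordCount", "Shout"],
}
out = run_aiw(aims, inputs_of, {"text": "share the fun"})
print(out["Report"])  # 3 words: SHARE THE FUN
```

Describing the workflow as data (which AIM feeds which) rather than hard-coding the calls is what allows AIMs from different implementers to be swapped in without rewriting the workflow logic.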

Shared Storage stores data, making it available to other AIMs.

AIM Storage stores the data of individual AIMs.

User Agent interfaces the user with an AIF via the controller.

Access offers access to static or slow-varying data that are required by the AIM, such as domain knowledge data, data models, etc.

MPAI Store stores implementations and makes them available to users.

MPAI-AIF is an MPAI standard that can be freely downloaded from the MPAI website. An open-source implementation of MPAI-AIF will be available shortly.

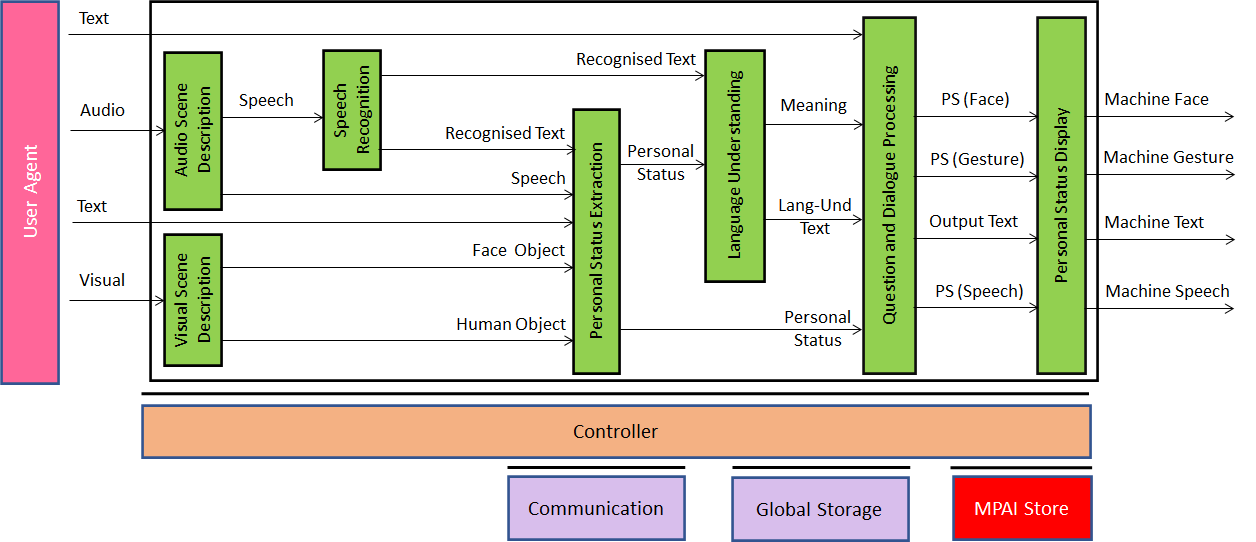

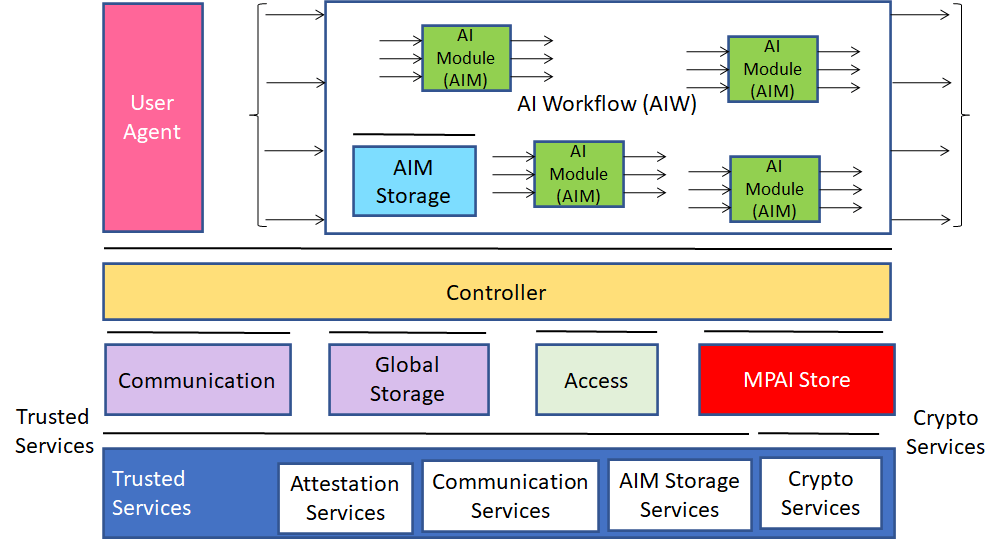

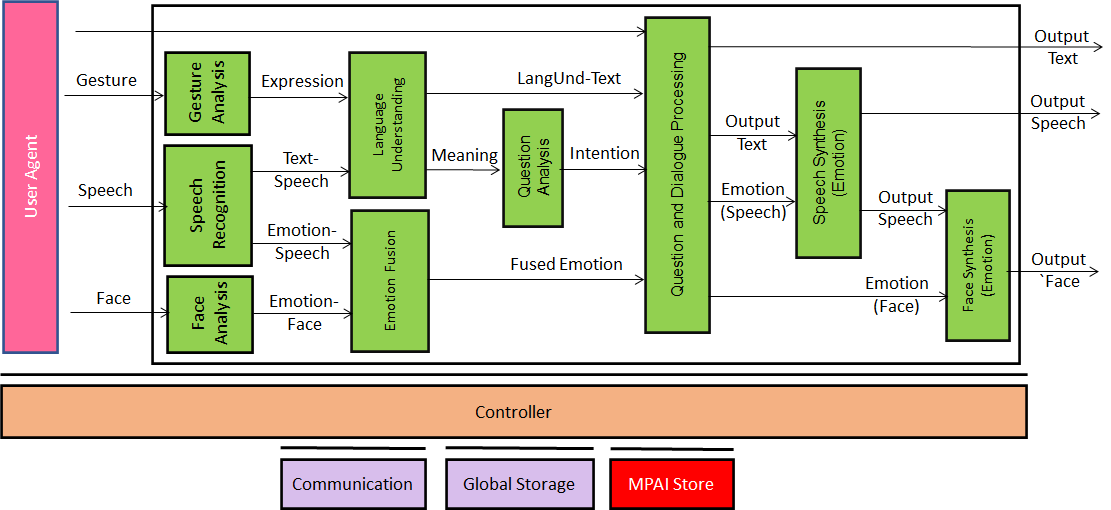

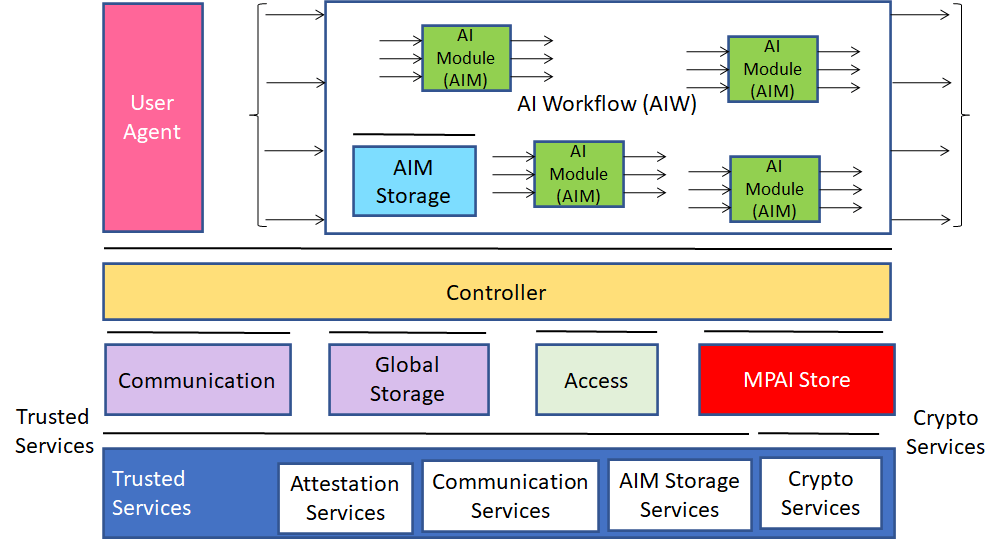

MPAI-AIF is important because it lays the foundation on which other MPAI application standards can be implemented. The description given above therefore marks not the conclusion of MPAI-AIF but only its beginning. In fact, work is underway to provide MPAI-AIF with security support, with a reference model that extends the one in Figure 1.

Figure 2 – Reference model of MPAI-AIF with security support

MPAI will shortly publish a Call for Technologies. In particular, the Call will request API proposals to access Trusted Services and Crypto Services.

We started by extolling the advantages of AI and lamenting the high costs of using the technology. How can MPAI-AIF lower those costs and increase the benefits of AI? The answer lies in these expected developments:

- AIM implementers will be able to offer their AIMs on an open and competitive market.

- Application developers will be able to find the AIMs they need in the open and competitive market.

- Consumers will enjoy a wide selection of the best AI applications produced by competing application developers based on competing technologies.

- The demand for technologies enabling new and better-performing AIMs will fuel innovation.

- Society will be able to lift the veil of opacity behind which many of today’s monolithic AI-based applications hide.

MPAI develops data coding standards for applications that have AI as the core enabling technology. Any legal entity supporting the MPAI mission may join MPAI, if able to contribute to the development of standards for the efficient use of data.

Visit the MPAI website, contact the MPAI secretariat for specific information, subscribe to the MPAI Newsletter and follow MPAI on social media: LinkedIn, Twitter, Facebook, Instagram, and YouTube.

Most importantly: join MPAI – share the fun – build the future.