MPAI kicks off three new standard projects approving Use Cases and Functional Requirements

MPAI has completed the identification of the functional requirements of the technologies needed to support new Use Cases in three standards:

- AI Framework (MPAI-AIF) Version 2 to support secure execution of AI applications.

- Multimodal Conversation (MPAI-MMC) Version 2, to support enhanced conversation between humans and machines represented by avatars.

- Neural Network Watermarking (MPAI-NNW) to enable watermarking technology providers to qualify their products.

MPAI targets the next General Assembly to be held on the 19th of July, the 2nd anniversary of the launch of the MPAI idea, to publish the three corresponding Calls for Technologies.

MPAI will hold four online events to present and discuss the Use Cases and Functional Requirements on the following dates:

| Title | Acronym | July | Time | Note |
|---|---|---|---|---|
| AI Framework V2 | MPAI-AIF | 11 | 15:00 UTC | Register |
| Multimodal Conversation V2 | MPAI-MMC | 07 | 14:00 UTC | Register |
| Multimodal Conversation V2 | MPAI-MMC | 12 | 14:00 UTC | Register |
| Neural Network Watermarking | MPAI-NNW | 12 | 15:00 UTC | Register |

MPAI Principal Members are now developing the Framework Licences for the three standards. The Framework Licences are licences without critical data such as cost, dates, rates etc., and have been devised by MPAI to facilitate the practical availability of approved standards (see here for an example).

Responses to the Calls for Technologies are expected to be received by the 10th of October 2022.

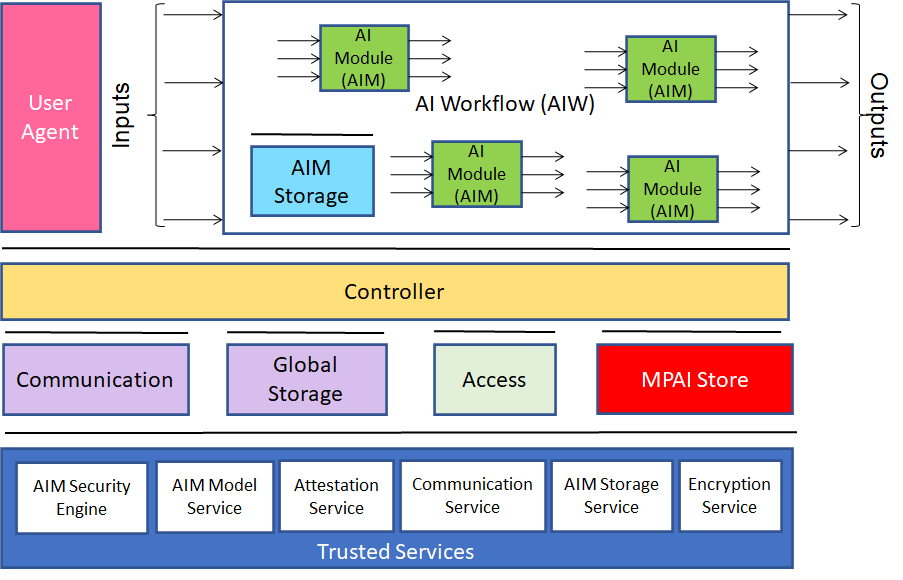

MPAI application standards are implemented as aggregations of AI Modules (AIM) called AI Workflows (AIW) and are executed in the MPAI-specified AI Framework (AIF).

Version 1 of the AI Framework (MPAI-AIF) standard specifies the AIF architecture, the AIF, AIW and AIM metadata that describe their capabilities, and the APIs. An open-source MPAI-AIF implementation is now available.
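
The relationship between AIMs and AIWs can be pictured with a small sketch. The class names, fields and toy modules below are hypothetical and chosen only for illustration; they are not the MPAI-AIF APIs or metadata format, which are defined in the standard itself.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class AIModule:
    """Hypothetical AIM: a named processing step with declared inputs and outputs."""
    name: str
    inputs: List[str]
    outputs: List[str]
    run: Callable[[Dict[str, object]], Dict[str, object]]


@dataclass
class AIWorkflow:
    """Hypothetical AIW: an ordered aggregation of AIMs sharing named data channels."""
    name: str
    modules: List[AIModule] = field(default_factory=list)

    def execute(self, data: Dict[str, object]) -> Dict[str, object]:
        channels = dict(data)
        for aim in self.modules:
            # Each AIM only sees the channels it declared as inputs.
            missing = [key for key in aim.inputs if key not in channels]
            if missing:
                raise KeyError(f"{aim.name} is missing inputs: {missing}")
            result = aim.run({key: channels[key] for key in aim.inputs})
            # Only the declared outputs are published to later AIMs.
            channels.update({key: result[key] for key in aim.outputs})
        return channels


# Toy modules standing in for real AI components (assumptions, not MPAI AIMs).
recognise = AIModule("SpeechRecognition", ["speech"], ["text"],
                     lambda d: {"text": str(d["speech"]).lower()})
answer = AIModule("Answering", ["text"], ["reply"],
                  lambda d: {"reply": f"You said: {d['text']}"})

workflow = AIWorkflow("ToyConversation", [recognise, answer])
result = workflow.execute({"speech": "HELLO"})
print(result["reply"])  # You said: hello
```

In this sketch the declared inputs and outputs play the role that metadata plays in the standard: they let the workflow check that modules can be connected before running them.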

AI Framework (MPAI-AIF) Version 2 intends to provide the AIF components with the ability to call the security APIs of Figure 1 such as attestation service and secure storage service. MPAI-AIF V2 will help implementers to develop applications that meet their specific security requirements.

Figure 1 – MPAI-AIF V2 Reference Model

Those interested in the MPAI-AIF V2 Call for Technologies can register to attend the online meeting on 11 July at 15:00 UTC. The event will offer an opportunity for those attending to discuss the identified functional requirements with MPAI. MPAI wishes to receive as many responses as possible so that a robust MPAI-AIF standard can be developed.

In the meantime, please review MPAI-AIF V2 Use Cases and Functional Requirements and visit the MPAI-AIF website for an introduction.

The technologies specified by the Multimodal Conversation standard MPAI-MMC V1 enable 5 important use cases: 1) Conversation with Emotion, where a user converses with a machine that can extract the human’s emotion from their speech and face and display itself as a face-only avatar with emotion; 2) Multimodal Question-Answering, where a human asks questions about an object held in their hand to a machine manifesting itself as a synthetic voice; and 3) the Automatic Speech Translation use cases, where a machine translates speech in one language to speech in specified languages while preserving the speech features of the speaker.

With Multimodal Conversation (MPAI-MMC) V2, MPAI substantially enlarges its technology repository to support 5 new ambitious use cases.

- Personal Status Extraction (PSE) targets a set of enabling technologies extending the Conversation with Emotion use case. A machine extracts the human’s Personal Status (PS) from their text, speech, face, and gesture. PS includes Emotion, Cognitive Status (i.e., the human’s comprehension of the Environment, such as “Confused” or “Convinced”), and Attitude (i.e., the human’s positioning vis-à-vis the Environment, such as “Confrontational” or “Respectful”).

- Personal Status Display (PSD), too, targets a set of enabling technologies extending the Conversation with Emotion use case. Given a text and the Personal Statuses shown by speech, face and gesture, PSD generates an avatar uttering the words of the text conveying the desired PS while the face and gesture also display the desired PS.

- Conversation about a Scene (CAS) extends both the Conversation with Emotion and Multimodal Question-Answering use cases of V1. The machine uses PSE to extract the human’s PS to improve understanding of what object the human refers to from their gesture, and uses PSD to manifest itself.

- Human and Connected Autonomous Vehicle (CAV) Interaction (HCI) enables basic forms of conversation between humans and a CAV: when a group of humans approach a CAV asking to be let in and when humans converse in the cabin with the CAV joining in the conversation. PSE and PSD are again basic technologies for interaction.

- In the Avatar-Based Videoconference (ABV) use case, (groups of) humans from different locations participate in a virtual videoconference through their avatars that accurately represent them. ABV relies on a server to authenticate participants and translate their utterances to specified languages. A Virtual Secretary summarises the proceedings of the meeting and processes the participants’ requests to change the summary. An ABV client creating an avatar uses PSE to improve the accuracy of the avatar’s descriptors, and the Virtual Secretary uses PSE to improve its understanding of the avatars’ PS, and uses PSD to manifest itself.
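
The Personal Status described in the PSE item above can be pictured as a small record with three factors, estimated per modality and then combined. The sketch below is an assumption made for illustration: the class, the majority-vote fusion and the emotion labels "Angry" and "Neutral" are hypothetical and are not taken from the MPAI-MMC requirements, which leave the actual technologies to the responses to the Call.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class PersonalStatus:
    """The three PS factors named in the text; labels are free-form strings here."""
    emotion: str
    cognitive_status: str
    attitude: str


def fuse(per_modality: Dict[str, PersonalStatus]) -> PersonalStatus:
    """Combine per-modality estimates by simple majority vote (illustrative only)."""
    def vote(attribute: str) -> str:
        counts = Counter(getattr(ps, attribute) for ps in per_modality.values())
        return counts.most_common(1)[0][0]

    return PersonalStatus(vote("emotion"), vote("cognitive_status"), vote("attitude"))


# Hypothetical estimates from the four modalities listed for PSE.
estimates = {
    "text": PersonalStatus("Neutral", "Confused", "Respectful"),
    "speech": PersonalStatus("Angry", "Confused", "Confrontational"),
    "face": PersonalStatus("Angry", "Convinced", "Confrontational"),
    "gesture": PersonalStatus("Angry", "Confused", "Confrontational"),
}

fused = fuse(estimates)
print(fused)
```

Majority voting is only one possible way to combine modalities; it is used here because it keeps the example short, not because the use case prescribes it.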

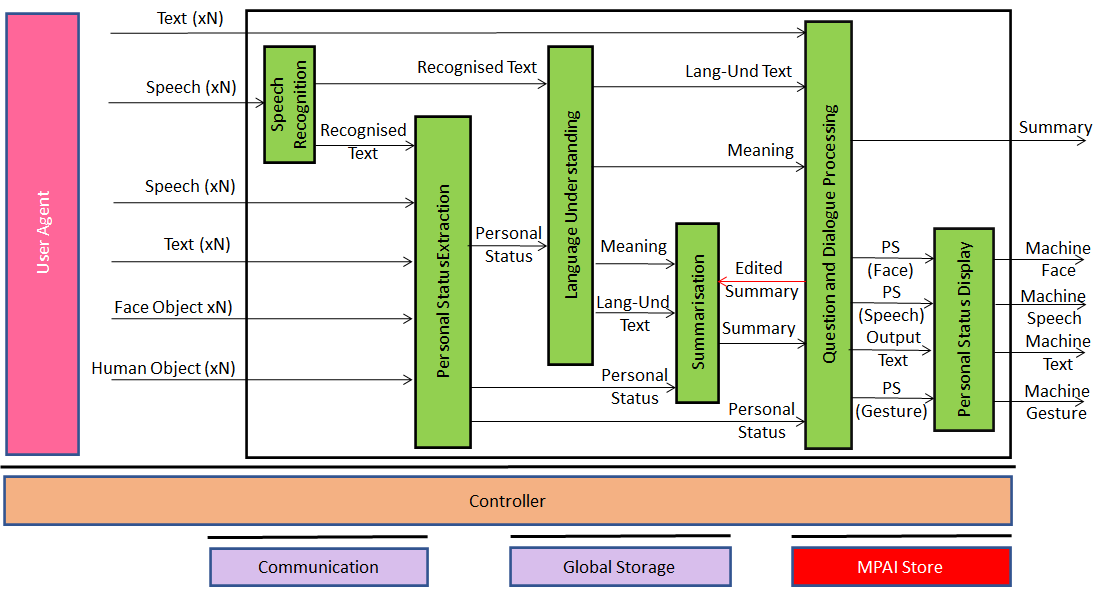

Figure 2 depicts the architecture of the Virtual Secretary. Speech, Text, Face Object, and Human Object refer to the avatars at the meeting that the Virtual Secretary interacts with. The Virtual Secretary need not process the avatars to extract that data because the avatar descriptors are already available. The PSE module extracts the PS of a particular avatar and uses it to better interpret the utterances of that avatar and improve the accuracy of the summary. Avatars can make comments via chat about the summary and the Question and Dialogue Processing Module can feed back a revised version of the summary. Finally, the Virtual Secretary manifests itself as an avatar generated by the Personal Status Display Module.

Figure 2 – Virtual Secretary Reference Model

Those interested in the MPAI-MMC V2 Call for Technologies can register to attend the first online meeting on 7 July at 14:00 UTC and the second meeting on 12 July at 14:00 UTC.

Two sessions are dedicated to MPAI-MMC because of the number of the use cases and the scope of the supporting technologies.

The events will offer an opportunity for those attending to discuss the identified functional requirements with MPAI. MPAI wishes to receive as many responses as possible so that a robust MPAI-MMC standard can be developed.

In the meantime, please review MPAI-MMC V2 Use Cases and Functional Requirements and visit the MPAI-MMC website for an introduction.

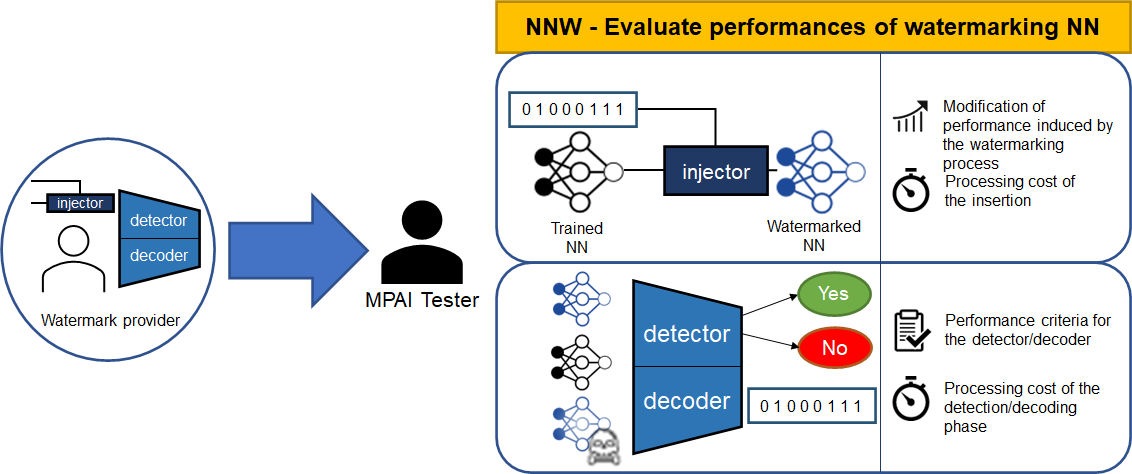

Digital watermarking indicates a class of technologies that can be used to insert a data payload into other data for a variety of purposes, such as to identify the data or its ownership. Watermarking can be applied to neural networks for similar purposes.

With its Neural Network Watermarking (MPAI-NNW) standard, MPAI does not intend to develop a standard technology to inject a data payload into a neural network via a watermark, but rather to give a Neural Network Watermarking tester the means to measure the suitability of:

- A watermark inserter to inject a payload without deteriorating the NN performance (changing the parameters of a network deteriorates the performance of the network).

- A watermark detector to recognise the presence of the inserted watermark, and a watermark decoder to successfully retrieve its payload.

- A watermark inserter to inject a payload and a watermark detector/decoder to detect/decode a payload from a watermarked model or from any of its inferences at a measured computational cost.

Of course, the measurement shall be done for a specific size of the watermark payload.
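
The three kinds of measurement listed above can be illustrated with a minimal sketch. The function names, the use of accuracy as the performance figure, the bit error rate as the decoding measure and wall-clock time as the cost measure are all assumptions made for this example; they are not the evaluation methodology of MPAI-NNW.

```python
import time
from typing import Callable, Sequence, Tuple


def performance_drop(baseline_accuracy: float, watermarked_accuracy: float) -> float:
    """How much the network's accuracy deteriorates after watermark insertion."""
    return baseline_accuracy - watermarked_accuracy


def bit_error_rate(inserted: Sequence[int], decoded: Sequence[int]) -> float:
    """Fraction of payload bits that the decoder retrieved incorrectly."""
    if not inserted or len(inserted) != len(decoded):
        raise ValueError("payloads must be non-empty and of equal size")
    errors = sum(a != b for a, b in zip(inserted, decoded))
    return errors / len(inserted)


def timed(fn: Callable, *args) -> Tuple[object, float]:
    """Run fn and return its result with the elapsed wall-clock seconds."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


# Hypothetical 8-bit payload and a decoder output with one wrong bit.
payload = [1, 0, 1, 1, 0, 0, 1, 0]
decoded = [1, 0, 1, 0, 0, 0, 1, 0]

ber, seconds = timed(bit_error_rate, payload, decoded)
print(ber)  # 0.125
print(performance_drop(0.912, 0.905))
```

Because the results depend on the payload size, each figure would be reported together with the number of payload bits used, 8 in this toy example.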

Figure 3 depicts the scope of the MPAI-NNW standard.

Figure 3 – The problem addressed by MPAI-NNW

Those interested in the MPAI-NNW Call for Technologies can register to attend the online meeting on 12 July at 15:00 UTC. The event will offer an opportunity for those attending to discuss the identified functional requirements with MPAI. MPAI wishes to receive as many responses as possible so that a robust MPAI-NNW standard can be developed.

In the meantime, please review MPAI-NNW Use Cases and Functional Requirements and visit the MPAI-NNW website for an introduction.

Meetings in the coming June-July meeting cycle

| Group name | 27 Jun-01 Jul | 04-08 Jul | 11-15 Jul | 18-22 Jul | Time (UTC) |
|---|---|---|---|---|---|
| AI Framework | 27 | 4 | 11 | 18 | 15 |
| Governance of MPAI Ecosystem | | | | 18 | 14 |
| Governance of MPAI Ecosystem | 27 | 4 | 11 | | 16 |
| XR Venues | 27 | 4 | 11 | 18 | 17 |
| Multimodal Conversation | 28 | 5-7 | 12 | 19 | 14 |
| Neural Network Watermarking | | | 12 | 19 | 13 |
| Neural Network Watermarking | 28 | 5 | | | |
| Context-based Audio Enhancement | 28 | 5 | 12 | 18 | 16 |
| Connected Autonomous Vehicles | 29 | 6 | 13 | 19 | 12 |
| AI-Enhanced Video Coding | | | | 18 | 13 |
| AI-Enhanced Video Coding | | 6 | | | 14 |
| AI-based End-to-End Video Coding | 29 | | 13 | | 14 |
| Avatar Representation and Animation | | 7 | | | 13 |
| Avatar Representation and Animation | 30 | | 14 | | 13:30 |
| Server-based Predictive Multiplayer Gaming | 30 | | 14 | | 14:30 |
| Communication | 30 | 7 | 14 | | 15 |
| Artificial Intelligence for Health Data | 1 | | 15 | | 14 |
| Industry and Standards | 1 | | 15 | | 16 |
| General Assembly (MPAI-22) | | | | 19 | 15 |

This newsletter serves the purpose of keeping the expanding and diverse MPAI community connected.

We are keen to hear from you, so don’t hesitate to give us your feedback.