Highlights

- MPAI publishes new versions of two flagship standards – Connected Autonomous Vehicle and MPAI Metaverse Model

- MPAI and AI Agents – understanding the world

- An improved Company Performance Prediction (CUI-CPP) standard

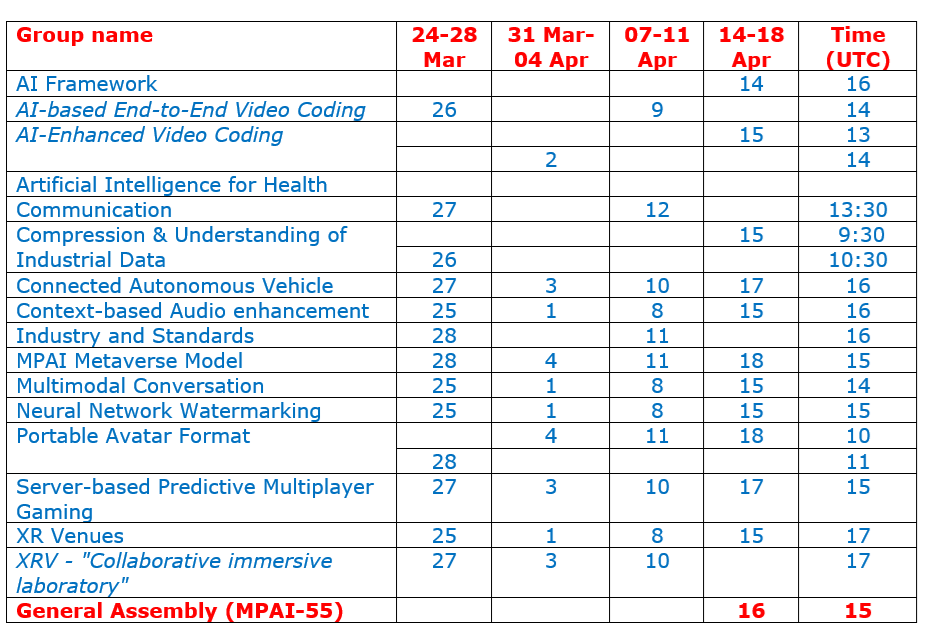

- Meetings in the coming March/April 2025 meeting cycle

MPAI publishes new versions of two flagship standards – Connected Autonomous Vehicle and MPAI Metaverse Model

Connected Autonomous Vehicle (MPAI-CAV) was one of the earliest MPAI projects. Its goal was to use the MPAI AI Framework infrastructure to bring componentisation back, in the AI age, to an automotive industry that seems to have lost interest in the component model that supported its development for many decades.

In 2024, MPAI published the CAV Architecture standard (CAV-ARC) and issued a Call for Technologies for the next phase. The intense work that followed has now borne fruit. The 54th General Assembly (MPAI-54) has published Technical Specification: Connected Autonomous Vehicle (MPAI-CAV) – Technologies (CAV-TEC) V1.0 with a request for Community Comments. The next issue of this newsletter will say more about its content and announce a public online presentation.

MPAI-54 also decided to publish Technical Specification: MPAI Metaverse Model (MPAI-MMM) – Technologies (MMM-TEC) V2.0 with a request for Community Comments. MPAI started working on this project much later than on MPAI-CAV but published two Technical Reports in 2023, two versions of the Architecture standard (MMM-ARC) in 2023-24, and one version of the Technologies standard (MMM-TEC) in 2024. The newly published MMM-TEC V2.0 will be introduced in the next issue of this newsletter together with an announcement of a public online presentation.

In general, MPAI standards normatively reference other MPAI standards, and CAV and MMM are no exception. These new versions rely on the extended versions of three standards: Object and Scene Description (MPAI-OSD) V1.3, Portable Avatar Format (MPAI-PAF) V1.4, and Data Types, Formats, and Attributes (MPAI-TFA) V1.3, which are also published for Community Comments.

Here are the links to these new versions:

- CAV-TEC V1.0 https://mpai.community/standards/mpai-cav/tec/v1-0/

- MMM-TEC V2.0 https://mpai.community/standards/mpai-mmm/tec/v2-0/

- MPAI-OSD V1.3 https://mpai.community/standards/mpai-osd/v1-3/

- MPAI-PAF V1.4 https://mpai.community/standards/mpai-paf/v1-4/

- MPAI-TFA V1.3 https://mpai.community/standards/mpai-tfa/v1-3/

- Comments should be sent to the MPAI Secretariat by 2025/04/13T23:53 UTC.

MPAI and AI Agents – understanding the world

In the last newsletter we started an analysis of the AI Modules specified by MPAI and how they fit into the current industry interest in “Agentic AI”, which MPAI calls Perceptive and Agentive AI (PAAI).

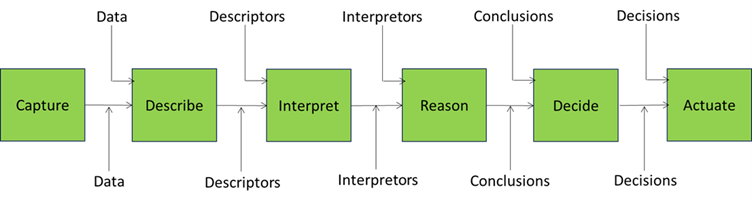

A brief recap: PAAIs are defined as systems able to:

1. Capture data from universe, metaverse, or machines.

2. Describe data, possibly integrating descriptions from other machines or PAAIs.

3. Interpret descriptions, possibly integrating interpretations from other machines or PAAIs.

4. Communicate with other PAAIs.

5. Reason about interpretations.

6. Decide on an action, possibly in consultation with humans or other PAAIs.

7. Explain the path that led the PAAI to reach a decision.

8. Actuate decisions in the universe or metaverse.

9. Store and retrieve experiences.

10. Learn or acquire knowledge.

The analysis in the last newsletter reached the 5th feature. This newsletter completes the analysis, starting with Figure 1, which represents the “core” function of a PAAI and shows how external components or other PAAIs can participate in or contribute to the workflow.

Figure 1 – A PAAI workflow

The 6th feature is “decide on an action”. In most MPAI AI Workflows, the decision to take an action is an integral part of the reasoning process. For instance, the Entity Dialogue Processing AI Module does not state what Conclusions it reached about the input data; it simply provides Text, sometimes complemented by Personal Status. In deciding on an action, a PAAI may act autonomously, receive contributions from another PAAI or a human, or be directed by one.

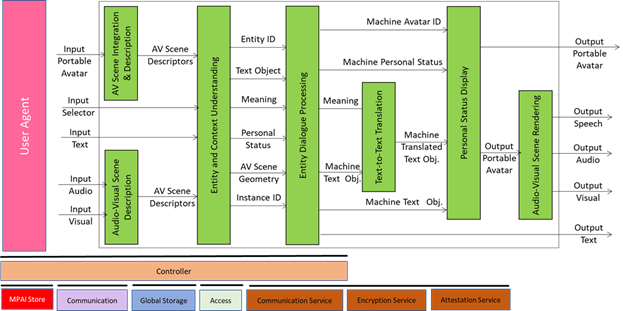

For instance, a Communicating Entities in Context machine may decide to produce a Portable Avatar or human-perceptible media (output at the right-hand side of Figure 2) after receiving a Portable Avatar or capturing human-perceptible media (input at the left-hand side of Figure 2). Similarly, a passenger may request a CAV to display how it sees the world around it (the so-called Full Environment Descriptors).

Figure 2 – Human and Machine Communication (MPAI-HMC) V2.0 Reference Model

The 7th feature is about explaining why the PAAI has decided on a particular action. So far, MPAI has not developed this feature in any of its standards, but it plans on doing so at least for the Company Performance Prediction (CUI-CPP), as described in the next article in this newsletter.

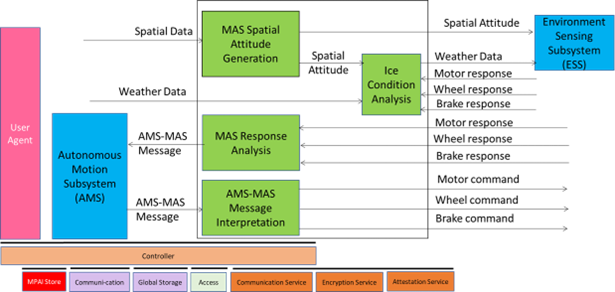

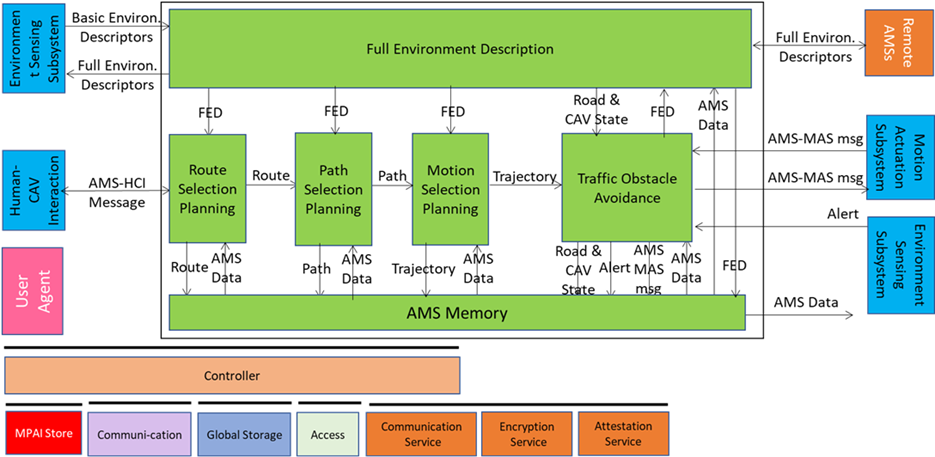

Unlike the 7th feature, the 8th one – “actuate decisions in the universe or metaverse” – is well supported by MPAI standards. Let’s take two use cases. The first is the Motion Actuation Subsystem (CAV-MAS) of the Connected Autonomous Vehicle (MPAI-CAV) depicted in Figure 3.

Figure 3 – Motion Actuation Subsystem (CAV-MAS) Reference Model

In the MPAI-CAV standard, the Autonomous Motion Subsystem (CAV-AMS) receives an initial description of the external environment from the Environment Sensing Subsystem (CAV-ESS), exchanges portions of that description with other CAVs, makes a final decision about the environment, and decides the next step. It then sends a message to the Motion Actuation Subsystem asking it to move the CAV to a Position and Orientation with their velocities and accelerations (this set of data is called Spatial Attitude). The MAS, which plays the “decision actuation” role, splits the request into three components: one for the Brakes, one for the Motors, and one for the Steering Wheel(s).
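The split performed by the MAS can be pictured with a minimal Python sketch. It is an illustration only: the field names, the `split_request` function, the `max_accel` parameter, and the toy rule that maps acceleration to brake or motor effort are all assumptions made for this example and are not taken from the CAV-TEC specification.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class SpatialAttitude:
    """Position and Orientation with their velocities and accelerations.

    Field names are hypothetical, chosen for this sketch.
    """
    position: Vec3
    orientation: Vec3
    position_velocity: Vec3
    orientation_velocity: Vec3
    position_acceleration: Vec3
    orientation_acceleration: Vec3


@dataclass(frozen=True)
class ActuatorCommands:
    """One component per actuator; units and ranges are assumptions."""
    brakes: float    # normalised brake effort in [0, 1]
    motors: float    # normalised drive effort in [0, 1]
    steering: float  # requested yaw rate


def split_request(target: SpatialAttitude, max_accel: float = 3.0) -> ActuatorCommands:
    """Toy rule, not the standard's: slow down with the Brakes, speed up
    with the Motors, and pass the yaw rate to the Steering Wheel(s)."""
    longitudinal = target.position_acceleration[0]
    effort = min(abs(longitudinal) / max_accel, 1.0)
    return ActuatorCommands(
        brakes=effort if longitudinal < 0 else 0.0,
        motors=effort if longitudinal > 0 else 0.0,
        steering=target.orientation_velocity[2],
    )


zero: Vec3 = (0.0, 0.0, 0.0)
request = SpatialAttitude(
    position=(10.0, 0.0, 0.0),
    orientation=zero,
    position_velocity=(5.0, 0.0, 0.0),
    orientation_velocity=(0.0, 0.0, 0.1),
    position_acceleration=(-1.5, 0.0, 0.0),
    orientation_acceleration=zero,
)
commands = split_request(request)
```

A real MAS would rely on a vehicle model and on feedback from the actuators; the point of the sketch is only that a single Spatial Attitude request fans out into three separate commands.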

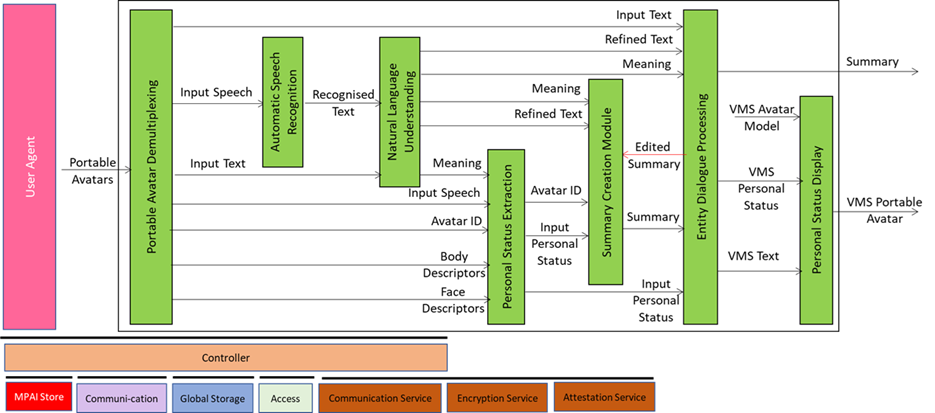

The second use case is the Virtual Meeting Secretary (MMC-VMS) depicted in Figure 4.

Figure 4 – Virtual Meeting Secretary (MMC-VMS) Reference Model

The Virtual Meeting Secretary perceives the avatars in the virtual meeting room, understands what they say and their Personal Statuses, produces an extended summary of what is being said, decides on a textual response and Personal Status, produces a Portable Avatar integrating all relevant elements of itself, and forwards its Portable Avatar to the Avatar Videoconference Server.

The 9th feature is “store and retrieve experiences”. This means that a PAAI may set aside important elements of its current experience for later use while performing its activities. This feature is already present in some profiles and is being developed for the CAV Autonomous Motion Subsystem as depicted in Figure 5.

Figure 5 – The CAV Autonomous Motion Subsystem Reference Model

The AMS Memory AIM stores relevant data that can be retrieved to face future situations that resemble some of those encountered in the past. This is a more limited functionality compared to the one addressed by the 10th feature “learn or acquire knowledge” where data encountered during the PAAI activity are used to train some of the PAAI components.
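The “store and retrieve” behaviour can be sketched in a few lines of Python. This is a rough illustration under stated assumptions: the `ExperienceMemory` class, the representation of a situation as a vector of numbers, and the use of Euclidean distance to judge resemblance are all hypothetical and are not how the AMS Memory AIM is specified.

```python
import math
from dataclasses import dataclass, field
from typing import Any, List, Optional, Sequence, Tuple


@dataclass
class ExperienceMemory:
    """Hypothetical store of (situation, data) pairs."""
    _items: List[Tuple[Tuple[float, ...], Any]] = field(default_factory=list)

    def store(self, situation: Sequence[float], data: Any) -> None:
        """Set aside data together with the situation in which it arose."""
        self._items.append((tuple(situation), data))

    def retrieve(self, situation: Sequence[float], max_distance: float) -> Optional[Any]:
        """Return the data of the closest stored situation, or None if no
        stored situation lies within max_distance of the current one."""
        query = tuple(situation)
        best, best_distance = None, max_distance
        for stored, data in self._items:
            distance = math.dist(stored, query)
            if distance <= best_distance:
                best, best_distance = data, distance
        return best


memory = ExperienceMemory()
memory.store([0.0, 0.0], "dry road, light traffic")
memory.store([10.0, 10.0], "wet road, heavy traffic")
recalled = memory.retrieve([9.0, 9.0], max_distance=5.0)
```

Nothing is trained here: the memory only returns what was stored, which is the difference between the 9th and the 10th feature.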

An improved Company Performance Prediction (CUI-CPP) standard

In past civilisations – but sometimes also in ours – predicting the future was a widespread practice. In ancient Rome, haruspices practised a form of divination based on the inspection of entrails. In more recent centuries, army leaders consulted a fortune teller before a battle.

Leading a company has similarities with leading an army. Today, however, not many company leaders (or at least I hope so) call a fortune teller before making major decisions.

Artificial Intelligence can play the fortune teller’s role, not because it uses digital tarot cards, but because, having learnt from the history of similar situations, it can make valid predictions.

MPAI-53 has kicked off the Company Performance Prediction (CUI-CPP) V2.0 project. The goal is to develop a standard enabling companies, investors, banks, etc. to assess the prospects (“tell the fortune”) of a company.

The current idea of the standard is to start from certain types of data:

- Prediction horizon, i.e., the future time for which a prediction is requested, say, 3 years.

- Governance data, i.e., company-provided data about the company structure and management.

- Financial data, i.e., company-provided financial statements.

- Risk Data, i.e., risk data that rely on extensive data from different parties.

- Risk Assessment, i.e., company-provided risk data.

CUI-CPP V2.0 will provide JSON Schemas for all these data types. These will be input for the prediction system.

As risks will be the most relevant part of the standard, a taxonomy is required. Risks are divided into three main classes: Business, Climate, and Cyber. Each class is subdivided into categories. For instance, the Finance category in the Business class includes Exchange rate risk, Interest rate risk, Credit from banks, Fluctuation in raw material prices, and Credit from third parties.

Each Primary Risk is planned to be described with the following set of data:

- Risk type

- Risk name

- Regulation addressing the risk

- A vector of inputs.

The vector of inputs is risk-specific. For instance, a Denial-of-Service attack is described by the following:

Input name: Attacker

- Time: time the attack was started or detected.

- Source: provider of input vector or external source.

- Type: the IP address.

- IP address: IP address of source of attack.

while the rainfall risk is described by the following:

Input name: GNSS Position

- Time: time of prediction.

- Source: ID of prediction requester.

- Type: the GNSS coordinates.

- Value of position: according to selected GNSS standard.
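The two examples above can be written down as data. The Python sketch below is only an illustration: CUI-CPP V2.0 is expected to define its own JSON Schemas, so the class names, the field names, and the generic `value` field are assumptions, as are the placeholder values. Assigning the two risks to the Cyber and Climate classes is also an inference from the taxonomy, and the regulation is left unset because none is named here.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class RiskInput:
    """One element of the risk-specific vector of inputs (hypothetical names)."""
    name: str
    time: str     # e.g. time the attack was started or detected
    source: str   # provider of the input or external source
    type: str     # kind of value carried, e.g. "IP address"
    value: str    # the value itself


@dataclass
class PrimaryRisk:
    """Risk type, risk name, regulation addressing the risk, vector of inputs."""
    risk_type: str
    risk_name: str
    regulation: Optional[str] = None
    inputs: List[RiskInput] = field(default_factory=list)


# All values below are placeholders invented for the example.
denial_of_service = PrimaryRisk(
    risk_type="Cyber",
    risk_name="Denial-of-Service attack",
    inputs=[RiskInput(
        name="Attacker",
        time="2025-03-01T10:00:00Z",
        source="input provider",
        type="IP address",
        value="192.0.2.1",  # address from the documentation-only range
    )],
)

rainfall = PrimaryRisk(
    risk_type="Climate",
    risk_name="Rainfall",
    inputs=[RiskInput(
        name="GNSS Position",
        time="2025-03-01T10:00:00Z",
        source="prediction requester ID",
        type="GNSS coordinates",
        value="45.0703,7.6869",
    )],
)

dos_json = json.dumps(asdict(denial_of_service), indent=2)
```

Keeping the input vector as a list of uniform records lets each risk carry as many inputs as it needs without changing the outer structure.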

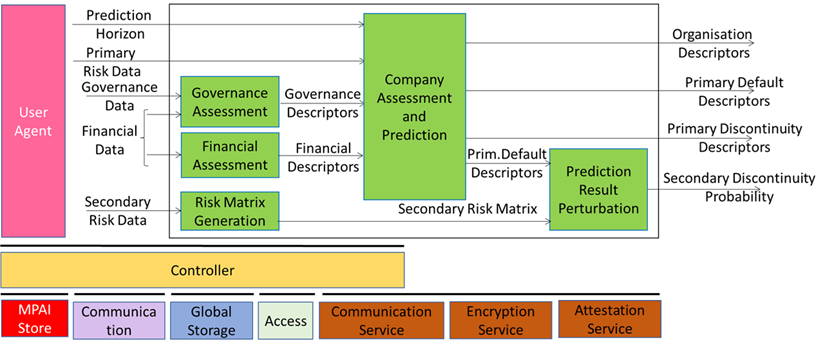

Governance and Financial data are processed to provide Descriptors. The goal is to extract the most relevant information from input data. Descriptors are then fed to a Company Assessment and Prediction (CAP) Model together with Primary Risk data. The CAP Model produces three types of Descriptors: Organisation, Primary Default, and Primary Discontinuity. The first – Organisation Descriptors – include an assessment of the efficacy of company organisation and a vector providing information on which Descriptors have more influence on the overall assessment. The second – Primary Default Descriptors – include the probability of company default and a vector providing information on which Descriptors have more influence on the company default. The third – Primary Discontinuity Descriptors – are structured in a similar way.

There are more risks than those assessed with the procedure above. The project calls them Secondary Risks because no approved AI model exists to make a prediction about them. The project assumes that these are assessed by the Company and converted to a Risk Matrix where the rows are the risks and the columns are the four characteristics: Occurrence, Business impact, Gravity, and Risk Retention (i.e., the portion of risks that the Company decides to retain). The Prediction Result Perturbation receives the Primary Default Descriptors and the Secondary Risk Matrix and produces the Discontinuity Probability caused by the Secondary Risks.
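The shape of the Risk Matrix can be illustrated with a short Python sketch. It shows only the data layout of rows and columns; how the Prediction Result Perturbation uses the matrix is not described here and is not attempted. The class and function names, the risk names, the numeric scales, and the reading of Risk Retention as a fraction between 0 and 1 are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List

COLUMNS = ("Occurrence", "Business impact", "Gravity", "Risk Retention")


@dataclass(frozen=True)
class SecondaryRisk:
    """One row of the Risk Matrix (scales are hypothetical)."""
    name: str
    occurrence: float
    business_impact: float
    gravity: float
    risk_retention: float  # portion of the risk the Company retains

    def __post_init__(self) -> None:
        # Assumption: retention is expressed as a fraction of the risk.
        if not 0.0 <= self.risk_retention <= 1.0:
            raise ValueError("risk_retention must be between 0 and 1")


def to_matrix(risks: List[SecondaryRisk]) -> List[List[float]]:
    """Rows are risks; columns follow the order given in COLUMNS."""
    return [
        [r.occurrence, r.business_impact, r.gravity, r.risk_retention]
        for r in risks
    ]


# Risk names and values are invented placeholders.
risks = [
    SecondaryRisk("Hypothetical risk A", 0.2, 3.0, 2.0, 0.5),
    SecondaryRisk("Hypothetical risk B", 0.05, 5.0, 4.0, 1.0),
]
matrix = to_matrix(risks)
```

Validating each row when it is created keeps malformed company-provided assessments out of the matrix before it reaches any prediction step.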

Figure 6 gives the Reference Model of Company Performance Prediction (CUI-CPP) V2.0.

The standard will specify all the data types indicated in Figure 6. As is the rule for all MPAI standards, CUI-CPP V2.0 will be silent on how the Assessment and Prediction AI Model should be implemented.

Figure 6 – The Reference Model of Company Performance Prediction V2.0

Meetings in the coming March/April 2025 meeting cycle