1 Functions

The Communicating Entities in Context (HMC-CEC) AI Workflow enables Entities to communicate with other Entities, possibly in different Contexts, where:

- Entity refers to one of:

  - A human:

    - In an audio-visual scene, or

    - Represented as a Digitised Human in an Audio-Visual Scene.

  - A Digital Human representing:

    - A human as a Digitised Human in an Audio-Visual Scene, or

    - A Machine as a Virtual Human in an Audio-Visual Scene.

  - A Machine that is not represented.

- Context is information describing the attributes of an Entity, such as language, culture, etc.

Note that a word written in lower case denotes an object in the real world, while the same word capitalised denotes its digital representation in the Virtual World.

Depending on its real or virtual nature, an Entity communicates with another Entity by:

- Using the human’s body, speech, context, and the audio-visual scene the human is immersed in,

- Rendering the Virtual Entity as a speaking humanoid in an audio-visual scene, or

- Emitting Communication Items.

A Communication Item is an implementation of Portable Avatar, a Data Type that includes Data related to an Avatar and its Context and enables a receiver to render the Avatar as intended by the sender.

HMC-CEC assumes that:

- Input/Output Audio is an Audio Object or a Speech Object, and Input/Output Visual is a Visual Object.

- The real space:

  - Is digitally represented as an Audio-Visual Scene that includes the communicating human.

  - May include other humans and generic objects.

- The Virtual Space:

  - Contains a Digital Human and/or its Speech components in an Audio-Visual Scene.

  - May include other Digital Humans and generic Objects.

- The Machine can:

  - Understand the semantics of the Communication Item at different levels of depth, depending on the technologies used by an Implementation.

  - Produce a multimodal response expected to be congruent with the received information.

  - Render the response as a speaking Virtual Human in an Audio-Visual Scene.

  - Convert the Data produced by an Entity into Data whose semantics is compatible with the Context of another Entity.

Note 1: An AI Module is specified only by its Functions and Interfaces. Implementers are free to use their preferred technologies to achieve the expected AIM Functions while respecting the Function and Interface constraints.

Note 2: An Implementation may subdivide a given AIM into more than one AIM, provided that their combined Functions and Interfaces conform with the Interfaces of the corresponding HMC-CEC AIM.

Note 3: An Implementation may combine AIMs into one, provided that the resulting AIM performs the combined Functions and exposes the Interfaces of the combined HMC-CEC AIMs.
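As an illustration of Note 3, the Interface of a combined AIM can be derived from the Receives and Produces entries (Table 3) of the AIMs it replaces. The Python sketch below is hypothetical and not part of MPAI-AIF; `combine_interfaces` is an illustrative name. It assumes that Data flowing between the constituent AIMs no longer has to be received from outside, while all Data they produce stays exposed, because AIMs outside the combination (e.g. Text-to-Text Translation) may still consume it.

```python
# Hypothetical helper, not an MPAI-AIF API: derives the Interface of an AIM
# obtained by combining several HMC-CEC AIMs (Note 3).
Interface = tuple[set[str], set[str]]  # (Receives, Produces)


def combine_interfaces(aims: dict[str, Interface]) -> Interface:
    """Return (receives, produces) for an AIM that combines `aims`."""
    receives = set().union(*(r for r, _ in aims.values()))
    produces = set().union(*(p for _, p in aims.values()))
    # Assumption: Data produced inside the combination need not be received
    # from outside, but everything produced remains exposed.
    return receives - produces, produces


# Receives/Produces transcribed from Table 3.
EDP = ({"Audio-Visual Scene Geometry", "Personal Status", "Entity ID", "Text",
        "Meaning", "Instance Identifier"},
       {"Machine Personal Status", "Machine Avatar ID", "Machine Text Object",
        "Output Text"})
PSD = ({"Machine Personal Status", "Machine Avatar ID", "Machine Text Object"},
       {"Output Portable Avatar"})

receives, produces = combine_interfaces(
    {"Entity Dialogue Processing": EDP, "Personal Status Display": PSD})
```

Combining Entity Dialogue Processing with Personal Status Display yields an AIM that receives exactly what Entity Dialogue Processing receives and produces the union of both outputs.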

2 Reference Model

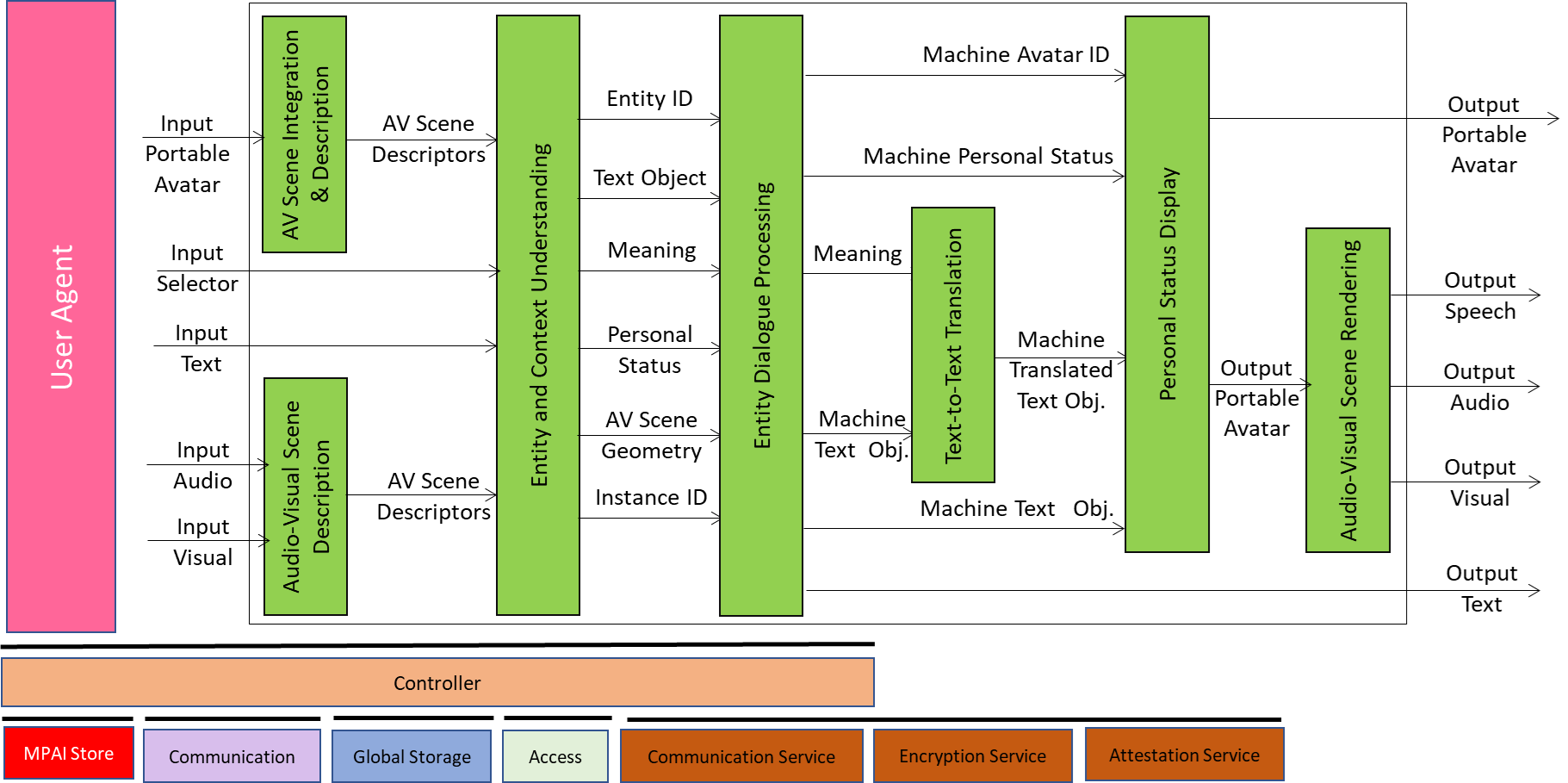

Figure 1 depicts the Reference Model of the Communicating Entities in Context (HMC-CEC) AIW, which includes AI Modules (AIMs) per Technical Specification: AI Framework (MPAI-AIF) V2.1. Three of the seven AIMs in Figure 1 (Audio-Visual Scene Description, Entity and Context Understanding, and Personal Status Display) are Composite AIMs, i.e., AIMs that include interconnected AIMs.

Figure 1 – Communicating Entities in Context (HMC-CEC) AIW

Note that:

- The Input Selector enables an Entity to inform the Machine about the use of media types (Text, Speech, and Visual), Portable Avatar, and Language Preferences.

- The Machine captures the information emitted by an Entity and its Context through Input Text, Input Audio, and Input Visual. In Figure 1, Audio includes Speech.

- The Input Portable Avatar is the Communication Item emitted by a Machine Entity.

- The Audio-Visual Scene Descriptors are digital representations of a real audio-visual scene or of a Virtual Audio-Visual Scene, produced by the Audio-Visual Scene Description AIM or the Audio-Visual Scene Integration and Description AIM, respectively.

- To facilitate identification, AIMs are labelled with three letters identifying the Technical Specification that specifies them, followed by a hyphen “-”, followed by three letters uniquely identifying the AIM within that Technical Specification. For instance, Portable Avatar Demultiplexing is labelled PAF-PDX, where PAF refers to Technical Specification: Portable Avatar Format (MPAI-PAF) and PDX refers to the Portable Avatar Demultiplexing AIM, also specified by MPAI-PAF.
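The labelling convention above is simple enough to check mechanically. The following Python sketch parses and builds labels such as PAF-PDX; the names `AimLabel`, `parse_aim_label`, and `format_aim_label` are hypothetical, and it assumes the letters are uppercase ASCII, as in the example given.

```python
import re
from typing import NamedTuple

# Three uppercase letters, a hyphen, three uppercase letters (e.g. "PAF-PDX").
# Assumption: labels use uppercase ASCII letters only.
_LABEL = re.compile(r"([A-Z]{3})-([A-Z]{3})")


class AimLabel(NamedTuple):
    spec: str  # Technical Specification code, e.g. "PAF"
    aim: str   # AIM code, unique within that specification, e.g. "PDX"


def parse_aim_label(label: str) -> AimLabel:
    """Split an AIM label into its specification and AIM codes."""
    match = _LABEL.fullmatch(label)
    if match is None:
        raise ValueError(f"not a valid AIM label: {label!r}")
    return AimLabel(*match.groups())


def format_aim_label(spec: str, aim: str) -> str:
    """Build an AIM label, rejecting codes that break the convention."""
    label = f"{spec}-{aim}"
    parse_aim_label(label)  # raises ValueError if malformed
    return label
```

For example, `parse_aim_label("PAF-PDX")` returns `AimLabel(spec='PAF', aim='PDX')`, while a label such as `"PAF-PD"` raises `ValueError`.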

3 Input/Output Data

Table 1 gives the Input/Output Data of the HMC-CEC AIW.

Table 1 – Input/Output Data of the HMC-CEC AIW

| Input | Description |
|---|---|
| Portable Avatar | A Communication Item emitted by the Entity communicating with the ego Entity. |
| Input Selector | Selector containing data specifying the media and the language used in the communication. |
| Input Text | Text Object generated by the communicating Entity as information additional to, or in lieu of, a Speech Object. |
| Input Audio | The audio scene captured by the Machine. |
| Input Visual | The visual scene captured by the Machine. |
| **Output** | **Description** |
| Portable Avatar | The Communication Item produced by the Machine. |
| Output Speech | The speech corresponding to the Speech Object in the output Communication Item. |
| Output Audio | The audio corresponding to the Audio Object in the output Communication Item. |
| Output Visual | The visual corresponding to the Visual Object in the output Communication Item. |
| Output Text | The Text contained in a Communication Item or associated with Output Audio and Output Visual. |
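The Input Selector is characterised only by what it conveys: the media types used (Text, Speech, and Visual), the use of Portable Avatar, and Language Preferences. The Python sketch below shows one way a Machine could use such a selector to decide which AIW inputs to process. The class `InputSelector` and its field names are hypothetical and do not reproduce the Selector schema; Speech is mapped to Input Audio because, in Figure 1, Audio includes Speech.

```python
from dataclasses import dataclass, field


@dataclass
class InputSelector:
    # Hypothetical fields; the normative structure is given by the Selector schema.
    text: bool = True
    speech: bool = True
    visual: bool = True
    portable_avatar: bool = False
    languages: list[str] = field(default_factory=lambda: ["en"])

    def active_inputs(self) -> list[str]:
        """Names of the Table 1 inputs the Machine should process."""
        inputs = []
        if self.portable_avatar:
            inputs.append("Portable Avatar")
        if self.text:
            inputs.append("Input Text")
        if self.speech:
            inputs.append("Input Audio")  # Audio includes Speech (Figure 1)
        if self.visual:
            inputs.append("Input Visual")
        return inputs
```

For example, `InputSelector(visual=False).active_inputs()` yields `["Input Text", "Input Audio"]`.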

4 Functions of AI Modules

Table 2 gives the functions of HMC-CEC AIMs.

Table 2 – Functions of AI Modules

| AIM | Functions |
|---|---|
| Audio-Visual Scene Integration and Description | Produces Audio-Visual Scene Descriptors in which the Avatar contained in the Input Portable Avatar has been added to the Audio-Visual Scene. |
| Audio-Visual Scene Description | Provides Audio-Visual Scene Descriptors from Input Audio and Input Visual. |
| Entity and Context Understanding | Provides information on the Entity and its Context. |
| Entity Dialogue Processing | Produces the Text and Personal Status of the Machine in response to the output of Entity and Context Understanding. |
| Text-to-Text Translation | Produces a translation of the Machine Text using Text and Meaning. |
| Personal Status Display | Produces the Output Portable Avatar. |
| Audio-Visual Scene Rendering | Renders the content of the internally generated Portable Avatar. |

5 Input/Output Data of AI Modules

Table 3 gives the I/O Data of the AIMs of HMC-CEC. Note that an ID can either be specified as an Instance Identifier or refer to a generic identifier.

Table 3 – Input/Output Data of AI Modules

| AIM | Receives | Produces |
|---|---|---|
| Audio-Visual Scene Integration and Description | Input Portable Avatar | Audio-Visual Scene Descriptors |
| Audio-Visual Scene Description | Input Audio, Input Visual | Audio-Visual Scene Descriptors |
| Entity and Context Understanding | Audio-Visual Scene Descriptors, Input Text, Input Selector | Audio-Visual Scene Geometry, Personal Status, Entity ID, Text Object, Meaning, Instance Identifier |
| Entity Dialogue Processing | Audio-Visual Scene Geometry, Personal Status, Entity ID, Text, Meaning, Instance Identifier | Machine Personal Status, Machine Avatar ID, Machine Text Object, Output Text |
| Text-to-Text Translation | Machine Text Object, Machine Personal Status | Machine Translated Text Object |
| Personal Status Display | Machine Personal Status, Machine Avatar ID, Machine Text Object | Output Portable Avatar |
| Audio-Visual Scene Rendering | Output Portable Avatar | Output Audio, Output Visual |
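Table 3 implicitly defines the data flow between AIMs: an AIM depends on every AIM that produces a Data item it receives, unless that item is an AIW input. The Python sketch below derives one admissible execution order with the standard-library `graphlib.TopologicalSorter`. It is illustrative only and makes two assumptions: the "Text" received by Entity Dialogue Processing is the "Text Object" produced by Entity and Context Understanding, and the AIW inputs (with Portable Avatar named "Input Portable Avatar") are available from the start. The names `AIMS`, `AIW_INPUTS`, and `execution_order` are hypothetical.

```python
from graphlib import TopologicalSorter

AIW_INPUTS = {"Input Portable Avatar", "Input Selector", "Input Text",
              "Input Audio", "Input Visual"}

# (Receives, Produces) transcribed from Table 3.
# Assumption: "Text" received by Entity Dialogue Processing is "Text Object".
ECU_OUT = {"Audio-Visual Scene Geometry", "Personal Status", "Entity ID",
           "Text Object", "Meaning", "Instance Identifier"}
AIMS = {
    "Audio-Visual Scene Integration and Description":
        ({"Input Portable Avatar"}, {"Audio-Visual Scene Descriptors"}),
    "Audio-Visual Scene Description":
        ({"Input Audio", "Input Visual"}, {"Audio-Visual Scene Descriptors"}),
    "Entity and Context Understanding":
        ({"Audio-Visual Scene Descriptors", "Input Text", "Input Selector"}, ECU_OUT),
    "Entity Dialogue Processing":
        (ECU_OUT, {"Machine Personal Status", "Machine Avatar ID",
                   "Machine Text Object", "Output Text"}),
    "Text-to-Text Translation":
        ({"Machine Text Object", "Machine Personal Status"},
         {"Machine Translated Text Object"}),
    "Personal Status Display":
        ({"Machine Personal Status", "Machine Avatar ID", "Machine Text Object"},
         {"Output Portable Avatar"}),
    "Audio-Visual Scene Rendering":
        ({"Output Portable Avatar"}, {"Output Audio", "Output Visual"}),
}


def execution_order(aims, aiw_inputs):
    """Return AIM names so that every AIM follows the producers of its inputs."""
    producers = {}
    for name, (_, produces) in aims.items():
        for data in produces:
            producers.setdefault(data, set()).add(name)
    graph = {}  # AIM -> set of AIMs it depends on
    for name, (receives, _) in aims.items():
        deps = set()
        for data in receives - aiw_inputs:
            if data not in producers:
                raise ValueError(f"{name}: no producer for {data!r}")
            deps |= producers[data]
        graph[name] = deps
    return list(TopologicalSorter(graph).static_order())
```

Calling `execution_order(AIMS, AIW_INPUTS)` returns all seven AIMs with both scene-description AIMs ahead of Entity and Context Understanding; the relative order of independent AIMs (e.g. Text-to-Text Translation and Personal Status Display) is not fixed. A Receives entry with no producer and no matching AIW input raises `ValueError`, which makes the sketch usable as a consistency check on the table.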

6 AIW, AIMs, and JSON Metadata

Table 4 provides the list of AIMs composing the HMC-CEC AIW. The AIMs of a Composite AIM are also provided down to the level of Basic AIMs.

Table 4 – AIW, AIMs, and JSON Metadata

7 Reference Software

8 Conformance Testing

Table 5 provides the Conformance Testing Method for the HMC-CEC AIW.

If a schema contains references to other schemas, conformance of Data to the primary schema implies that any Data referencing a secondary schema shall also validate against the relevant schema, if present, and conform with the corresponding Qualifier, if present.

Table 5 – Conformance Testing Method for the HMC-CEC AIW

| Direction | Data | Conformance Testing Method |
|---|---|---|
| Receives | Portable Avatar | Shall validate against the Portable Avatar schema. Portable Avatar Data shall conform with the respective Qualifiers. |
| | Input Selector | Shall validate against the Selector schema. |
| | Input Text | Shall validate against the Text Object schema. Text Data shall conform with the Text Qualifier. |
| | Input Audio | Shall validate against the Audio Object schema. Audio Data shall conform with the Audio Qualifier. |
| | Input Visual | Shall validate against the Visual Object schema. Visual Data shall conform with the Visual Qualifier. |
| Produces | Portable Avatar | Shall validate against the Portable Avatar schema. Portable Avatar Data shall conform with the respective Qualifiers. |
| | Output Audio | Shall validate against the Audio Object schema. Audio Data shall conform with the Audio Qualifier. |
| | Output Visual | Shall validate against the Visual Object schema. Visual Data shall conform with the Visual Qualifier. |
| | Output Text | Shall validate against the Text Object schema. Text Data shall conform with the Text Qualifier. |
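The rule stated before Table 5 for schemas that reference other schemas can be illustrated with a toy validator. This Python sketch is not an MPAI tool and its schemas are hypothetical stand-ins for the Portable Avatar and Text Object schemas. It assumes JSON-Schema-like schemas but supports only `type`, `required`, `properties`, and a simplified `$ref` that names an entry in a local registry (real JSON Schema resolves `$ref` as a URI reference). It does not check Qualifiers; a complete implementation would use a full JSON Schema validator against the actual schemas.

```python
from typing import Any

# Hypothetical toy schemas, NOT the MPAI schemas: they only show how a $ref
# in a primary schema pulls a secondary schema into validation.
REGISTRY = {
    "TextObject": {
        "type": "object",
        "required": ["Text"],
        "properties": {"Text": {"type": "string"}},
    },
    "PortableAvatar": {
        "type": "object",
        "required": ["AvatarID"],
        "properties": {
            "AvatarID": {"type": "string"},
            "Text": {"$ref": "TextObject"},  # reference to a secondary schema
        },
    },
}

_TYPES = {"object": dict, "string": str, "array": list, "number": (int, float)}


def validate(instance: Any, schema: dict, registry: dict, path: str = "$") -> list[str]:
    """Return error messages; an empty list means the instance conforms."""
    if "$ref" in schema:
        # Data referencing a secondary schema must also validate against it,
        # if that schema is present.
        target = registry.get(schema["$ref"])
        return [] if target is None else validate(instance, target, registry, path)
    expected = schema.get("type")
    if expected and not isinstance(instance, _TYPES[expected]):
        return [f"{path}: expected {expected}"]
    errors = []
    if isinstance(instance, dict):
        for key in schema.get("required", []):
            if key not in instance:
                errors.append(f"{path}: missing {key!r}")
        for key, sub in schema.get("properties", {}).items():
            if key in instance:
                errors += validate(instance[key], sub, registry, f"{path}.{key}")
    return errors
```

With these toy schemas, `{"AvatarID": "A1", "Text": {"Text": "Hello"}}` conforms, whereas `{"AvatarID": "A1", "Text": {"Lang": "en"}}` fails with `$.Text: missing 'Text'`: the primary object is well formed, but the Data it references does not satisfy the secondary schema.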