Connected Autonomous Vehicles (CAVs) promise to replace human errors with a lower machine error rate, give humans more time for rewarding activities, optimise the use of vehicles, infrastructure, and traffic management, reduce congestion and pollution, and help elderly and disabled people have a better life.

MPAI believes that standards can accelerate the arrival of CAVs as an established reality, and its first standard in this area is “Connected Autonomous Vehicles – Architecture”. It specifies a CAV Reference Model broken down into Subsystems, for which it specifies the Functions and the data exchanged between subsystems. Each subsystem is further broken down into components, for which it specifies the Functions, the data exchanged between components, and the topology.

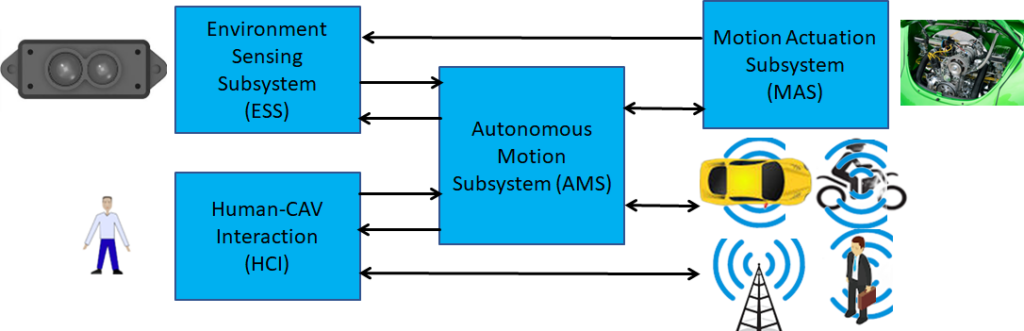

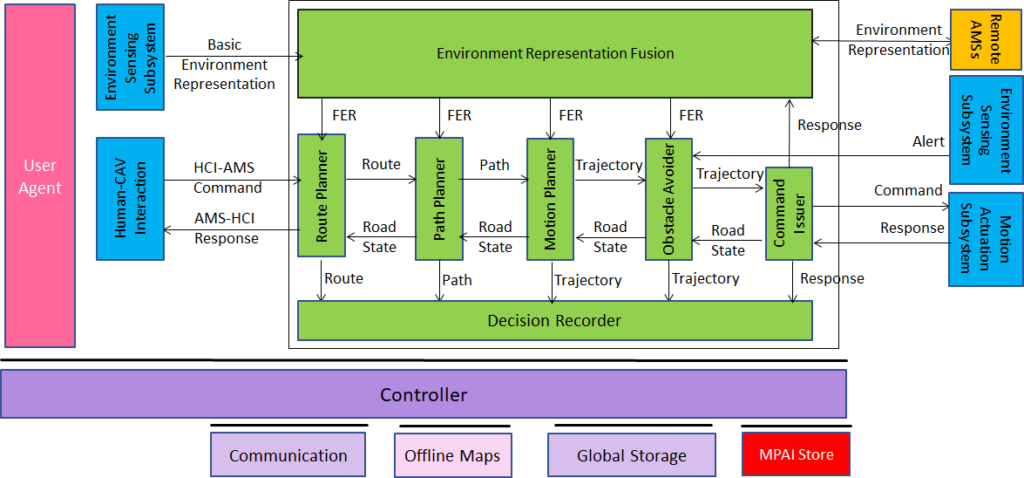

The Subsystem-level Reference model is represented in Figure 1.

Figure 1 – The MPAI-CAV – Architecture Reference Model

There are four subsystem-level reference models, one per subsystem. Each subsystem is specified in terms of:

- The functions the subsystem performs.

- The Reference Model, designed to be compatible with the AI Framework (MPAI-AIF) Technical Specification.

- The input/output data exchanged by the subsystem with other subsystems and the environment.

- The functions of each of the subsystem components, intended to be implemented as AI Modules.

- The input/output data exchanged by each component with other components.

The following sections give the functions and the reference model of each MPAI-CAV – Architecture subsystem. The other three elements of each subsystem specification can be found in the draft Technical Specification (html, pdf).

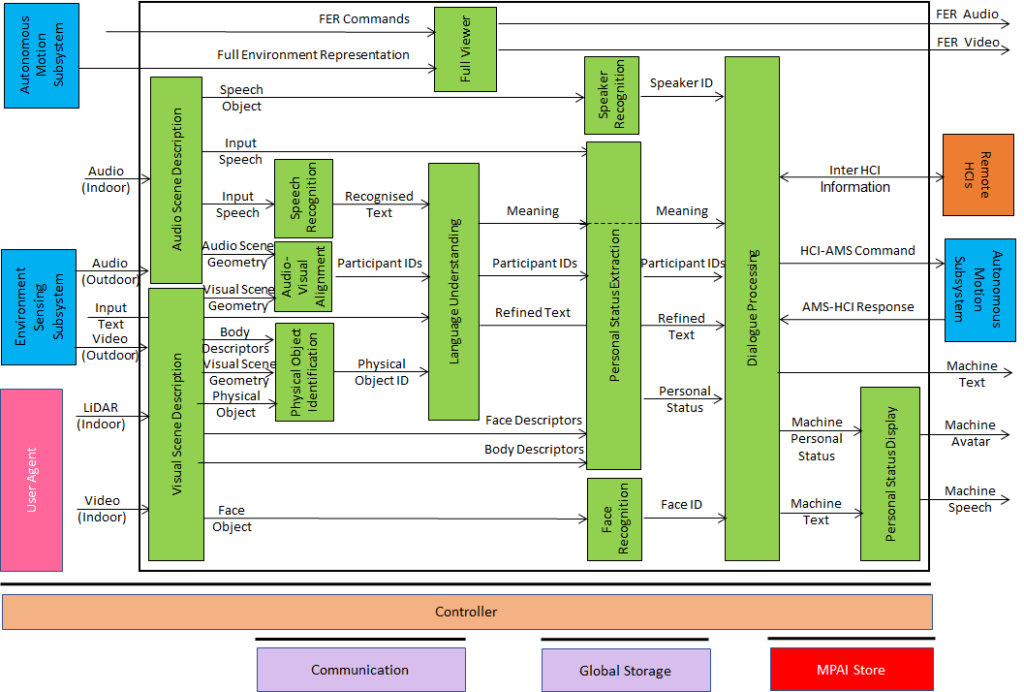

Human-CAV Interactions (HCI)

The HCI functions are:

- To authenticate humans, e.g., to let them into the CAV.

- To converse with humans, interpreting their utterances, e.g., when they ask to go to a destination or during a general conversation. HCI makes use of the MPAI-MMC “Personal Status” data type.

- To converse with the Autonomous Motion Subsystem to implement the outcome of human conversations and execute commands.

- To enable passengers to navigate the Full Environment Representation.

- To appear as a speaking avatar showing a Personal Status.

The HCI Reference Model is depicted in Figure 2.

Figure 2 – HCI Reference Model

The full HCI specification is available here.

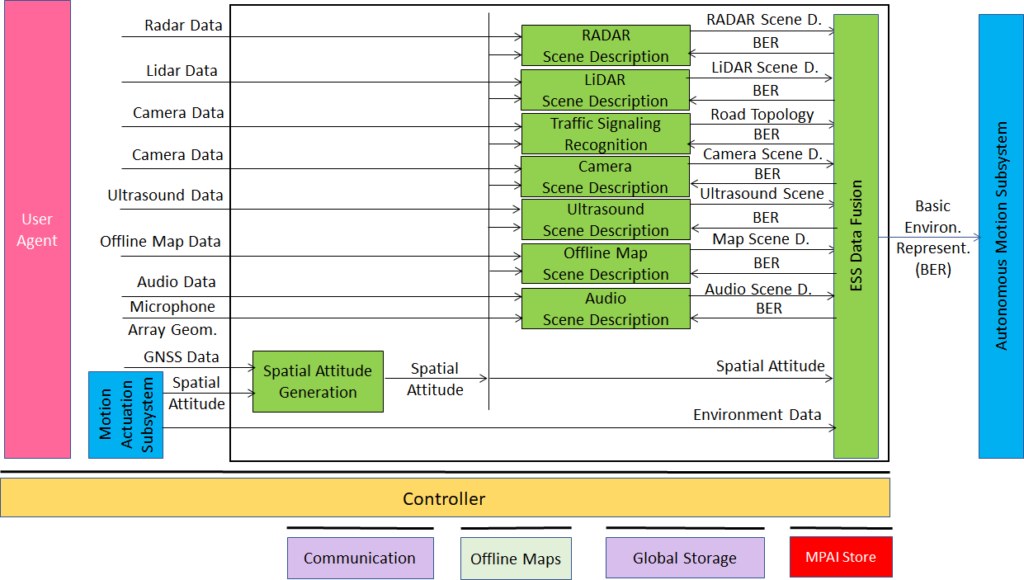

Environment Sensing Subsystem (ESS)

The ESS functions are:

- To acquire Environment information using the Subsystem’s RADAR, LiDAR, Cameras, Ultrasound, Offline Map, Audio, GNSS, …

- To receive the Ego CAV’s position, orientation, and environment data (temperature, humidity, etc.) from the Motion Actuation Subsystem.

- To produce Scene Descriptors for each sensor technology in a common format.

- To produce the Basic Environment Representation (BER) by integrating the sensor-specific Scene Descriptors during the trip.

- To hand over the BERs, including Alerts, to the Autonomous Motion Subsystem.
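The integration step can be illustrated with a toy example. This is a minimal sketch under stated assumptions, not the MPAI data format: the class names, the `integrate_descriptors` function, the 1 m merge radius, and the pedestrian alert rule are all hypothetical. It shows the idea of first expressing every sensor's output in one common format and then merging objects that different sensors report at nearly the same place.

```python
import math
from dataclasses import dataclass, field


@dataclass
class SceneObject:
    # Hypothetical common-format object: a label and a position in metres
    # in the Ego CAV's frame of reference.
    label: str
    x: float
    y: float


@dataclass
class SceneDescriptor:
    # Hypothetical sensor-specific Scene Descriptor, already in the common format.
    sensor: str  # e.g. "RADAR", "LiDAR", "Camera"
    objects: list[SceneObject] = field(default_factory=list)


@dataclass
class BasicEnvironmentRepresentation:
    objects: list[SceneObject]
    alerts: list[str]


def integrate_descriptors(descriptors, merge_radius=1.0):
    """Merge objects from all descriptors: objects with the same label closer
    than merge_radius metres are treated as one object (positions averaged)."""
    clusters = []  # each cluster: SceneObjects believed to be the same thing
    for desc in descriptors:
        for obj in desc.objects:
            for cluster in clusters:
                ref = cluster[0]
                if ref.label == obj.label and math.hypot(ref.x - obj.x, ref.y - obj.y) < merge_radius:
                    cluster.append(obj)
                    break
            else:
                clusters.append([obj])
    merged = [
        SceneObject(c[0].label,
                    sum(o.x for o in c) / len(c),
                    sum(o.y for o in c) / len(c))
        for c in clusters
    ]
    # Hypothetical alert rule: flag any pedestrian closer than 5 m.
    alerts = [f"pedestrian at {math.hypot(o.x, o.y):.1f} m"
              for o in merged
              if o.label == "pedestrian" and math.hypot(o.x, o.y) < 5.0]
    return BasicEnvironmentRepresentation(merged, alerts)
```

For example, a RADAR car at (20.0, 0.0) and a LiDAR car at (20.4, 0.2) collapse into a single car at (20.2, 0.1). A production system would likely also track objects over time and weigh sensor uncertainties; the greedy clustering here only shows why a common Scene Descriptor format makes cross-sensor integration possible.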

The ESS Reference Model is depicted in Figure 3.

Figure 3 – ESS Reference Model

The full ESS specification is available here.

Autonomous Motion Subsystem (AMS)

The AMS functions are:

- To compute human-requested Route(s).

- To receive the current BER from the Environment Sensing Subsystem.

- To communicate with other CAVs’ AMSs (e.g., to exchange subsets of BER and other data).

- To produce the Full Environment Representation by fusing its own BER with information from other CAVs in range.

- To send Commands to the Motion Actuation Subsystem to take the CAV to the next Pose.

- To receive and analyse responses from the MAS.
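Fusing information from other CAVs requires expressing their observations in the Ego CAV's frame of reference. The sketch below assumes, hypothetically, that each remote AMS sends its own pose relative to the Ego CAV together with labelled object positions in its own frame; `Pose2D`, `to_ego_frame`, and `fuse_full_environment` are illustrative names, not MPAI-defined types. It applies a standard 2D rigid transform and keeps the Ego CAV's own observation whenever a remote report duplicates it.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    # Hypothetical pose of a remote CAV in the Ego CAV's frame:
    # x, y in metres, heading in radians.
    x: float
    y: float
    heading: float


def to_ego_frame(point, remote_pose):
    """Rigid 2D transform (rotate by heading, then translate) of a point
    from the remote CAV's frame into the Ego CAV's frame."""
    px, py = point
    c, s = math.cos(remote_pose.heading), math.sin(remote_pose.heading)
    return (remote_pose.x + c * px - s * py,
            remote_pose.y + s * px + c * py)


def fuse_full_environment(own_objects, remote_reports, merge_radius=1.0):
    """own_objects: list of (label, x, y) in the Ego CAV's frame.
    remote_reports: list of (Pose2D, [(label, x, y), ...]) in each remote frame.
    Returns (label, x, y) tuples in the Ego CAV's frame; a remote object that
    duplicates an already-known object is dropped."""
    fused = list(own_objects)
    for pose, objects in remote_reports:
        for label, x, y in objects:
            ex, ey = to_ego_frame((x, y), pose)
            duplicate = any(
                other_label == label and math.hypot(ex - ox, ey - oy) < merge_radius
                for other_label, ox, oy in fused
            )
            if not duplicate:
                fused.append((label, ex, ey))
    return fused
```

Preferring the Ego CAV's own observation is one simple policy; averaging or weighting by a reported confidence would be equally plausible alternatives.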

The AMS Reference Model is depicted in Figure 4.

Figure 4 – AMS Reference Model

The full AMS specification is available here.

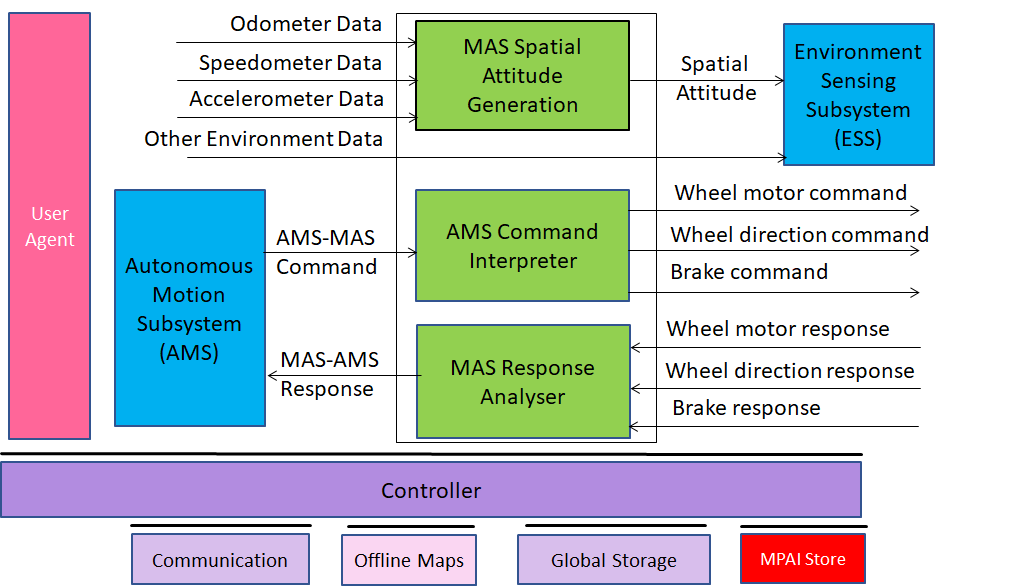

Motion Actuation Subsystem (MAS)

The MAS functions are:

- To transmit spatial/environmental information from sensors/mechanical subsystems to the Environment Sensing Subsystem.

- To receive Autonomous Motion Subsystem Commands.

- To translate Commands into specific commands to its own mechanical subsystems, e.g., brakes, wheel directions, and wheel motors.

- To receive Responses from its mechanical subsystems.

- To send Responses to the Autonomous Motion Subsystem about the execution of Commands.
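The translation of a high-level AMS Command into actuator-level commands, and the return path of Responses, can be sketched as follows. Everything here is a hypothetical assumption for illustration: the command fields (target speed and path curvature), the wheelbase, the proportional gain, and the response format. Steering uses the kinematic bicycle model, in which the front-wheel angle for a path of curvature κ on a vehicle with wheelbase L is atan(L × κ).

```python
import math
from dataclasses import dataclass

WHEELBASE_M = 2.7  # hypothetical vehicle parameter (metres)
GAIN = 0.2         # hypothetical proportional gain per m/s of speed error


@dataclass
class AMSCommand:
    # Hypothetical high-level command: desired speed (m/s) and path curvature (1/m).
    target_speed: float
    curvature: float


@dataclass
class ActuatorCommands:
    brake: float           # brake demand, 0..1
    motor: float           # wheel-motor demand, 0..1
    steering_angle: float  # front-wheel angle, radians


def translate(cmd, current_speed):
    """Map one AMS Command to brake, motor, and steering commands."""
    error = cmd.target_speed - current_speed
    demand = min(1.0, abs(error) * GAIN)
    # Kinematic bicycle model: steering angle that follows the requested curvature.
    steering = math.atan(WHEELBASE_M * cmd.curvature)
    if error >= 0:
        return ActuatorCommands(brake=0.0, motor=demand, steering_angle=steering)
    return ActuatorCommands(brake=demand, motor=0.0, steering_angle=steering)


def build_response(actuator_acks):
    """Summarise mechanical-subsystem acknowledgements ({name: ok}) into a
    single Response for the AMS."""
    failed = [name for name, ok in actuator_acks.items() if not ok]
    return {"executed": not failed, "failed": failed}
```

Keeping brake and motor demands mutually exclusive is a simplification chosen for readability; real actuation logic would add rate limits, safety checks, and closed-loop control.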

The MAS Reference Model is depicted in Figure 5.

Figure 5 – MAS Reference Model

The full MAS specification is available here.
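The subsystem-to-subsystem exchanges named in this article can be collected into a small table that makes the topology of Figure 1 easy to query. The labels are shorthand taken from the function lists above, not MPAI data type names, and the table covers only exchanges this article mentions; the draft Technical Specification defines the complete set. "Remote AMS" stands for the AMS of another CAV in range, and `inputs_of` / `outputs_of` are illustrative helper names.

```python
# Each entry: (sender, receiver, data), as described in the subsystem function lists.
DATA_FLOWS = [
    ("Human", "HCI", "utterances (e.g., destination requests)"),
    ("HCI", "Human", "speaking avatar showing a Personal Status"),
    ("HCI", "AMS", "human commands from conversation"),
    ("AMS", "HCI", "conversation replies"),
    ("MAS", "ESS", "Ego CAV position, orientation, environment data"),
    ("ESS", "AMS", "Basic Environment Representation with Alerts"),
    ("AMS", "Remote AMS", "subsets of BER and other data"),
    ("Remote AMS", "AMS", "subsets of BER and other data"),
    ("AMS", "MAS", "Commands"),
    ("MAS", "AMS", "Responses"),
]


def inputs_of(subsystem):
    """Return (sender, data) pairs for everything the subsystem receives."""
    return [(src, data) for src, dst, data in DATA_FLOWS if dst == subsystem]


def outputs_of(subsystem):
    """Return (receiver, data) pairs for everything the subsystem sends."""
    return [(dst, data) for src, dst, data in DATA_FLOWS if src == subsystem]
```

Querying `outputs_of("MAS")`, for instance, lists the environment data sent to the ESS and the Responses sent to the AMS, which mirrors the MAS function list.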

The MPAI-CAV – Architecture Working Draft (WD) (html, pdf) is published with a request for Community Comments. See also the video recordings (YT, WimTV) and the slides of the presentation made on 06 September. Anybody may comment on the WD. Comments should reach the MPAI Secretariat by 2023/09/26T23:59 UTC. No specific format is required to make comments. MPAI plans to publish MPAI-CAV – Architecture at its 36th General Assembly (29 September 2023).

The MPAI-CAV – Architecture standard is the starting point for the next steps of the MPAI-CAV roadmap. The current specification does not include the Functional Requirements of the data exchanged between subsystems and components; developing them is the activity that will start in October 2023.

To join MPAI, visit How to join.