1 Version

V2.1

2 Functions

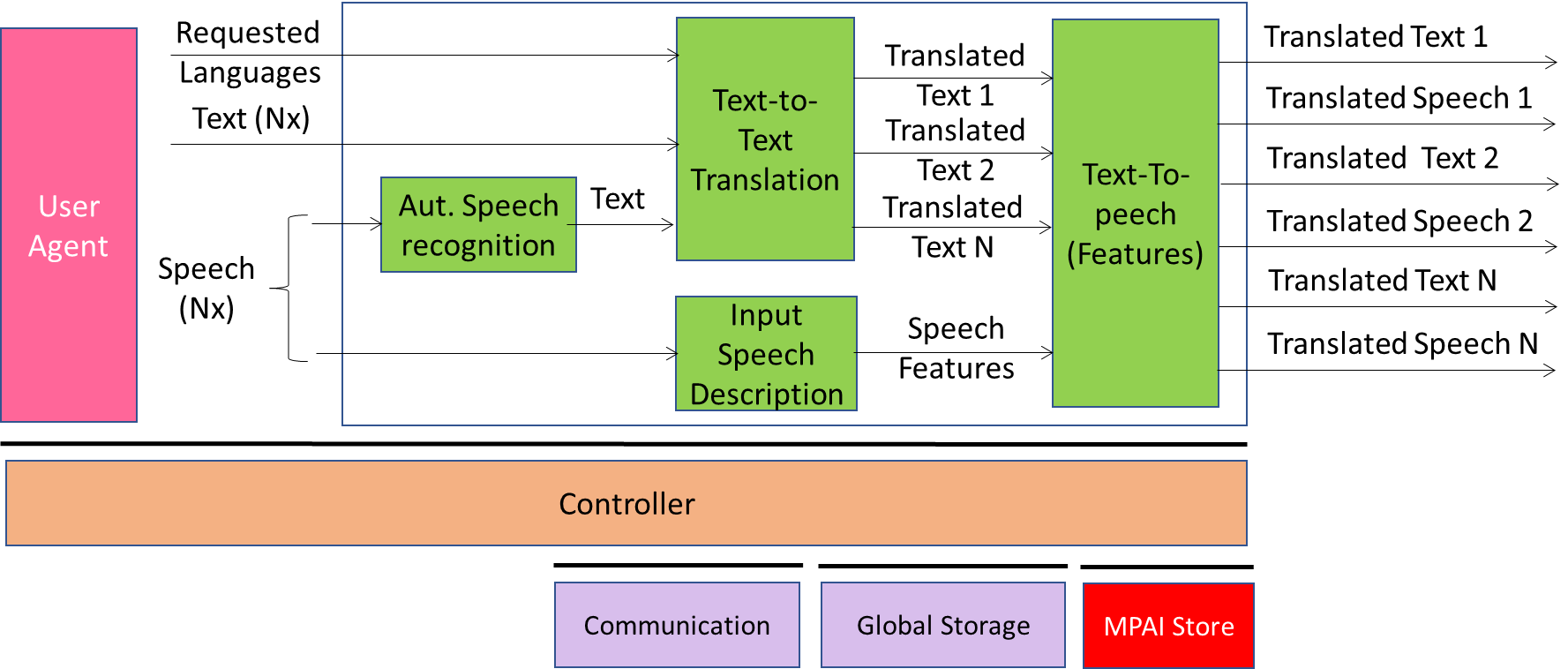

One-to-Many Speech Translation (MMC-MST) enables a human speaking in their own language to broadcast text or speech to two or more audience members, each of whom reads or listens, and responds, in a different language. If the desired output is speech, the human can specify whether the features of their speech should be preserved in the translated speech.

One-to-Many Speech Translation:

- Receives

  - Input Selector – indicates whether

    - Input is Text or Speech.

    - Speech Features of Input Speech should be preserved in Translated Speech.

  - Requested Language – Languages of the Input Speech and of the Translated Speech.

  - Input Text – Text to be translated.

  - Input Speech – Speech to be translated.

- Produces

  - Translated Text1 or Speech1

  - Translated Text2 or Speech2

  - etc.

3 Reference Model

Figure 1 depicts the AIMs and the data exchanged between AIMs.

Figure 1 – One-to-Many Speech Translation (MMC-MST) AIW

4 I/O Data

The input and output data of the One-to-Many Speech Translation Use Case are given by Table 1:

Table 1 – I/O Data of One-to-Many Speech Translation

| Input | Descriptions |
|---|---|
| Input Selector | Determines whether: 1. The input will be in Text or Speech. 2. The Input Speech features are preserved in the Output Speech. |
| Language Preferences | User-specified input language and translated languages. |
| Input Speech | Speech produced by human desiring translation and interpretation in a specified set of languages. |
| Input Text | Alternative textual source information. |

| Output | Descriptions |
|---|---|
| Translated Speech | Speech translated into the Requested Languages. |
| Translated Text | Text translated into the Requested Languages. |
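The I/O data in Table 1 can be pictured as a pair of simple records. The following is a minimal sketch only: the class and field names (`InputSelector`, `TranslationRequest`, `TranslationResult`, `validate`) are hypothetical and are not defined by the specification, and the use of plain strings for language codes and `bytes` for speech is an assumption made for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional


class InputKind(Enum):
    """First role of the Input Selector: Text or Speech input."""
    TEXT = "text"
    SPEECH = "speech"


@dataclass
class InputSelector:
    input_kind: InputKind
    # Second role of the Input Selector: keep the speaker's speech features.
    preserve_speech_features: bool = False


@dataclass
class TranslationRequest:
    selector: InputSelector
    input_language: str                 # Language Preferences: input language
    requested_languages: List[str]      # Language Preferences: translated languages
    input_text: Optional[str] = None    # Input Text
    input_speech: Optional[bytes] = None  # Input Speech (encoding is an assumption)

    def validate(self) -> None:
        """Check that the payload matches what the selector announces."""
        if not self.requested_languages:
            raise ValueError("at least one requested language is needed")
        if self.selector.input_kind is InputKind.TEXT and self.input_text is None:
            raise ValueError("selector announces Text but Input Text is missing")
        if self.selector.input_kind is InputKind.SPEECH and self.input_speech is None:
            raise ValueError("selector announces Speech but Input Speech is missing")


@dataclass
class TranslationResult:
    # One entry per requested language.
    translated_text: Dict[str, str] = field(default_factory=dict)
    translated_speech: Dict[str, bytes] = field(default_factory=dict)
```

A request for text input translated into two languages would then be built as `TranslationRequest(InputSelector(InputKind.TEXT), "en", ["it", "ja"], input_text="Hello")`, and `validate()` raises `ValueError` when the selector and the supplied data disagree.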

5 JSON Metadata

https://schemas.mpai.community/MMC/V2.1/AIWs/OneToManySpeechTranslation.json

| AIMs | Name | JSON |
|---|---|---|
| MMC-ASR | Automatic Speech Recognition | X |
| MMC-TTT | Text-to-Text Translation | X |
| MMC-ISD | Input Speech Description | X |
| MMC-TTS | Text-to-Speech | X |
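To make the roles of the four AIMs concrete, the sketch below chains placeholder functions in the order their names suggest. It is an illustration under stated assumptions, not the normative workflow: the wiring between AIMs is inferred from the AIM names rather than taken from Figure 1, every function name is hypothetical, the stub bodies do no real recognition, translation or synthesis, and returning both text and speech for each language is a simplification.

```python
from typing import Dict, List, Optional, Tuple


def recognise_speech(speech: bytes) -> str:
    """Stand-in for MMC-ASR: pretend the audio bytes are UTF-8 text."""
    return speech.decode("utf-8")


def describe_speech(speech: bytes) -> dict:
    """Stand-in for MMC-ISD: return a dummy speech-feature descriptor."""
    return {"length": len(speech)}


def translate_text(text: str, source: str, target: str) -> str:
    """Stand-in for MMC-TTT: tag the text instead of translating it."""
    return f"[{source}->{target}] {text}"


def synthesise_speech(text: str, descriptors: Optional[dict]) -> bytes:
    """Stand-in for MMC-TTS: mark whether speech features were supplied."""
    tag = "preserved" if descriptors is not None else "neutral"
    return f"{tag}:{text}".encode("utf-8")


def one_to_many(
    input_is_speech: bool,
    preserve_features: bool,
    source_language: str,
    requested_languages: List[str],
    input_text: Optional[str] = None,
    input_speech: Optional[bytes] = None,
) -> Dict[str, Tuple[str, bytes]]:
    """Return {language: (translated text, translated speech)}."""
    descriptors = None
    if input_is_speech:
        if input_speech is None:
            raise ValueError("Input Speech is required when the selector says Speech")
        text = recognise_speech(input_speech)
        if preserve_features:
            descriptors = describe_speech(input_speech)
    else:
        if input_text is None:
            raise ValueError("Input Text is required when the selector says Text")
        text = input_text

    results: Dict[str, Tuple[str, bytes]] = {}
    for language in requested_languages:
        # One translation and one synthesis per requested language.
        translated = translate_text(text, source_language, language)
        results[language] = (translated, synthesise_speech(translated, descriptors))
    return results
```

Calling `one_to_many(True, True, "en", ["it", "ja"], input_speech=b"hello")` yields one entry per requested language. The speech descriptor is computed once from the input and reused for every target language, which is the point of the one-to-many arrangement.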