<-Architecture and Operation Go to ToC AI Modules->

| 1 Functions | 2 Reference Model | 3 CAV Input/Output Data |

| 4 Functions of AI Workflows | 5 I/O Data of AI Workflows | 6 AIWs and JSON Metadata |

1 Functions

A Connected Autonomous Vehicle is a physical system that:

- Converses with humans by understanding their utterances, e.g., “take me home” or “show me the environment you see”.

- Senses the environment in which it is located or that it traverses. Figure 1 is an example of the environments targeted by CAV-TEC.

- Plans a Route enabling the CAV to reach the requested destination.

- Autonomously reaches the destination by:

  - Building digital representations of the environment.

  - Moving in the physical environment.

  - Exchanging elements of such Environment Representations with other CAVs and CAV-aware entities.

  - Making decisions about how to execute the Route.

  - Actuating the CAV motion to implement the decisions.

2 Reference Model

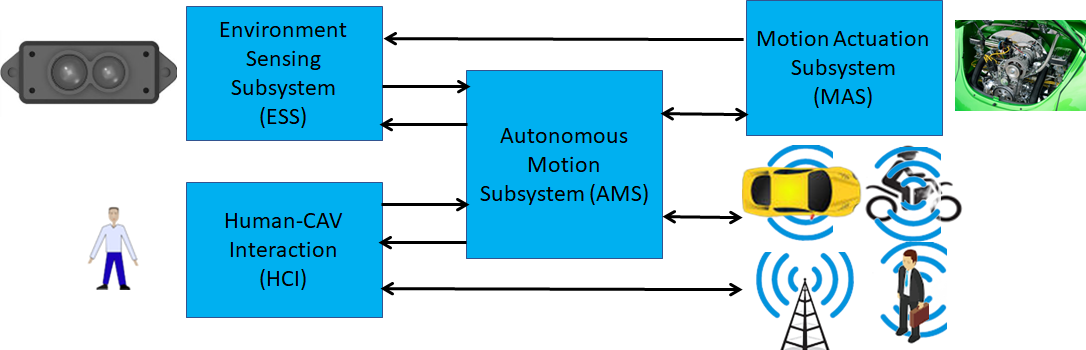

The MPAI-CAV Reference Model is composed of four Subsystems depicted in Figure 1 and implemented as AI Workflows:

- Human-CAV Interaction (HCI)

- Environment Sensing Subsystem (ESS)

- Autonomous Motion Subsystem (AMS)

- Motion Actuation Subsystem (MAS)

Figure 1 – The MPAI-CAV subsystems

The operation of a CAV unfolds according to the workflow in Table 1, which does not exhaustively describe all the functions performed:

Table 1 – High-level CAV operation

| Entity | Action |
|---|---|
| Human | Requests the CAV, via HCI, to take the human to a destination. |
| HCI | 1. Authenticates humans. 2. Interprets the request of humans. 3. Issues commands to the AMS. |
| AMS | 1. Requests ESS to provide the current Pose. |
| ESS | 1. Computes and sends the Basic Environment Descriptors (BED) to AMS. |
| AMS | 1. Computes and sends Route(s) to HCI. |
| HCI | 1. Sends travel options to Human. |
| Human | 1. May integrate/correct their instructions. 2. Issues commands to HCI. |
| HCI | 1. Communicates Route selection to AMS. |
| AMS | 1. Sends the BED to the AMSs of other CAVs. 2. Computes the Full Environment Descriptors (FED). 3. Decides best motion to reach the destination. 4. Issues appropriate commands to MAS. |
| MAS | 1. Executes the Command. 2. Sends response to AMS. |
| Human | 1. Interacts and holds conversation with other humans on board and the HCI. 2. Issues commands to HCI. 3. Requests HCI to render the FED. 4. Navigates the FED. 5. Interacts with humans in other CAVs. |
| HCI | Communicates with HCIs of Remote CAVs on matters related to human passengers. |
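The exchange in Table 1 can be read as an ordered message trace between entities. The sketch below is one possible reading, not part of the specification: the names `Entity`, `Message`, `TRACE`, and `peers` are illustrative assumptions, the payload strings paraphrase the table, and steps that are internal to one entity (authentication, computing the FED) are omitted.

```python
from dataclasses import dataclass
from enum import Enum


class Entity(Enum):
    HUMAN = "Human"
    HCI = "HCI"
    ESS = "ESS"
    AMS = "AMS"
    MAS = "MAS"
    REMOTE_AMS = "Remote AMS"


@dataclass(frozen=True)
class Message:
    sender: Entity
    receiver: Entity
    payload: str  # free-text label paraphrasing Table 1 (assumption)


# One possible reading of Table 1 as an ordered trace (illustrative only).
TRACE = [
    Message(Entity.HUMAN, Entity.HCI, "destination request"),
    Message(Entity.HCI, Entity.AMS, "command"),
    Message(Entity.AMS, Entity.ESS, "Pose request"),
    Message(Entity.ESS, Entity.AMS, "Basic Environment Descriptors"),
    Message(Entity.AMS, Entity.HCI, "Route(s)"),
    Message(Entity.HCI, Entity.HUMAN, "travel options"),
    Message(Entity.HUMAN, Entity.HCI, "command"),
    Message(Entity.HCI, Entity.AMS, "Route selection"),
    Message(Entity.AMS, Entity.REMOTE_AMS, "Basic Environment Descriptors"),
    Message(Entity.AMS, Entity.MAS, "command"),
    Message(Entity.MAS, Entity.AMS, "response"),
]


def peers(entity: Entity) -> set[Entity]:
    """Return every entity that exchanges a message with `entity` in TRACE."""
    found = set()
    for message in TRACE:
        if message.sender is entity:
            found.add(message.receiver)
        elif message.receiver is entity:
            found.add(message.sender)
    return found
```

In this trace the MAS exchanges messages only with the AMS, and the human only with the HCI, which matches the layering suggested by Figure 1.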

3 CAV Input/Output Data

Table 2 gives the input/output data of the Connected Autonomous Vehicle.

Table 2 – I/O data of Connected Autonomous Vehicle

| Input data | From | Description |
|---|---|---|
| Audio Object | Environment | Environment Data captured by Microphones with Qualifier. |
| Brake Response | Brakes | Acts on brakes, gives feedback. |
| Ego-Remote AMS Message | Ego AMS | Message to Remote AMS. |
| Ego-Remote HCI Message | Ego HCI | Message to Remote HCI. |
| GNSS Object | ~1 & 1.5 GHz Radio | Data from various Global Navigation Satellite System (GNSS) sources with Qualifier. |
| LiDAR Object | Environment | Environment Data captured by LiDAR with Qualifier. |
| Motor Response | Wheel Motor | Forces wheels rotation, gives feedback. |
| RADAR Object | Environment | Environment Data captured by RADAR with Qualifier. |
| Text Object | Cabin Passengers | Text complementing/replacing User input. |
| Ultrasound Object | Environment | Environment Data captured by Ultrasound with Qualifier. |
| Visual Object | Environment | Environment Data captured by cameras with Qualifier. |
| Weather Data | Environment | Temperature, Air pressure, Humidity, etc. |
| Wheel Response | Steering Wheel | Moves wheels by an angle, gives feedback. |

| Output data | To | Description |
|---|---|---|
| AMS Data | Outside device | AMS Data stored in AMS Memory provided for analysis. |
| Audio Object | Cabin Passengers | HCI Response, Rendered Full Environment Descriptors. |
| Brake Command | Brakes | Acts on Brakes. |
| Ego-Remote AMS Message | Remote AMS | Message from Ego AMS to Remote AMS. |
| Ego-Remote HCI Message | Remote HCI | Message from Ego HCI to Remote HCI. |
| Motor Command | Wheel Motors | Activates/suspends/reverses wheel rotation. |
| Text Object | Cabin Passengers | Text from HCI. |
| Visual Object | Cabin Passengers | Environment as seen by CAV and/or HCI rendering. |
| Wheel Command | Wheel | Moves wheel by an angle. |
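The data names in Table 2 can be held in two plain mappings to make the actuator command/response pairing explicit. This is a minimal sketch under stated assumptions: the dictionary layout and the names `INPUTS`, `OUTPUTS`, `actuator_pairs`, and `bidirectional` are illustrative, and only the names and peers are copied from Table 2.

```python
# Data name -> peer, copied from Table 2 (the mapping layout is an assumption).
INPUTS = {
    "Audio Object": "Environment",
    "Brake Response": "Brakes",
    "Ego-Remote AMS Message": "Ego AMS",
    "Ego-Remote HCI Message": "Ego HCI",
    "GNSS Object": "~1 & 1.5 GHz Radio",
    "LiDAR Object": "Environment",
    "Motor Response": "Wheel Motor",
    "RADAR Object": "Environment",
    "Text Object": "Cabin Passengers",
    "Ultrasound Object": "Environment",
    "Visual Object": "Environment",
    "Weather Data": "Environment",
    "Wheel Response": "Steering Wheel",
}

OUTPUTS = {
    "AMS Data": "Outside device",
    "Audio Object": "Cabin Passengers",
    "Brake Command": "Brakes",
    "Ego-Remote AMS Message": "Remote AMS",
    "Ego-Remote HCI Message": "Remote HCI",
    "Motor Command": "Wheel Motors",
    "Text Object": "Cabin Passengers",
    "Visual Object": "Cabin Passengers",
    "Wheel Command": "Wheel",
}


def actuator_pairs() -> list[tuple[str, str]]:
    """Pair each '<X> Command' output with its '<X> Response' input, if any."""
    pairs = []
    for name in OUTPUTS:
        if name.endswith(" Command"):
            response = name.removesuffix(" Command") + " Response"
            if response in INPUTS:
                pairs.append((name, response))
    return pairs


def bidirectional() -> list[str]:
    """Return data names that appear both as CAV input and as CAV output."""
    return sorted(set(INPUTS) & set(OUTPUTS))
```

Each of the three Commands (Brake, Motor, Wheel) has a matching Response, and five data types appear in both directions.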

4 Functions of AI Workflows

Table 3 describes the high-level functions of all CAV AI Workflows.

Table 3 – Functions of CAV AI Workflows

| AIW | Function |
|---|---|
| Human-CAV Interaction | Recognises human owner/renter, responds to humans’ commands and queries, converses with humans, manifests itself as a perceptible entity, exchanges information with the Autonomous Motion Subsystem in response to humans’ requests, and communicates with other CAVs or CAV-Aware entities. |
| Environment Sensing Subsystem | Senses the environment’s Electromagnetic and Acoustic information, receives the Ego CAV’s Spatial Attitude and Weather Data from the MAS, requests location-specific Data from Offline Map(s), produces the best estimate of the Ego CAV Spatial Attitude, sensor-specific Scene Descriptors and Alerts to AMS, and Basic Environment Descriptors (BED), passes the BEDs to HCI and AMS, and requests/receives elements of the Full Environment Descriptors (FED) to/from Remote AMSs. |
| Autonomous Motion Subsystem | Converses with HCI (and HCI with humans) to provide a Route, requests and provides FED subsets to selected Remote CAVs, produces FED, generates Paths and a Trajectory, checks Trajectory implementation considering Alerts from ESS’s technology-specific Scene Descriptors, issues commands to and processes responses from MAS, and stores Data received/produced in AMS Memory. |
| Motion Actuation Subsystem | Transmits Weather Data and Spatial Data-based Spatial Attitude of the CAV to ESS, receives AMS-MAS Messages from AMS, translates each AMS-MAS Message into Brake, Motor, and Wheel Commands, and packages and sends Brake, Motor, and Wheel Responses from its Brake, Motor, and Wheel to AMS. |
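The MAS row of Table 3 says that an AMS-MAS Message is translated into Brake, Motor, and Wheel Commands. The sketch below illustrates that idea only. The AMS-MAS Message format is not defined in this section, so every field, unit, limit, and name here (`AmsMasMessage`, `Commands`, `translate`, `MAX_ACCEL`) is a hypothetical stand-in.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AmsMasMessage:
    # Hypothetical fields: the real AMS-MAS Message format is not given here.
    acceleration: float    # m/s^2, negative means slow down (assumed)
    steering_angle: float  # degrees (assumed unit and sign convention)


@dataclass(frozen=True)
class Commands:
    brake: float  # 0.0 (released) to 1.0 (full braking), assumed scale
    motor: float  # 0.0 (idle) to 1.0 (full drive), assumed scale
    wheel: float  # steering angle in degrees, passed through unchanged


MAX_ACCEL = 3.0  # assumed magnitude limit in m/s^2, for illustration only


def translate(msg: AmsMasMessage, max_accel: float = MAX_ACCEL) -> Commands:
    """Split one message into brake, motor, and wheel commands."""
    # Normalise the requested acceleration magnitude to the range [0, 1].
    level = min(abs(msg.acceleration) / max_accel, 1.0)
    if msg.acceleration < 0:
        # Deceleration: apply the brake and cut the motor.
        return Commands(brake=level, motor=0.0, wheel=msg.steering_angle)
    # Acceleration or cruising: release the brake and drive the motor.
    return Commands(brake=0.0, motor=level, wheel=msg.steering_angle)
```

For example, under these assumptions `translate(AmsMasMessage(-1.5, 10.0))` yields half braking, no motor drive, and a 10 degree wheel angle. A real MAS would also package the Brake, Motor, and Wheel Responses and send them back to the AMS, which this sketch leaves out.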

5 I/O Data of AI Workflows

Table 4 gives the AI Workflows of the Connected Autonomous Vehicle depicted in Figure 1.

Table 4 – AI Workflows of Connected Autonomous Vehicle

6 AIWs and JSON Metadata

Table 5 provides the links to the AIW specifications and to the JSON Metadata.

Table 5 – AIWs and JSON Metadata

| AIW | Name | JSON |
|---|---|---|
| MMC-HCI | Human-CAV Interaction | X |
| CAV-ESS | Environment Sensing Subsystem | X |
| CAV-AMS | Autonomous Motion Subsystem | X |
| CAV-MAS | Motion Actuation Subsystem | X |
