MPAI goes public with MPAI-CUI: Compression and Understanding of Industrial Data

The title is slightly inaccurate: MPAI-CUI did “go public” on the 30th of September, as one of the first batch of 3 MPAI standards. Now, however, MPAI is holding a webinar to give everybody the opportunity to understand exactly what MPAI-CUI does and how it can be used, and to see a real-time implementation at work.

To make the webinar easier to follow, here are a few things about MPAI-CUI.

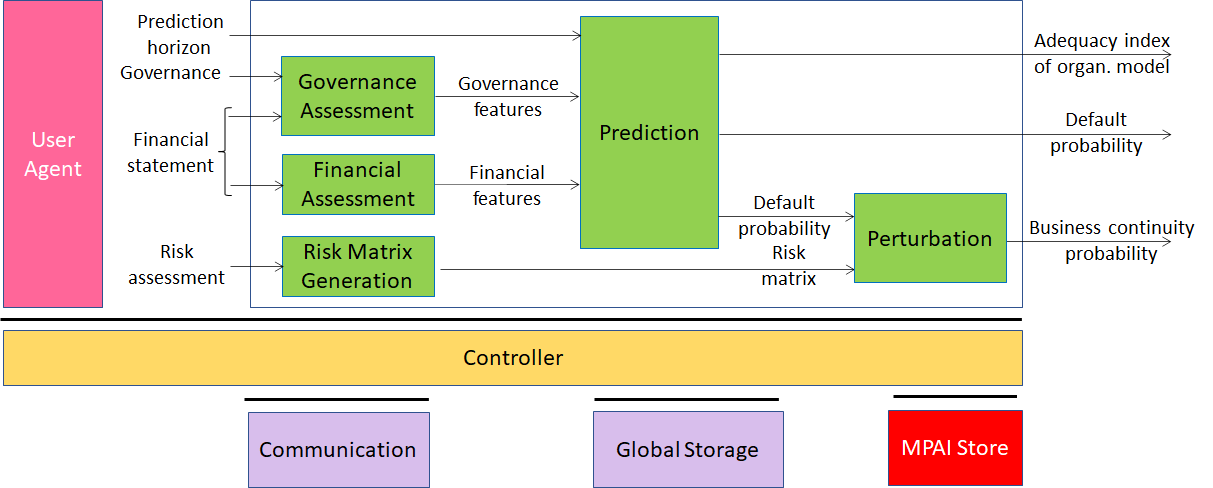

An implementation of the standard (see Fig. 1) is composed of three modules that pre-process the input data. A fourth module, called Prediction, is a neural network that has been trained with a large amount of company data of the same type as those used by the implementation, and can provide an accurate estimate of the company default probability and of the governance adequacy. The fifth module, called Perturbation, takes as input the estimate of the company default probability and the assessment of vertical risks (i.e., seismic and cyber) and estimates the probability that a business discontinuity will occur in the future.

Figure 1 – Company Performance Prediction in MPAI-CUI
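The data flow of Figure 1 can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the feature names, the weights of the toy `predict` function (a stand-in for the trained neural network) and, in particular, the rule used by `perturb`, which simply treats default, seismic and cyber events as independent. The standard itself defines the actual modules and their interfaces.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass
class Prediction:
    default_probability: float  # estimated probability of company default
    governance_adequacy: float  # 0.0 (poor) to 1.0 (adequate)


def predict(features: Dict[str, float]) -> Prediction:
    """Toy stand-in for the trained Prediction network (NOT the real model)."""
    # Hypothetical features and weights, chosen only for illustration.
    score = 2.0 * features["debt_ratio"] - 1.5 * features["profit_margin"]
    default_probability = 1.0 / (1.0 + math.exp(-score))
    governance_adequacy = min(1.0, max(0.0, features["board_independence"]))
    return Prediction(default_probability, governance_adequacy)


def perturb(default_probability: float, seismic_risk: float, cyber_risk: float) -> float:
    """Assumed combination rule: the three events are treated as independent."""
    no_discontinuity = (
        (1.0 - default_probability) * (1.0 - seismic_risk) * (1.0 - cyber_risk)
    )
    return 1.0 - no_discontinuity


# Usage: pre-processed features go through Prediction, then Perturbation.
company = {"debt_ratio": 0.8, "profit_margin": 0.1, "board_independence": 0.6}
prediction = predict(company)
discontinuity = perturb(prediction.default_probability, seismic_risk=0.02, cyber_risk=0.05)
```

The point of the sketch is the chaining: the Perturbation step consumes the output of the Prediction step together with the vertical risks, so the two can be developed and replaced independently.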

The MPAI-CUI standard is a set of 4 specifications. The Technical Specification outlined above is the first; the other three are:

- a second specification called Conformance Assessment enabling a user of an implementation of the standard to verify that it is technically correct.

- a third specification called Performance Assessment enabling a user to detect whether the training of the neural network is biased against some geographic locations (e.g., North-South) and some industry types (e.g., Service vs. Manufacturing).

- a fourth specification called Reference Software, a software implementation of the standard.
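To give an idea of what a bias check in the spirit of Performance Assessment might look like, here is a minimal Python sketch. It is not the procedure defined by the specification: the record layout, the group labels and the choice of mean absolute error as the metric are all assumptions made for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical record: (group label, predicted default probability, did the company default?)
Record = Tuple[str, float, bool]


def error_by_group(records: Iterable[Record]) -> Dict[str, float]:
    """Mean absolute error of the predicted default probability, per group."""
    totals = defaultdict(lambda: [0.0, 0])  # group -> [sum of errors, count]
    for group, predicted, defaulted in records:
        actual = 1.0 if defaulted else 0.0
        totals[group][0] += abs(predicted - actual)
        totals[group][1] += 1
    return {group: total / count for group, (total, count) in totals.items()}


def max_gap(errors: Dict[str, float]) -> float:
    """Largest difference in error between any two groups."""
    return max(errors.values()) - min(errors.values())


records = [
    ("North", 0.2, False),
    ("North", 0.8, True),
    ("South", 0.6, False),
    ("South", 0.4, True),
]
errors = error_by_group(records)
gap = max_gap(errors)
```

A large gap between groups (here between the hypothetical "North" and "South" companies) would suggest that the network performs unevenly across them and deserves a closer look.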

The novelty of MPAI-CUI is in its ability to analyse, through AI, the large amount of data required by regulation and extract the most relevant information. Moreover, compared to state-of-the-art techniques that predict the performance of a company, MPAI-CUI allows extending the time horizon of prediction.

Companies and financial institutions can use MPAI-CUI in a variety of contexts, e.g.:

- To support the company’s board in analysing the financial performance and identifying clues to a crisis or a risk of bankruptcy years in advance. It may help decision-makers to make proper decisions to avoid these situations, conduct what-if analysis, and devise efficient strategies.

- To assess the financial health of companies applying for funds. A financial institution receiving a request from a troubled company can access the company’s financial and organisational data, make an AI-based assessment and predict the performance of the company. The financial institution can then decide whether or not to fund that company based on a broader view of its situation.

The webinar will be held on the 25th of November 2021 at 15:00 UTC with the following agenda:

1. Introduction (5’): introduction to MPAI, its mission, what has been done in the year after its establishment, plans.

2. MPAI-CUI standard (15’):

- The process that led to the standard: study of Use Cases, Functional Requirements, Commercial Requirements, Call for Technologies, Request for Community Comments and Standard.

- The MPAI-CUI modules and their function.

- Extensions to the standard under way.

- Some applications of the standard (banking, insurance, public administrations).

3. Demo (15’): a set of anonymous companies with identified financial, governance and risk features will be passed through an MPAI-CUI implementation.

4. Q&A

Conversing with a machine

It will take some time before we can have an argument with a machine about the different forms of novels in different centuries and cultures. It is clear, however, that there is a significant push by the industry to endow machines with the ability to hold even a limited form of conversation with humans.

The MPAI-MMC standard, approved on the 30th of September, provides two significant examples. The first assumes there is a machine that responds to user queries. A query can be about the line of products sold by a company, about the operation of a product, or a complaint about a malfunctioning product.

It would be a great improvement over some systems available today if the machine could understand the state of mind of the human and adapt its speech to the mood of the human.

MPAI is developing standards for typical use cases.

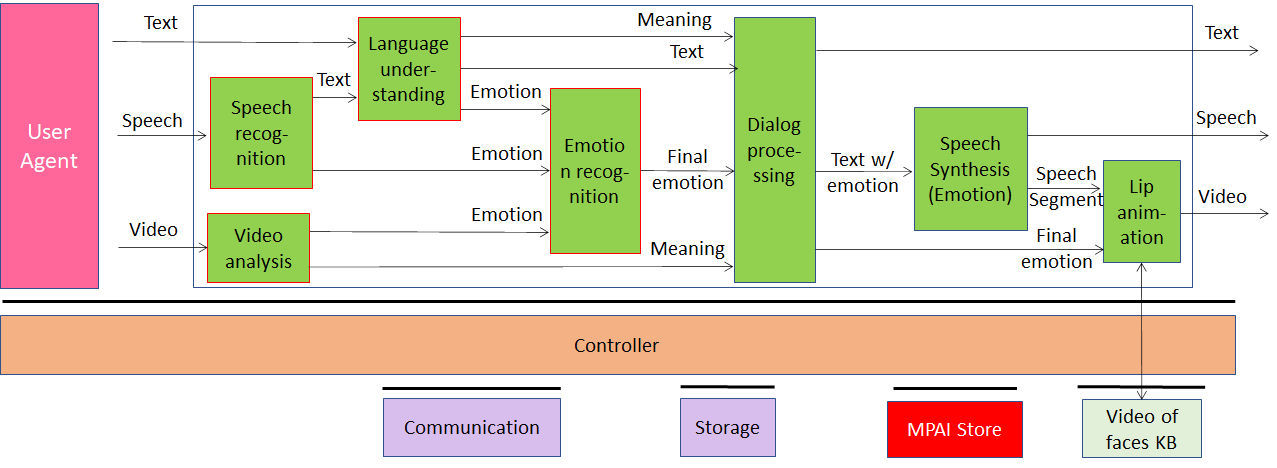

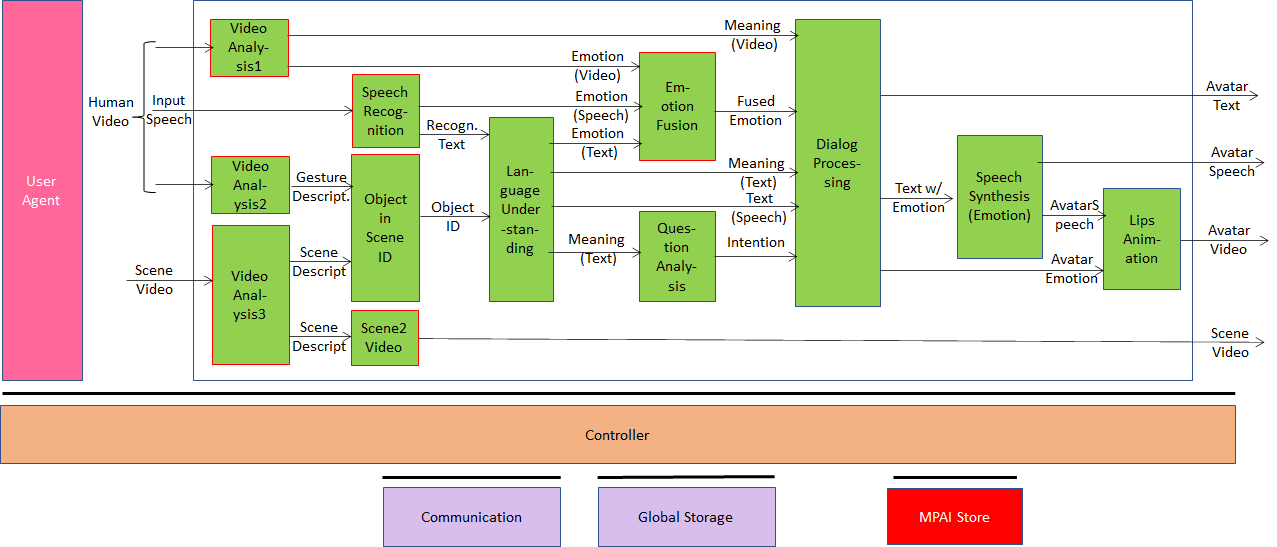

The first is Conversation with Emotion (MMC-CWE), depicted in Figure 2. Here MPAI has standardised the architecture of the processing units (called AI Modules – AIMs) and the data formats exchanged by the processing units that support the following scenario: a human types or speaks to a machine that captures the human’s text or speech and face, and responds with a speaking avatar.

Figure 2 – Conversation with Emotion

A Video Analysis AIM extracts emotion and meaning from the human’s face, and the Speech Recognition AIM converts the speech to text and extracts emotion from the human’s speech. Emotion from the different media is fused and all the data are passed to the Dialogue Processing AIM, which provides the machine’s answer along with the appropriate emotion. Both are passed to a speech synthesiser AIM and a lips animation AIM.
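The chain of AIMs just described can be sketched in a few lines of Python. This is only an illustration of the data flow: the `Emotion` type, the fusion rule (sum the confidence per label and keep the strongest) and the `dialogue` callable are assumptions, not the data formats or algorithms defined by the standard.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Emotion:
    label: str         # e.g. "happy", "angry"
    confidence: float  # 0.0 to 1.0


def fuse_emotions(emotions: List[Emotion]) -> Emotion:
    """Assumed fusion rule: sum the confidence per label, keep the strongest."""
    scores: Dict[str, float] = {}
    for emotion in emotions:
        scores[emotion.label] = scores.get(emotion.label, 0.0) + emotion.confidence
    best = max(scores, key=lambda label: scores[label])
    return Emotion(best, scores[best] / len(emotions))


# Hypothetical Dialogue Processing interface: (text, fused emotion) -> (reply, reply emotion).
Dialogue = Callable[[str, Emotion], Tuple[str, str]]


def conversation_turn(
    text: str, face_emotion: Emotion, speech_emotion: Emotion, dialogue: Dialogue
) -> Dict[str, Tuple[str, str]]:
    """One turn: fuse the emotions, ask Dialogue Processing, feed both output AIMs."""
    fused = fuse_emotions([face_emotion, speech_emotion])
    reply_text, reply_emotion = dialogue(text, fused)
    # The same answer and emotion go to speech synthesis and to lips animation.
    return {
        "speech_synthesis": (reply_text, reply_emotion),
        "lips_animation": (reply_text, reply_emotion),
    }
```

Passing the dialogue step in as a callable mirrors the idea that each AIM can be replaced by another implementation as long as the exchanged data keep the same format.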

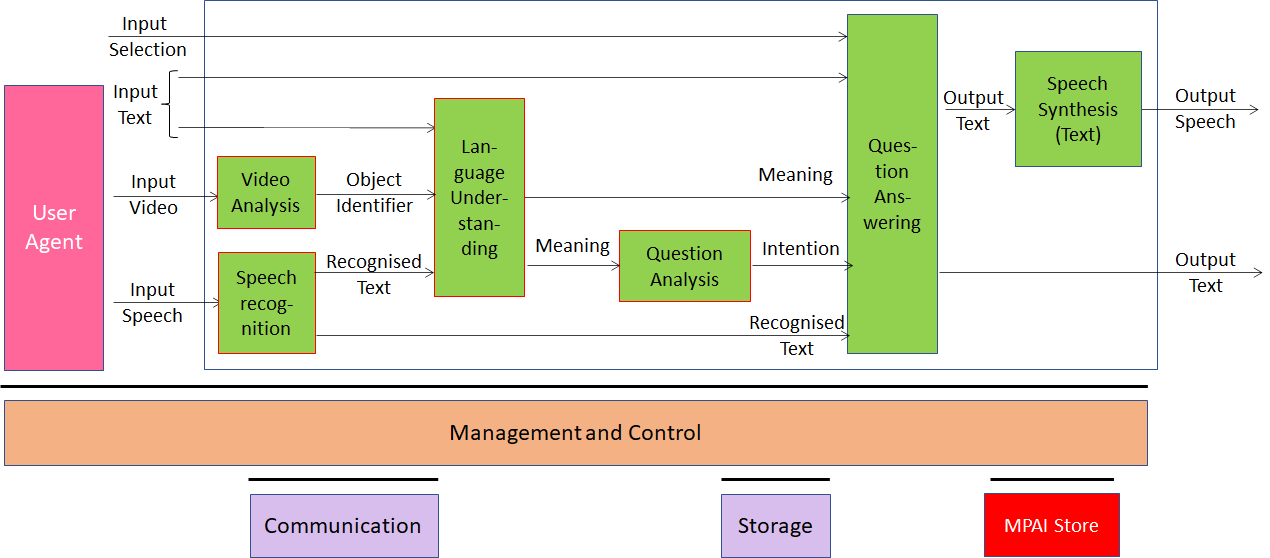

The second case is Multimodal Question Answering (MMC-MQA), depicted in Figure 3. Here MPAI has done the same for a system where a human holds an object in their hand and asks a question about the object, which the machine answers with a synthetic voice.

Figure 3 – Multimodal Question Answering

An AIM recognises the object while a speech recogniser AIM converts the speech of the human to text. The text, together with the information about the object, is processed by the Language Understanding AIM, which produces Meaning; the Question Analysis AIM converts Meaning to Intention. The Question Answering AIM processes the human’s text, Intention and Meaning to produce the machine’s answer, which is finally converted into synthetic speech.

The MPAI-MMC standard is publicly available at https://mpai.community/standards/resources/.

MPAI is now busy developing the Reference Software of the 5 MPAI-MMC Use Cases. At the same time MPAI is exploring other environments where human-machine conversation is possible with technologies within reach.

The third case is conversation between a human and a Connected Autonomous Vehicle (CAV), depicted in Figure 4. In this case the CAV should be able to:

- Recognise that the human indeed has the right to ask the CAV to do something for the human.

- Understand commands like “take me home” and respond by offering a range of possibilities among which the human can choose.

- Respond to other questions while travelling and engage in a conversation with the human.

Figure 4 – Human to Connected Autonomous Vehicle dialogue

The CAV is impersonated by an avatar, which should be able to do several additional things compared to the MMC-CWE case, e.g., distinguish which human in the compartment is asking a question and turn its eyes to that human.

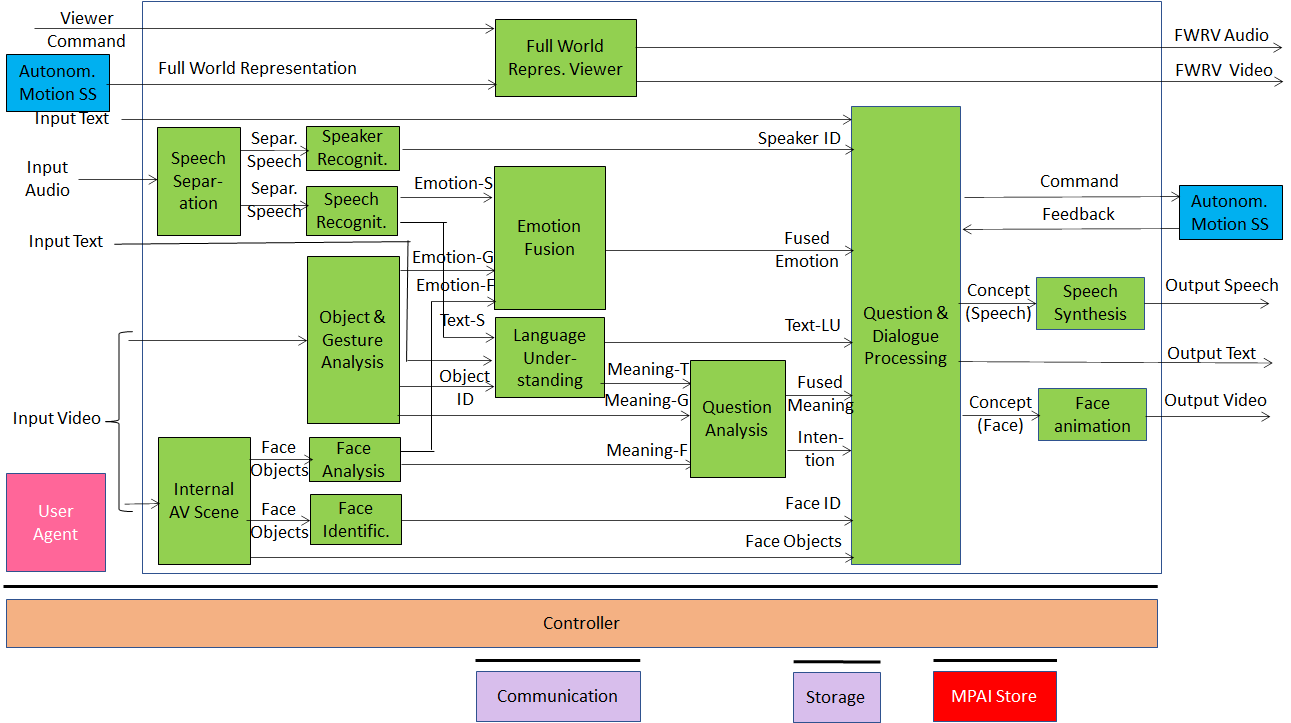

The fourth case, Conversation About a Scene, depicted in Figure 5, is actually an extension of Multimodal Question Answering: a human and a machine hold a conversation about the objects in a scene. The machine understands what the human is saying and which object they are pointing to, and it looks at the changes in the human’s face and speech that denote approval, disapproval, etc.

Figure 5 – Conversation About a Scene

Figure 5 represents the architecture of the AIMs whose concurrent actions allow a human and a machine to have a dialogue. It integrates the emotion-detecting AIMs of MMC-CWE and the question-handling AIMs of MMC-MQA with the AIM that detects the human gesture (“what the human arm/finger is pointing at”) and the AIM that creates a model of the objects in the scene. The Object in Scene AIM fuses the two data streams and provides the object identifier, which is processed in a way similar to MMC-MQA.
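One simple way to picture what the Object in Scene AIM does is to pick the object in the scene model that lies closest to the pointing direction. The Python sketch below does exactly that and nothing more. The function name, the 3D coordinates and the smallest-angle rule are assumptions made for illustration; the actual AIM may work quite differently.

```python
import math
from typing import Dict, Sequence


def pointed_object(
    origin: Sequence[float],
    direction: Sequence[float],
    objects: Dict[str, Sequence[float]],
) -> str:
    """Return the id of the object whose position makes the smallest angle
    with the pointing ray (assumed rule, for illustration only).

    Assumes `direction` is non-zero and no object sits exactly at `origin`.
    """

    def angle_to(position: Sequence[float]) -> float:
        to_object = [p - o for p, o in zip(position, origin)]
        dot = sum(a * b for a, b in zip(to_object, direction))
        norms = math.hypot(*to_object) * math.hypot(*direction)
        # Clamp to [-1, 1] to guard against floating-point rounding.
        return math.acos(max(-1.0, min(1.0, dot / norms)))

    return min(objects, key=lambda object_id: angle_to(objects[object_id]))


# Gesture data (hand position and pointing direction) plus a scene model.
scene = {"cup": (2.0, 0.1, 0.0), "book": (0.0, 2.0, 0.0)}
selected = pointed_object(origin=(0.0, 0.0, 0.0), direction=(1.0, 0.0, 0.0), objects=scene)
```

The returned identifier is what would then be handed to the question-handling AIMs, in the same way as the recognised object in MMC-MQA.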

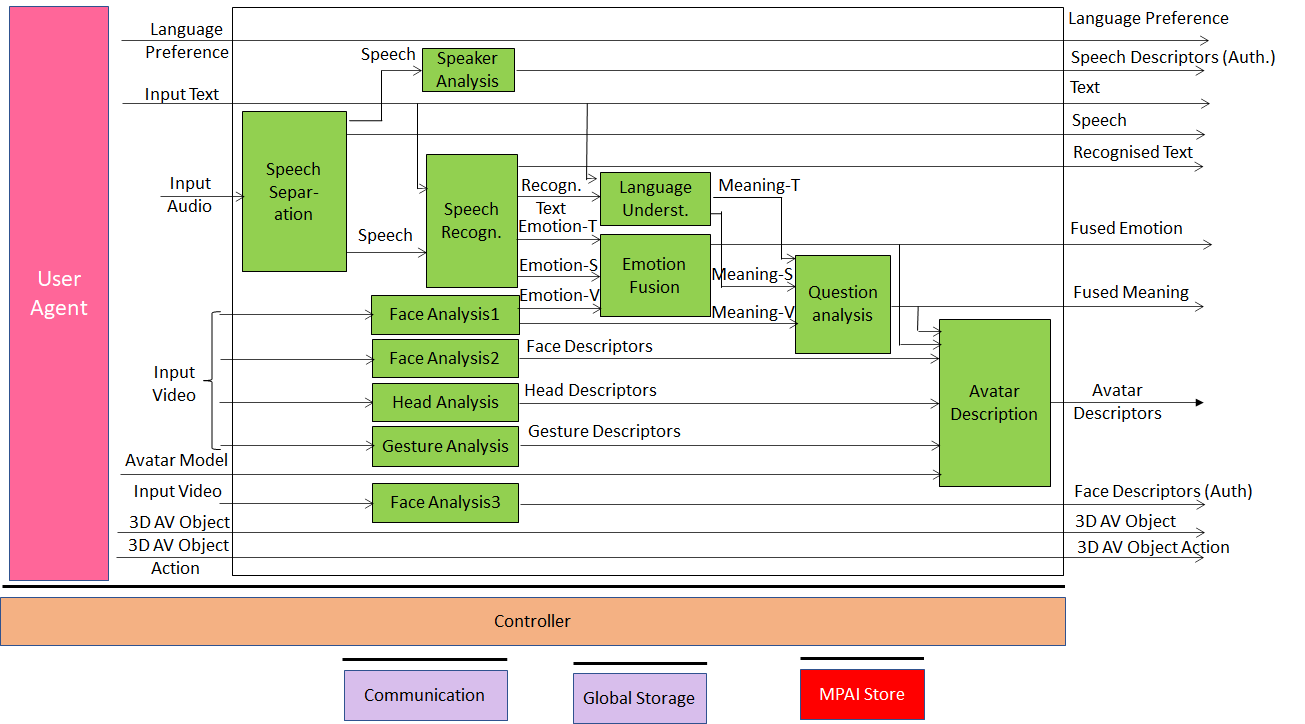

The fifth case is part of a recent MPAI project called Mixed-reality Collaborative Spaces (MPAI-MCS), depicted in Figure 6. It applies to scenarios where geographically separated humans collaborate in real time with speaking avatars in virtual-reality spaces called ambients, to achieve goals generally defined by the use scenario and specifically carried out by humans and avatars.

Figure 6 – Mixed-reality Collaborative Space

Strictly speaking, in MCS the problem is not conversation with a machine but creation of a virtual twin of a human (an avatar) that looks like and behaves in a similar way to its physical twin. Many of the AIMs we need for this case are similar to, and in some cases exactly the same as, those needed by MMC-CWE and MMC-MQA: we need to capture the emotion or the meaning on the face and in the speech of the physical twin so that we can map them to the virtual twin.

MPAI meetings in November-December (draft)

| Group name | 22-26 | 29-03 | 06-10 | 13-17 | 20-24 | Time |
|---|---|---|---|---|---|---|
| Mixed-reality Collaborative Spaces | | 29 | 6 | 13 | 20 | 14 |
| AI Framework | | 29 | 6 | 13 | 20 | 15 |
| Multimodal Conversation | | 30 | 7 | 14 | 21 | 14:30 |
| Context-based Audio Enhancement | | 30 | 7 | 14 | 21 | 15:30 |
| Connected Autonomous Vehicles | | 1 | 8 | 15 | 22 | 13 |
| AI-Enhanced Video Coding | | 1 | | 15 | | 14 |
| AI-based End-to-End Video Coding | | | 8 | | 21 | 14 |
| Compression and Understanding of Industrial Data | | | 8 | 15 | | 15 |
| Server-based Predictive Multiplayer Gaming | | 8 | 9 | 16 | 22 | 13:30 |
| Communication | 25 | 8 | 9 | 16 | 23 | 14 |
| Industry and Standards | | | 10 | | | 14 |
| General Assembly (MPAI-15) | 24 | | | | | |