2022/03/27

MPAI issues a Call for Patent Pool Administrator for two of its standards

Remuneration of IP is one of the driving forces of progress. So far, however, IP management has not been particularly clever. Implementing more rational management of IP in standards was therefore one of the reasons MPAI was established. MPAI’s standards development process unfolds as follows:

- After the General Assembly approves the functional requirements of a standard project, MPAI adopts a Framework Licence.

- The Framework Licence sets out some elements of the eventual licence without specifying values such as costs, percentages or dates. Instead, it contains general clauses on licence availability. For instance, the licence should be made available “not after products are on the market” and “at total costs that are in line with the total cost of the licenses for similar data coding technologies”.

- Acceptance of the Framework Licence is a condition for contributing to the standard.

- When the General Assembly approves the standard, MPAI identifies the patent holders.

- Patent holders select their preferred patent pool administrator.

Patent holders of Context-based Audio Enhancement (MPAI-CAE) and Multimodal Conversation (MPAI-MMC) have agreed to issue a Call for Patent Pool Administrator and have asked MPAI to publish the call on its website. The patent holders expect to work with the selected entity to facilitate a licensing program that responds to the requirements of the licensees while ensuring the commercial viability of the program. In the future, the coverage of the patent pool may be extended to new versions of MPAI-CAE and MPAI-MMC, and/or to other MPAI standards.

The reason for having a single patent pool covering both standards is that, unlike monolithic standards such as HEVC or VVC, MPAI standards are “containers” of use cases.

MPAI-CAE provides standard technologies for 4 use cases. The first is Emotion Enhanced Speech, which lets you add a desired emotion or “colour” to emotionless speech. The second is Audio Recording Preservation, which provides a set of AI-based technologies to cut the cost of preserving open audio reel tapes. Unsurprisingly, the two sets of technologies underpinning these use cases have little in common.

MPAI-MMC, on the other hand, has a use case called Automatic Speech Translation. This lets you speak a sentence in one language (say, English) and have the machine utter it in another (say, Korean). The Korean utterance is synthetic but retains the “colour” of the original English utterance.

Automatic Speech Translation has much in common with Emotion Enhanced Speech. The Emotion Enhanced Speech patent holders are therefore likely to team up with the Automatic Speech Translation patent holders.

This decision belongs to the patent holders and is outside MPAI’s purview. MPAI’s involvement in publishing the call is based on the role assigned to it by the MPAI Statutes: to facilitate the establishment of viable patent pools.

Parties interested in being selected as the entity administering the patent pool are requested to communicate their interest to the MPAI Secretariat no later than 1 May 2022, together with an appropriate description of their qualifications. The Secretariat will forward the received material to the patent holders.

Video coding in MPAI

Video coding was one of the first areas addressed by MPAI. Video coding research focuses on improving the classic block-based hybrid coding framework to offer more efficient video compression solutions, and AI approaches can play an important role in achieving this goal.

Based on its survey of AI-based video coding, MPAI roughly estimates that an improvement of up to 30% can be achieved.

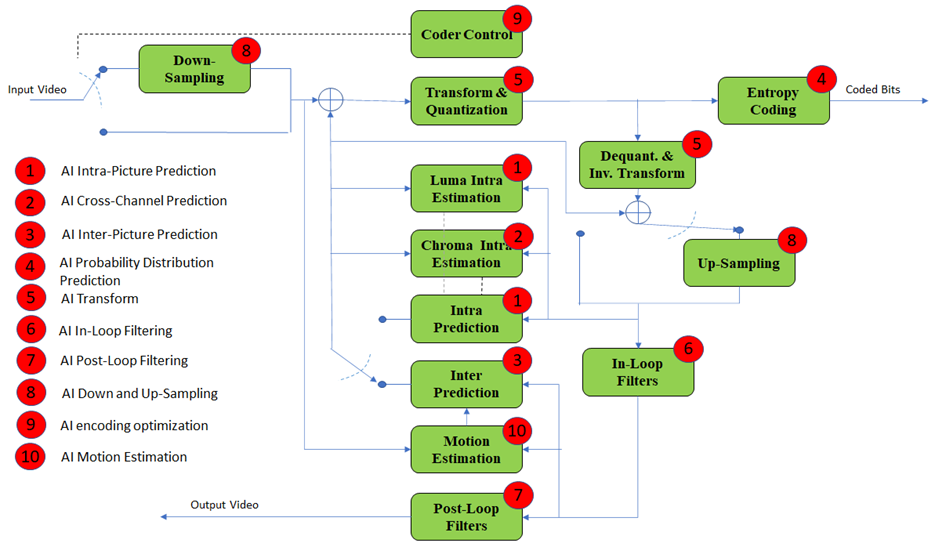

Figure 1 identifies 10 coding tools that could potentially be improved by AI tools.

Figure 1 – A classic block-based hybrid coding framework

MPAI’s strategy is to start from a high-performance “clean-sheet” data-processing-based coding scheme and add AI-enabled improvements to it, rather than start from a scheme burdened by IP problems in which some data-processing technologies provide only marginal improvements.

Therefore, MPAI is investigating whether the performance of the MPEG-5 Essential Video Coding (EVC) standard can be improved by enhancing or replacing its existing coding tools with AI tools. In particular, the EVC Baseline Profile has been selected because it employs technologies that are more than 20 years old and yet has compression performance close to that of HEVC.

MPAI calls this project MPAI AI-Enhanced Video Coding (MPAI-EVC). It has deliberately decided not to initiate a standard project at this time, because it first wants to set up a unified platform on which experiments can be conducted and to demonstrate that a 25% coding performance improvement can be achieved. The current activity is therefore called the “MPAI-EVC Evidence Project”.

Significant results have already been achieved by a growing group that includes both MPAI members and non-members, as MPAI’s policy allows non-members to participate in its preliminary activities.

Once the Evidence Project demonstrates that AI tools can improve MPEG-5 EVC coding efficiency by at least 25%, MPAI will be in a position to initiate work on the MPAI-EVC standard. The functional requirements have already been developed and only need to be revised. The Framework Licence will then be developed and the Call for Technologies issued.

MPAI-EVC is intended to cover short-to-medium-term video coding needs. Contact the MPAI Secretariat if you wish to participate.

MPAI has a second video coding project motivated by the consensus in the video coding research community that so-called End-to-End (E2E) video coding schemes can yield significantly higher performance in the longer term.

MPAI has initiated the MPAI End-to-End Video Coding (MPAI-EEV) project in its role as a technical body whose mission is the provision of efficient and usable data coding standards, unconstrained by legacy IP.

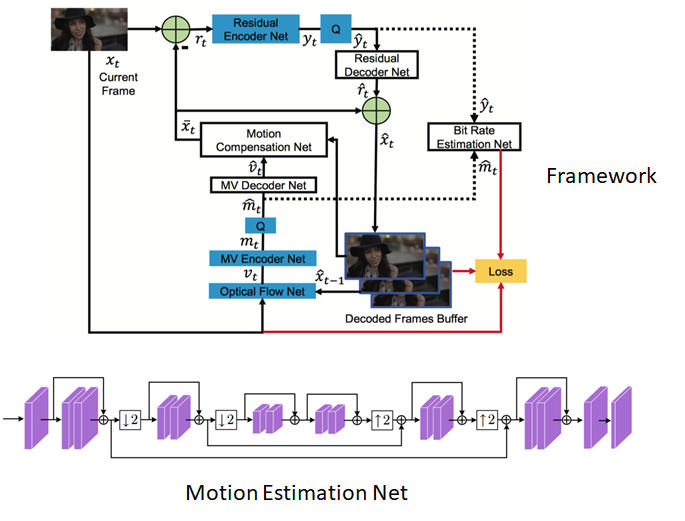

MPAI has selected OpenDVC as a starting point and is investigating the addition of novel motion compensation networks.

Figure 2 – The OpenDVC reference model with a motion compensation network

Participation in MPAI-EEV, too, is open to non-members. Contact the MPAI Secretariat if you wish to participate.

Schedule of MPAI meetings

| Group name | 03/28-04/01 | 04/04-04/08 | 04/11-04/15 | 04/18-04/22 | Time (UTC) |
|---|---|---|---|---|---|
| Governance of MPAI Ecosystem | 28 | 4 | 11 | 18 | 16 |
| AI Framework | 28 | 4 | 11 | | 17 |
| Mixed-reality Collaborative Spaces | 28 | 4 | 11 | 18 | 18 |
| Multimodal Conversation | 29 | 5 | 12 | 19 | 14 |
| Neural Network Watermarking | 29 | 5 | 12 | 19 | 15 |
| Context-based Audio Enhancement | | 5 | 12 | 19 | 16 |
| Connected Autonomous Vehicles | 30 | 6 | 13 | 20 | 13 |
| AI-Enhanced Video Coding | 30 | | 13 | | 14 |
| AI-based End-to-End Video Coding | | | | 20 | 13 |
| | | 6 | | | 14 |
| Avatar Representation and Animation | 31 | 7 | 14 | | 13:30 |
| Server-based Predictive Multiplayer Gaming | 31 | 7 | 14 | | 14:30 |
| Communication | 31 | | 14 | | 15 |
| Industry and Standards | | 8 | | | 16 |
| General Assembly (MPAI-19) | | | | 20 | 15 |