In this issue:

- 23 August: An MPAI watershed

- AI Framework (MPAI-AIF) V2

- Artificial Intelligence for Health Data (MPAI-AIH)

- Avatar Representation and Animation (MPAI-ARA)

- Connected Autonomous Vehicle (MPAI-CAV) – Architecture

- Multimodal Conversation (MPAI-MMC) V2

- MPAI Metaverse Model (MPAI-MMM) – Architecture

- Object and Scene Description (MPAI-OSD)

- XR Venues (MPAI-XRV) – Live Theatrical Stage Performance

- Meetings in the coming August-September meeting cycle

23 August: An MPAI watershed

The 35th MPAI General Assembly (MPAI-35) has been a watershed because it approved the publication of:

- Three Calls for Technologies:

  - Artificial Intelligence for Health Data (MPAI-AIH)

  - Object and Scene Description (MPAI-OSD)

  - XR Venues (MPAI-XRV) – Live Theatrical Stage Performance

- Five Standards with request for Community Comments for publication on 29 September:

  - AI Framework (MPAI-AIF) V2

  - Avatar Representation and Animation (MPAI-ARA)

  - Connected Autonomous Vehicle (MPAI-CAV) – Architecture

  - Multimodal Conversation (MPAI-MMC) V2

  - MPAI Metaverse Model (MPAI-MMM) – Architecture

MPAI is organising a series of paired online presentations for all eight projects. More information is available in the tables below and their links. All times are in UTC (-7 WC, -4 EC, +1 UK-PT, +2 EU, +5.5 IN, +8 CN, +9 KR-JP). P1 and P2 are the registration links for the morning and afternoon presentation sessions, respectively (the links are on the times: in “Sep 08 08 & 15”, for example, 08 and 15 are the registration links).

| Call for Technologies | Link | Presentation | Deadline |
|---|---|---|---|
| AI for Health Data (AIH) | X | Sep 08 08 & 15 | Oct 19 |
| Object and Scene Description (OSD) | X | Sep 07 09 & 16 | Sep 20 |
| XR Venues – Live Theatrical Stage Performance (XRV) | X | Sep 12 07 & 17 | Nov 20 |

| Standard for Community Comments | Link | Presentation | Deadline |
|---|---|---|---|
| AI Framework (AIF) V2 | X | Sep 11 08 & 15 | Sep 24 |
| Avatar Representation and Animation (ARA) | X | Sep 07 08 & 15 | Sep 27 |
| Connected Autonomous Vehicles – Architecture (CAV) | X | Sep 06 08 & 15 | Sep 26 |
| Multimodal Conversation (MMC) V2 | X | Sep 05 08 & 15 | Sep 25 |
| MPAI Metaverse Model – Architecture (MMM) | X | Sep 01 08 & 15 | Sep 21 |
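As a convenience for readers outside UTC, the offsets listed above can be applied mechanically. The following is a minimal sketch, not an MPAI tool: the function name `local_times` and the `OFFSETS` table are illustrative, the offsets are copied from the list above, and the year 2023 is assumed.

```python
from datetime import datetime, timedelta, timezone

# Offsets from UTC in hours, copied from the list in this newsletter.
OFFSETS = {
    "WC": -7, "EC": -4, "UK-PT": 1, "EU": 2, "IN": 5.5, "CN": 8, "KR-JP": 9,
}


def local_times(month: int, day: int, hour_utc: int, year: int = 2023) -> dict:
    """Return the local start time (MM-DD HH:MM) of a UTC session for each region."""
    start = datetime(year, month, day, hour_utc, tzinfo=timezone.utc)
    return {
        region: start.astimezone(timezone(timedelta(hours=offset))).strftime("%m-%d %H:%M")
        for region, offset in OFFSETS.items()
    }


# Example: the two AI Framework V2 sessions on Sep 11 at 08 and 15 UTC.
for hour in (8, 15):
    print(f"{hour:02d} UTC ->", local_times(9, 11, hour))
```

Note that an afternoon session can fall on the next calendar day in some regions, which is why the sketch returns the date together with the time.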

AI Framework (MPAI-AIF) V2

The MPAI approach to AI standards is based on the belief that breaking up large AI applications into smaller elements (AI Modules), combined in workflows (AIW) and exchanging data with known semantics (to the extent possible), not only improves the explainability of AI applications but also promotes a competitive market of components with standard interfaces, possibly with improved performance compared to other implementations.

MPAI-AIF specifies an environment where it is possible to execute AI Workflows composed of AI Modules. The first step in this process was made in October 2021 when the first version of the MPAI AI Framework standard was published.
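The idea of modules with known interfaces combined into a workflow can be illustrated with a toy sketch. This is not the MPAI-AIF API: the classes `AIModule` and `AIWorkflow`, their fields, and the two example modules are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class AIModule:
    """Hypothetical AI Module: a named step with declared input and output data names."""
    name: str
    inputs: List[str]
    outputs: List[str]
    run: Callable[[Dict[str, object]], Dict[str, object]]


@dataclass
class AIWorkflow:
    """Hypothetical AI Workflow: modules executed in order over a shared data store."""
    modules: List[AIModule]

    def execute(self, data: Dict[str, object]) -> Dict[str, object]:
        store = dict(data)
        for module in self.modules:
            # A module only sees the data it declares as inputs.
            missing = [key for key in module.inputs if key not in store]
            if missing:
                raise KeyError(f"{module.name} is missing inputs: {missing}")
            result = module.run({key: store[key] for key in module.inputs})
            # A module may only produce the data it declares as outputs.
            unexpected = set(result) - set(module.outputs)
            if unexpected:
                raise ValueError(f"{module.name} produced undeclared data: {unexpected}")
            store.update(result)
        return store


# Two toy modules; any replacement with the same interface could be swapped in.
tokenizer = AIModule("Tokenizer", ["text"], ["tokens"],
                     lambda d: {"tokens": d["text"].split()})
counter = AIModule("Counter", ["tokens"], ["count"],
                   lambda d: {"count": len(d["tokens"])})

workflow = AIWorkflow([tokenizer, counter])
result = workflow.execute({"text": "AI Modules combined in workflows"})
print(result["count"])
```

Because each module declares what it consumes and produces, the data flowing between steps can be inspected, and a module can be replaced by another implementation with the same interface without touching the rest of the workflow.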

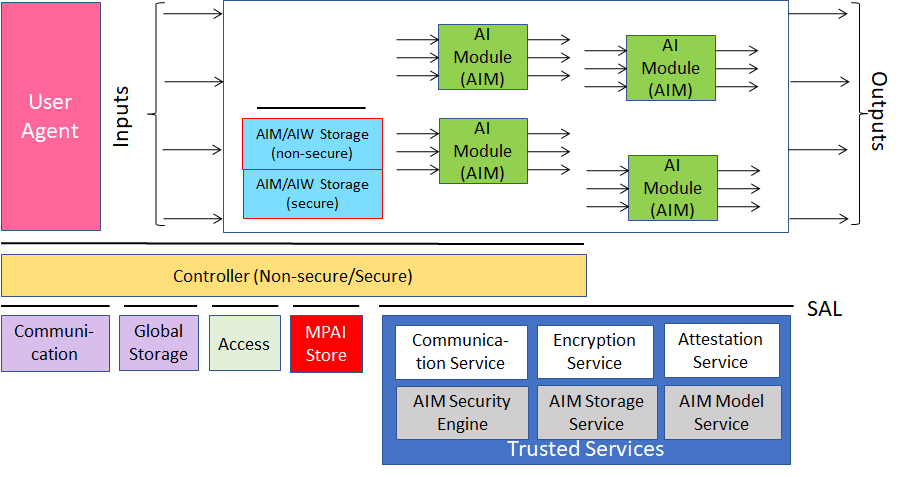

MPAI-AIF V1 assumed that the AI Framework was secure but did not provide support to developers wishing to execute an AI application in a secure environment. MPAI-AIF V2 responds to this requirement. As shown in Figure 1, the standard defines a Security Abstraction Layer (SAL). By accessing the SAL APIs, a developer can indeed create the required level of security with the desired functionalities.

Figure 1 – Reference Model of MPAI-AIF V2

MPAI is publishing a Working Draft of MPAI-AIF V2 and is requesting the community to comment on it. Comments will be used to develop the final draft that MPAI intends to publish at the 36th General Assembly (29 September 2023).

An online presentation of the AI Framework WD will be held on September 11 at 08 and 15 UTC. In the meantime, please read the document at https://mpai.community/standards/mpai-aif/.

The deadline for submitting a response is September 24 at 23:59 UTC.

Artificial Intelligence for Health Data (MPAI-AIH)

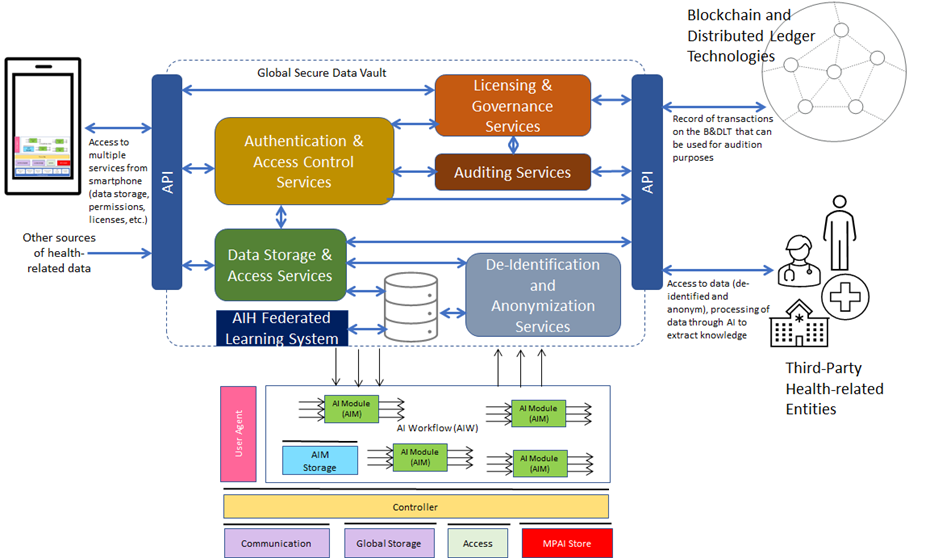

MPAI-AIH aims to specify the interfaces and the relevant data formats of a system called AI Health Platform (AIH Platform) where:

- End Users use handsets with an MPAI AI Framework (AI Health Frontends) to acquire and process health data.

- An AIH Backend collects processed health data delivered by AIH Frontends with associated Smart Contracts specifying the rights granted by End Users.

- Smart Contracts are stored on a blockchain.

- Third Party Users can process their own and End User-provided data based on the relevant smart contracts.

- The AIH Backend periodically collects the AI Models trained by the AIH Frontends while processing the health data, updates its AI Model and distributes it to AI Health Platform Frontends (Federated Learning).

This is depicted in Figure 2 (for simplicity the security part of the AI Framework is not included).

Figure 2 – The AI Health Platform
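The Federated Learning step described above (the Backend collects the models trained by the Frontends, updates its own model and redistributes it) can be sketched as follows. The newsletter does not say how the Backend combines the models; the sample-weighted averaging used here (in the style of federated averaging) is an assumption, and the function name and data layout are hypothetical.

```python
from typing import Dict, List

# Hypothetical model representation: parameter name -> list of values.
Weights = Dict[str, List[float]]


def federated_average(frontend_models: List[Weights], sample_counts: List[int]) -> Weights:
    """Combine Frontend models into one Backend model, weighting by local sample count."""
    if not frontend_models or len(frontend_models) != len(sample_counts):
        raise ValueError("need one sample count per frontend model")
    total = sum(sample_counts)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    averaged: Weights = {}
    for name, reference in frontend_models[0].items():
        averaged[name] = [
            sum(model[name][i] * count
                for model, count in zip(frontend_models, sample_counts)) / total
            for i in range(len(reference))
        ]
    return averaged


# Two Frontends: the second trained on three times as much data as the first.
backend_model = federated_average(
    [{"w": [1.0, 2.0]}, {"w": [3.0, 4.0]}],
    sample_counts=[1, 3],
)
print(backend_model)
```

In this scheme only model parameters travel to the Backend, while the health data itself stays on the Frontend.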

MPAI is seeking proposals of technologies that enable the implementation of standard components (AI Modules) to make real the vision described above.

An online presentation of the Call for Technologies, the Use Cases and Functional Requirements, and the Framework Licence will be held on September 08 at 08 and 15 UTC. In the meantime, please read the documents at https://mpai.community/standards/mpai-aih/.

The deadline for submitting a response is October 19 at 23:59 UTC.

Avatar Representation and Animation (MPAI-ARA)

There is a long history of computer-created objects called “digital humans”, i.e., digital objects that can be rendered to show a human appearance. In most cases the underlying assumption has been that the creation, animation, and rendering of these objects are done in a closed environment. Such digital humans had little or no need for standards. However, in a communication context, and even more in a metaverse context, there are many cases where a digital human is not constrained within a closed environment. For instance, a client may send data to a remote client that should be able to unambiguously interpret and use the data to reproduce a digital human as intended by the transmitting client.

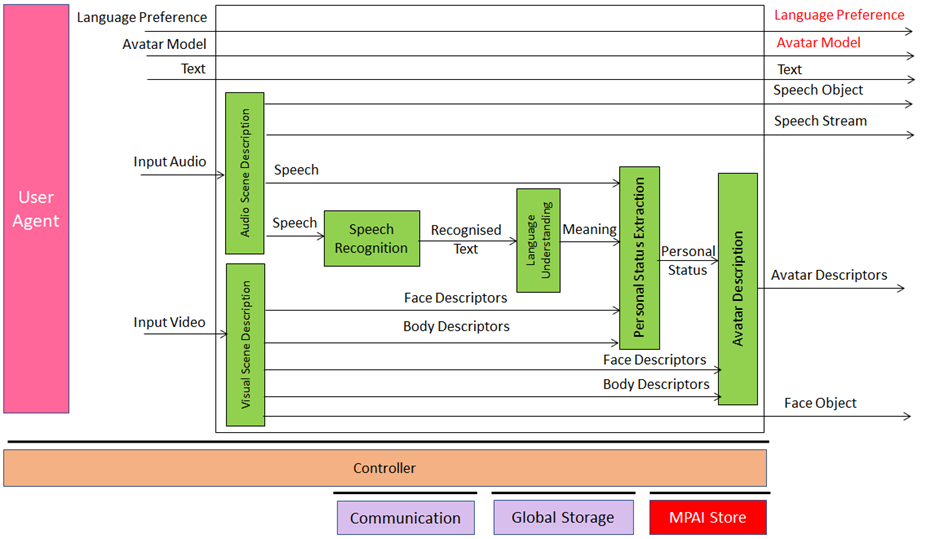

These new usage scenarios require standards. Technical Specification: Avatar Representation and Animation is a first response to the needs of users who wish to have their transmitting client send data that a remote client can interpret to render a digital human whose body movements and facial expressions reproduce their own.

Figure 3 depicts the reference model of the transmitting Client.

Figure 3 – Reference Model of Avatar Videoconference Transmitting Client

MPAI is publishing a Working Draft of MPAI-ARA V1 and is requesting the community to make comments on the WD by 27 September 2023. Comments will be used to develop the final draft that MPAI intends to publish at the 36th General Assembly (29 September 2023).

An online presentation of the WD will be held on September 07 at 08 and 15 UTC. In the meantime, please read the document at https://mpai.community/standards/mpai-ara/.

The deadline for submitting a response is September 27 at 23:59 UTC.

Connected Autonomous Vehicle (MPAI-CAV) – Architecture

Today, self-driving cars are not only technically possible; several implementations already exist. They promise to bring benefits that will positively affect industry, society, and the environment. Therefore, the transformation of what can be called today’s “niche market” into tomorrow’s expected vibrant mass market is a goal of high societal importance. Rather than relying on market forces and waiting for users to demand cars with progressively more advanced autonomy, MPAI believes that the goal should be reached by making available the specification of the Architecture of a Connected Autonomous Vehicle (CAV) that includes:

- A CAV Reference Model broken down into Subsystems.

- The Functions of each Subsystem.

- The Data exchanged between Subsystems.

- A breakdown of each Subsystem in Components of which the following is specified:

  - The Functions of the Components.

  - The Data exchanged between Components.

  - The Topology of Components.

- Subsequently, Functional Requirements of the Data exchanged.

- Eventually, standard technologies for the Data exchanged.

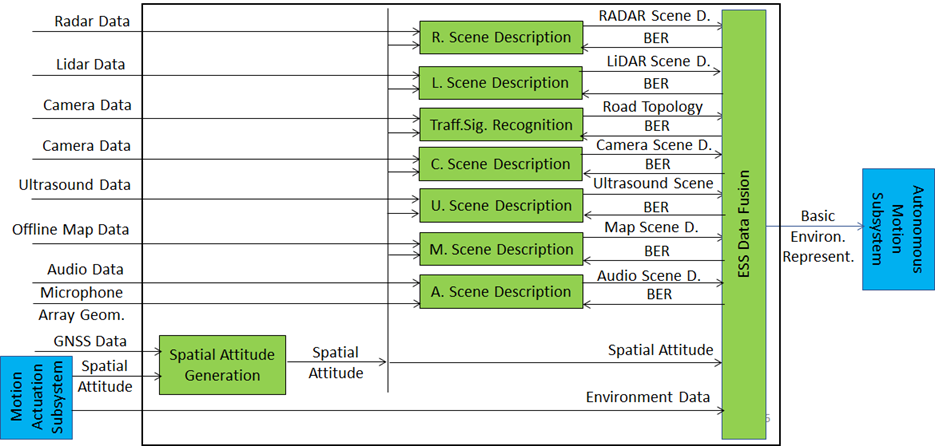

Figure 4 depicts the reference model of the Environment Sensing Subsystem, which is tasked with sensing the environment with a variety of technologies and creating the Basic Environment Representation.

Figure 4 – Environment Sensing Subsystem Reference Model

MPAI is publishing a Working Draft of MPAI-CAV V1 and is requesting the community to make comments on the WD. Comments will be used to develop the final draft that MPAI intends to publish at the 36th General Assembly (29 September 2023).

An online presentation of the WD will be held on September 06 at 08 and 15 UTC. In the meantime, please read the document at https://mpai.community/standards/mpai-cav/.

The deadline for submitting a response is September 26 at 23:59 UTC.

Multimodal Conversation (MPAI-MMC) V2

The MPAI mission is to develop AI-enabled data coding standards. MPAI believes that its standards should enable humans to select machines whose internal operation they understand to some degree, rather than machines that are “black boxes” resulting from unknown training with unknown data. Thus, an implemented MPAI standard breaks up monolithic AI applications, yielding a set of interacting components with identified data whose semantics is known, as far as possible.

Technical Specification: Multimodal Conversation (MPAI-MMC) is an implementation of this vision for human-machine conversation. Extending the role of emotion as introduced in Version 1 of the standard, MPAI-MMC V2 introduces Personal Status, the representation of the internal status of humans that a machine estimates and that it artificially creates for itself with the goal of improving its conversation with the human or even with another machine. Personal Status is applied to MPAI-MMC-specific Use Cases, such as Conversation about a Scene, Virtual Secretary for Videoconference, and Human-Connected Autonomous Vehicle Interaction.

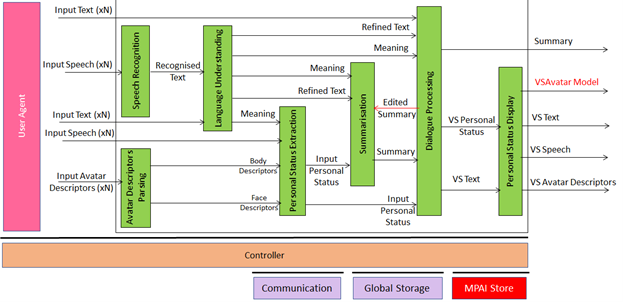

Figure 5 depicts the reference model of the Virtual Secretary for Videoconference.

Figure 5 – Reference Model of the Virtual Secretary for Videoconference

MPAI is publishing a Working Draft of MPAI-MMC V2 and is requesting the community to make comments on the WD. Comments will be used to develop the final draft that MPAI intends to publish at the 36th General Assembly (29 September 2023).

An online presentation of the WD will be held on September 05 at 08 and 15 UTC. In the meantime, please read the document at https://mpai.community/standards/mpai-mmc/.

The deadline for submitting a response is September 25 at 23:59 UTC.

MPAI Metaverse Model (MPAI-MMM) – Architecture

Metaverse is a word that conveys different meanings to different people. Generally, a metaverse is characterised as a system that captures data from the real world, processes it, and combines it with internally generated data to create virtual environments that users can interact with. System developers have made technology decisions that best responded to their needs, often without considering the choices that other developers might have made for similar purposes.

Recently, however, there have been mounting concerns that such metaverse “walled gardens” do not fully exploit the opportunities offered by current and expected technologies. Calls have been made to make metaverse instances “Interoperable”.

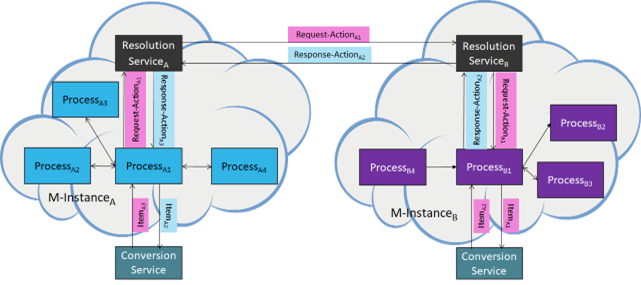

Two MPAI Technical Reports on Metaverse Functionalities and Functionality Profiles published earlier this year have laid down the groundwork. With the Technical Specification – MPAI Metaverse Model – Architecture, MPAI provides the first Interoperability tools by specifying the Functional Requirements of Processes, Items, Actions, and Data Types that allow two or more metaverse instances to Interoperate via a Conversion Service if they implement the Operation Model and produce Data whose Format complies with the Specification’s Functional Requirements.

Figure 6 depicts one aspect of the Specification where a Process in a metaverse instance requests a Process in another metaverse instance to perform an Action by relying on the instances’ Resolution Service.

Figure 6 – Resolution and Conversion Services

MPAI is publishing a Working Draft of MPAI-MMM – Architecture V1 and is requesting the community to make comments on the WD. MPAI intends to publish the final version at the 36th General Assembly on 29 September 2023.

An online presentation of the WD will be held on September 01 at 08 and 15 UTC. In the meantime, please read the document at https://mpai.community/standards/mpai-mmm/.

The deadline for submitting a response is September 21 at 23:59 UTC.

Object and Scene Description (MPAI-OSD)

Object and Scene Description is a project seeking to define a set of technologies for coordinated use in the many use cases targeted by MPAI projects and standards. Examples are: Spatial Attitude, Point of View, Audio Scene Descriptors, Visual Scene Descriptors, Audio-Visual Scene Description, and Instance Identifier.

MPAI is seeking proposals of technologies that enable the implementation of standard components (AI Modules) to make real the vision described above.

An online presentation of the Call for Technologies, the Use Cases and Functional Requirements, and the Framework Licence will be held on September 07 at 09 and 16 UTC. In the meantime, please read the documents at https://mpai.community/standards/mpai-osd/.

The deadline for submitting a response is September 20 at 23:59 UTC.

XR Venues (MPAI-XRV) – Live Theatrical Stage Performance

Theatrical stage performances such as Broadway theatre, musicals, dramas, operas, and other performing arts increasingly use video scrims, backdrops, and projection mapping to create digital sets rather than constructing physical stage sets. This allows animated backdrops and reduces the cost of mounting shows. The use of immersion domes – especially LED volumes – promises to surround audiences with virtual environments that the live performers can inhabit and interact with.

The MPAI XR Venues (XRV) – Live Theatrical Stage Performance project intends to define AI Modules that facilitate setting up live multisensory immersive stage performances, which ordinarily require extensive on-site show control staff to operate. With XRV it will be possible to have more direct, precise yet spontaneous show implementation and control that achieve the show director’s vision but free staff from repetitive and technical tasks, letting them amplify their artistic and creative skills.

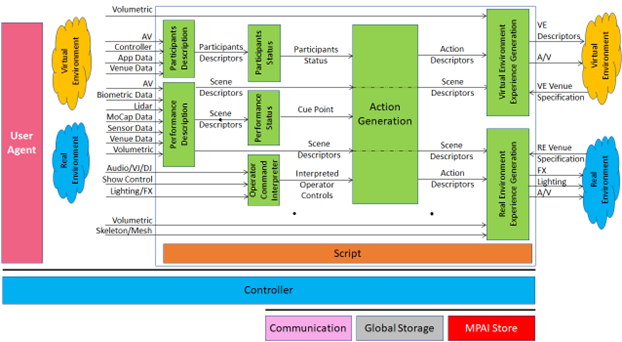

An XRV Live Theatrical Stage Performance can extend into the metaverse as a digital twin. In this case, elements of the Virtual Environment experience can be projected in the Real Environment and elements of the Real Environment experience can be rendered in the Virtual Environment (metaverse).

Figure 7 shows how the XRV system captures the Real (stage and audience) and Virtual (metaverse) Environments, AI-processes the captured data, and injects new components into the Real and Virtual Environments.

Figure 7 – Reference Model of MPAI-XRV – Live Theatrical Stage Performance

MPAI is seeking proposals of technologies that enable the implementation of standard components (AI Modules) to make real the vision described above.

An online presentation of the Call for Technologies, the Use Cases and Functional Requirements, and the Framework Licence will be held on September 12 at 07 and 17 UTC. In the meantime, please read the documents at https://mpai.community/standards/mpai-xrv/.

The deadline for submitting a response is November 20 at 23:59 UTC.

Meetings in the coming August-September meeting cycle

| Group name | Meeting days (25 Aug – 29 Sep) | Time (UTC) |
|---|---|---|
| AI Framework | 28, 11, 18, 25 | 15 |
| AI-based End-to-End Video Coding | 30, 13, 27 | 14 |
| AI-Enhanced Video Coding | 6, 20 | 14 |
| Artificial Intelligence for Health Data | 1, 15 | 14 |
| Avatar Representation and Animation | 31, 14, 21, 28 | 13:30 |
| Communication | 31, 14 | 15 |
| Connected Autonomous Vehicles | 30, 13, 20, 27 | 15 |
| Context-based Audio enhancement | 29, 5, 12, 19, 26 | 16 |
| Governance of MPAI Ecosystem | 25 | 16 |
| Industry and Standards | 1, 15 | 16 |
| MPAI Metaverse Model | 8, 29 | 14 |
| | 25, 15, 22 | 15 |
| Multimodal Conversation | 29, 12, 19, 26 | 14 |
| Neural Network Watermarking | 5 | 14 |
| | 29, 12, 19, 26 | 15 |
| Server-based Predictive Multiplayer Gaming | 31, 7, 14, 21, 28 | 14:30 |
| XR Venues | 29, 5, 19, 26 | 17 |
| General Assembly (MPAI-36) | 29 | 15 |

This newsletter serves the purpose of keeping the expanding diverse MPAI community connected.

We are keen to hear from you, so don’t hesitate to give us your feedback.