Highlights

- Five years on, MPAI takes stock of its journey and sets its sights on new challenges

- The MPAI Ecosystem

- New versions of MPAI standards

- New developments in audio

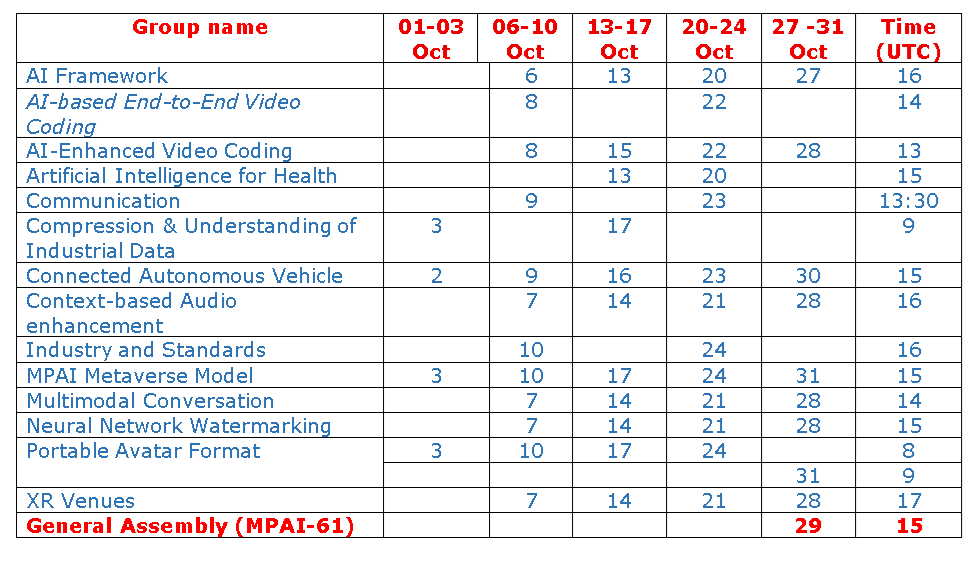

- Meetings in the October 2025 cycle

Five years on, MPAI takes stock of its journey and sets its sights on new challenges

While some organisations count their existence in decades or centuries, an organisation that has existed for only five years might seem to have little to celebrate. But not all years, or even all days, are equal: better one day as a lion than a hundred years as a sheep.

The last five years were not those of a sheep but of a lion.

We started with the idea of an organisation dedicated to standards for AI-based data coding because we thought that standards would benefit a domain then largely unfamiliar with them. Not standards that look more like legal tools designed to oppress users, but standards offering fair opportunities to all parties in the chain, from innovators to end users.

An ambitious organisation like MPAI could not operate like four friends in a bar. MPAI developed its operating rules, which are now enshrined in the MPAI Patent Policy. MPAI’s ambitions grew further with the definition of the MPAI Ecosystem, extending from MPAI to implementers, integrators, and end users, and with the introduction of a new actor, the MPAI Store, now incorporated in Scotland as a company limited by guarantee. A standard, Governance of the MPAI Ecosystem (MPAI-GME), sets the rules of operation of the Ecosystem.

The mission was clear, but how could it be implemented? We acted as lions and posited that monolithic AI should become component-based AI. Now a large share of our standards are based on the AI Framework (MPAI-AIF) standard, which specifies an environment where AI Workflows composed of AI Modules can be initialised, dynamically configured, and controlled. MPAI-AIF also spurred the adoption of JSON Schema as a “language” to represent data types, AI Modules, and AI Workflows in MPAI standards. Today virtually every MPAI standard uses that language.
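To make the idea of component-based AI concrete, here is a minimal Python sketch under a deliberately simplified model that is not the MPAI-AIF specification: an AI Workflow is a set of AI Modules whose typed output ports are wired to typed input ports, and the resulting topology is serialised as JSON, the kind of document a JSON Schema could then validate. The class names `AIModule` and `AIWorkflow`, the module, port and Data Type names, and the JSON layout are all hypothetical.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class AIModule:
    """Hypothetical AI Module: named ports, each carrying a Data Type name."""
    name: str
    inputs: dict[str, str]
    outputs: dict[str, str]


@dataclass
class AIWorkflow:
    """Hypothetical AI Workflow: AI Modules plus typed port-to-port connections."""
    name: str
    modules: dict[str, AIModule] = field(default_factory=dict)
    connections: list[dict[str, str]] = field(default_factory=list)

    def add_module(self, aim: AIModule) -> None:
        # Adding or replacing a module at run time stands in for dynamic configuration.
        self.modules[aim.name] = aim

    def connect(self, src: str, out_port: str, dst: str, in_port: str) -> None:
        # Only ports carrying the same Data Type may be wired together.
        out_type = self.modules[src].outputs[out_port]
        in_type = self.modules[dst].inputs[in_port]
        if out_type != in_type:
            raise TypeError(f"{src}.{out_port} ({out_type}) cannot feed {dst}.{in_port} ({in_type})")
        self.connections.append({"from": f"{src}.{out_port}", "to": f"{dst}.{in_port}"})

    def to_json(self) -> str:
        # Serialise the whole topology; asdict() recurses into the nested AIModule objects.
        return json.dumps(asdict(self), indent=2)


wf = AIWorkflow("SpeechTranslation")
wf.add_module(AIModule("SpeechRecognition", {"Speech": "SpeechObject"}, {"Text": "TextObject"}))
wf.add_module(AIModule("TextTranslation", {"Text": "TextObject"}, {"Text": "TextObject"}))
wf.connect("SpeechRecognition", "Text", "TextTranslation", "Text")
print(wf.to_json())
```

Rejecting a mismatched connection at configuration time is one way a shared descriptor language pays off: incompatible modules are caught before anything runs.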

Having laid the technical foundations, we started on the buildings. One was designed to host the highly representative area of human-machine conversation, extending beyond the “word” to cover other, sometimes ethereal but information-carrying, sensations and feelings. The Multimodal Conversation (MPAI-MMC) standard is the first attempt at digitally representing this ethereal information, with the Personal Status data type, and Human and Machine Communication (MPAI-HMC) is an excellent example of its application.

Another stream of investigation since the early MPAI days is audio, which came to the MPAI table as “Context-based Audio Enhancement” and led to the Context-based Audio Enhancement – Use Cases (MPAI-CAE) standard. Finally, with Compression and Understanding of Industrial Data (MPAI-CUI), MPAI demonstrated that data from hitherto unexplored domains such as finance could benefit from standards.

Just one year after its establishment, MPAI could claim success by publishing its first three standards (MPAI-CUI, MPAI-GME, and MPAI-MMC) and, by the end of 2021, another two (MPAI-AIF and MPAI-CAE).

Since its early days, MPAI was convinced that standards should have as much visibility as possible. For this reason, it established a successful cooperation with the Standards Association (SA) of the Institute of Electrical and Electronics Engineers (IEEE). Starting with three standards in 2022, nine MPAI standards have now been adopted by IEEE without modification, and three more are in the pipeline.

The creation of MPAI Development Committees and Working Groups, and their activity, continued unrelentingly. The use of watermarking, and then fingerprinting, to trace the use of neural networks led to the development of Neural Network Watermarking – Traceability (NNW-NNT). Work on Connected Autonomous Vehicles started in late 2020 and is now a standard: Connected Autonomous Vehicle – Technologies (CAV-TEC). MPAI was probably the first standards development organisation to initiate activities leading to a metaverse standard, and it can now claim to have a solid candidate to lead the move to interoperable metaverses: MPAI Metaverse Model – Technologies (MMM-TEC). MPAI has also worked on online gaming since its early days, producing the Server-based Predictive multiplayer Gaming – Mitigation of Data Loss Effects (SPG-MDL) standard, in which a set of AI Modules predicts the game state of an online multiplayer game.

MPAI abhors the attitude of other standards bodies that develop unnecessarily “siloed” standards, in which technologies are treated exclusively from the point of view of that standard’s domain, without considering similar technologies in other domains. Object and Scene Description (MPAI-OSD) and Portable Avatar Format (MPAI-PAF) do specify AI Workflows specific to their domains, but their AI Modules and Data Types were specified for wide reuse in many other MPAI standards. This attitude is not confined to these two standards: the same can be said of MPAI-CAE and MPAI-MMC.

Atypical, but no less important, are AI Module Profiles (MPAI-PRF), which establishes a machine-readable description to identify AI Module Profiles, and Data Types, Formats, and Attributes (MPAI-TFA), which provides a standard way to add information about data for processing by a machine.

Last comes a standard that embodies what was probably MPAI’s very first activity: AI for video. AI-Enhanced Video Coding – Up-sampling Filter for Video applications (EVC-UFV) offers an AI-based super-resolution filter vastly superior to currently used filters.

Five years ago, MPAI was very bold in targeting standards for AI, then just a nice technology to talk about. Five years on, AI is everywhere and much talked about. What will the future hold for MPAI?

Some answers are clear:

- With its impressive portfolio of 15 standards, MPAI will have much maintenance and enhancement work to do.

- Two new standards are being developed and should be completed shortly: AI for Health – Health Secure Platform (MPAI-AIH) and XR Venues – Live Theatrical Performance (MPAI-XRV).

- A Call for Technologies on Neural Network Watermarking – Technologies is open, and responses are expected.

- A new Call for Technologies on Pursuing Goals in the metaverse is being prepared. This will require the development of a significant number of “behaviours” on top of a “baseline” Small Language Model.

- Development of reference implementations to enhance the value and attractiveness of existing standards.

While AI continues to develop at lightning speed, MPAI will keep watching for and identifying standardisation opportunities that AI enables in different data coding domains.

Long live MPAI!

The MPAI Ecosystem

MPAI-60 has approved Version 2.0 of Technical Specification: Governance of the MPAI Ecosystem (MPAI-GME). It is time to take a look at this new version.

Before talking about ecosystems, let’s talk about standards. What is a standard? Borrowing Jean-Jacques Rousseau’s grand notion of the Social Contract, we could say that a standard creates a “society” through a “mutual agreement” to “operate” under a common “set of rules” for the “benefit of all”. Granted, the rules set by a standard are far less all-inclusive than those of a real social contract, and the operation they govern is a minimal part of what a real social contract governs. Still, a standard sets rules that all members of the society it creates (the standard setter, the implementers-aggregators-distributors, and the end users) must adhere to if the standard is to provide “benefits for all”.

This is why this newsletter has, in the past, called standards “divine”: they can make a chaotic “society” orderly.

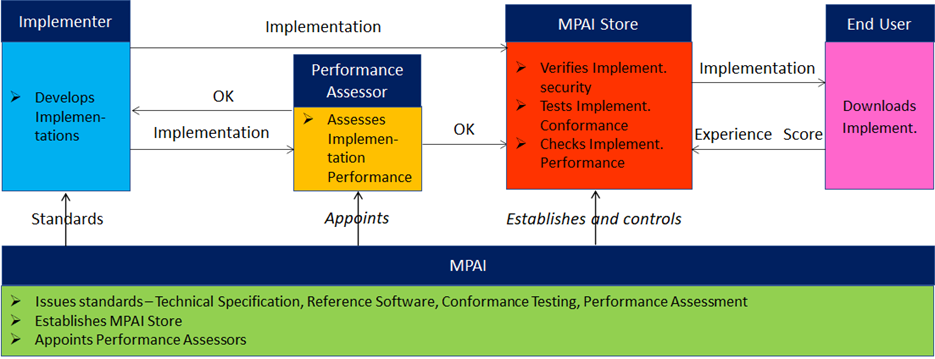

The MPAI Ecosystem is the “society” created by the set of standards published by MPAI. All societies need a set of rules to operate and Governance of the MPAI Ecosystem V2.0 is the constitution that will govern the MPAI society for the next few years. Let’s look inside.

MPAI is the root of trust of the MPAI Ecosystem and has the following authority:

- Defines the rules of Governance.

- Develops the four components of an MPAI Standard (Technical, Reference Software, Conformance Testing, and Performance Assessment specifications).

- Assigns the Ecosystem activities envisaged for the MPAI Store to a specific company.

- Appoints Performance Assessors.

MPAI Store has the following roles and duties:

- Acts as the Registration Authority of Implementer IDs.

- Establishes distribution agreements with Implementers.

- Tests the conformance of implementations.

- May receive and publish notifications from Performance Assessors.

- Posts software Implementations for download.

- Manages a reputation system publishing reports of user experiences.

Implementers

- Obtain an Implementer ID from the MPAI Store.

- Make implementations.

- Submit implementations to the MPAI Store.

- May submit Implementations to Performance Assessors.

Performance Assessors

- Are appointed for a particular domain and Performance category.

- Assess the Performance of Implementations.

End Users

- Download AI Workflows or AI Modules.

- May provide feedback to the MPAI Store Reputation system.

The Figure above graphically represents the Ecosystem and the operation of its Actors.
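The division of roles listed above can be sketched as a small Python model. It is a toy built on stated assumptions, not the MPAI-GME procedure: the `MPAIStore` and `Implementation` classes, the `IMP-0001` ID format, the pluggable conformance-test callable, and the mean-rating reputation score are all hypothetical.

```python
import itertools
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Implementation:
    implementer_id: str
    name: str
    conformant: Optional[bool] = None                           # set by the MPAI Store
    performance: dict[str, str] = field(default_factory=dict)   # category -> assessor notice


class MPAIStore:
    """Hypothetical model of the MPAI Store roles listed above."""

    def __init__(self) -> None:
        self._counter = itertools.count(1)
        self.implementers: set[str] = set()
        self.catalogue: dict[str, Implementation] = {}
        self.ratings: dict[str, list[int]] = {}

    def register_implementer(self) -> str:
        # Registration Authority: hand out a fresh Implementer ID (the format is made up).
        iid = f"IMP-{next(self._counter):04d}"
        self.implementers.add(iid)
        return iid

    def submit(self, impl: Implementation, conformance_test: Callable[[Implementation], bool]) -> bool:
        # Only registered Implementers may submit; only conformant implementations are posted.
        if impl.implementer_id not in self.implementers:
            raise PermissionError(f"unknown Implementer ID {impl.implementer_id}")
        impl.conformant = conformance_test(impl)
        if impl.conformant:
            self.catalogue[impl.name] = impl
        return impl.conformant

    def publish_assessment(self, name: str, category: str, notice: str) -> None:
        # Publish a notification received from a Performance Assessor.
        self.catalogue[name].performance[category] = notice

    def download(self, name: str) -> Implementation:
        return self.catalogue[name]

    def add_feedback(self, name: str, rating: int) -> None:
        # End User feedback feeding the reputation system.
        self.ratings.setdefault(name, []).append(rating)

    def reputation(self, name: str) -> Optional[float]:
        # Simple mean rating; None when no End User has reported yet.
        scores = self.ratings.get(name, [])
        return sum(scores) / len(scores) if scores else None


store = MPAIStore()
iid = store.register_implementer()
impl = Implementation(iid, "AudioRecordingPreservation-demo")
accepted = store.submit(impl, conformance_test=lambda i: True)  # stand-in for real conformance testing
store.publish_assessment(impl.name, "Reliability", "passed")
store.add_feedback(impl.name, 4)
store.add_feedback(impl.name, 5)
print(iid, accepted, store.reputation(impl.name))
```

Passing the conformance test in as a callable keeps the sketch neutral about how testing is actually done; only the order of the steps (register, submit, test, post, assess, download, rate) mirrors the roles above.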

New versions of MPAI standards

The development of new standards and the extension of approved ones require that some existing MPAI standards be integrated with new functionalities. In recent months there has been intense activity in this area.

Standards approved for publication in final form:

| Acronym | Title | Ver. | pptx | video |
|---|---|---|---|---|
| MPAI-GME | Governance of the MPAI Ecosystem | 2.0 | ppt | YT |
| MMM-TEC | MPAI Metaverse Model – Technologies | 2.1 | ppt | YT |
| MPAI-OSD | Object and Scene Description | 1.4 | ppt | YT |
| NNW-NNT | Neural Network Watermarking – Traceability | 1.1 | ppt | YT |
| MPAI-PAF | Portable Avatar Format | 1.5 | ppt | YT |
| MPAI-TFA | Data Types, Formats, and Attributes | 1.4 | ppt | YT |

Standards approved for publication with a request for Community Comments:

| Acronym | Title | Ver. | pptx | video |
|---|---|---|---|---|
| EVC-UFV | AI-based Enhanced Video Coding – Up-sampling Filter for Video Applications | 1.0 | | YT |
| MPAI-HMC | Human and Machine Communication | 2.1 | ppt | YT |
| MPAI-MMC | Multimodal Conversation | 2.4 | ppt | YT |

Published standards being revised or extended

| Acronym | Title | Ver. |
|---|---|---|
| MPAI-AIF | AI Framework | 1.3 |
| CAE-USC | Context-based Audio Enhancement – Use Cases | 2.4 |
| CAV-TEC | Connected Autonomous Vehicle – Technologies | 1.1 |
| CUI-CPP | Compression and Understanding of Industrial Data – Company Performance Prediction | 2.0 |

Standards under development

| Acronym | Title | Ver. |
|---|---|---|
| MPAI-AIH | AI for Health – Health Secure Platform | 1.0 |
| MPAI-XRV | XR Venues – Live Theatrical Performance | 1.0 |

New developments in audio

Audio was one of the first activities started by MPAI and the subject of one of its first standards, approved in 2021. Technical Specification: Context-based Audio Enhancement (MPAI-CAE) – Use Cases (CAE-USC) specifies four AI Workflows with their AI Modules and Data Types: Audio Recording Preservation specifies a procedure, implementable as an AI Workflow executed in the AI Framework, to preserve open-reel audio tapes; Enhanced Audioconference Experience specifies an AIW to extract audio objects from a scene; Emotion-Enhanced Speech specifies AIWs to add emotion to emotionless speech; and Speech Restoration System specifies a process to restore missing speech segments.

Early in 2025, MPAI published a Call for Technologies for Six Degrees of Freedom Audio and is now developing two standards: Six Degrees of Freedom (CAE-6DF) and Audio Object Rendering (CAE-AOR).

The ongoing work on the CAE-6DF standard aims to provide navigable immersive audio when a multi-perspective description of the sound field, in the form of higher-order Ambisonics, is available or can be extracted.

An example use case is navigable drama, where the user can experience the same scene not only from a fixed perspective but also by navigating it, hearing both the sources and the acoustics of the stage where the recording was made, and listening in at will to conversations between different groups of actors. Another example use case is a concert that a user can listen to from different positions, changing perspective at will via locomotion. In other words, CAE-6DF brings the affordance of locomotion to the presented sound scene, including an accurate rendition of the acoustics of the venue in which the scene was recorded.

The CAE-6DF standard defines an encoder and a decoder; the processing requirements of the decoder are intentionally kept low so that it can also serve computationally constrained devices. The CAE-6DF encoder separates the sound field captured at multiple points into 1) a parametric representation, i.e., a linear combination of plane waves, and 2) a diffuse component that represents the residual field. The parametric representation contains the directional cues, which can be efficiently interpolated with respect to the user’s position at the decoder. The diffuse component can be further compressed with a lossy or lossless method to reduce the data size. The CAE-6DF decoder contains the mechanisms needed to interpolate the sound field based on the user’s location within the navigable volume and to respond to their head orientation, providing interactivity. The decoder is terminal-agnostic: it can produce both headphone-based (i.e., binaural) and loudspeaker-based (i.e., AllRAD-decoded Ambisonics) outputs using third-party renderers.
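The split into plane waves plus a residual can be illustrated with a minimal NumPy sketch for a single frequency bin. It assumes the plane-wave propagation directions are already known, fits their complex amplitudes by least squares across the capture points, and treats whatever the fit cannot explain as the residual (here just small random noise standing in for a diffuse field). The assumed model is `p(r) = Σ_l a_l·exp(i k u_l·r) + d(r)`, with wavenumber `k = 2πf/c`, unit propagation vectors `u_l`, and an `exp(-iωt)` time convention. It also hints at why a parametric part suits a lightweight, position-aware decoder: evaluating it at a new listener position costs only one phase shift per plane wave. This is not the CAE-6DF encoder; the function names, array geometry, amplitudes and fitting method are all assumptions.

```python
import numpy as np

C = 343.0                    # speed of sound in air, m/s
FREQ = 1000.0                # analysis frequency of this single bin, Hz
K = 2 * np.pi * FREQ / C     # wavenumber k = 2*pi*f/c, rad/m


def steering(positions: np.ndarray, directions: np.ndarray) -> np.ndarray:
    """Plane-wave matrix with entry [m, l] = exp(i k u_l . r_m)."""
    return np.exp(1j * K * (positions @ directions.T))


def decompose(pressure, positions, directions):
    """Least-squares plane-wave amplitudes and the residual the fit cannot explain."""
    A = steering(positions, directions)
    amps, *_ = np.linalg.lstsq(A, pressure, rcond=None)
    return amps, pressure - A @ amps


def render_at(position, amps, directions):
    """Directional (plane-wave) part of the field at a new listener position."""
    return (steering(position[None, :], directions) @ amps)[0]


rng = np.random.default_rng(0)
mics = rng.uniform(-0.25, 0.25, size=(32, 3))        # 32 capture points in a 0.5 m cube
dirs = np.eye(3)                                     # assumed, already-known propagation directions
a_true = np.array([1.0, 0.5j, -0.3 + 0.2j])          # made-up plane-wave amplitudes
noise = 1e-3 * (rng.standard_normal(32) + 1j * rng.standard_normal(32))
p = steering(mics, dirs) @ a_true + noise            # captured pressure; noise stands in for the diffuse part

a_hat, residual = decompose(p, mics, dirs)
listener = np.array([0.10, -0.05, 0.0])              # a position between the capture points
print(np.round(a_hat, 3))
print(abs(render_at(listener, a_hat, dirs) - render_at(listener, a_true, dirs)))
```

A real encoder would also have to estimate the directions, work across many frequency bins and capture positions, and model a genuinely diffuse residual; the sketch only shows the algebra of "plane waves plus leftover".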

While CAE-6DF does not incorporate any visual component per se, the standard also describes mechanisms for spatio-temporally aligning multiple 360° videos or volumetric content.

In CAE-AOR, the device receives Audio Scene Descriptors and User Commands, with which a User can modify the Audio Scene Descriptors before the Audio Scene is rendered.
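The CAE-AOR flow (descriptors in, User Commands applied, then rendering) can be sketched in a few lines of Python. Everything here is hypothetical: the `AudioObject` fields, the `(op, name, value)` command format, the azimuth convention, and the toy renderer, which simply computes constant-power stereo panning gains rather than anything CAE-AOR specifies.

```python
import math
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class AudioObject:
    """Hypothetical Audio Scene Descriptor entry for one audio object."""
    name: str
    azimuth_deg: float   # assumed convention: 0 = front, +90 = hard left, -90 = hard right
    gain: float = 1.0


def apply_command(scene: dict[str, AudioObject], command: tuple) -> dict[str, AudioObject]:
    """Return a new scene with one hypothetical (op, name, value) User Command applied."""
    op, name, value = command
    obj = scene[name]
    if op == "move":
        new = replace(obj, azimuth_deg=value)
    elif op == "gain":
        new = replace(obj, gain=value)
    elif op == "mute":
        new = replace(obj, gain=0.0)
    else:
        raise ValueError(f"unknown command {op!r}")
    return {**scene, name: new}


def render_gains(scene: dict[str, AudioObject]) -> dict[str, tuple[float, float]]:
    """Toy renderer: constant-power stereo panning, (left, right) gain per object."""
    gains = {}
    for obj in scene.values():
        az = max(-90.0, min(90.0, obj.azimuth_deg))
        theta = (90.0 - az) / 180.0 * (math.pi / 2)   # 0 at hard left, pi/2 at hard right
        gains[obj.name] = (obj.gain * math.cos(theta), obj.gain * math.sin(theta))
    return gains


initial = {"violin": AudioObject("violin", 30.0), "voice": AudioObject("voice", 0.0)}
scene = initial
for cmd in [("move", "violin", -60.0), ("mute", "voice", None)]:
    scene = apply_command(scene, cmd)
print(render_gains(scene))
```

Keeping descriptors immutable and returning a new scene per command leaves the received Audio Scene Descriptors untouched, so user edits could be undone; this is a design choice of the sketch, not something the text says about CAE-AOR.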

Meetings in the October 2025 cycle