
Index

5.1 Functions of Subsystem

5.2 Reference Architecture of Subsystem

5.3 Input/Output Data of Subsystem

5.4 Functions of AI Modules

5.5 Input/Output Data of AI Modules

5.1 Functions of Subsystem

The Environment Sensing Subsystem (ESS):

- Uses all Subsystem devices to acquire as much information as possible from the Environment in the form of electromagnetic and acoustic data.

- Receives Spatial Attitude information and Environment data (e.g., temperature, pressure, humidity) from the Motion Actuation Subsystem.

- Produces a sequence of Basic Environment Representations (BER) throughout the travel.

- Passes the Basic Environment Representations to the Autonomous Motion Subsystem.

5.2 Reference Architecture of Subsystem

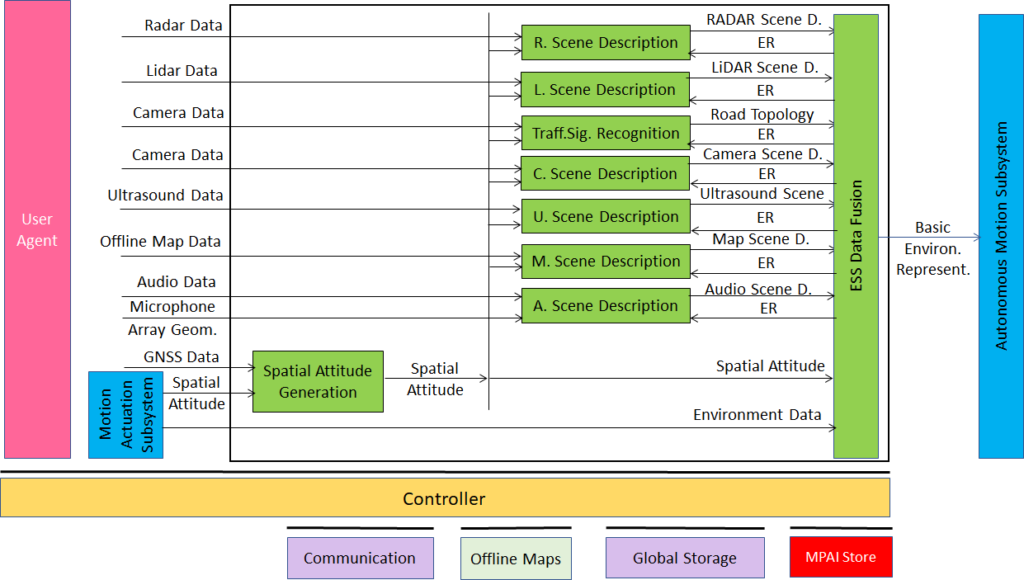

Figure 4 gives the Environment Sensing Subsystem Reference Model.

Figure 4 – Environment Sensing Subsystem Reference Model

The typical sequence of operations of the Environment Sensing Subsystem AIW is:

- Spatial Attitude Generation computes the CAV’s Spatial Attitude using the Spatial Attitude information provided by the Motion Actuation Subsystem and the GNSS.

- Environment Sensing Technologies (EST), e.g., RADAR, LiDAR, and cameras, produce EST-specific data streams.

- EST-specific Scene Description AIMs, e.g., RADAR Scene Description and LiDAR Scene Description:

  - Receive EST-specific data streams.

  - Access the Basic Environment Representation of a previous time interval.

  - Produce EST-specific Scene Descriptors.

- ESS Data Fusion combines the different EST-specific Scene Descriptors into the time-dependent Basic Environment Representation, which includes Alert information.

Figure 4 assumes that Traffic Signalisation Recognition produces the Road Topology by analysing Camera Data. The model of Figure 4 can easily be extended to the case where Data from other ESTs is processed to compute, or help compute, the Road Topology.
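The sequence of operations listed above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class `BasicEnvironmentRepresentation`, the functions `describe_scene` and `ess_step`, all field names, and the rule used to raise an Alert are hypothetical and are not defined by this document. The Spatial Attitude is passed in as if Spatial Attitude Generation had already computed it.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional


@dataclass
class BasicEnvironmentRepresentation:
    """Hypothetical container for one BER; field names are illustrative only."""
    time_index: int
    spatial_attitude: Dict[str, float]
    scene_descriptors: Dict[str, Any] = field(default_factory=dict)
    alert: Optional[str] = None


def describe_scene(est: str, data: Any,
                   previous_ber: Optional[BasicEnvironmentRepresentation]) -> Dict[str, Any]:
    """Stand-in for an EST-specific Scene Description AIM.

    It receives the EST data stream and may consult the previous BER.
    """
    return {
        "est": est,
        "data": data,
        "previous_time_index": previous_ber.time_index if previous_ber else None,
    }


def ess_step(time_index: int, spatial_attitude: Dict[str, float],
             est_streams: Dict[str, Any],
             previous_ber: Optional[BasicEnvironmentRepresentation] = None
             ) -> BasicEnvironmentRepresentation:
    """One pass of the sequence: per-EST scene description, then data fusion."""
    descriptors = {est: describe_scene(est, data, previous_ber)
                   for est, data in est_streams.items()}
    # Placeholder Alert rule (an assumption): the first stream flagged "critical".
    alert = next((est for est, data in est_streams.items()
                  if isinstance(data, dict) and data.get("critical")), None)
    return BasicEnvironmentRepresentation(time_index, spatial_attitude, descriptors, alert)


ber0 = ess_step(0, {"x": 0.0, "y": 0.0}, {"RADAR": {"range_m": 42.0}})
ber1 = ess_step(1, {"x": 1.0, "y": 0.0},
                {"RADAR": {"range_m": 3.0, "critical": True},
                 "LiDAR": {"points": 1024}},
                previous_ber=ber0)
```

Passing `ber0` into the second call mirrors the step in which each Scene Description AIM accesses the Basic Environment Representation of a previous time interval.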

5.3 Input/Output Data of Subsystem

The currently considered Environment Sensing Technologies (EST) are:

- Global navigation satellite system or GNSS (~1 & 1.5 GHz Radio).

- Geographical position and orientation, and their time derivatives up to 2nd order (Spatial Attitude).

- Visual Data in the visible range, possibly supplemented by depth information (400 to 800 THz).

- LiDAR Data (~200 THz infrared).

- RADAR Data (~25 & 75 GHz).

- Ultrasound Data (> 20 kHz).

- Audio Data in the audible range (16 Hz to 16 kHz).

- Spatial Attitude (from the Motion Actuation Subsystem).

- Other environmental data (temperature, humidity, …).
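To relate the electromagnetic frequencies in the list above to wavelengths, the free-space relation λ = c / f can be applied. The short sketch below does so for a few nominal values; the function name `wavelength_m` and the dictionary keys are illustrative choices, not terms from this document. The relation does not apply to the Ultrasound and Audio entries, which are acoustic.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact, by SI definition


def wavelength_m(frequency_hz: float) -> float:
    """Free-space wavelength of an electromagnetic wave: lambda = c / f."""
    return SPEED_OF_LIGHT_M_S / frequency_hz


# Nominal frequencies taken from the list above.
est_frequencies_hz = {
    "GNSS (1.5 GHz)": 1.5e9,
    "RADAR (75 GHz)": 75e9,
    "LiDAR (200 THz)": 200e12,
    "Visual, low end (400 THz)": 400e12,
}

for name, frequency in est_frequencies_hz.items():
    print(f"{name}: {wavelength_m(frequency):.3e} m")
```

The results are roughly 20 cm for GNSS, 4 mm for 75 GHz RADAR, 1.5 µm for LiDAR, and 0.75 µm at the low-frequency end of the visible range.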

Table 6 gives the input/output data of the Environment Sensing Subsystem.

Table 6 – I/O data of Environment Sensing Subsystem

| Input data | From | Comment |
| --- | --- | --- |
| Spatial Attitude | Motion Actuation Subsystem | To be fused with GNSS data |
| Other Environment Data | Motion Actuation Subsystem | Temperature etc. to be added to Basic Environment Representation |
| Global Navigation Satellite System (GNSS) | ~1 & 1.5 GHz Radio | Get Pose from GNSS |
| RADAR | ~25 & 75 GHz Radio | Capture Environment with RADAR |
| LiDAR | ~200 THz infrared | Capture Environment with LiDAR |
| Ultrasound | Audio (>20 kHz) | Capture Environment with Ultrasound |
| Cameras (2D and 3D) | Video (400-800 THz) | Capture Environment with Cameras |
| Microphones | Audio (16 Hz-16 kHz) | Capture Environment with Microphones |

| Output data | To | Comment |
| --- | --- | --- |
| Alert | Autonomous Motion Subsystem | Critical last-minute Environment Description from EST |
| Basic Environment Representation | Autonomous Motion Subsystem | Locate CAV in the Environment |

5.4 Functions of AI Modules

Table 7 gives the functionality of all Environment Sensing Subsystem AIMs.

Table 7 – AI Modules of the Environment Sensing Subsystem

| AIM | Function |

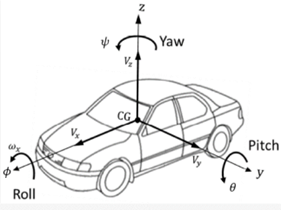

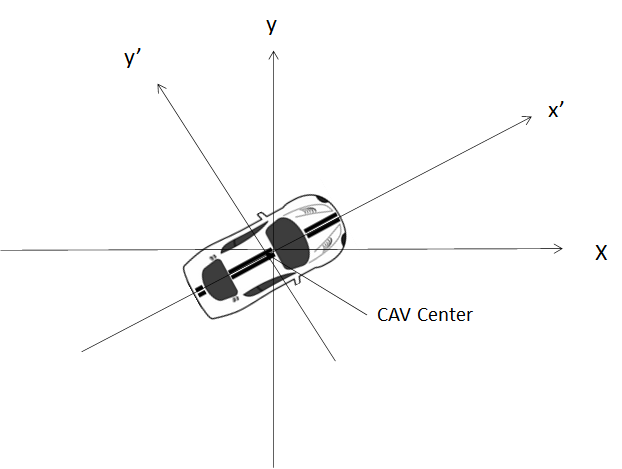

| Spatial Attitude Generation | Computes the CAV Spatial Attitude using information received from GNSS and Motion Actuation Subsystem with respect to a predetermined point in the CAV defined as the origin (0,0,0) of a set of (x,y,z) Cartesian coordinates with respect to the local coordinates. |

| RADAR Scene Description | Produces RADAR Scene Descriptors from RADAR Data |

| LiDAR Scene Description | Produces LiDAR Scene Descriptors from LiDAR Data |

| Camera Scene Description | Produces Camera Scene Descriptors from Camera Data |

| Traffic Signalisation Recognition | Produces Road Topology of the Environment from Camera and LiDAR Data. |

| Ultrasound Scene Description | Produces Ultrasound Scene Descriptors from Ultrasound Data. |

| Audio Scene Description | Produces Audio Scene Descriptors from Audio Data. |

| Online Map Scene Description | Produces Online Map Data Scene Descriptors from Online Map Data. |

| Environment Sensing Subsystem Data Fusion | Selects critical Environment Representation as Alert; produces CAV’s Basic Environment Representation by fusing the Scene Descriptors of the different ESTs,

The Basic Environment Representation (BER) includes all available information from ESS and MAS that enables the CAV to define a Path in the Decision Horizon Time. The BER results from the integration of:

|

|

|

| Figure 5 – Roll, Pitch, and Yaw in a vehicle [10] | Figure 6 – Spatial Attitude in a CAV |
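Section 5.3 describes Spatial Attitude as geographical position and orientation together with their time derivatives up to 2nd order. One possible in-memory layout is sketched below. The class `SpatialAttitude`, its field names, the (roll, pitch, yaw) ordering, and the helper `finite_difference` are assumptions made for illustration; this document does not prescribe a data format here.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class SpatialAttitude:
    """Hypothetical record: position and orientation plus 1st and 2nd time derivatives."""
    position: Vec3              # (x, y, z) relative to the CAV origin (0,0,0)
    velocity: Vec3              # 1st derivative of position
    acceleration: Vec3          # 2nd derivative of position
    orientation: Vec3           # (roll, pitch, yaw)
    angular_velocity: Vec3      # 1st derivative of orientation
    angular_acceleration: Vec3  # 2nd derivative of orientation


def finite_difference(prev: Vec3, curr: Vec3, dt: float) -> Vec3:
    """First-order estimate of a time derivative from two samples dt seconds apart."""
    return tuple((c - p) / dt for p, c in zip(prev, curr))


zero: Vec3 = (0.0, 0.0, 0.0)
velocity = finite_difference((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), dt=0.5)
attitude = SpatialAttitude(position=(1.0, 0.0, 0.0), velocity=velocity,
                           acceleration=zero, orientation=zero,
                           angular_velocity=zero, angular_acceleration=zero)
```

The finite difference is applied to position only in this example; applying it to angles would additionally require handling wrap-around.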

5.5 Input/Output Data of AI Modules

For each AIM (1st column), Table 8 gives the input (2nd column) and the output data (3rd column). The following 3-digit subsections give the requirements of the data formats in columns 2 and 3.

Table 8 – Environment Sensing Subsystem AIMs and Data

| AIM | Input | Output |
| --- | --- | --- |
| Spatial Attitude Generation | GNSS Data; Spatial Attitude from MAS | Spatial Attitude |
| RADAR Scene Description | RADAR Data; Basic Environment Representation | RADAR Scene Descriptors |
| LiDAR Scene Description | LiDAR Data; Basic Environment Representation | LiDAR Scene Descriptors |
| Traffic Signalisation Recognition | Camera Data; Basic Environment Representation | Road Topology |
| Camera Scene Description | Camera Data; Basic Environment Representation | Camera Scene Descriptors |
| Ultrasound Scene Description | Ultrasound Data; Basic Environment Representation | Ultrasound Scene Descriptors |
| Audio Scene Description | Audio Data; Basic Environment Representation | Audio Scene Descriptors |
| Environment Sensing Subsystem Data Fusion | RADAR Scene Descriptors; LiDAR Scene Descriptors; Road Topology; Camera Scene Descriptors; Ultrasound Scene Descriptors; Audio Scene Descriptors; Map Scene Descriptors; Spatial Attitude; Other Environment Data | Basic Environment Representation; Alert |
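The dataflow of Table 8 can also be written down as a small lookup structure, which makes it easy to check which Data Fusion inputs are produced by another AIM in the table. The sketch below is illustrative only: the names `AIMS`, `FUSION`, `producers`, and `external_inputs` are hypothetical, and the Camera Scene Description output is read as Camera Scene Descriptors, as stated in Table 7.

```python
# AIM name -> (input data names, output data names), transcribed from Table 8.
BER = "Basic Environment Representation"
AIMS = {
    "Spatial Attitude Generation": (
        {"GNSS Data", "Spatial Attitude from MAS"}, {"Spatial Attitude"}),
    "RADAR Scene Description": ({"RADAR Data", BER}, {"RADAR Scene Descriptors"}),
    "LiDAR Scene Description": ({"LiDAR Data", BER}, {"LiDAR Scene Descriptors"}),
    "Traffic Signalisation Recognition": ({"Camera Data", BER}, {"Road Topology"}),
    "Camera Scene Description": ({"Camera Data", BER}, {"Camera Scene Descriptors"}),
    "Ultrasound Scene Description": (
        {"Ultrasound Data", BER}, {"Ultrasound Scene Descriptors"}),
    "Audio Scene Description": ({"Audio Data", BER}, {"Audio Scene Descriptors"}),
    "Environment Sensing Subsystem Data Fusion": (
        {"RADAR Scene Descriptors", "LiDAR Scene Descriptors", "Road Topology",
         "Camera Scene Descriptors", "Ultrasound Scene Descriptors",
         "Audio Scene Descriptors", "Map Scene Descriptors",
         "Spatial Attitude", "Other Environment Data"},
        {BER, "Alert"}),
}
FUSION = "Environment Sensing Subsystem Data Fusion"


def producers(data_name: str) -> list:
    """AIMs in the table that list data_name among their outputs."""
    return sorted(aim for aim, (_, outputs) in AIMS.items() if data_name in outputs)


def external_inputs(aim: str) -> list:
    """Inputs of an AIM that no AIM in the table produces."""
    inputs, _ = AIMS[aim]
    return sorted(name for name in inputs if not producers(name))


print(external_inputs(FUSION))
```

Under this transcription, the only Data Fusion inputs without a producer in Table 8 are Map Scene Descriptors and Other Environment Data. Table 6 lists Other Environment Data as coming from the Motion Actuation Subsystem, and Table 7 lists an Online Map Scene Description AIM that has no row in Table 8.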
