| 1 Functions | 2 Reference Model | 3 I/O Data |

| 4 Functions of AI Modules | 5 I/O Data of AI Modules | 6 AIW, AIMs and JSON Metadata |

1 Functions

The MPAI Connected Autonomous Vehicle (CAV) – Architecture specifies the Reference Model of a vehicle, called Connected Autonomous Vehicle (CAV), that is able to reach a destination by understanding the environment using its own sensors, exchanging information with other CAVs, and actuating motion (see here for an introduction to MPAI-CAV and here for the full specification). The Human-CAV Interaction (HCI) Subsystem recognises the human owner or renter, responds to humans’ commands and queries, converses with humans during the travel, converses with the Autonomous Motion Subsystem (AMS) in response to humans’ requests, and communicates with HCIs on board other CAVs.

2 Reference Model

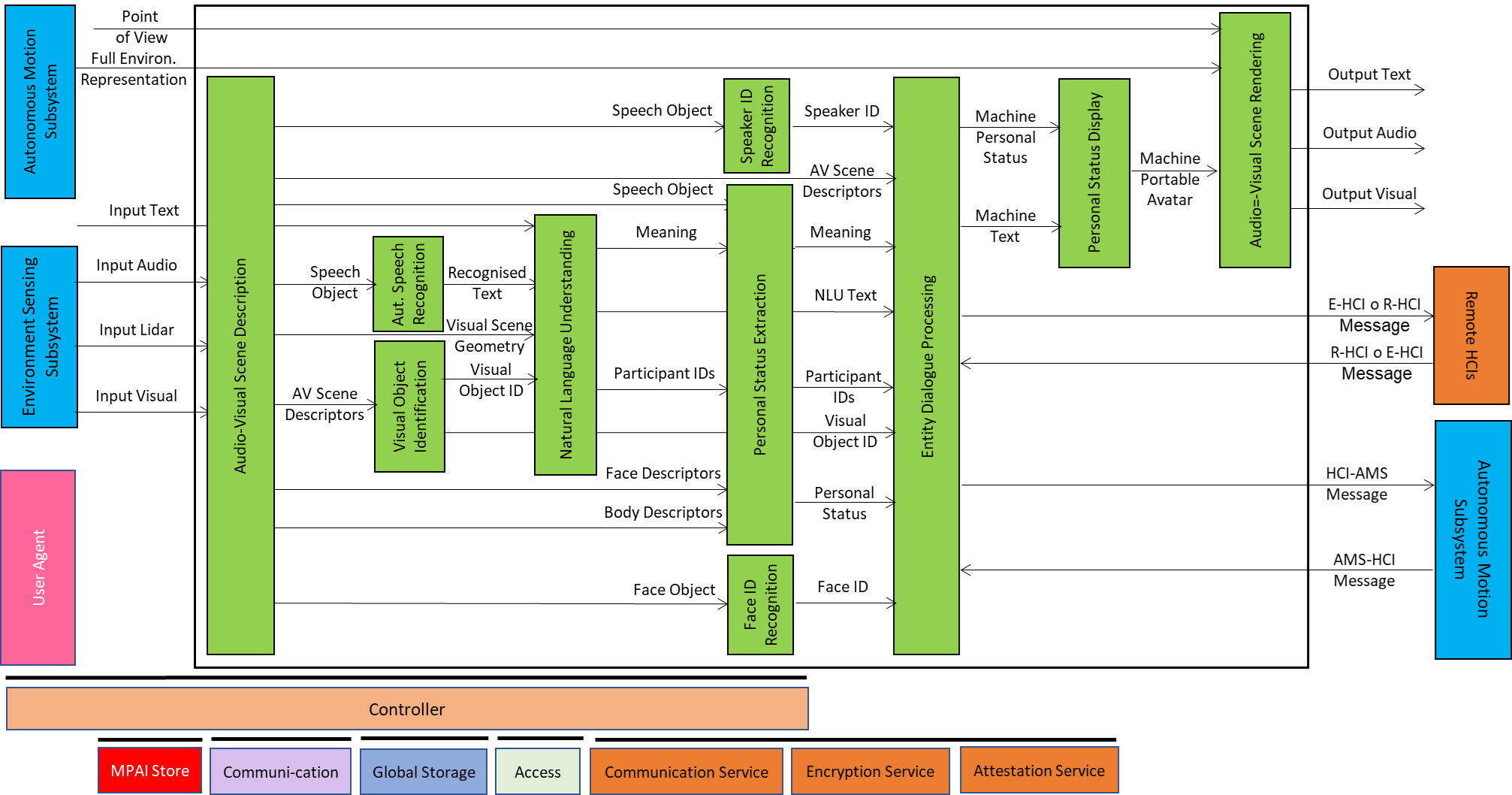

Figure 1 represents the Human-CAV Interaction (HCI) Reference Model. This includes data such as Audio and Visual, Inter-HCI Information, HCI-AMS Message, and AMS-HCI Message that are not part of the specification but are used to define the full scope of the Human-CAV Interaction Subsystem.

Figure 1 – Human-CAV Interaction Reference Model

When a group of humans approaches the CAV from outside or is sitting inside the CAV:

- Audio-Visual Scene Description produces Audio Scene Descriptors in the form of Audio (Speech) Objects corresponding to each speaking human in the Environment (outside or inside the CAV) and Visual Scene Descriptors in the form of Descriptors of Faces and Bodies. Note that all non-Speech Objects are removed from the Audio Scene.

- Automatic Speech Recognition recognises the speech of each human and produces Recognised Text, supporting multiple Speech Objects as input. Each Speech Object has an identifier to enable Speaker Identity Recognition to provide labelled Recognised Texts.

- Visual Object Identification produces Identifiers of Visual Objects indicated by a human.

- Natural Language Understanding extracts Meaning and produces Refined Text from the Recognised Text of each Input Speech potentially using spatial information of Visual Object Identifiers.

- Speaker Identity Recognition and Face Identity Recognition authenticate the humans that the HCI is interacting with. If the Face Identity Recognition AIM provides Face IDs corresponding to the Speaker IDs, the Entity Dialogue Processing AIM can correctly associate the Speaker IDs (and the corresponding Recognised Texts) with the Face IDs.

- Personal Status Extraction extracts the Personal Status of the humans.

- Personal Status Display produces the ready-to-render Machine Portable Avatar [3] conveying Machine Speech and Machine Personal Status.

- Audio-Visual Scene Rendering visualises either information from the Machine Portable Avatar or the AMS’s Full Environment Representation based on the Point of View provided by a human.
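The association of Recognised Texts, Speaker IDs and Face IDs described in the list above can be sketched as a simple join. This is a minimal illustration and not part of the specification: it assumes that the outputs of the three AIMs are keyed by a shared participant identifier, and the names `LabelledUtterance` and `associate` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class LabelledUtterance:
    """One Recognised Text with the identities attached to it (hypothetical type)."""
    participant_id: str
    speaker_id: Optional[str]
    face_id: Optional[str]
    recognised_text: str


def associate(
    recognised_texts: Dict[str, str],
    speaker_ids: Dict[str, str],
    face_ids: Dict[str, str],
) -> List[LabelledUtterance]:
    """Join the per-participant outputs of Automatic Speech Recognition,
    Speaker Identity Recognition and Face Identity Recognition.

    Assumption: all three dictionaries use the same participant identifier
    as key. A missing Speaker ID or Face ID is reported as None.
    """
    return [
        LabelledUtterance(pid, speaker_ids.get(pid), face_ids.get(pid), text)
        for pid, text in recognised_texts.items()
    ]


utterances = associate(
    {"p1": "take me home", "p2": "open the window"},
    {"p1": "spk-A", "p2": "spk-B"},
    {"p1": "face-A"},  # no Face ID is available for p2
)
```

In this sketch an utterance without a matching Face ID is kept rather than dropped, so that a downstream module can still use the Speaker ID alone.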

The HCI interacts with the AMS to request a Route to a destination, requests views of the AMS’s Full Environment Representation for the passengers’ benefit, and receives information from the AMS that may be relevant to passengers. The HCI may also communicate with Remote HCIs. Note that this document does not address the format of the interactions of the HCI with the AMS and with Remote HCIs (see Figure 1).

The HCI interacts with the humans in the cabin in several ways:

- By responding to commands/queries from one or more humans at the same time, e.g.:

  - Commands to go to a waypoint, park at a place, etc.

  - Commands with an effect in the cabin, e.g., turn off air conditioning, turn on the radio, call a person, open window or door, search for information, etc.

- By conversing with and responding to questions from one or more humans at the same time about travel-related issues (in-depth domain-specific conversation), e.g.:

  - Humans request information, e.g., time to destination, route conditions, weather at destination, etc.

  - CAV offers alternatives to humans, e.g., long but safe way, short but likely to have interruptions.

  - Humans ask questions about objects in the cabin.

- By following the conversation on travel matters held by humans in the cabin if 1) the passengers allow the HCI to do so, and 2) the processing is carried out inside the CAV.
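The two conditions under which the HCI may follow the passengers’ conversation can be written as a single guard. The sketch below is only an illustration: the function name and its parameters are hypothetical and do not come from the specification.

```python
def may_follow_conversation(passengers_allow: bool, processed_inside_cav: bool) -> bool:
    """Return True only if both conditions stated above hold:
    1) the passengers allow the HCI to follow the conversation, and
    2) the processing is carried out inside the CAV.
    """
    return passengers_allow and processed_inside_cav
```

Both conditions are required, so consent alone is not sufficient if the processing would take place outside the CAV.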

3 I/O Data

Table 1 gives the input/output data of Human-CAV Interaction.

Note that communication with the Autonomous Motion Subsystem (AMS) and remote HCI Subsystem is not specified here.

Table 1 – I/O data of Human-CAV Interaction

| Input data | From | Description |
| --- | --- | --- |
| Input Audio | Environment Sensing Subsystem | User authentication; User command; User conversation with HCI |
| Input Text | User | Text complementing/replacing User input |
| Input Visual | Environment Sensing Subsystem | User authentication; User command; User conversation with HCI |
| AMS-HCI Message | Autonomous Motion Subsystem | AMS response to HCI request. |
| Remote-Ego HCI Message | Remote HCI | Remote HCI to Ego HCI. |

| Output data | To | Comments |
| --- | --- | --- |
| Output Audio | Cabin Passengers | HCI’s avatar Audio. |
| Output Visual | Cabin Passengers | HCI’s avatar Visual. |
| HCI-AMS Message | Autonomous Motion Subsystem | HCI request to AMS, e.g., Route or Point of View. |
| Ego-Remote HCI Message | Remote HCI | Ego HCI to Remote HCI. |
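The rows of Table 1 can be held in a small typed structure, for example to look up which data items are exchanged with a given peer. This is a sketch under stated assumptions: the types `Direction` and `HciDataItem` and the function `items_exchanged_with` are hypothetical, and the peer of the HCI-AMS Message is written here as "Autonomous Motion Subsystem".

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Direction(Enum):
    INPUT = "input"
    OUTPUT = "output"


@dataclass(frozen=True)
class HciDataItem:
    name: str
    direction: Direction
    peer: str  # "From" column for inputs, "To" column for outputs


# Data items copied from Table 1 (peer of HCI-AMS Message normalised, see lead-in).
HCI_IO = (
    HciDataItem("Input Audio", Direction.INPUT, "Environment Sensing Subsystem"),
    HciDataItem("Input Text", Direction.INPUT, "User"),
    HciDataItem("Input Visual", Direction.INPUT, "Environment Sensing Subsystem"),
    HciDataItem("AMS-HCI Message", Direction.INPUT, "Autonomous Motion Subsystem"),
    HciDataItem("Remote-Ego HCI Message", Direction.INPUT, "Remote HCI"),
    HciDataItem("Output Audio", Direction.OUTPUT, "Cabin Passengers"),
    HciDataItem("Output Visual", Direction.OUTPUT, "Cabin Passengers"),
    HciDataItem("HCI-AMS Message", Direction.OUTPUT, "Autonomous Motion Subsystem"),
    HciDataItem("Ego-Remote HCI Message", Direction.OUTPUT, "Remote HCI"),
)


def items_exchanged_with(peer: str) -> List[str]:
    """Names of all data items whose source or destination is `peer`."""
    return [item.name for item in HCI_IO if item.peer == peer]
```

For example, `items_exchanged_with("Remote HCI")` lists the two messages exchanged between the Ego HCI and a Remote HCI, one in each direction.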

4 Functions of AI Modules

Table 2 gives the functions of all Human-CAV Interaction AIMs.

Table 2 – Functions of Human-CAV Interaction’s AI Modules

| AIM | Function |
| --- | --- |
| Audio-Visual Scene Description | 1. Receives Input Audio and Visual captured by the appropriate (indoor or outdoor) Input Audio (Microphone Array), Input Visual and Input LiDAR. 2. Produces the Audio-Visual Scene Descriptors. |
| Automatic Speech Recognition | 1. Receives Speech Objects. 2. Produces Recognised Text. |
| Visual Object Identification | 1. Receives Visual Scene Descriptors. 2. Uses Visual Objects and visual information from human (finger pointing). 3. Provides the ID of the class of objects of which the indicated Visual Object is an Instance. |
| Natural Language Understanding | 1. Receives Recognised Text. 2. Uses context information (e.g., Instance ID of object). 3. Produces NLU Text (either Refined or Input) and Meaning. |
| Speaker Identity Recognition | 1. Receives Speech Object. 2. Produces Speaker ID. |
| Personal Status Extraction | 1. Receives Speech Object, Meaning, Refined Text, Face Descriptors and Body Descriptors of a human with a Participant ID. 2. Produces the Personal Status of a human. |
| Face Identity Recognition | 1. Receives Face Object of a human with a Participant ID. 2. Produces Face ID. |
| Entity Dialogue Processing | 1. Receives Speaker ID and Face ID, AV Scene Descriptors, Meaning, Text from Natural Language Understanding, Visual Object ID, and Personal Status. 2. Produces Machine Text (HCI response) and Machine (HCI) Personal Status. |
| Personal Status Display | 1. Receives Machine Text and Machine Personal Status. 2. Produces Machine’s Portable Avatar. |
| Audio-Visual Scene Rendering | 1. Receives AV Scene Descriptors or Portable Avatar. 2. Produces Output Text, Output Audio, and Output Visual. |

5 I/O Data of AI Modules

Table 3 gives the input and output data of the AI Modules of the Human-CAV Interaction depicted in Figure 1.

Table 3 – AI Modules of Human-CAV interaction

| AIM | Input | Output |
| --- | --- | --- |
| Audio-Visual Scene Description | – Input Audio – Input Visual | – AV Scene Descriptors |
| Automatic Speech Recognition | – Speech Object | – Recognised Text |
| Visual Object Identification | – AV Scene Descriptors – Visual Objects | – Visual Object Instance ID |
| Natural Language Understanding | – Recognised Text – AV Scene Descriptors – Visual Object ID – Input Text | – Natural Language Understanding Text – Meaning |
| Speaker Identity Recognition | – Speech Object | – Speaker ID |
| Personal Status Extraction | – Meaning – Input Speech – Face Descriptors – Body Descriptors | – Personal Status |
| Face Identity Recognition | – Face Object | – Face ID |
| Entity Dialogue Processing | – Remote-Ego HCI Message – AMS-HCI Message – Speaker ID – Meaning – Natural Language Understanding Text – Personal Status – Face ID | – Ego-Remote HCI Message – HCI-AMS Message – Machine Text – Machine Personal Status |
| Personal Status Display | – Machine Personal Status – Machine Text | – Machine Portable Avatar |
| Audio-Visual Scene Rendering | – AV Scene Descriptors – Machine Portable Avatar – Point of View | – Output Text – Output Audio – Output Visual |
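The inputs and outputs in Table 3 imply an order in which the AIMs can run. The sketch below derives one such order with a topological sort from the Python standard library (`graphlib`, Python 3.9 or later). The dependency map is read off Table 3 under one assumption that the table does not state explicitly: Speech Objects, Face Objects, Visual Objects, Face Descriptors and Body Descriptors are taken to originate from the AV Scene Descriptors. The names `DEPENDS_ON` and `execution_order` are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

AVSD = "Audio-Visual Scene Description"

# AIM -> AIMs whose output it consumes (read off Table 3; the link from
# Speech/Face/Visual Objects and Descriptors to AVSD is an assumption).
DEPENDS_ON: Dict[str, Set[str]] = {
    AVSD: set(),
    "Automatic Speech Recognition": {AVSD},
    "Visual Object Identification": {AVSD},
    "Speaker Identity Recognition": {AVSD},
    "Face Identity Recognition": {AVSD},
    "Natural Language Understanding": {
        AVSD,
        "Automatic Speech Recognition",
        "Visual Object Identification",
    },
    "Personal Status Extraction": {AVSD, "Natural Language Understanding"},
    "Entity Dialogue Processing": {
        "Speaker Identity Recognition",
        "Face Identity Recognition",
        "Natural Language Understanding",
        "Personal Status Extraction",
    },
    "Personal Status Display": {"Entity Dialogue Processing"},
    "Audio-Visual Scene Rendering": {AVSD, "Personal Status Display"},
}


def execution_order(depends_on: Dict[str, Set[str]]) -> List[str]:
    """Return the AIMs in an order where every AIM follows its producers.

    graphlib raises CycleError if the dependencies contain a cycle.
    """
    return list(TopologicalSorter(depends_on).static_order())


order = execution_order(DEPENDS_ON)
```

Several valid orders exist because some AIMs, such as Speaker Identity Recognition and Face Identity Recognition, do not depend on each other. Any of them starts with Audio-Visual Scene Description and ends with Audio-Visual Scene Rendering.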

6 AIW, AIMs and JSON Metadata

Table 4 – AIW, AIMs and JSON Metadata