MPAI-MMC to be adopted as IEEE standard

Exactly six months after MPAI Multimodal Conversation (MPAI-MMC) was approved, the IEEE hosted the kick-off meeting of the P3300 working group (WG), which is tasked with adopting the MPAI technical specification as an IEEE standard. Earlier, MPAI and IEEE had signed an agreement whereby MPAI grants IEEE the right to publish MPAI-MMC as an IEEE standard.

At its first meeting, the WG approved the working draft (WD) of IEEE 3300 and requested IEEE to ballot it. MPAI-MMC is expected to become IEEE 3300 in a couple of months.

The creation of the WG and the development of the IEEE 3300 standard are the natural steps following the issuance of the Call for Patent Pool Administrator by the MPAI-MMC patent holders. The next step will be the development of the Use Cases and Functional Requirements for MPAI-MMC Version 2 that MMC-DC and other groups are busy preparing.

The IEEE 3300 WD is verbatim MPAI-MMC, so this article is a good opportunity to recall the MPAI document and its structure. If you want to follow this description with the actual text, please download it.

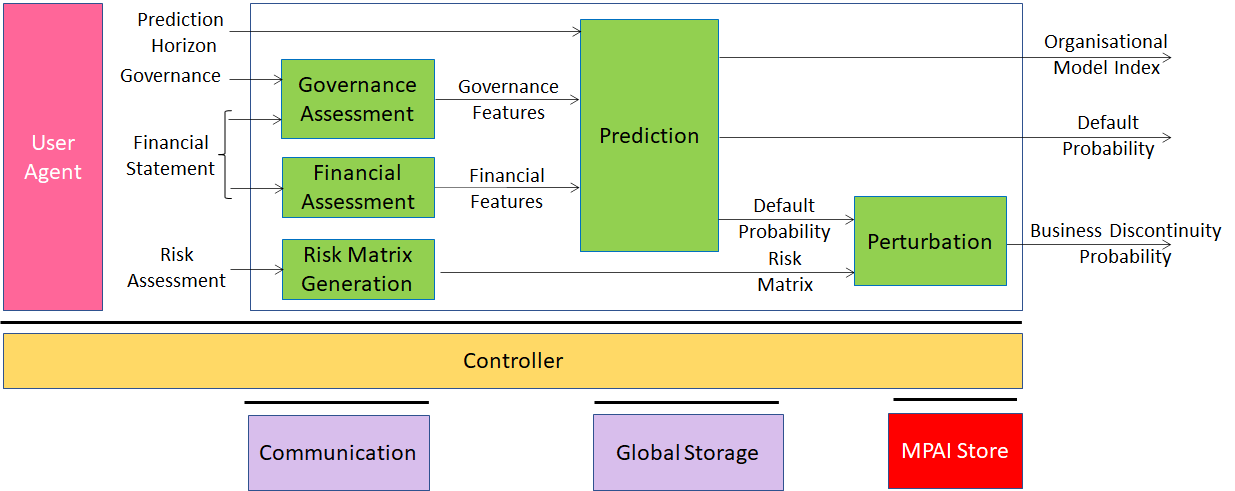

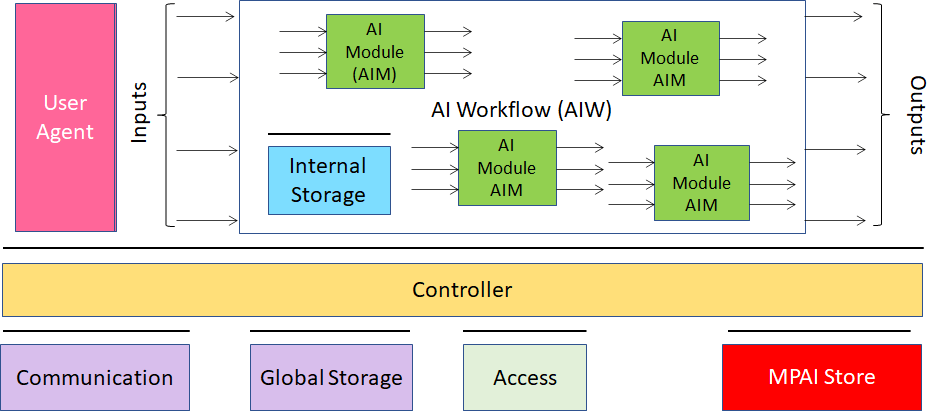

Chapter 1 is an informative introduction to MPAI, the AI Framework (MPAI-AIF) approach to AI data coding standards, including the notion of AI Modules (AIM) organised in an AI Workflow (AIW) executed in the AI Framework (AIF), and the governance of the MPAI ecosystem.

Chapter 2 is a functional specification of the 5 use cases:

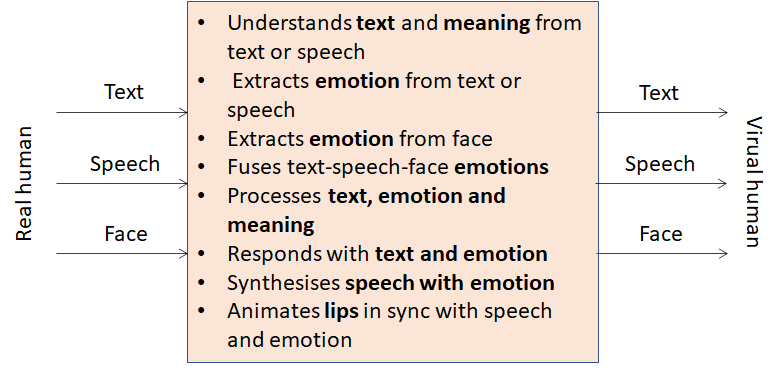

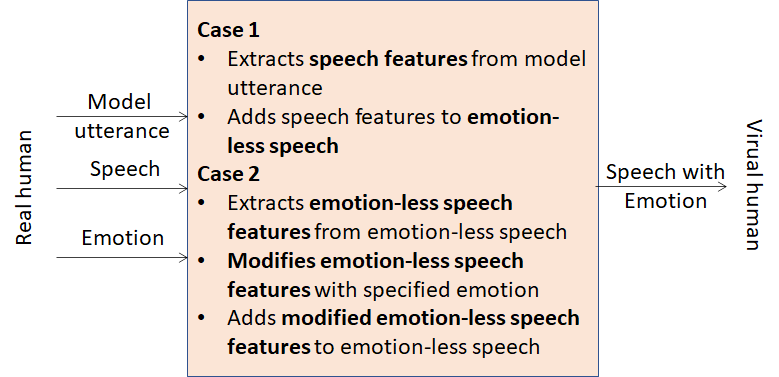

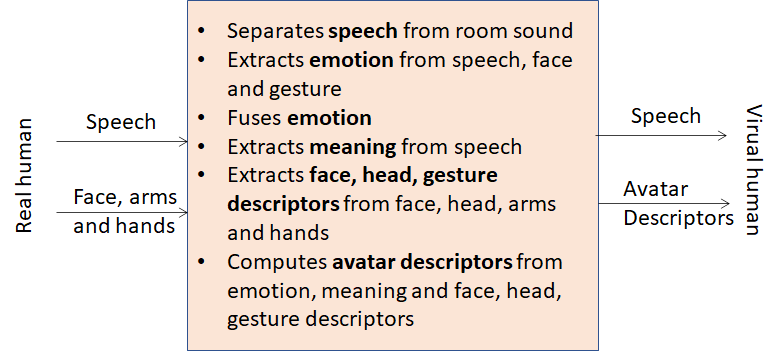

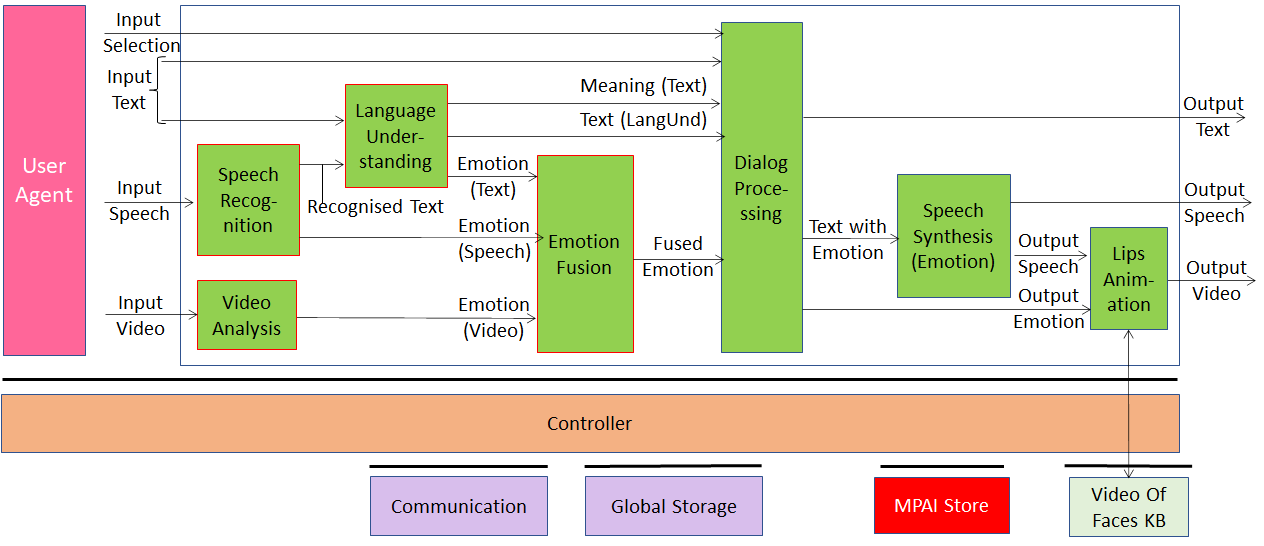

“Conversation with Emotion” (CWE): a human is holding an audio-visual conversation with a machine impersonated by a synthetic voice and an animated face. Both the human and the machine express emotion.

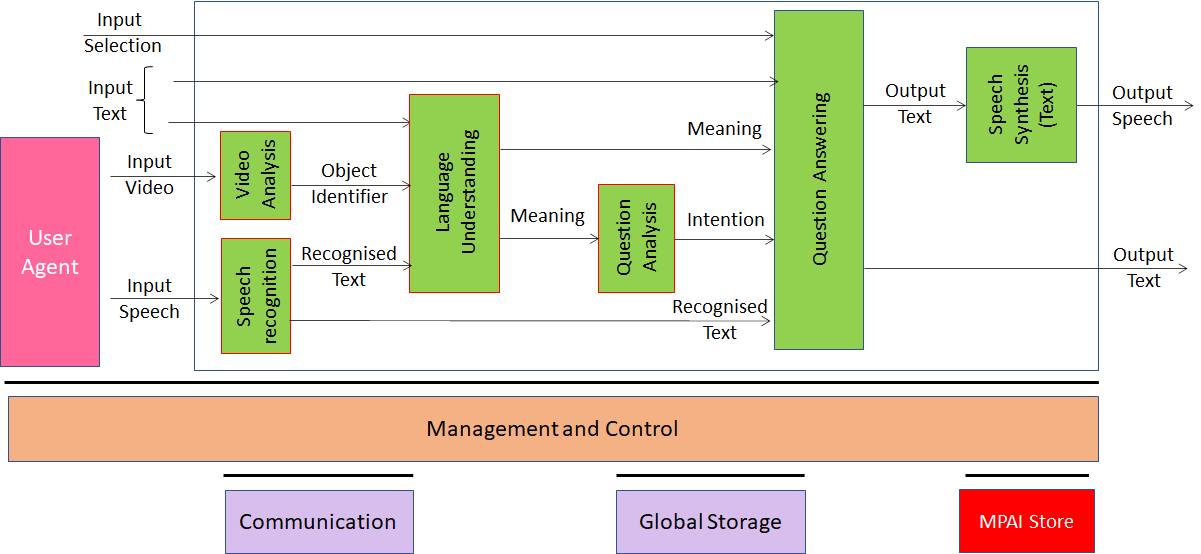

“Multimodal Question Answering” (MQA): a human is holding an audio-visual conversation with a machine impersonated by a synthetic voice. The human asks a question about an object held in their hand.

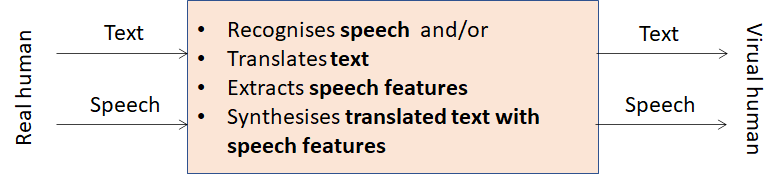

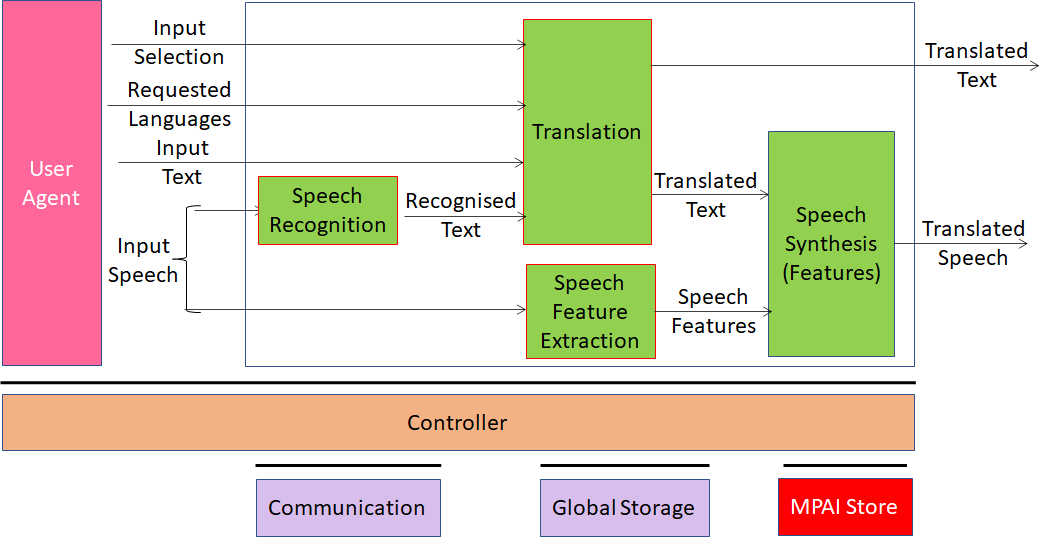

Three Use Cases supporting conversational translation applications. In each Use Case, users can specify whether speech or text is used as input and, if it is speech, whether their speech features are preserved in the interpreted speech:

- “Unidirectional Speech Translation” (UST).

Chapter 3 contains definitions of terms that are specific to MPAI-MMC.

Chapter 4 contains normative and informative references.

Chapter 5 contains the specification of the 5 use cases. For each of them, the following is specified:

- The Scope of the Use Case

- The syntax and semantics of the data entering and leaving the AIW

- The Architecture of AIMs composing the AIW implementing the Use Case

- The functions of the AIMs

- The JSON Metadata describing the AIW
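The relationship between an AIW, its AIMs, and the JSON metadata that describes them can be illustrated with a small sketch. This is only an illustration under stated assumptions: the class names, field names, port names, and the three example modules are hypothetical and are not taken from MPAI-MMC or MPAI-AIF, whose actual metadata schema is defined in the specifications themselves.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class AIM:
    """Hypothetical model of an AI Module: a named block with input and output ports."""
    name: str
    inputs: list[str]
    outputs: list[str]


@dataclass
class AIW:
    """Hypothetical model of an AI Workflow: AIMs plus the connections between their ports."""
    name: str
    aims: list[AIM] = field(default_factory=list)
    # Each connection is (source AIM, output port, destination AIM, input port).
    connections: list[tuple[str, str, str, str]] = field(default_factory=list)

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means every connection is well-formed."""
        by_name = {aim.name: aim for aim in self.aims}
        problems = []
        for src, out_port, dst, in_port in self.connections:
            if src not in by_name or out_port not in by_name[src].outputs:
                problems.append(f"unknown output {src}.{out_port}")
            if dst not in by_name or in_port not in by_name[dst].inputs:
                problems.append(f"unknown input {dst}.{in_port}")
        return problems

    def to_json(self) -> str:
        """Serialise the workflow description to JSON (illustrative layout, not the MPAI schema)."""
        return json.dumps(asdict(self), indent=2)


# Illustrative three-module chain; module and port names are made up for this example.
workflow = AIW(
    name="ExampleTranslationWorkflow",
    aims=[
        AIM("Recognizer", inputs=["speech"], outputs=["text"]),
        AIM("Translator", inputs=["text"], outputs=["translated_text"]),
        AIM("Synthesizer", inputs=["translated_text"], outputs=["speech"]),
    ],
    connections=[
        ("Recognizer", "text", "Translator", "text"),
        ("Translator", "translated_text", "Synthesizer", "translated_text"),
    ],
)

print(workflow.validate())
print(workflow.to_json())
```

The point of the sketch is the separation of concerns listed above: each module declares the data it accepts and produces, the workflow declares how modules are wired together, and a machine-readable description of both can be generated and checked independently of any module's internals.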

Chapter 6 contains the specification of all the AIMs of all the Use Cases:

- A note about the meaning of AIM interoperability

- The syntax and semantics of the data entering and leaving all the AIMs of the 5 AIWs

- The formats of all the AIM data

Annex 1 defines the terms not specific to MPAI-MMC.

Annex 2 contains notices and disclaimers concerning MPAI standards (informative).

Annex 3 provides a brief introduction to the Governance of the MPAI Ecosystem (informative).

Annex 4 and the following annexes provide the AIW and AIM metadata of all MPAI-MMC Use Cases.

MPAI-MMC is just the initial step. Two more MPAI Technical Specifications have been submitted for adoption: AI Framework (MPAI-AIF) and Context-based Audio Enhancement (MPAI-CAE).

MPAI is looking forward to a mutually beneficial collaboration with IEEE.