Introduction

This article responds to the question: where is MPAI with its mission and plans today, on the first day of 2021, three months after its foundation?

Converting a mission into a work plan

Looking back, the MPAI mission “Moving Picture, Audio and Data Coding by Artificial Intelligence” looked very attractive, but the task of converting that nice-looking mission into a work plan was daunting. Is there anything to standardise in Artificial Intelligence (AI)? Thousands of companies use AI but do not need standards. Does AI not signal the end of media and data coding standardisation?

The first answer is that we should first agree on a definition of standard. One is “the agreement reached by a group of individuals who recognise the advantage of all doing certain things in an agreed way”. There is, however, an older definition of standard that says “the agreement that permits large production runs of component parts that are readily fitted to other parts without adjustment”.

Everybody knows that implementing an MPEG audio or video codec means following a minutely prescribed procedure implied by definition #1. But what about an MPAI “codec”?

In the AI world, a neural network does the job it has been designed for and the network designer does not have to share with anyone else how his neural network works. This is true for the “simple” AI applications, like using AI to recognise a particular object, and for some of the large-scale AI applications that major OTTs run on the cloud.

The application scope of AI is expanding, however, and application developers do not necessarily have the know-how, capability or resources to develop all the pieces needed to make a complete AI application. Even if they wanted to, they could very well end up with an inferior solution because they would have to spread their resources across multiple technologies instead of concentrating on those they know best and acquiring the others from the market.

MPAI has adopted the definition of standard as “the agreement that permits large production runs of component parts that are readily fitted to other parts without adjustment”. Therefore, MPAI standards target components, not systems, and the outside of the components, not their inside. The goal is, indeed, to assure users of the standards that the components will be “readily fitted to other parts without adjustment”.

The MPAI definition of standard appeared in an old version of the Encyclopaedia Britannica. The definition was probably inspired decades earlier, at the dawn of industrial standardisation spearheaded by the British Standards Institution, the first modern industry standards association, when drilling, reaming and threading were all the rage in the industry of the time.

Drilling, reaming and threading in AI

AI has nothing to do with drilling, reaming and threading (actually, it could, but this is not a story for today). However, MPAI addresses the problem of standards in the same way a car manufacturer addresses the problem of procuring nuts and bolts.

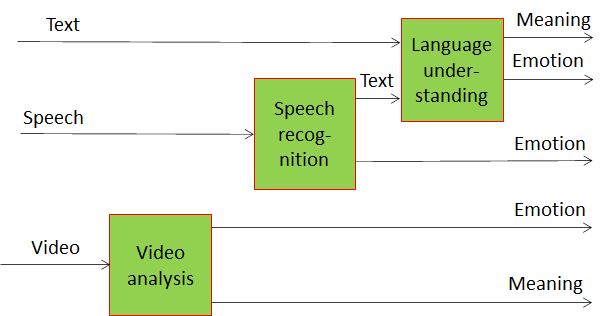

Let us consider an example AI problem: a system that allows a machine to have a more meaningful dialogue with a human than is possible today. With today’s speech recognition and synthesis technologies, it is already possible to have a meaningful man-machine dialogue. However, if you are offering a service and you happen to deal with an angry customer, it is highly desirable for the machine to understand the customer’s state of mind, i.e., her “emotion”, and adapt its answers appropriately, lest the customer get angrier. At yet another level of complexity, if your customer is having an audio-visual conversation with the machine, it would be useful for the machine to extract the person’s emotions from her facial traits.

Sure, some companies can offer complete systems, full of neural networks designed to do the job. There is a problem, though: what control do you, as a user, have over the way AI is used in this big black box? The answer is, unfortunately, none. This is one of the problems of the mass use of AI, where millions, and in the future billions, of people will deal with machines that show levels of intelligence without knowing how that (artificial) intelligence was programmed before being injected into a machine or a service.

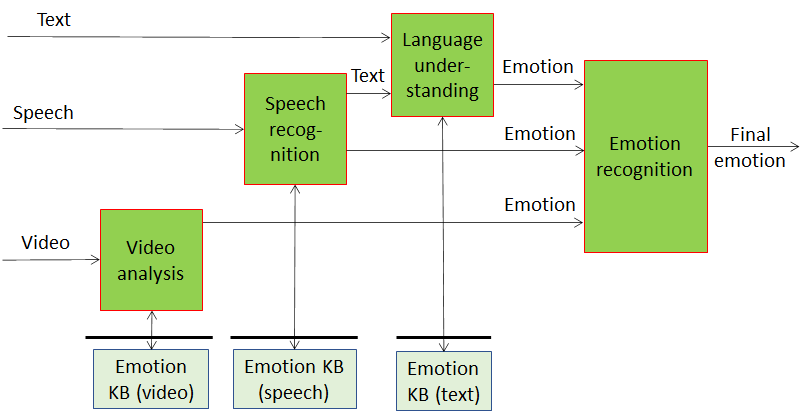

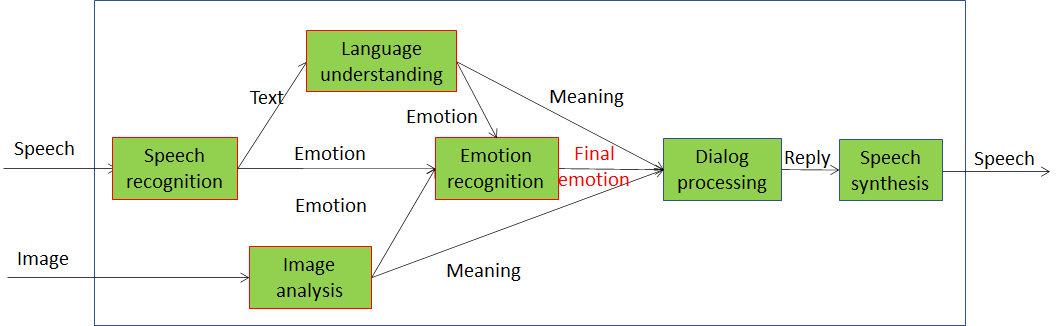

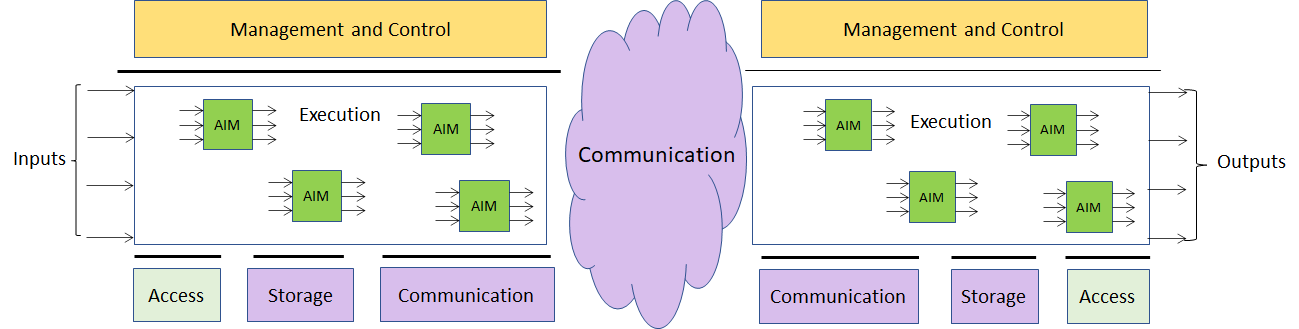

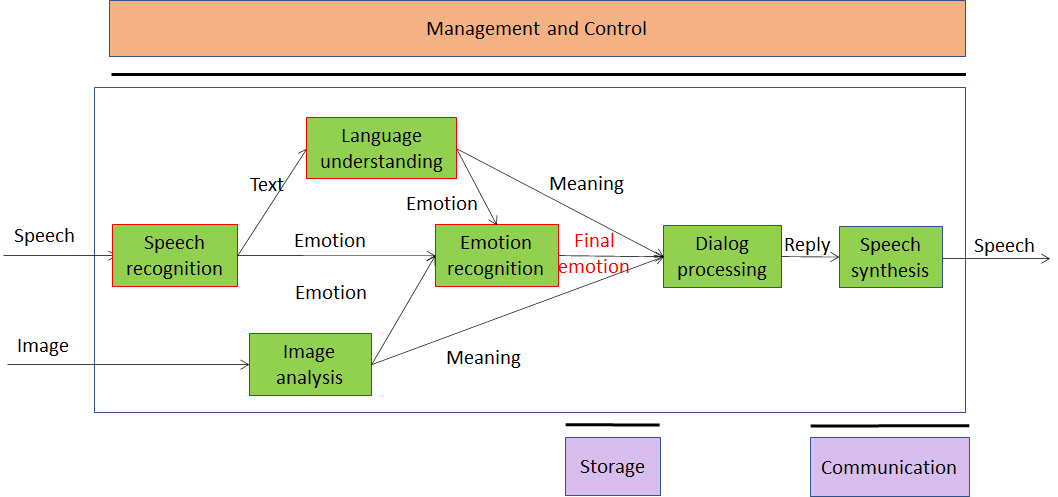

A full solution to this problem is neither part of MPAI’s mission nor something MPAI can offer. However, MPAI standards can offer a path that may lead to a less uncontrolled deployment of AI. This is exemplified by Figure 1 below.

Figure 1 – Human-machine conversation with emotion

Each of the six modules in the figure can be a neural network that has been trained to do a particular job. If the interfaces of the “Speech recognition” module, i.e., the AI equivalent of “mechanical threading”, are respected, the module can be replaced by another having the same interfaces. You end up with a system that has the same functionality but, possibly, different performance. Individual modules can be tested in appropriate testing environments to assess how well each does the job it claims to do.
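The “same interfaces, different module” idea can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the `SpeechRecognizer` interface, the two vendor classes and the use of byte strings as “audio” are hypothetical and are not taken from any MPAI specification.

```python
from typing import Protocol


class SpeechRecognizer(Protocol):
    """Hypothetical module interface: audio in, text out."""

    def recognize(self, audio: bytes) -> str: ...


class VendorARecognizer:
    def recognize(self, audio: bytes) -> str:
        # Stand-in for a trained neural network from one supplier.
        return audio.decode("utf-8").lower()


class VendorBRecognizer:
    def recognize(self, audio: bytes) -> str:
        # A different implementation behind the same interface;
        # here it also normalises whitespace.
        return " ".join(audio.decode("utf-8").lower().split())


def transcribe(module: SpeechRecognizer, audio: bytes) -> str:
    # The caller depends only on the interface, never on the internals.
    return module.recognize(audio)


text_a = transcribe(VendorARecognizer(), b"Hello  World")
text_b = transcribe(VendorBRecognizer(), b"Hello  World")
```

Both modules fit the same “thread”, so either can be dropped into `transcribe`; they differ only in the quality of what they return, which is exactly what a testing environment would measure.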

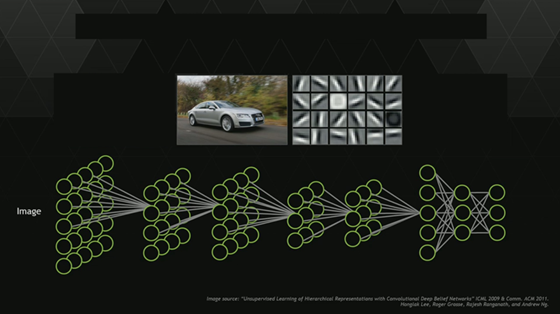

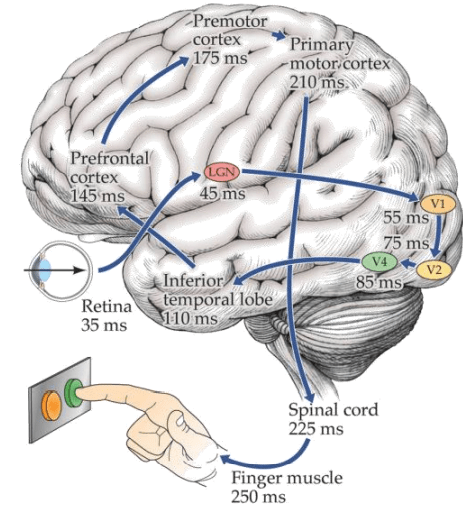

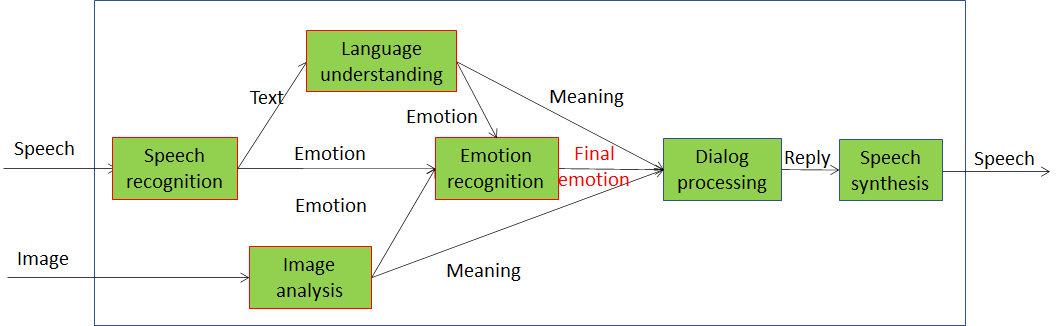

It is useful to compare this approach with the way we understand the human brain to operate. Our brain is not simply a network of 100 billion variously connected neurons. It is a system of “modules” whose nature and functions have been researched for more than a century. Each “module” is made of smaller components. All “modules” and their connections are implemented with the same technology: interconnected neurons.

Figure 2, courtesy of Prof. Wen Gao of Pengcheng Lab, Shenzhen, Guangdong, China, shows the processing steps of an image in the human brain until the content of the image is “understood” and the “push a button” action is ordered.

Figure 2 – The path from the retina to finger actuation in a human

One module in the figure is the Lateral Geniculate Nucleus (LGN), which connects the optic nerve to the occipital lobe. The LGN has 6 layers, a kind of sub-module, each of which performs distinct functions. The same holds for the other modules along the path.

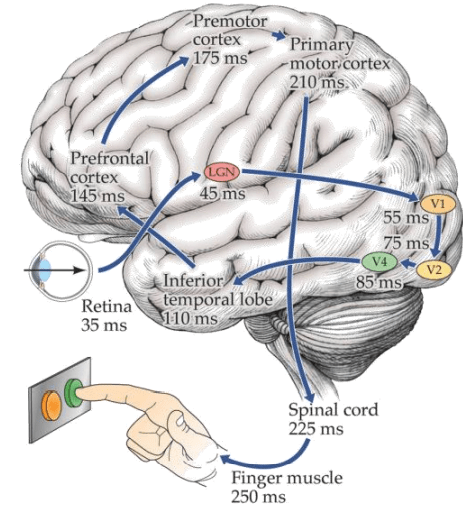

Independent modules need an environment

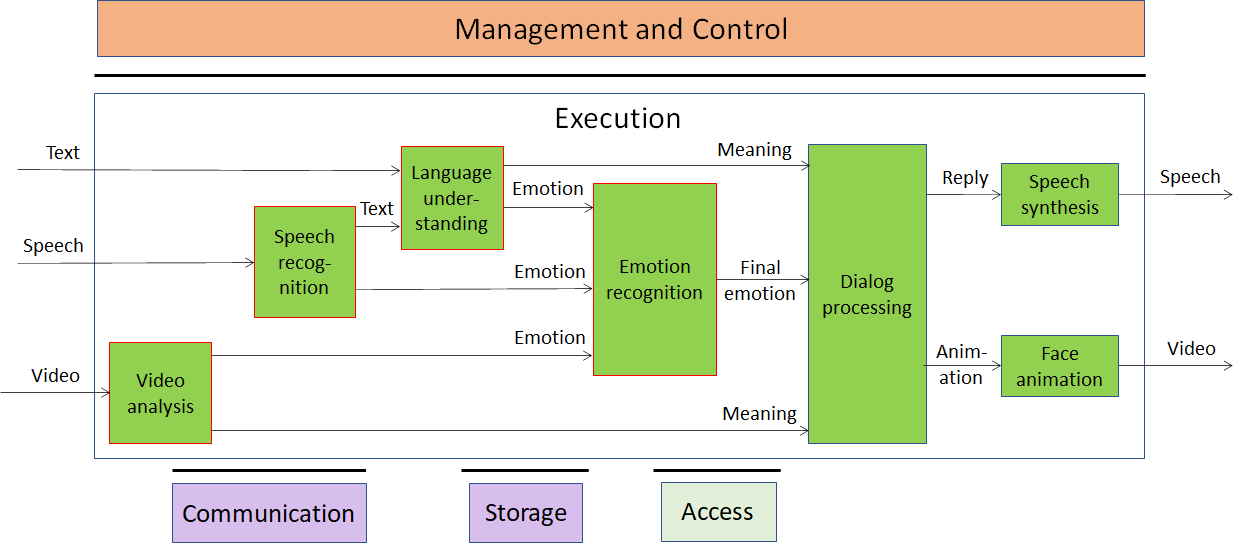

We do not know what “entity” in the human brain controls the thousands of processes that take place in it, but we know that without an infrastructure governing the operation we cannot make the modules of Figure 1 operate and produce the desired results.

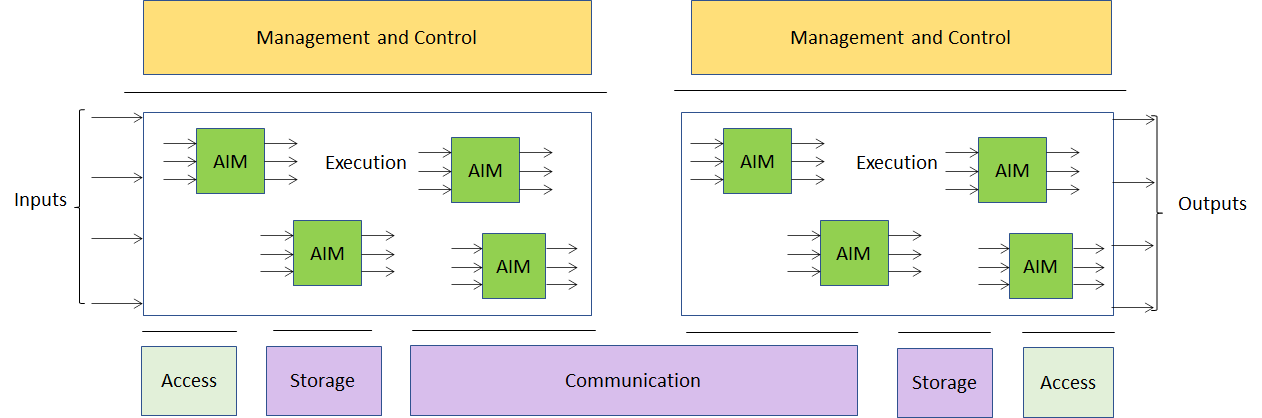

The environment where “AI modules” operate is clearly a target for a standard and MPAI has already defined the functional requirements for what it calls AI Framework, depicted in Figure 3. A Call for Technologies has been launched and submissions are due 2021/02/15.

Figure 3 – The MPAI AI Framework model (MPAI-AIF)

The inputs at the left-hand side correspond to the visual information from the retina in Figure 2, and the outputs correspond to the activation of the muscle. One AI Module (AIM) could correspond to the LGN and another to the V1 visual cortex, Storage could correspond to the short-term memory, Access to the long-term memory and Communication to the structure of axons connecting the 100 billion neurons. The AI Framework model allows for distributed instances of AI Frameworks, something that has no counterpart in the brain, unless we believe in the possibility for a human to hypnotise another human and control their actions 😉.

The other element of the AI Framework that has no counterpart in the human brain – until proven otherwise, I mean – is the Management and Control component. In MPAI this clearly plays a very important role, as demonstrated by the MPAI-AIF Functional Requirements.
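To make the role of Management and Control more concrete, here is a toy Python sketch of a controller that registers AIMs, runs them in order and keeps intermediate results in a Storage area. It is only an illustration under stated assumptions: the class name, the dictionary-based message format and the two example AIMs are hypothetical, and none of this is the MPAI-AIF interface, which was still the subject of a Call for Technologies at the time of writing.

```python
from typing import Callable, Dict, List

# Hypothetical message format: each AIM takes a dict and returns a dict.
Message = Dict[str, str]
AIM = Callable[[Message], Message]


class ManagementAndControl:
    """Toy stand-in for the Management and Control component."""

    def __init__(self) -> None:
        self.aims: Dict[str, AIM] = {}
        self.order: List[str] = []
        self.storage: Dict[str, Message] = {}  # plays the role of Storage

    def register(self, name: str, aim: AIM) -> None:
        # Re-registering a name swaps the AIM but keeps its place in the chain.
        if name not in self.aims:
            self.order.append(name)
        self.aims[name] = aim

    def run(self, message: Message) -> Message:
        for name in self.order:
            message = self.aims[name](message)
            self.storage[name] = dict(message)  # keep each intermediate result
        return message


def speech_recognition(msg: Message) -> Message:
    # Placeholder "recognition": the audio is already text in this sketch.
    return {**msg, "text": msg["audio"].lower()}


def emotion_recognition(msg: Message) -> Message:
    # Placeholder rule standing in for a trained emotion classifier.
    emotion = "angry" if "!" in msg["text"] else "neutral"
    return {**msg, "emotion": emotion}


controller = ManagementAndControl()
controller.register("speech", speech_recognition)
controller.register("emotion", emotion_recognition)
result = controller.run({"audio": "WHERE IS MY ORDER!"})
```

Calling `register` again with an existing name replaces that AIM without touching the rest of the chain, which is the kind of substitution the framework is meant to allow.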

Implementing human-machine conversation with emotion

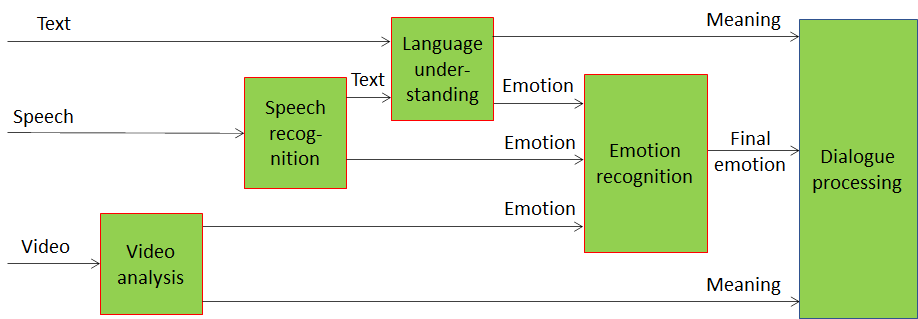

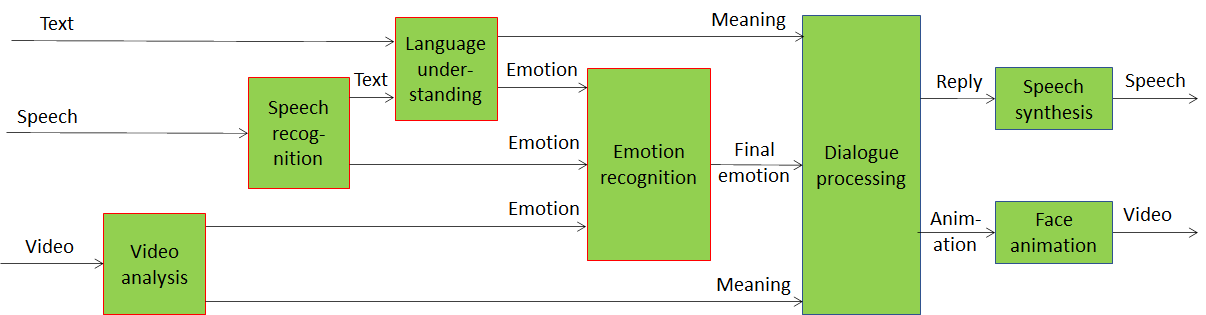

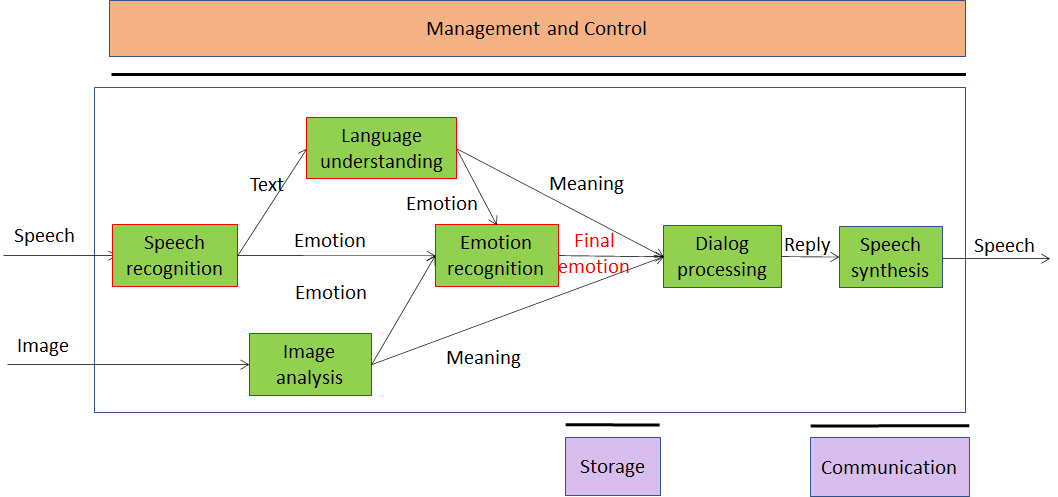

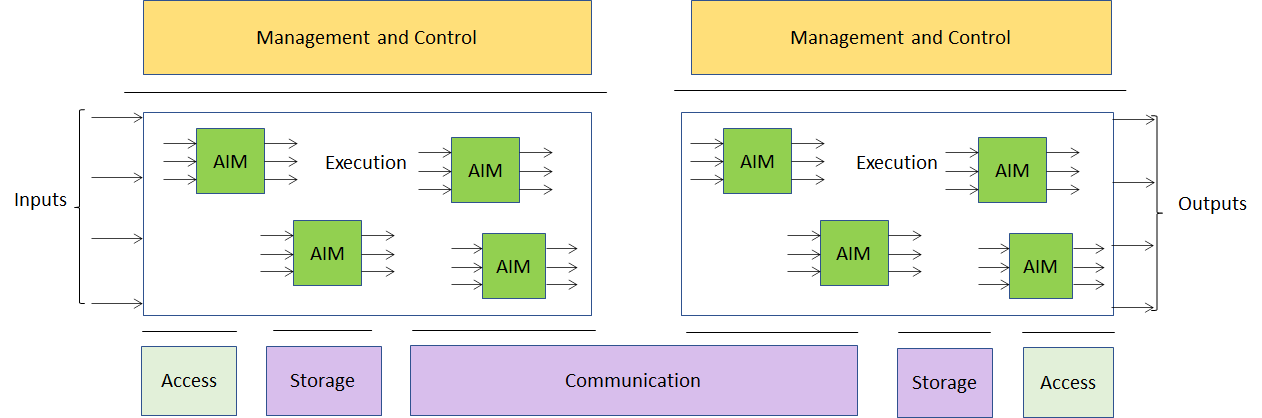

Figure 1 is a variant of an MPAI Use Case called Conversation with Emotion, one of the 7 Use Cases that have reached the Commercial Requirements stage in MPAI. An implementation using the AI Framework can be depicted as in Figure 4.

Figure 4 – A fully AI-based implementation of human-machine conversation with emotion

If the six AIMs are implemented according to the emerging MPAI-AIF standard, then they can be individually obtained from an open “AIM market” and added to or replaced in Figure 4. Of course, a machine capable of having a conversation with a human can be implemented in many ways. However, a non-standard system must be designed and implemented in all its components, and users have less visibility of how the machine works.

One could ask: why should AI Modules be “AI”? Why can’t they simply be Modules, i.e., implemented with legacy data processing technologies? Indeed, data processing in this and other fields has a decades-long history. While AI technologies are fast maturing, some implementers may wish to re-use some legacy Modules they have in their stores.

The AI Framework is open to this possibility and Figure 5 shows how this can be implemented. AI Modules contain the necessary intelligence in the neural networks inside the AIM, while legacy modules typically need Access to an external Knowledge Base.

Figure 5 – A mixed AI-legacy implementation of human-machine conversation with emotion
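The difference between the two kinds of module can be sketched in Python as follows. The interface, the class names, the toy “weights” and the contents of the knowledge base are all hypothetical and chosen only for illustration; the point is that both modules expose the same interface, while only the legacy one depends on an external Knowledge Base.

```python
from typing import Dict, Protocol


class EmotionModule(Protocol):
    """Hypothetical common interface for an emotion-recognition module."""

    def emotion(self, text: str) -> str: ...


class NeuralEmotionAIM:
    """AI Module: the intelligence lives inside the module.

    The toy weights below stand in for a trained neural network.
    """

    def __init__(self) -> None:
        self._weights = {"terrible": -1.0, "late": -0.5, "thanks": 1.0}

    def emotion(self, text: str) -> str:
        score = sum(self._weights.get(w, 0.0) for w in text.lower().split())
        return "angry" if score < 0 else "calm"


class LegacyEmotionModule:
    """Legacy module: rules are looked up in an external Knowledge Base."""

    def __init__(self, knowledge_base: Dict[str, str]) -> None:
        self._kb = knowledge_base

    def emotion(self, text: str) -> str:
        for word in text.lower().split():
            if word in self._kb:
                return self._kb[word]
        return "calm"


knowledge_base = {"terrible": "angry", "late": "angry"}
modules = [NeuralEmotionAIM(), LegacyEmotionModule(knowledge_base)]
labels = [m.emotion("This is terrible") for m in modules]
```

Because the rest of the system sees only the shared interface, an implementer could start with the legacy module and later replace it with an AI Module without changing anything else.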

Conclusions

This article has described how MPAI is implementing its mission of developing standards in the Moving Picture, Audio and Data Coding by Artificial Intelligence domain. The method described blends the need to have a common reference (the “agreement”, as called above) with the need to leave ample room for competition between actual implementations of MPAI standards.

The subdivision of a possibly complex AI system into elementary blocks – AI Modules – not only promotes the establishment of a competitive market of AI Modules, but also gives users an insight into how the components of the AI system operate, hence giving back to humans more control over AI systems. It also lowers the threshold to the introduction of AI, spreading its benefits to a larger number of people.