An overview of the MPAI Metaverse Model – Technologies standard

The MPAI Metaverse Model – Technologies standard – in short, MMM-TEC V2.0 – is the first open metaverse standard enabling independently designed and implemented metaverse instances (which MMM-TEC calls M-Instances) and clients to interoperate. These are the main MMM-TEC elements:

- The Architecture is based on Processes acting on Items based on the Rights they hold.

- Items represent any abstract and concrete objects in an M-Instance.

- Processes – possibly in different M-Instances – communicate using the Inter-Process Protocol (IPP).

- Process Actions represent the payload of a message sent by a Process.

- Qualifiers are containers of technology-specific information of an Item.

- The MPAI-MMM API enables fast development of M-Instances.

- Verification Use Cases verify the completeness of the standard.

- The MMM Open-Source Software implementation can easily be installed.

Below is a more extended introduction to MMM-TEC. The full text is available in Technical Specification: MPAI Metaverse Model (MPAI-MMM) – Technologies (MMM-TEC) V2.0.

MMM-TEC defines an M-Instance as an Information and Communication Technologies platform populated by Processes. Processes perform a range of activities. They operate with various degrees of autonomy and interactivity, sense data from the real world, and produce various types of entities called Items. They perform activities represented by Process Actions, or request other Processes, possibly in other M-Instances, to perform them. They hold or acquire Rights on Items and act on the real world in a variety of ways. They can perform Process Actions based on Rights they may hold, acquire, or be granted.

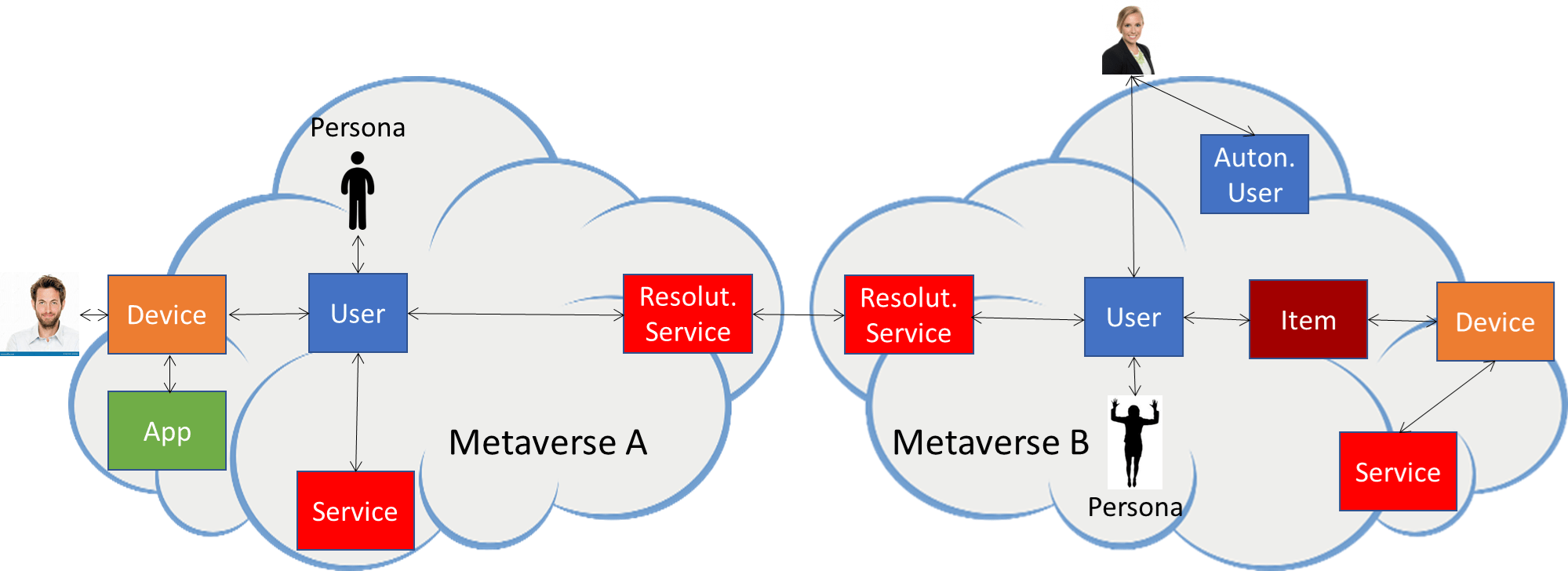

Processes may be characterised as:

- Services providing specific functionalities, such as content authoring.

- Devices connecting the Universe to the M-Instance and the M-Instance to the Universe.

- Apps running on Devices.

- Users representing and acting on behalf of human entities residing in the Universe. A User is rendered as a Persona, i.e., an avatar.

Figure 1 depicts the main elements on which the MMM-TEC Specification is based: humans, Devices, Apps, Users, Services, and Personae.

Figure 1 – Main elements of an M-Instance


Processes Sense Data from U-Environments, i.e., portions of the Universe, and may produce three types of Items, i.e., Data that has been Identified in – and thus recognised by – the M-Instance:

- Digitised – i.e., sensed from the Universe – possibly animated by activities in the Universe.

- Virtual – i.e., imported from the Universe as Data or internally generated – possibly autonomous or driven by activities in the Universe.

- Mixed – Digitised and Virtual.

Processes Perform – either on their initiative, or driven by the actions of humans or machines in the Universe – Process Actions that combine:

- An Action, possibly prepended by:

  - MM: to indicate Actions performed inside the M-Instance, e.g., MM-Animate using a stream to animate a 3D Model with a Spatial Attitude (defined as Position, Orientation, and their velocities and accelerations).

  - MU: to indicate Actions in the M-Instance influencing the Universe, e.g., MU-Actuate to render one of its Items to a U-Location as Media with a Spatial Attitude.

  - UM: to indicate Actions in the Universe influencing the M-Instance, e.g., UM-Embed to place an Item, produced by Identifying a scene UM-Captured at a U-Location, at an M-Location with a Spatial Attitude.

- Items on which the Action is performed or are required for performance, such as Asset, 3D Model, Audio Object, Audio-Visual Scene, etc.

- M-Locations and/or U-Locations where the Process Action is performed.

- Processes with which the Action is performed.

- Time(s) during which the Process Action is requested to be performed and is actually performed.
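To make the composition of a Process Action concrete, here is a minimal sketch that models it as a Python record and reads the scope implied by the MM, MU, or UM prefix. The class and field names are hypothetical illustrations, not the normative MMM-TEC JSON syntax. Spatial Attitude and the Item types are left out to keep the sketch short.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Scope conveyed by each optional Action prefix, as described above.
PREFIX_SCOPE = {
    "MM": "inside the M-Instance",
    "MU": "M-Instance influencing the Universe",
    "UM": "Universe influencing the M-Instance",
}


@dataclass
class ProcessAction:
    # Hypothetical field names; the normative syntax is the MMM-TEC JSON.
    action: str                  # e.g. "MM-Animate", "MU-Actuate", "UM-Embed"
    items: List[str]             # Items acted on or required for performance
    m_locations: List[str] = field(default_factory=list)
    u_locations: List[str] = field(default_factory=list)
    processes: List[str] = field(default_factory=list)  # Processes involved
    requested_time: Optional[str] = None  # when performance is requested
    performed_time: Optional[str] = None  # when it was actually performed

    def scope(self) -> Optional[str]:
        """Return the scope implied by the Action prefix, or None if unprefixed."""
        prefix, sep, _ = self.action.partition("-")
        return PREFIX_SCOPE.get(prefix) if sep else None


embed = ProcessAction(action="UM-Embed", items=["Item-1"], m_locations=["M-Loc-1"])
print(embed.scope())  # Universe influencing the M-Instance
```

An unprefixed Action simply yields no scope, which mirrors the "possibly prepended" wording of the list above.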

Processes may hold Rights on an Item, i.e., they may perform the set of Process Actions listed in their Rights. An Item may include Rights signalling which Processes may perform Process Actions on it. Processes affect U-Environments and/or M-Instances using Items in ways that are Consistent with the goals of the M-Instance as expressed by the Rules, within the M-Capabilities of the M-Instance, e.g., to support Transactions, and respecting applicable laws and regulations.

Processes perform activities strictly inside the M-Instance or have various degrees of interaction with Data sensed from and/or actuated in the Universe.

Processes may request other Processes to perform Process Actions on their behalf by using the Inter-Process Protocol, possibly after Transacting a Value (i.e., an Amount in a Currency) to a Wallet.
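The pay-then-request pattern can be sketched as follows, assuming a simple in-memory Wallet that holds one balance per Currency. `Wallet`, `request_with_payment`, and the message keys are hypothetical. The dictionary only stands in for an Inter-Process Protocol message and does not reproduce the IPP format. `Decimal` is used so that Amounts are not subject to floating-point rounding.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Dict


@dataclass(frozen=True)
class Value:
    """A Value: an Amount in a Currency."""
    amount: Decimal
    currency: str


class Wallet:
    """Hypothetical in-memory Wallet holding one balance per Currency."""

    def __init__(self, owner: str) -> None:
        self.owner = owner
        self.balances: Dict[str, Decimal] = {}

    def deposit(self, value: Value) -> None:
        self.balances[value.currency] = (
            self.balances.get(value.currency, Decimal(0)) + value.amount
        )

    def transact(self, value: Value, to: "Wallet") -> None:
        """Move a Value to another Wallet, refusing invalid amounts and overdrafts."""
        held = self.balances.get(value.currency, Decimal(0))
        if value.amount <= 0 or held < value.amount:
            raise ValueError("invalid amount or insufficient balance")
        self.balances[value.currency] = held - value.amount
        to.deposit(value)


def request_with_payment(payer: Wallet, performer: Wallet,
                         price: Value, process_action: str) -> Dict[str, str]:
    """Transact the price first, then build a hypothetical IPP-style request."""
    payer.transact(price, performer)
    return {"from": payer.owner, "to": performer.owner,
            "processAction": process_action}


buyer, seller = Wallet("UserA"), Wallet("ServiceB")
buyer.deposit(Value(Decimal("10"), "EUR"))
msg = request_with_payment(buyer, seller, Value(Decimal("3"), "EUR"), "MM-Animate")
print(buyer.balances["EUR"], seller.balances["EUR"])  # 7 3
```

The Transaction is performed before the request is built, so a failed payment raises an error and no request is sent.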

An M-Instance is managed by an M-Instance Manager. Initially, the M-Instance Manager has Rights covering the M-Instance. It may decide to define certain subsets inside the M-Instance, called M-Environments, on which it has Rights, and to attach Rights to them.

A Registering human may:

- Request to Register to open an Account of a certain class.

- Be requested to provide their Personal Profile and possibly to perform a Transaction to open an Account.

- Obtain in exchange a set of Rights listing the Process Actions their Processes may perform. Rights have Levels indicating that the Rights are:

  - Internal, e.g., assigned by the M-Instance at Registration time according to the M-Instance Rules and the Account type.

  - Acquired, e.g., obtained on the Process's own initiative.

  - Granted to the Process by another Process.

MMM-TEC V2.0 does not specify how an M-Instance verifies that the Process Actions performed by a Process comply with the Process's Rights or the M-Instance Rules. An M-Instance may decide to verify the full set of Activity Data (the log of performed Process Actions), to make verifications based on claims by another Process, to make random verifications, or to make no verification at all. Therefore, MMM-TEC V2.0 does not specify how an M-Instance Manager can sanction non-complying Processes.
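Because the verification policy is left to each M-Instance, the sketch below shows only one possible choice. It checks Activity Data against Rights either in full or by random sampling. The record shapes and function names are hypothetical. A Right here is simplified to "this Process may perform this Action on this Item", and any Level (Internal, Acquired, or Granted) is treated as sufficient.

```python
import random
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Set


class Level(Enum):
    """Rights Levels named in MMM-TEC."""
    INTERNAL = "Internal"
    ACQUIRED = "Acquired"
    GRANTED = "Granted"


@dataclass(frozen=True)
class Right:
    # Hypothetical shape: `process` may perform `action` on `item`.
    process: str
    action: str
    item: str
    level: Level


@dataclass(frozen=True)
class ActivityRecord:
    """One entry of Activity Data: a performed Process Action."""
    process: str
    action: str
    item: str


def permitted(record: ActivityRecord, rights: Set[Right]) -> bool:
    # A Right of any Level covering this Process, Action and Item suffices here.
    key = (record.process, record.action, record.item)
    return any((r.process, r.action, r.item) == key for r in rights)


def find_violations(activity: List[ActivityRecord], rights: Set[Right],
                    sample_rate: float = 1.0,
                    rng: Optional[random.Random] = None) -> List[ActivityRecord]:
    """Check all records (sample_rate >= 1.0) or a random sample of them."""
    if rng is None:
        rng = random.Random()
    sampled = [rec for rec in activity
               if sample_rate >= 1.0 or rng.random() < sample_rate]
    return [rec for rec in sampled if not permitted(rec, rights)]


rights = {Right("UserA", "MM-Animate", "Model-1", Level.INTERNAL)}
activity = [ActivityRecord("UserA", "MM-Animate", "Model-1"),
            ActivityRecord("UserA", "MU-Actuate", "Model-1")]
print(find_violations(activity, rights))  # full check flags the MU-Actuate record
```

Passing a seeded `random.Random` makes a sampled verification reproducible. A `sample_rate` of 0 corresponds to the "no verification" option.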

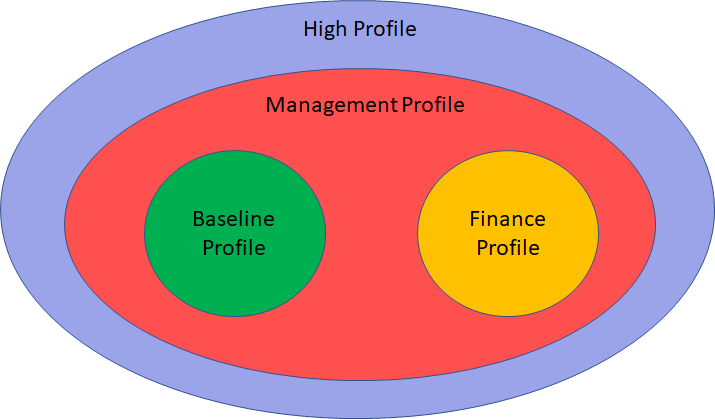

In some cases, implementing an M-Instance could be needlessly costly if all the technologies required by the MMM Technical Specification were mandatory, even when a specific M-Instance has a limited scope. MMM-TEC V2.0 specifies Profiles to facilitate the take-off of M-Instance implementations that conform to the MMM-TEC V2.0 specification without unduly burdening other implementations.

A Profile includes only a subset of the Process Actions, namely those that are expected to be needed and shared by a sizeable number of applications. MMM-TEC V2.0 defines four Profiles (see Figure 2):

- Baseline Profile enables basic applications such as lecture, meeting, and hang-out.

- Finance Profile enables trading activities.

- Management Profile enables a controlled ecosystem with more advanced functionalities.

- High Profile enables all the functionalities of the Management Profile with a few additional functionalities of its own.
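Since a Profile is a subset of Process Actions, a simple way to reason about it is as a set. The sketch below uses placeholder Action names, because the actual per-Profile lists are specified in MMM-TEC V2.0 and are not reproduced here. It encodes only the relation stated above, namely that High contains all of Management. The `supports` check is a hypothetical simplification of conformance.

```python
from typing import FrozenSet, List, Set

# Placeholder Process Action names, not the actual MMM-TEC V2.0 Profile contents.
MANAGEMENT: FrozenSet[str] = frozenset({"ActionA", "ActionB", "ActionC"})
# Stated above: High includes all of Management plus some additions of its own.
HIGH: FrozenSet[str] = MANAGEMENT | {"ActionD"}


def missing_actions(implemented: Set[str], profile: FrozenSet[str]) -> List[str]:
    """Process Actions of the Profile that an implementation does not support."""
    return sorted(profile - implemented)


def supports(implemented: Set[str], profile: FrozenSet[str]) -> bool:
    """True if every Process Action in the Profile is implemented."""
    return not missing_actions(implemented, profile)


impl = {"ActionA", "ActionB", "ActionC"}
print(supports(impl, MANAGEMENT), missing_actions(impl, HIGH))  # True ['ActionD']
```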

Figure 2 – MMM-TEC V2.0 Profiles


MPAI developed some use cases in the two MPAI-MMM Technical Reports (1 and 2) published in 2023 and used them to develop the MMM-ARC and MMM-TEC Technical Specifications. In addition, MMM-TEC V2.0 includes various Verification Use Cases that use Process Actions to verify that the currently specified Actions and Items completely support those Use Cases.

The rapid evolution of certain technology areas is one of the issues that has so far prevented the development of metaverse interoperability standards. MMM-TEC deals with this issue by providing JSON syntax and semantics for all Items. When needed, the JSON syntax references Qualifiers, i.e., MPAI-defined Data Types that supply additional information to the Data in the form of:

- Sub-Type (e.g., the colour space of a Visual Data Type).

- Format (e.g., the compression or the file/streaming format of Speech).

- Attributes (e.g., the Binaural Cues of an Audio Object).

For instance, a Process receiving an Object can understand from the Qualifier referenced in the Object whether it has the technology required to process it or whether it has to rely on a Conversion Service to obtain a version of the Object that matches its P-Capabilities. This approach should help prolong the life of the MMM-TEC specification because, in many cases, only the Qualifier specification will need to be updated, not the MMM-TEC specification.
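The decision described above can be sketched as a small function. It reads the Format in an Object's Qualifier and either uses the Object directly or hands it to a Conversion Service. The Qualifier keys below mirror the Sub-Type/Format/Attributes split but are hypothetical, not the normative MPAI Qualifier syntax. P-Capabilities are modelled, as a simplifying assumption, as the set of Formats the receiving Process can handle. `fake_conversion_service` is a stand-in that only rewrites the Format field.

```python
import json
from typing import Callable, Dict, Set

# Hypothetical Qualifier of an Audio Object (illustrative keys only).
QUALIFIER_JSON = """
{"SubType": {"Channels": 2},
 "Format": "AAC",
 "Attributes": {"BinauralCues": true}}
"""

Converter = Callable[[Dict, str], Dict]


def ready_for(obj: Dict, p_capabilities: Set[str], convert: Converter) -> Dict:
    """Return obj if its Format is supported, else a converted version of it."""
    if obj["Qualifier"].get("Format") in p_capabilities:
        return obj
    if not p_capabilities:
        raise ValueError("no P-Capabilities to convert to")
    target = sorted(p_capabilities)[0]  # deterministic choice of a supported Format
    return convert(obj, target)


def fake_conversion_service(obj: Dict, fmt: str) -> Dict:
    # Stand-in for a Conversion Service: only rewrites the Qualifier's Format.
    return {**obj, "Qualifier": {**obj["Qualifier"], "Format": fmt}}


audio = {"Data": b"...", "Qualifier": json.loads(QUALIFIER_JSON)}
print(ready_for(audio, {"AAC"}, fake_conversion_service) is audio)  # True
print(ready_for(audio, {"WAV", "OPUS"}, fake_conversion_service)["Qualifier"]["Format"])  # OPUS
```

Only the Qualifier is inspected, so supporting a new Format changes the capability set and the Conversion Service, not the receiving logic. This mirrors the longevity argument made above.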

Finally, MMM-TEC V2.0 specifies the MPAI-MMM API. By calling the API, developers can easily develop M-Instances and applications.

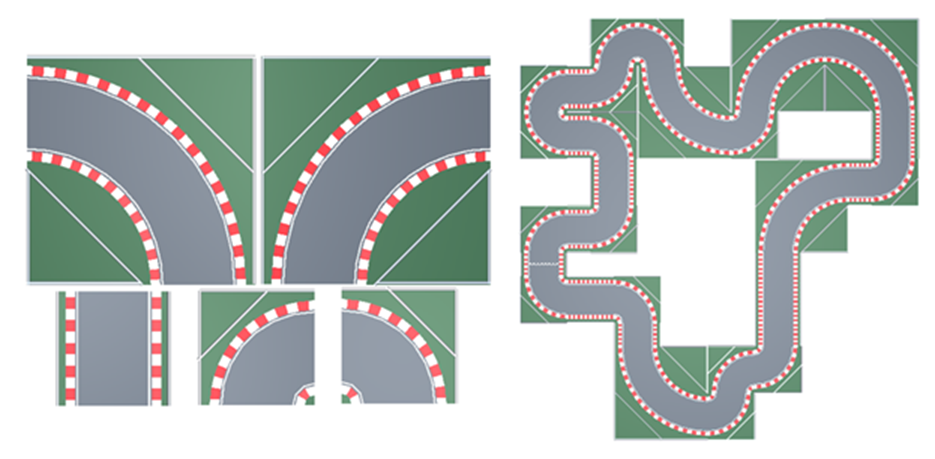

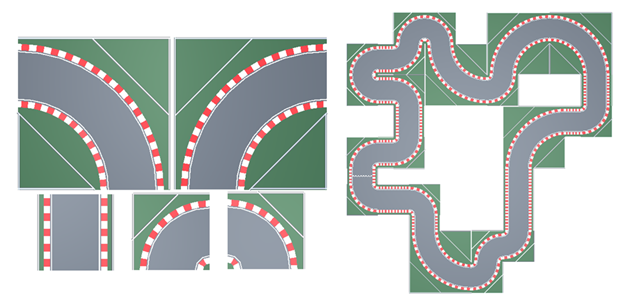

Figure 2 – Modular tiles and an example of a racetrack
