Highlights

- MPAI harnesses its technologies for more secure communication

- Video recordings and presentations of eight recent standards published

- How MPAI communicates

- Some upcoming new standards

- Meetings in the coming March-April meeting cycle

MPAI harnesses its technologies for more secure communication

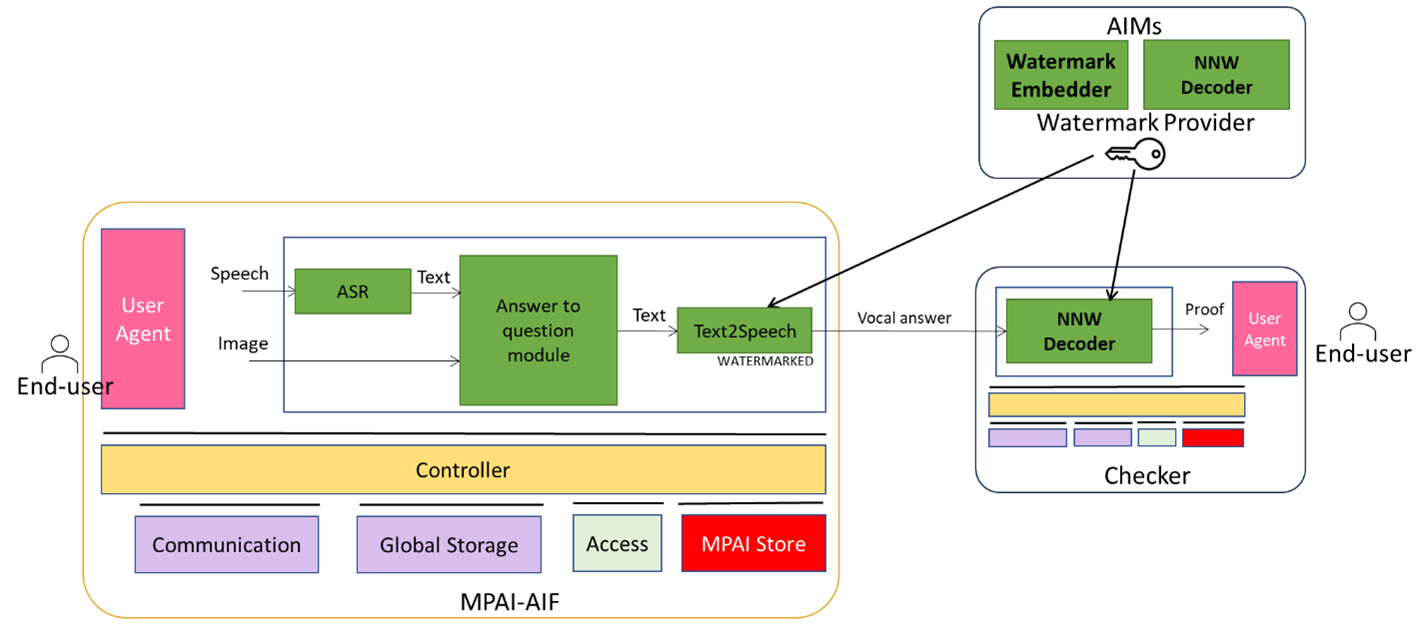

In its activities, MPAI has laid the foundations of several technology elements that it can now leverage to support new applications. This newsletter covers two of them. The first is the AI Framework (MPAI-AIF), which has been available as a standard for more than two years. It is a standard environment enabling dynamic configuration, initialisation, and control of AI Workflows (AIW) composed of AI Modules (AIM). The second is Neural Network Watermarking (MPAI-NNW), for which MPAI has developed standard methods to assess how applying a watermarking technology to a neural network affects its performance.

MPAI is now making available version 1.1 of the MPAI-NNW Reference Software. It includes the AI Framework and an AI Workflow composed of three AIMs: an Automatic Speech Recognition (ASR) AIM; an AIM that responds to queries consisting of a text (the output of the ASR AIM) and an image; and a Text-To-Speech (TTS) AIM that converts the text output of the second AIM to speech. The inference of the TTS AIM is watermarked, so that the issuer of the query (speech + image) can ascertain whether the response they receive comes from the service they intended to use for their request.

Figure 1 – Workflow for watermarking generative Neural Network
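The data flow of this workflow can be illustrated with a minimal Python sketch. Every name in it (`asr_aim`, `query_aim`, `tts_aim`, `SERVICE_KEY`, `SpeechResponse`) is hypothetical and is not part of the MPAI-AIF API or the MPAI-NNW Reference Software. The three AIMs are stubs, and the watermark is simulated with a keyed HMAC tag purely to show where it is attached and how the requester could check it; an actual neural network watermark works differently.

```python
import hashlib
import hmac
from dataclasses import dataclass

# All names are hypothetical; they are not MPAI-AIF or MPAI-NNW APIs.
SERVICE_KEY = b"demo-service-key"  # assumed secret tied to the intended service


@dataclass
class SpeechResponse:
    audio: bytes      # placeholder for synthesised speech
    watermark: bytes  # stand-in for the watermark carried by the TTS output


def asr_aim(speech: bytes) -> str:
    """Stub ASR AIM: treats the speech bytes as already-transcribed UTF-8 text."""
    return speech.decode("utf-8")


def query_aim(text: str, image: bytes) -> str:
    """Stub AIM that answers a query made of a text and an image."""
    return f"Answer to '{text}' about an image of {len(image)} bytes"


def tts_aim(text: str, key: bytes) -> SpeechResponse:
    """Stub TTS AIM: 'synthesises' audio and attaches a keyed tag as watermark."""
    audio = text.encode("utf-8")
    tag = hmac.new(key, audio, hashlib.sha256).digest()
    return SpeechResponse(audio=audio, watermark=tag)


def run_workflow(speech: bytes, image: bytes, key: bytes = SERVICE_KEY) -> SpeechResponse:
    """Chain the three AIMs in the order described in the text."""
    return tts_aim(query_aim(asr_aim(speech), image), key)


def is_from_intended_service(response: SpeechResponse, key: bytes = SERVICE_KEY) -> bool:
    """Check that the response carries the watermark of the expected service."""
    expected = hmac.new(key, response.audio, hashlib.sha256).digest()
    return hmac.compare_digest(expected, response.watermark)
```

A response produced with the expected key passes `is_from_intended_service`, while one produced by a service holding a different key does not.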

On 16 April at 15:00 UTC, MPAI will give a public online presentation of the new version of the MPAI-NNW Reference Software. Register here to attend. The full software implementation of the system will be made available after the presentation.

Video recordings and presentations of eight recent standards published

Here you can get a quick overview to become familiar with the standards, but you can also see the ppt of each presentation, the link to the YouTube recording (YT), and the WimTV recording (nYT).

Portable Avatar Format (MPAI-PAF) enables a receiver to render a speaking avatar embedded in an audio-visual scene using the relevant information provided by the sender and specifies the Personal Status Display Composite AI Module allowing the conversion to a Portable Avatar of text and Personal Status generated by a machine. See more at Portable Avatar Format (MPAI-PAF) ppt YT nYT

MPAI Metaverse Model (MPAI-MMM) – Architecture enables interoperability of two metaverse instances by using 1) the specified Operation Model, 2) the same or compatible Functional Profiles and 3) the same technologies or different technologies while accessing conversion services which losslessly convert one metaverse’s data formats to the other’s. See more at MPAI Metaverse Model (MPAI-MMM) – Architecture ppt YT nYT

Object and Scene Description (MPAI-OSD) specifies data formats, such as Spatial Attitude and Audio-Visual Scene Descriptors, to enable description and localisation of uni- and multi-modal Objects and Scenes for uniform use across MPAI standards. See more at Object and Scene Description (MPAI-OSD) ppt YT nYT

Multimodal Conversation (MPAI-MMC) specifies technologies for the analysis of text, speech, and other non-verbal components used in human-machine and machine-machine conversation, enabling more human-like conversation between humans and machines that emulates conversation between humans in completeness and intensity. See more at Multimodal Conversation (MPAI-MMC) ppt YT nYT

Context-based Audio Enhancement (MPAI-CAE) specifies technologies that improve the user experience for audio-related applications in entertainment, communication, teleconferencing, restoration, etc., in contexts such as the home, the car, on the go, and the studio. See more at Context-based Audio Enhancement (MPAI-CAE) ppt YT nYT

Human and Machine Communication (MPAI-HMC) enables advanced forms of communication between Entities, where an Entity is either a human present in a real space or represented in a Virtual Space, or a Machine represented in a Virtual Space or rendered in the real space as an interacting speaking avatar. See more at Human and Machine Communication (MPAI-HMC) ppt YT nYT

Connected Autonomous Vehicle (MPAI-CAV) – Architecture enables the industry to accelerate the development of explainable, efficient, and affordable CAVs by specifying the Architecture of a CAV based on subsystems and components with standard functions and interfaces. See more at Connected Autonomous Vehicle (MPAI-CAV) – Architecture ppt YT nYT

AI Framework (MPAI-AIF) enables dynamic configuration, initialisation, and control of AI Workflows (AIW) composed of AI Modules (AIM) in environments ranging from MCU to HPC. A standard set of APIs supports non-secure and secure communication, internal and external storage, security functionalities, and access to the MPAI Store. See more at AI Framework (MPAI-AIF) ppt YT nYT

Here are links to the three standards that were not presented:

| Standard | ppt | YouTube (YT) | WimTV (nYT) |
|---|---|---|---|
| Compression and Understanding of Industrial Data (MPAI-CUI) | ppt | YT | nYT |
| Governance of the MPAI Ecosystem (MPAI-GME) | ppt | YT | nYT |
| Neural Network Watermarking (MPAI-NNW) | ppt | YT | nYT |

How MPAI communicates

Getting a grasp of MPAI's work requires significant effort. For this reason, MPAI dedicates resources to communicating what it is, describing its assets, and introducing its plans. Let’s have a look at the many ways you can keep abreast of MPAI activities.

- The principal channel is the MPAI website blog, located on the right-hand side of the MPAI web page. This is where you can find any major MPAI-related news. The amount of information there is simply staggering.

- The MPAI website offers several specialised pages:

  - The Press Releases page includes all MPAI PRs, typically published after a General Assembly.

  - The Newsletters page includes all MPAI Newsletters, typically distributed after a General Assembly.

  - The MPAI Events page includes slides and videos of presentations made by MPAI.

  - The General Assemblies page includes all documents approved for publication by MPAI General Assemblies.

  - The Standards page is an excellent dashboard to navigate the world of MPAI technical activities.

  - The Interviews page lets you get a quick overview of standards or use cases as presented by those who have contributed to their development.

  - The Publications page provides links to papers authored by MPAI members in technical journals about MPAI activity results.

  - The Tweet Carousel page is a collection of animated posts made on social media.

MPAI has an active presence on various social media: LinkedIn, Twitter, Facebook, Instagram, and YouTube.

MPAI sends a monthly Newsletter to its list of subscribers. If you are not subscribed yet, send an email to the MPAI Secretariat.

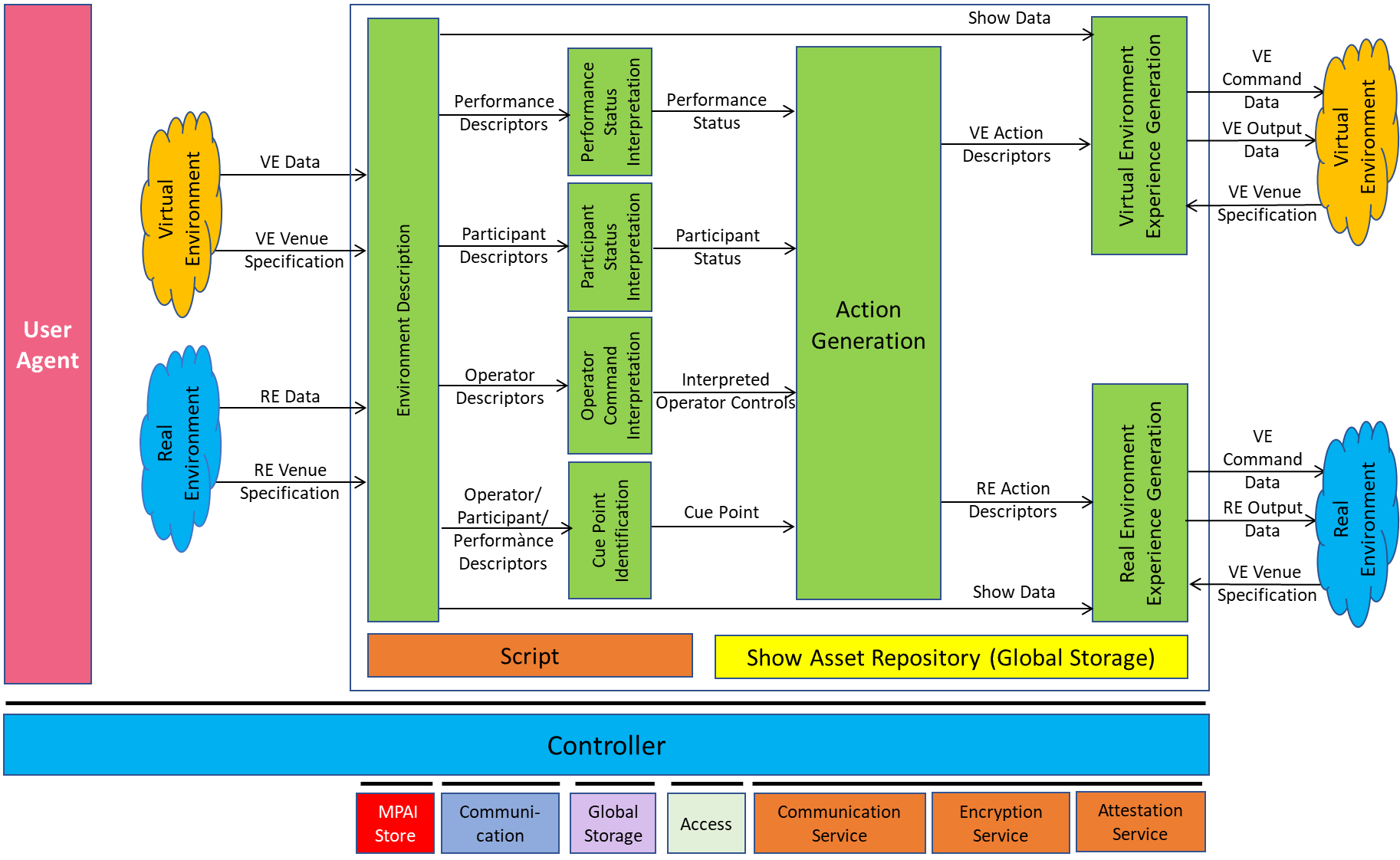

Some upcoming new standards

Extended Reality (XR) Venues (MPAI-XRV) addresses contexts (“Venues”, used as a synonym for Real and Virtual Environments) where the use of XR is enhanced by Artificial Intelligence (AI). Out of the nine use cases identified so far, MPAI has decided to develop a specification for the Live Theatrical Stage Performance use case. Those in the field know that theatrical stage performances such as Broadway shows, musicals, dramas, operas, and other performing arts are increasingly using video scrims, backdrops, and projection mapping to create digital sets. The entire stage and theatre are becoming digital virtual environments. The cost of mounting shows in physical stage sets can be reduced and the user experience greatly enhanced, as immersion domes can surround audiences with virtual environments where live performers interact with the audience. The performance can now extend into the metaverse, and that experience can be projected in the dome while elements of the real experience can be rendered in the metaverse.

MPAI-XRV – Live Theatrical Performance (LTP) addresses the functional requirements and the data types of AI Modules that facilitate live multisensory immersive performances. The use of AI Modules organised in AI Workflows will allow more direct, precise yet spontaneous show implementation and control to achieve the show director’s vision.

Figure 2 depicts the reference model of XRV-LTP. The model has evolved significantly to align it with the complex reality it represents. The Real and Virtual Environments on the left-hand and right-hand sides of the figure are the same environments, seen as source (left) and sink (right).

Figure 2 – Reference Model of XRV – Live Theatrical Performance (XRV-LTP)

Thus, input data come from the Real Environment and the Virtual Environment, and the Real Venue and Virtual Venue Specifications provide information about the respective technical choices of each Venue. The Environment Descriptors AIM extracts relevant descriptors from the data in such a way that the formats of the descriptors are independent of the specifics of the venue. The “Interpretation” AIMs interpret the descriptors and temporally localise the performance in the script (cue point). Interpretations, cue point, and Show Data (a subset of the descriptors) feed the Action Generation AIM, enabling it to produce Virtual and Real Environment Action Descriptors. The Virtual and Real Environment Experience Generation AIMs produce the Virtual and Real Environment Command Data that change the respective Environments.
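The chain of AIMs described above can be sketched as a sequence of plain Python functions. This is only an illustration under stated assumptions: the function names, the dictionary keys (`mic_channel`, `applause_db`, `avatars`, `prefix`), the `mood` rule, and the command string format are all invented for the example and are not taken from the XRV-LTP specification.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical names and data shapes; the specification defines the real ones.


@dataclass
class Interpretation:
    cue_point: int  # position in the script, -1 if not found
    mood: str       # example of an interpreted quantity


def environment_descriptors(real_data: Dict, virtual_data: Dict, real_spec: Dict) -> Dict:
    """Extract venue-independent descriptors, using the venue spec to read raw data."""
    return {
        "spoken_line": real_data.get(real_spec["mic_channel"], ""),
        "audience_level": real_data.get("applause_db", 0.0),
        "avatar_count": len(virtual_data.get("avatars", [])),
    }


def interpret(descriptors: Dict, script: List[str]) -> Interpretation:
    """Interpret the descriptors and locate the performance in the script."""
    line = descriptors["spoken_line"]
    cue = script.index(line) if line in script else -1
    mood = "excited" if descriptors["audience_level"] > 80 else "calm"
    return Interpretation(cue_point=cue, mood=mood)


def generate_actions(interp: Interpretation, show_data: Dict) -> Dict:
    """Produce Real and Virtual Environment action descriptors."""
    lighting = "bright" if interp.mood == "excited" else "dim"
    return {
        "real": {"cue": interp.cue_point, "lighting": lighting},
        "virtual": {"cue": interp.cue_point, "avatars": show_data["avatar_count"]},
    }


def generate_commands(actions: Dict, venue_spec: Dict) -> List[str]:
    """Turn action descriptors into venue-specific commands (assumed string format)."""
    return [f"{venue_spec['prefix']}:{k}={v}" for k, v in sorted(actions.items())]


def run_cycle(real_data: Dict, virtual_data: Dict, script: List[str],
              real_spec: Dict, virtual_spec: Dict) -> Tuple[List[str], List[str]]:
    """One pass: descriptors, interpretation, actions, then commands per environment."""
    descriptors = environment_descriptors(real_data, virtual_data, real_spec)
    interp = interpret(descriptors, script)
    show_data = {"avatar_count": descriptors["avatar_count"]}  # subset of descriptors
    actions = generate_actions(interp, show_data)
    return (generate_commands(actions["real"], real_spec),
            generate_commands(actions["virtual"], virtual_spec))
```

The point of the split is that only the first and last stages read the venue specification, so the interpretation and action stages stay independent of any particular venue.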

MPAI plans to release Technical Specification: XR Venues (MPAI-XRV) – Live Theatrical Stage Performance in the next few months.

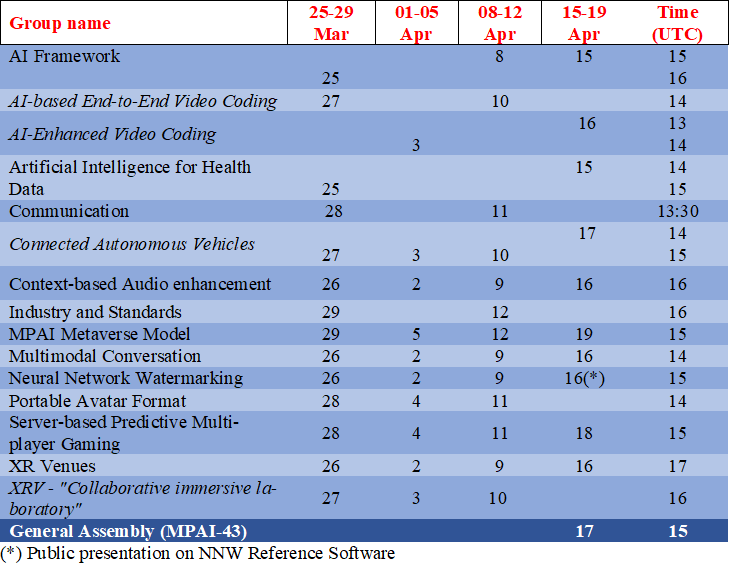

Meetings in the coming March-April meeting cycle

Meetings indicated in normal font are open to MPAI members only. Legal entities and representatives of academic departments that support the MPAI mission and are able to contribute to the development of standards for the efficient use of data can become MPAI members.

Meetings in italic are open to non-members. If you wish to attend, send an email to secretariat@mpai.community.

This newsletter serves to keep the expanding and diverse MPAI community connected.

We are keen to hear from you, so don’t hesitate to give us your feedback.