MPAI publishes the MPAI-MMM API as part of MPAI Metaverse Model – Technologies (MMM-TEC)

Geneva, Switzerland – 16th April 2025. MPAI – Moving Picture, Audio and Data Coding by Artificial Intelligence – the international, non-profit, unaffiliated organisation developing AI-based data coding standards – has concluded its 55th General Assembly (MPAI-55) with the final release of the Connected Autonomous Vehicle and the MPAI Metaverse Model standards.

The MPAI Metaverse Model (MPAI-MMM) – Technologies (MMM-TEC) V2.0 standard specifies:

- The Functional Requirements of the Processes operating in a metaverse instance (M-Instance).

- The Items, i.e., the Data Types and their Qualifiers recognised in an M-Instance.

- The Process Actions that a Process can perform on Items.

- The Protocols enabling a Process to communicate with another Process.

- The MPAI Metaverse Model

- The MPAI-MMM API.

The availability of APIs enables the rapid development of M-Instances and of clients that interoperate with M-Instances conforming to the MMM-TEC V2.0 standard.

An online presentation of the MMM-TEC V2.0 standard will be held on 9 May at 15 UTC. Register at https://tinyurl.com/5a2d4ucv.

The Connected Autonomous Vehicle (MPAI-CAV) – Technologies (CAV-TEC) V1.0 standard specifies the Reference Model partitioning a CAV into subsystems and components. The standard Reference Model promotes CAV componentisation by enabling:

- Researchers to optimise component technologies.

- Component manufacturers to bring their standard-conforming components to an open market.

- Car manufacturers to access a global market of interchangeable components.

- Regulators to oversee conformance testing of components following standard procedures.

- Users to rely on Connected Autonomous Vehicles whose operation they can explain.

An online presentation of the CAV-TEC V1.0 standard will be held on 8 May at 15 UTC. Register at https://tinyurl.com/372739sa.

MPAI is continuing its work plan that involves the following activities:

- AI Framework (MPAI-AIF): building a community of MPAI-AIF-based implementers.

- AI for Health (MPAI-AIH): developing the specification of a system that enables clients to improve models processing health data and uses federated learning to share the training.

- Context-based Audio Enhancement (CAE-DC): developing the Audio Six Degrees of Freedom (CAE-6DF) standard.

- Connected Autonomous Vehicle (MPAI-CAV): investigating extensions of the current CAV-TEC standard.

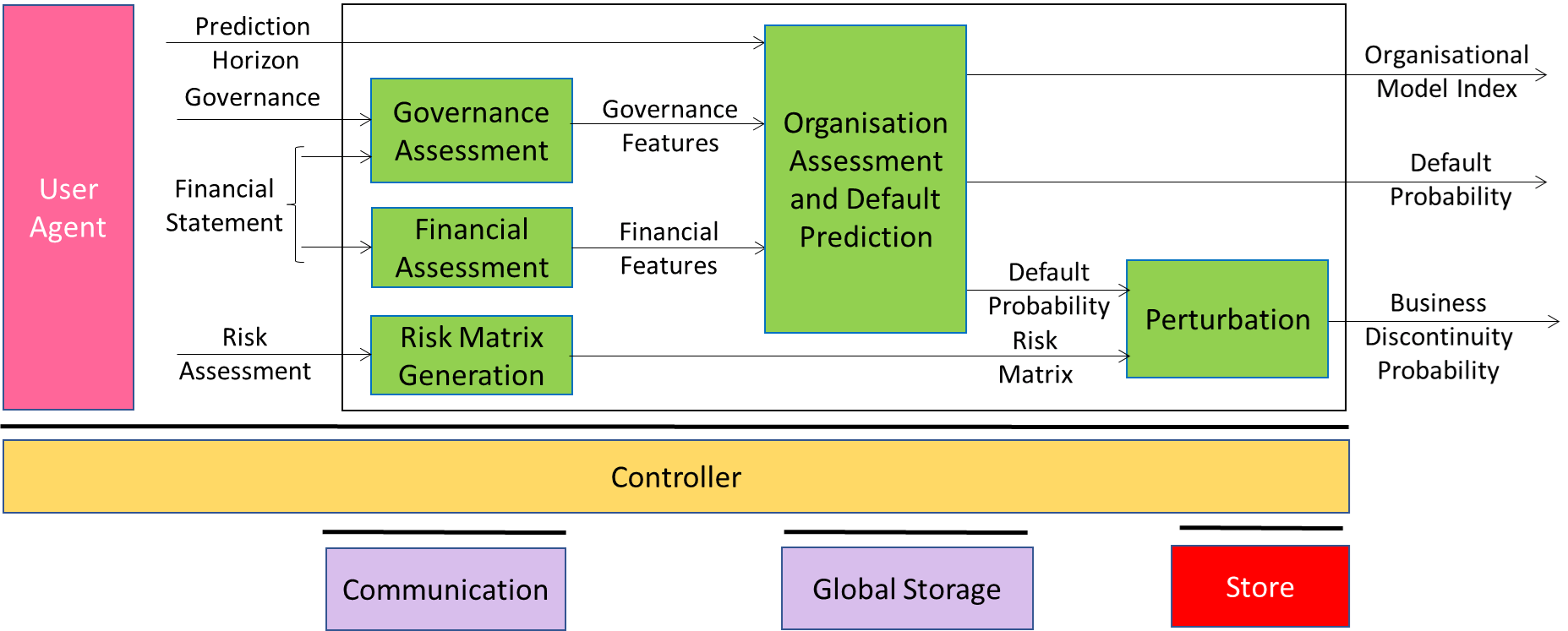

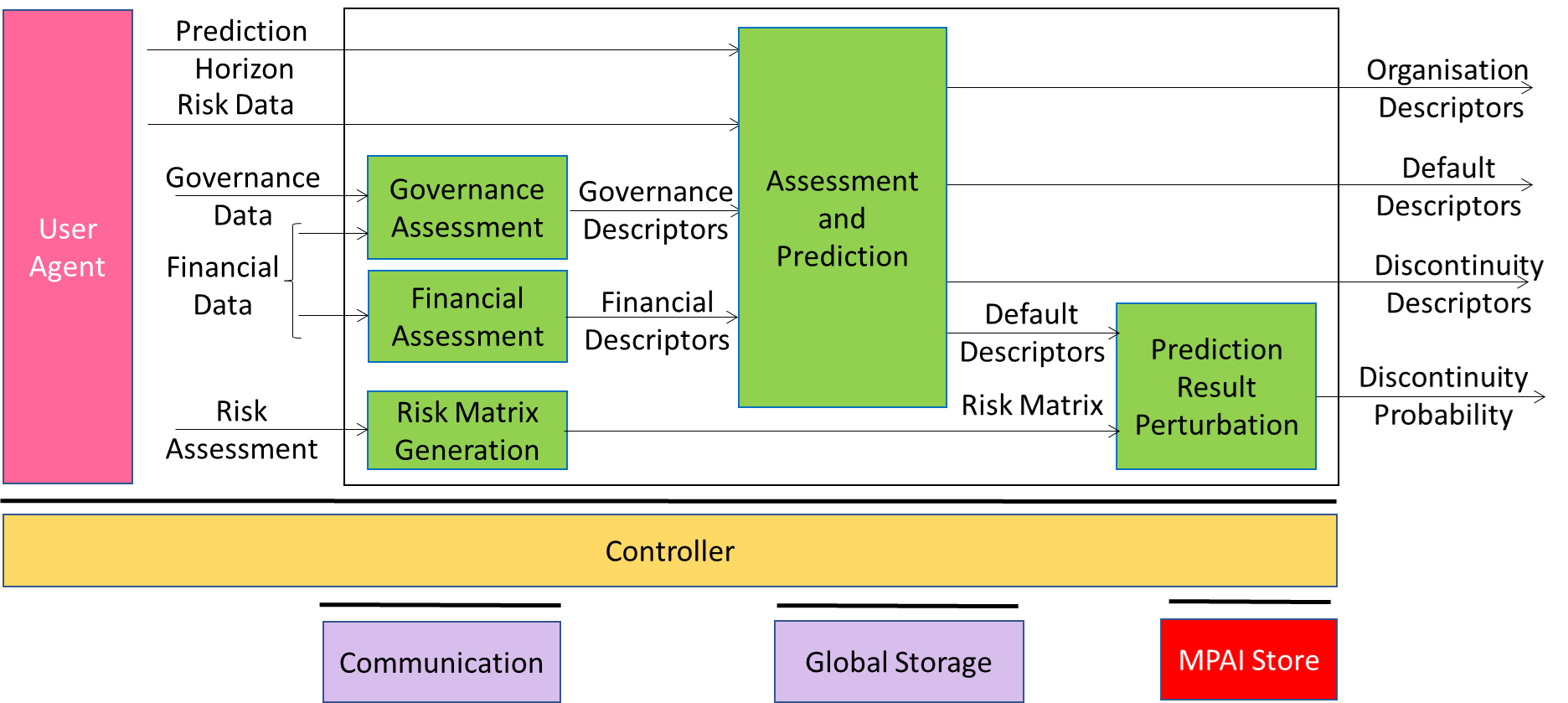

- Compression and Understanding of Industrial Data (MPAI-CUI): developing Company Performance Prediction standard V2.0.

- End-to-End Video Coding (MPAI-EEV): exploring the potential of video coding using AI-based End-to-End Video coding.

- AI-Enhanced Video Coding (MPAI-EVC): developing the Up-sampling Filter for Video applications (EVC-UFV) standard.

- Governance of the MPAI Ecosystem (MPAI-GME): working on version 2.0 of the Specification.

- Human and Machine Communication (MPAI-HMC): developing reference software and performance assessment.

- Multimodal Conversation (MPAI-MMC): developing technologies for more Natural-Language-based user interfaces capable of handling more complex questions.

- MPAI Metaverse Model (MPAI-MMM): extending the MMM-TEC specs to support more applications.

- Neural Network Watermarking (MPAI-NNW): studying the use of fingerprinting as a technology for neural network traceability.

- Object and Scene Description (MPAI-OSD): studying applications requiring more space-time handling.

- Portable Avatar Format (MPAI-PAF): studying more applications using digital humans needing new technologies.

- AI Module Profiles (MPAI-PRF): specifying which features an AI Workflow or AI Modules need to support.

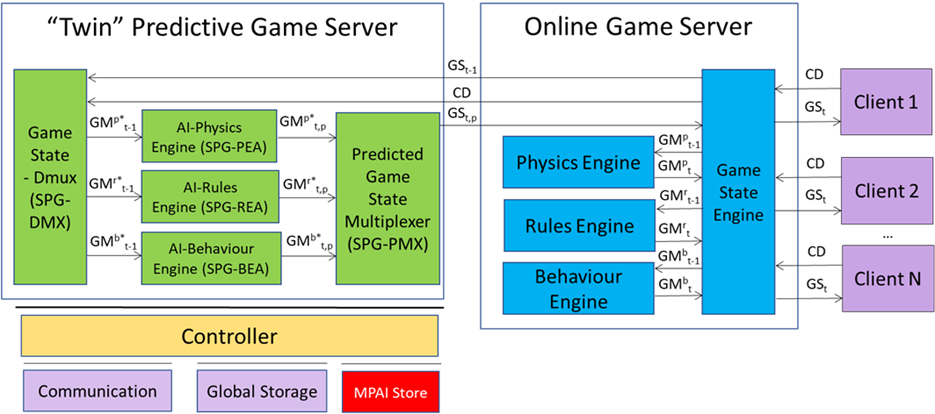

- Server-based Predictive Multiplayer Gaming (MPAI-SPG): exploring new standard opportunities in the domain.

- Data Types, Formats, and Attributes (MPAI-TFA): extending the standard to data types used by MPAI standards (e.g., automotive and health).

- XR Venues (MPAI-XRV): developing the standard for improved development and execution of Live Theatrical Performances and studying the prospects of Collaborative Immersive Laboratories.

Legal entities and representatives of academic departments supporting the MPAI mission and able to contribute to the development of standards for the efficient use of data can become MPAI members.

Please visit the MPAI website, contact the MPAI secretariat for specific information, subscribe to the MPAI Newsletter and follow MPAI on social media: LinkedIn, Twitter, Facebook, Instagram, and YouTube.

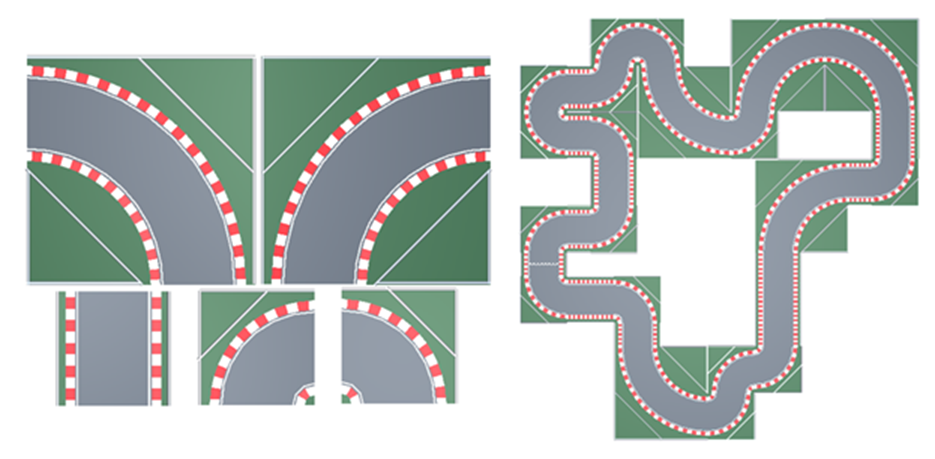

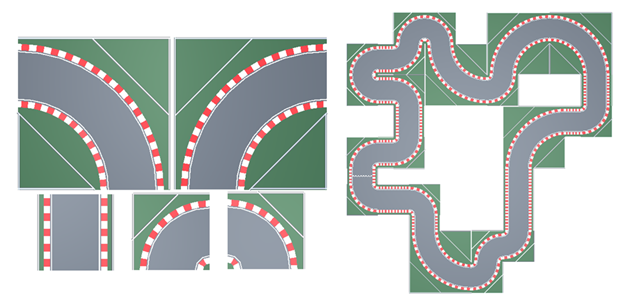

Figure 2 – Modular tiles and an example of a racetrack
