More members for the MPAI Standards Family

The latest MPAI General Assembly (MPAI-44), held on 15 May 2024, proved, if proof were ever needed, that MPAI produces not only standards but also promising new projects. MPAI-44 launched three new projects: 6 degrees of freedom audio (CAE-6DF), technologies for connected autonomous vehicle components (CAV-TEC), and technologies for the MPAI Metaverse Model (MMM-TEC).

The CAE-6DF Call seeks innovative technologies that enable users to walk in a virtual space representing a remote real space and enjoy the same experience as if they were in the remote space. Visit the CAE-6DF page and register to attend the online presentation of the Call on the 28th of May, communicate your intention to respond to the Call by the 4th of June, and submit your response by the 16th of September.

The CAV-TEC Call requests technologies that build on the Reference Architecture of the Connected Autonomous Vehicle (CAV-ARC) to achieve a componentisation of connected autonomous vehicles. Visit the CAV-TEC page and register to attend the online presentation of the Call on the 6th of June, communicate your intention to respond to the Call by the 14th of June, and submit your response by the 5th of July.

The MMM-TEC Call requests technologies that build on the Reference Architecture of the MPAI Metaverse Model (MMM-ARC) and enable a metaverse instance to interoperate with other metaverse instances. Visit the MMM-TEC page and register to attend the online presentation of the Call on the 31st of May, communicate your intention to respond to the Call by the 7th of June, and submit your response by the 6th of July.

MPAI-44 has brought more results.

Four existing standards have been republished as new versions for Community Comments. The new material significantly extends their current functionalities and supports the needs of the three new projects:

- Object and Scene Description (MPAI-OSD) V1.1 adds a new Use Case for automatic audio-visual analysis of television programs and new functionalities for Visual and Audio-Visual Objects and Scenes.

- The Use Cases of Context-based Audio Experience (MPAI-CAE) V2.2 standard (CAE-USC) supports new functionalities for Audio Object and Scene Descriptors.

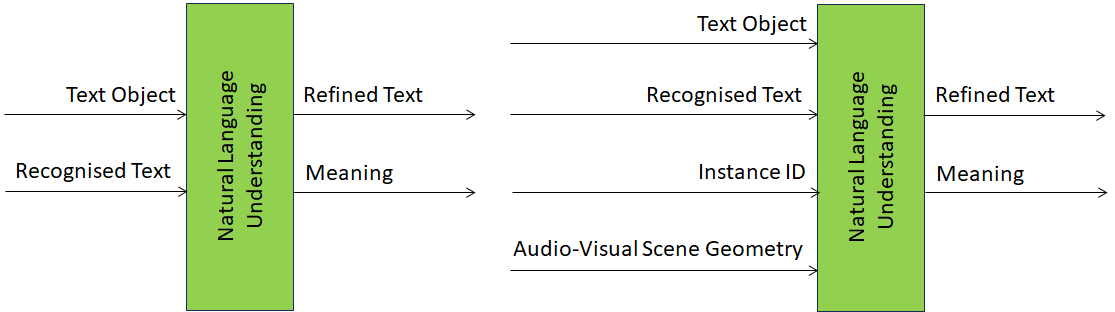

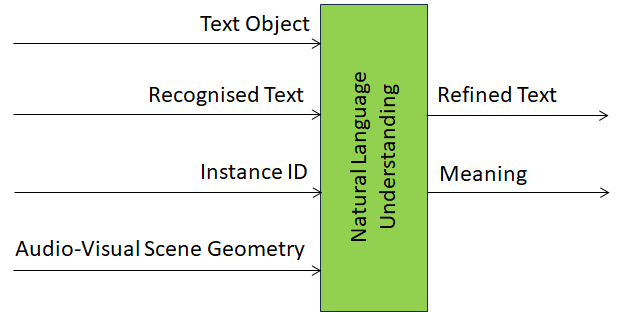

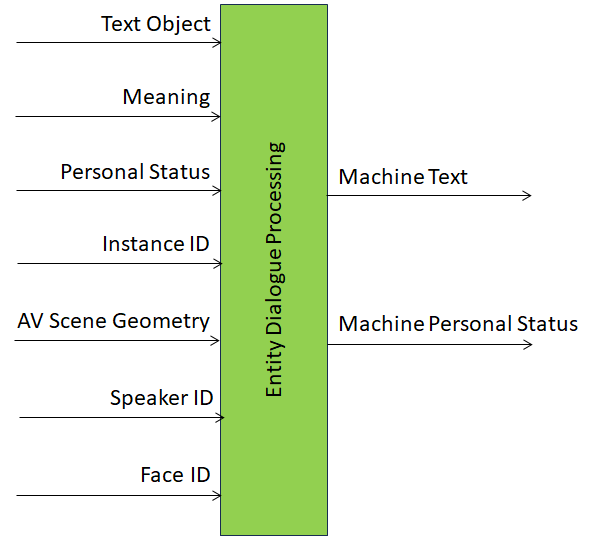

- The Multimodal Conversation (MPAI-MMC) V2.2 standard introduces new AI Modules and new Data Formats to support the new MPAI-OSD Television Media Analysis Use Case.

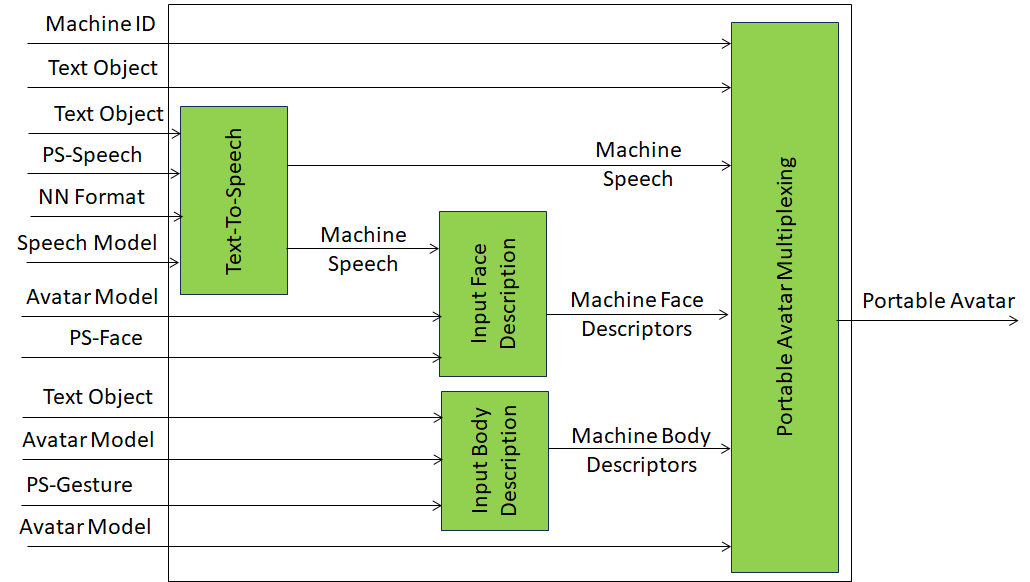

- The Portable Avatar Format (MPAI-PAF) V1.2 standard extends the specification of the Portable Avatar to support new functionality requested by the CAV-TEC and MMM-TEC Calls.

Everybody is welcome to review the draft standards (new versions of existing standards) and send comments to the MPAI Secretariat by 23:59 UTC of the relevant day. Comments will be considered when the standards are published in final form.

The standards mentioned above cover a significant share of the MPAI portfolio, but your navigation need not stop here. If you wish to delve into the other MPAI standards, you can go to their web pages, where you can read overviews and find many links to relevant material. Each standard's page contains a web version of the standard and other support material such as PowerPoint presentations and video recordings.